Cost tracking

Opik tracks and monitors the cost of your LLM applications by measuring token usage across all traces. In the Opik dashboard, you can analyze spending patterns and quickly identify cost anomalies. All costs in Opik are estimates and are displayed in USD.

Monitoring Costs in the Dashboard

You can use the Opik dashboard to review costs at three levels: spans, traces, and projects. Each level provides different insights into your application’s cost structure.

Span-Level Costs

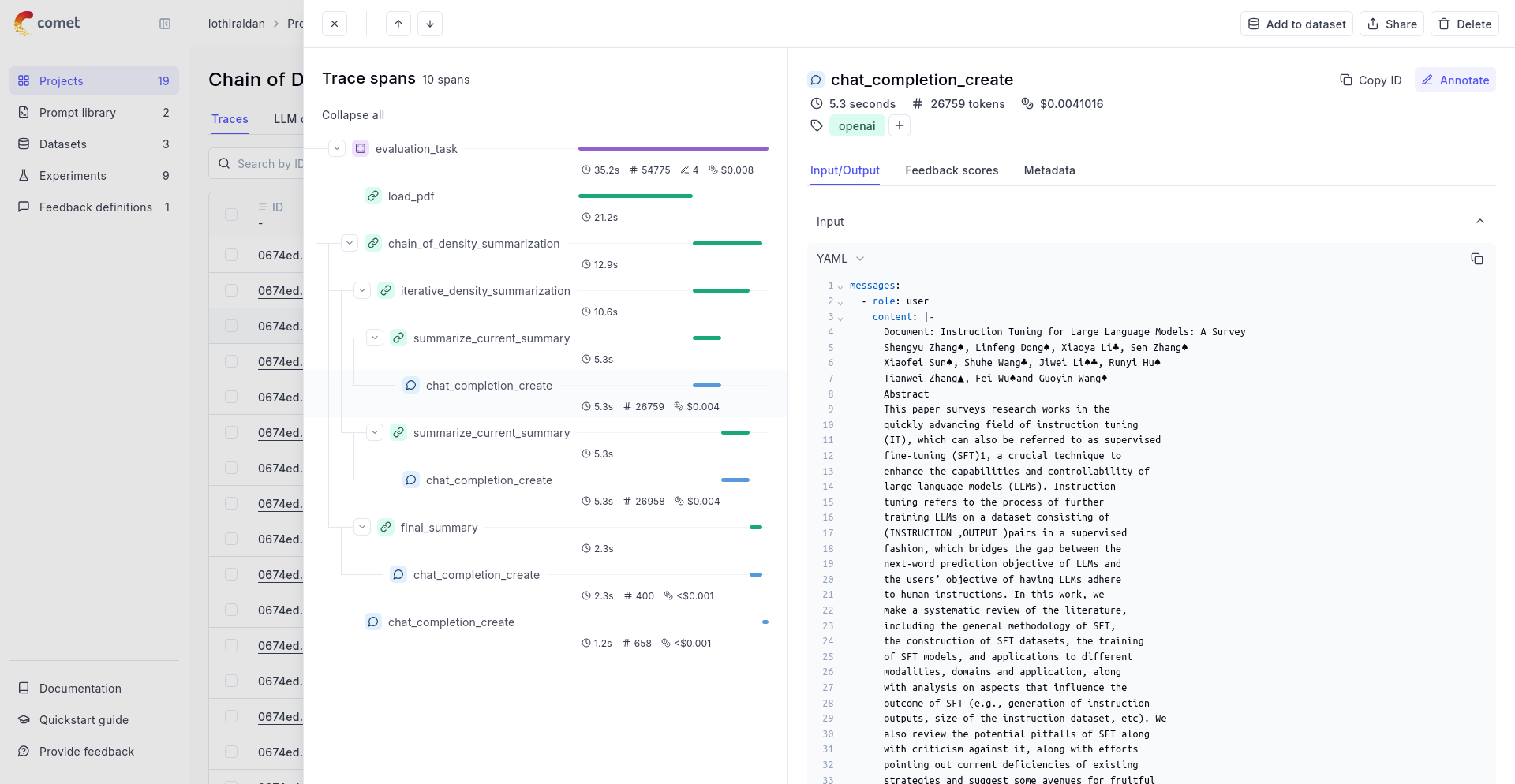

Individual spans show the computed costs (in USD) for each LLM spans of your traces:

Trace-Level Costs

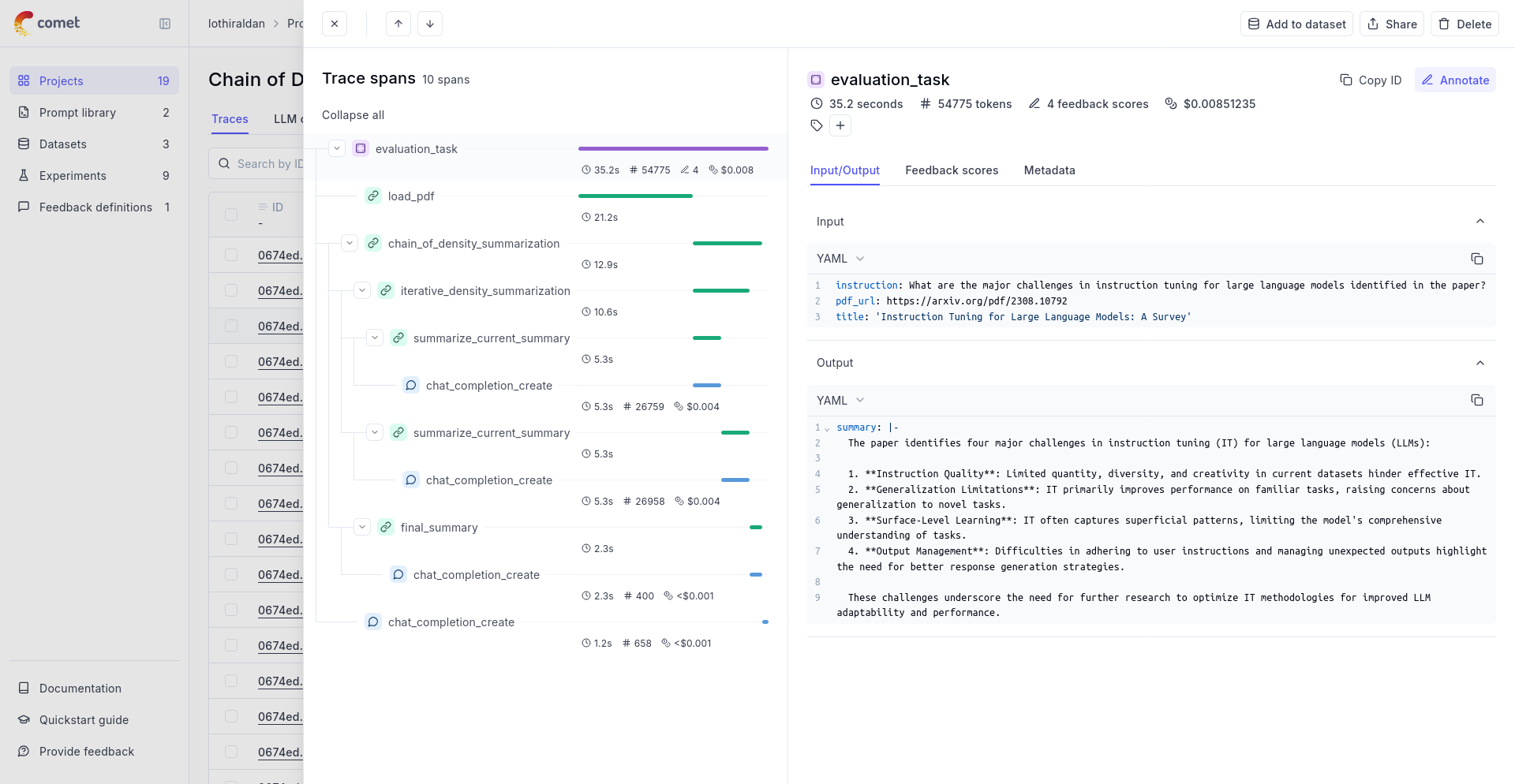

If you are using one of Opik’s integrations, we automatically aggregates costs from all spans within a trace to compute total trace costs:

Project-Level Analytics

Track your overall project costs in:

-

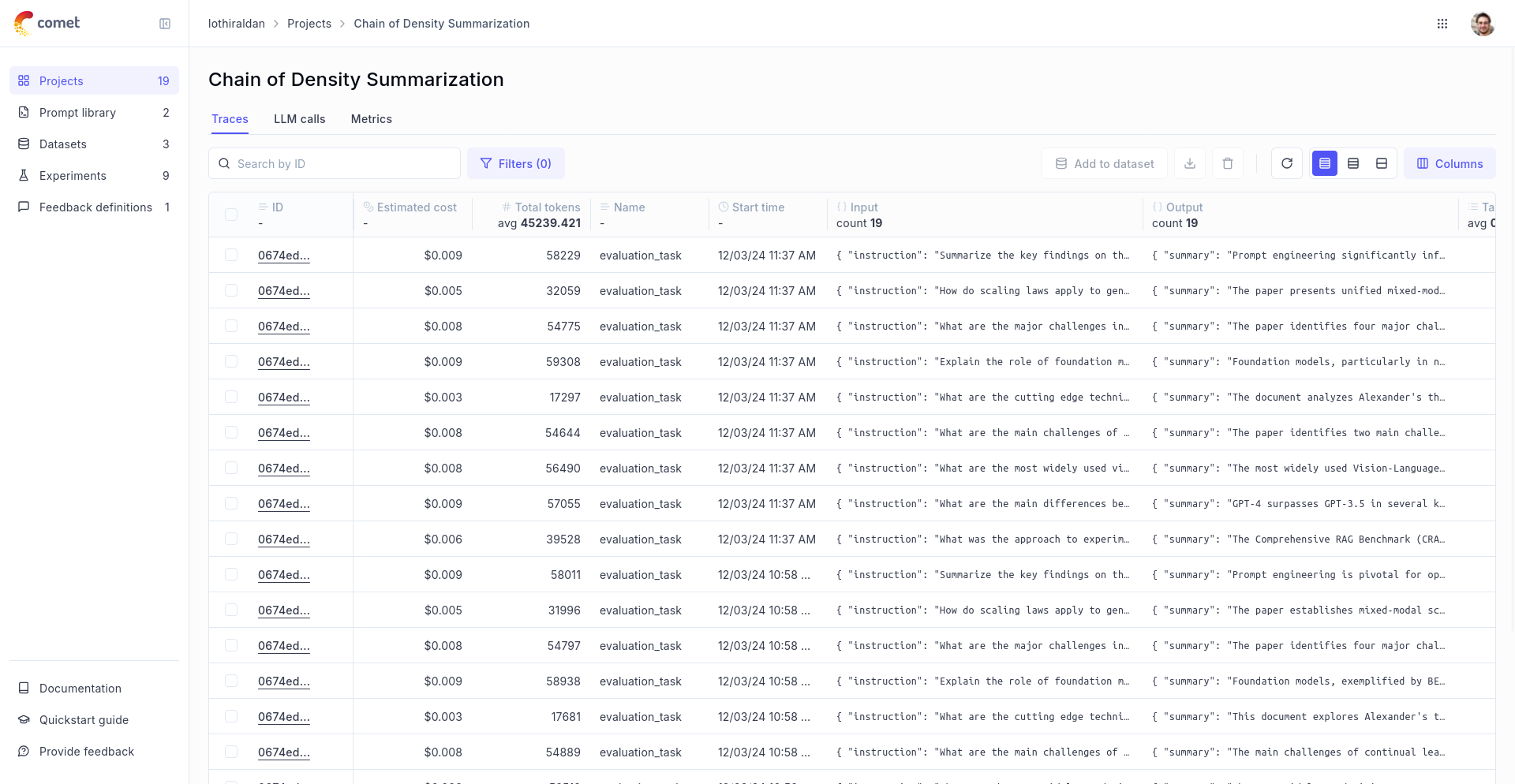

The main project view, through the Estimated Cost column:

-

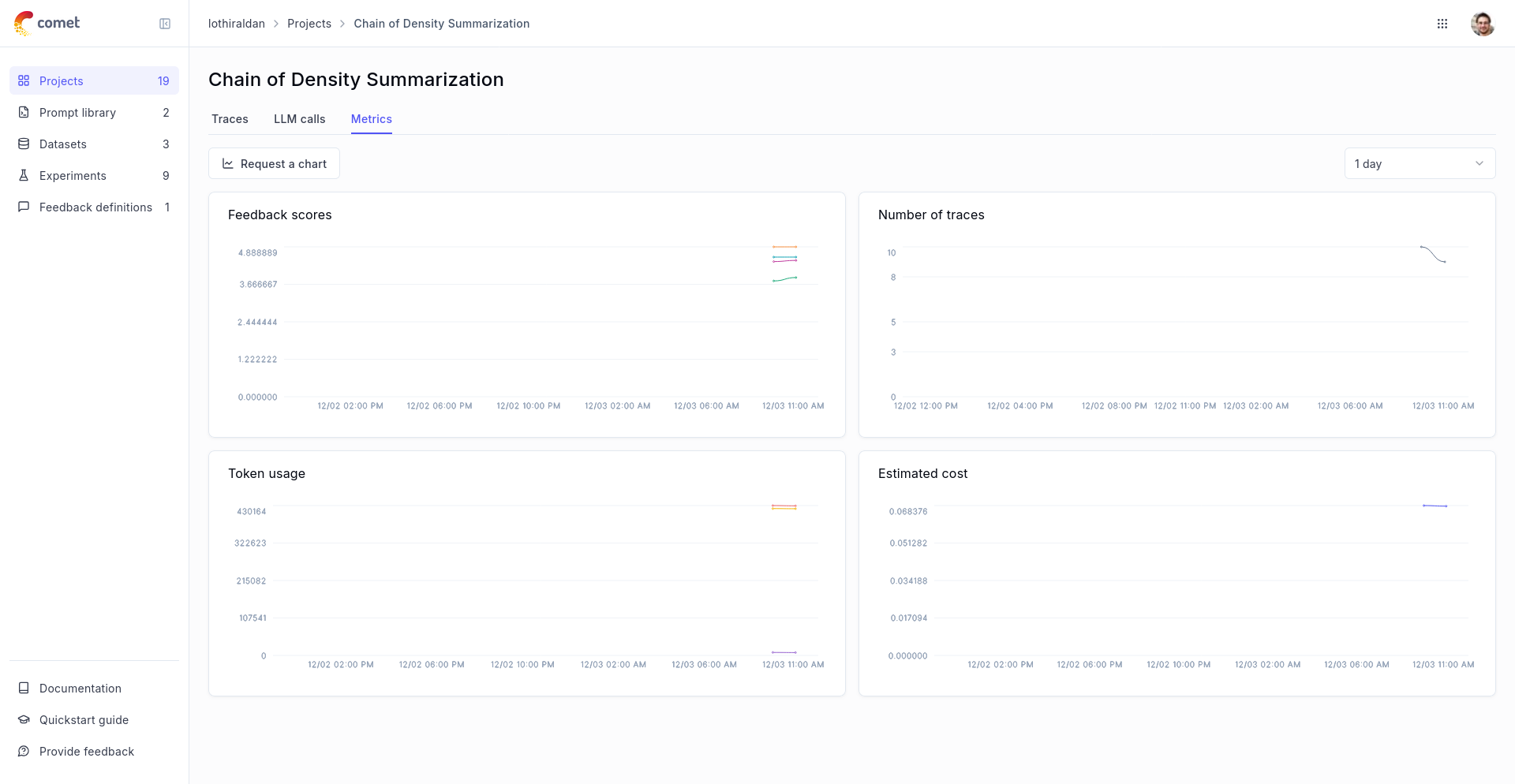

The project Metrics tab, which shows cost trends over time:

Retrieving Costs Programmatically

You can retrieve the estimated cost programmatically for both spans and traces. Note that the cost will be None if the span or trace used an unsupported model. See Exporting Traces and Spans for more ways of exporting traces and spans.

Retrieving Span Costs
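A minimal sketch of reading the estimated cost of a single span. It assumes the Python SDK client exposes a get_span_content method and that the returned object has a total_estimated_cost attribute; the helper names format_cost and span_cost_report and the "<SPAN_ID>" placeholder are illustrative and not part of Opik.

```python
from typing import Optional


def format_cost(cost: Optional[float]) -> str:
    """Render an estimated cost in USD, handling the unsupported-model case."""
    if cost is None:
        return "cost unavailable (unsupported model)"
    return f"${cost:.6f}"


def span_cost_report(client, span_id: str) -> str:
    """Fetch one span and describe its estimated cost."""
    # Assumption: get_span_content returns an object with total_estimated_cost.
    span = client.get_span_content(span_id)
    return format_cost(span.total_estimated_cost)


def main() -> None:
    # Imported here so the helpers above can be reused without the SDK installed.
    import opik

    client = opik.Opik()
    print(span_cost_report(client, "<SPAN_ID>"))
```

Calling main() requires the opik package and a configured Opik backend; the helpers only rely on the client interface described above.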

Retrieving Trace Costs
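The same idea can be sketched for traces. The example below sums the estimated cost over several traces and counts those with no cost, assuming the client exposes get_trace_content and that the returned object has a total_estimated_cost attribute; total_trace_cost and the placeholder trace IDs are hypothetical names used for illustration.

```python
from typing import Iterable, Tuple


def total_trace_cost(client, trace_ids: Iterable[str]) -> Tuple[float, int]:
    """Sum estimated costs (USD) over traces and count traces without a cost."""
    total = 0.0
    missing = 0
    for trace_id in trace_ids:
        # Assumption: get_trace_content returns an object with total_estimated_cost.
        trace = client.get_trace_content(trace_id)
        if trace.total_estimated_cost is None:
            missing += 1  # e.g. the trace used an unsupported model
        else:
            total += trace.total_estimated_cost
    return total, missing


def main() -> None:
    # Imported here so the helper above can be reused without the SDK installed.
    import opik

    client = opik.Opik()
    total, missing = total_trace_cost(client, ["<TRACE_ID_1>", "<TRACE_ID_2>"])
    print(f"total: ${total:.6f}, traces without cost: {missing}")
```

Reporting the number of traces without a cost separately avoids silently treating an unsupported model as free.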

Manually setting the provider and model name

If you are not using one of Opik’s integrations, Opik can still compute the cost. For this, you need to ensure the span type is llm and pass:

- provider: The name of the provider, typically openai, anthropic or google_ai (the most recent list of providers can be found in the opik.LLMProvider enum)

- model: The name of the model

- usage: The input, output and total tokens for this LLM call.

You can then update your code to log traces and spans:

This works with both function decorators and the low-level Python SDK. If you are using function decorators, you will need to use the update_current_span method:
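A minimal sketch of the decorator approach, assuming the track decorator accepts type="llm" and that opik_context.update_current_span accepts provider, model and usage keyword arguments. The OpenAI-style usage keys (prompt_tokens, completion_tokens, total_tokens), the model name, and the helper names build_usage and make_tracked_llm_call are assumptions made for illustration.

```python
from typing import Callable, Dict


def build_usage(prompt_tokens: int, completion_tokens: int) -> Dict[str, int]:
    """Build the token-usage dictionary for one LLM call."""
    return {
        "prompt_tokens": prompt_tokens,
        "completion_tokens": completion_tokens,
        "total_tokens": prompt_tokens + completion_tokens,
    }


def make_tracked_llm_call() -> Callable[[str], str]:
    """Create a tracked function; opik is imported lazily inside this factory."""
    from opik import opik_context, track

    @track(type="llm")  # the span type must be "llm" for cost computation
    def llm_call(prompt: str) -> str:
        response = "Hello, world!"  # placeholder for a real provider call
        # Attach provider, model and token usage to the current span.
        opik_context.update_current_span(
            provider="openai",
            model="gpt-3.5-turbo",
            usage=build_usage(prompt_tokens=4, completion_tokens=6),
        )
        return response

    return llm_call
```

In a real application the token counts would come from the provider's response rather than being hard-coded.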

Manually Setting Span Costs

When you need to set a custom cost or use an unsupported model, you can manually set the cost of a span. There are two approaches depending on your use case:

Setting Costs During Span Creation

If you’re manually creating spans, you can set the cost directly when creating the span:
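A minimal sketch using the low-level Python SDK, assuming client.trace returns a trace object whose span method accepts a total_cost keyword argument (in USD). The function name log_llm_span_with_cost, the span and trace names, and the 0.05 value are illustrative.

```python
def log_llm_span_with_cost(client, total_cost: float) -> None:
    """Create a trace with one LLM span whose cost is set explicitly (in USD)."""
    trace = client.trace(name="custom_trace")
    span = trace.span(
        name="custom_llm_call",
        type="llm",
        total_cost=total_cost,  # manual cost instead of an automatic estimate
    )
    span.end()
    trace.end()


def main() -> None:
    # Imported here so the helper above can be reused without the SDK installed.
    import opik

    client = opik.Opik()
    log_llm_span_with_cost(client, total_cost=0.05)
    client.flush()  # make sure the logged data is sent before exiting
```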

Updating Costs After Span Completion

With Opik integrations, spans are automatically created and closed, preventing updates while they’re open. However, you can update the cost afterward using the update_span method. This works well for implementing periodic cost estimation jobs:
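A minimal sketch of such a job. It assumes the client exposes search_spans(project_name=...) and that update_span accepts the id, trace_id, parent_span_id, project_name and total_cost keyword arguments; verify these against the SDK version you use. The backfill_span_costs function and the estimate_cost callback are hypothetical and stand in for your own pricing logic.

```python
from typing import Callable, Optional


def backfill_span_costs(
    client,
    project_name: str,
    estimate_cost: Callable[[object], Optional[float]],
) -> int:
    """Set a cost on spans that have none; return how many spans were updated."""
    updated = 0
    for span in client.search_spans(project_name=project_name):
        if span.total_estimated_cost is not None:
            continue  # this span already has a cost
        cost = estimate_cost(span)
        if cost is None:
            continue  # the estimator could not price this span
        # Assumption: update_span identifies the span by these fields.
        client.update_span(
            id=span.id,
            trace_id=span.trace_id,
            parent_span_id=span.parent_span_id,
            project_name=project_name,
            total_cost=cost,
        )
        updated += 1
    return updated
```

Passing the estimator as a callback keeps the pricing rules separate from the code that talks to Opik.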

This approach is particularly useful when:

- You use models or providers not yet supported by automatic cost tracking

- You have a custom pricing agreement with your provider

- You want to track additional costs beyond model usage

- You need to implement cost estimation as a background process

- You work with integrations where spans are automatically managed

You can run the cost update function as a CRON job to automatically update costs for spans created without cost information. This is especially valuable in production environments where accurate cost data for all spans is essential.

Supported Models, Providers, and Integrations

Opik currently calculates costs automatically for all LLM calls in the following Python SDK integrations:

- Google ADK Integration

- AWS Bedrock Integration

- LangChain Integration

- OpenAI Integration

- LiteLLM Integration

- Anthropic Integration

- CrewAI Integration

- Google AI Integration

- Haystack Integration

- LlamaIndex Integration

Supported Providers

Cost tracking is supported for the following LLM providers (as defined in the opik.LLMProvider enum):

- OpenAI (openai) - Models hosted by OpenAI (https://platform.openai.com)

- Anthropic (anthropic) - Models hosted by Anthropic (https://www.anthropic.com)

- Anthropic on Vertex AI (anthropic_vertexai) - Anthropic models hosted by Google Vertex AI

- Google AI (google_ai) - Gemini models hosted in Google AI Studio (https://ai.google.dev/aistudio)

- Google Vertex AI (google_vertexai) - Gemini models hosted in Google Vertex AI (https://cloud.google.com/vertex-ai)

- AWS Bedrock (bedrock) - Models hosted by AWS Bedrock (https://aws.amazon.com/bedrock)

- Groq (groq) - Models hosted by Groq (https://groq.com)

You can find a complete list of supported models for these providers in the model_prices_and_context_window.json file.
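To illustrate how a token-based estimate can be derived from such a price list, here is a small sketch. It assumes each model entry carries per-token prices under the keys input_cost_per_token and output_cost_per_token; the model name and prices below are made up for the example, and this is not necessarily how Opik computes costs internally.

```python
import json
from typing import Optional

# Illustrative data only: "example-model" and its prices are invented.
SAMPLE_PRICES_JSON = """
{
  "example-model": {
    "input_cost_per_token": 0.000001,
    "output_cost_per_token": 0.000002
  }
}
"""


def estimate_cost_usd(
    prices: dict, model: str, prompt_tokens: int, completion_tokens: int
) -> Optional[float]:
    """Estimate the cost of one call in USD, or None if the model is unknown."""
    entry = prices.get(model)
    if entry is None:
        return None  # mirrors the "unsupported model" case
    return (
        prompt_tokens * entry["input_cost_per_token"]
        + completion_tokens * entry["output_cost_per_token"]
    )


prices = json.loads(SAMPLE_PRICES_JSON)
cost = estimate_cost_usd(prices, "example-model", prompt_tokens=1000, completion_tokens=500)
print(f"estimated cost: ${cost:.6f}")
```

Returning None for an unknown model, rather than 0, keeps missing prices distinguishable from calls that cost nothing.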

We are actively expanding our cost tracking support. Need support for additional models or providers? Please open a feature request to help us prioritize development.