Quickstart

This guide shows you how to integrate Opik with your existing LLM application and log your first LLM calls and chains to the Opik platform.

Prerequisites

Before you begin, you’ll need to choose how you want to use Opik:

- Opik Cloud: Create a free account at comet.com/opik

- Self-hosting: Follow the self-hosting guide to deploy Opik locally or on Kubernetes

Logging your first LLM calls

Opik integrates easily with your existing LLM application. Here are some of our most popular integrations:

- Python SDK

- TypeScript SDK

- OpenAI (Python)

- OpenAI (TS)

- AI Vercel SDK

- Ollama

- ADK

- LangGraph

- AI Wizard

- All integrations

If you are using the Python function decorator, you can integrate by installing the Opik SDK and adding the `@track` decorator to the functions you want to trace.
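A minimal sketch of the decorator approach, assuming the Opik Python SDK is installed with `pip install opik` and has been configured (for example by running `opik configure` once). The function names `retrieve_context` and `answer_question` and their bodies are hypothetical placeholders for your own application code, and the `ImportError` fallback is there only so the sketch still runs when the SDK is not installed.

```python
# Assumption: the Opik Python SDK is installed and configured.
try:
    from opik import track
except ImportError:
    # Fallback so this sketch runs without the SDK: a no-op decorator
    # that logs nothing.
    def track(func):
        return func


@track
def retrieve_context(question: str) -> list[str]:
    # Hypothetical retrieval step; replace with your own lookup.
    return ["Opik logs LLM calls and chains."]


@track
def answer_question(question: str) -> str:
    # Calling one tracked function from another is expected to nest
    # the inner call under the outer one in the same trace.
    context = retrieve_context(question)
    # Hypothetical stand-in for a real LLM call.
    return f"Based on {len(context)} document(s): {context[0]}"


if __name__ == "__main__":
    print(answer_question("What is Opik?"))
```

Decorating the outermost function of a request is usually enough to get a first trace. Decorating the helpers it calls adds finer-grained steps to that trace.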

Analyze your traces

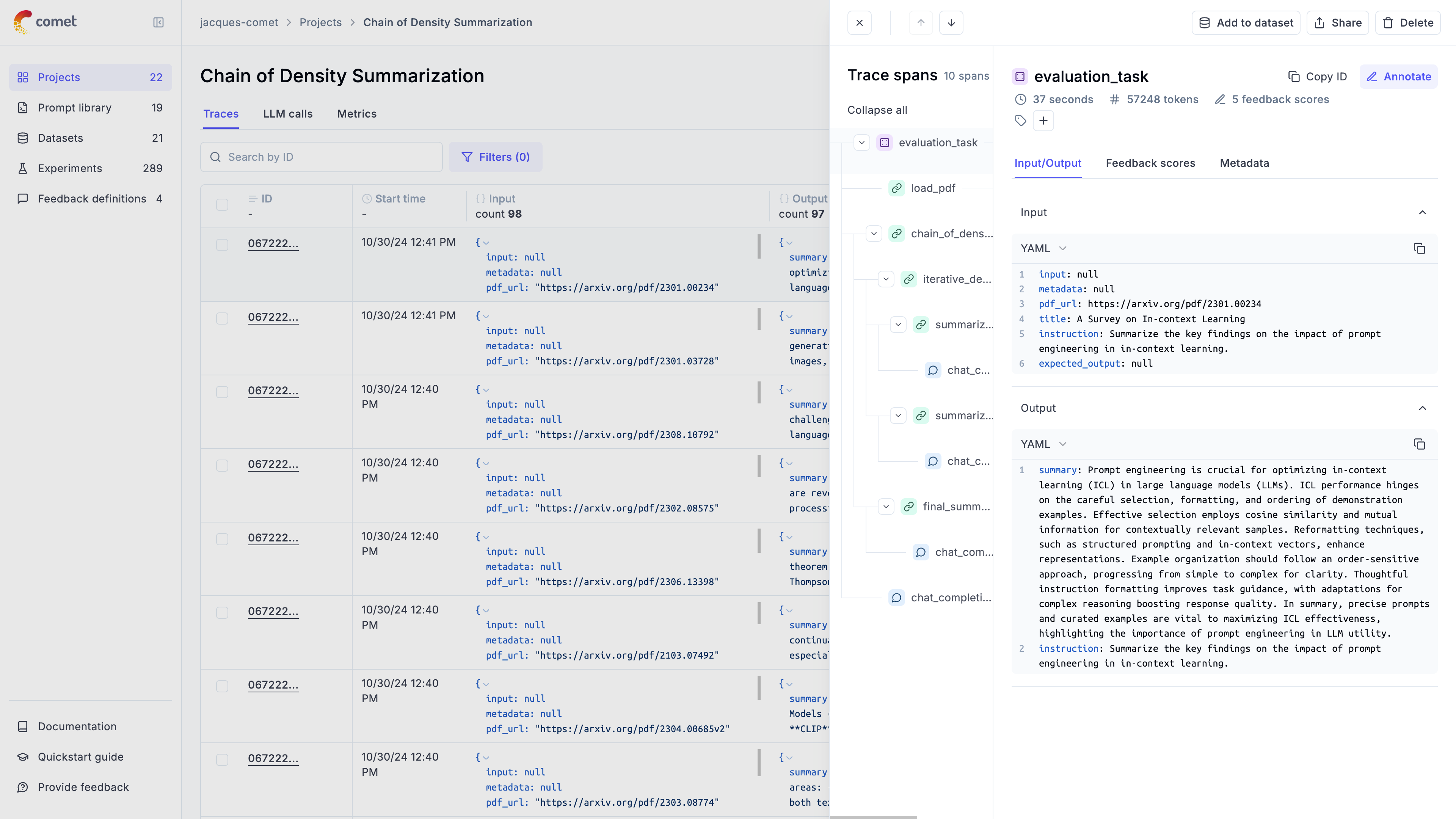

After running your application, you will start seeing your traces in your Opik dashboard:

If you don’t see traces appearing, reach out to us on Slack or raise an issue on GitHub and we’ll help you troubleshoot.

Next steps

Now that you have logged your first LLM calls and chains to Opik, why not check out:

- In-depth guide on agent observability: Learn how to customize the data that is logged to Opik and how to log conversations.

- Opik Experiments: Opik allows you to automate the evaluation of your LLM application so that you no longer need to manually review every LLM response.

- Opik’s evaluation metrics: Opik provides a suite of evaluation metrics (Hallucination, Answer Relevance, Context Recall, etc.) that you can use to score your LLM responses.