Gateway

Describes how to use the Opik LLM gateway and how to integrate with the Kong AI Gateway

An LLM gateway is a proxy server that forwards requests to an LLM API and returns the response. This is useful when you want to centralize access to LLM providers, or when you want to query multiple LLM providers from a single endpoint using a consistent request and response format.
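To make the single-endpoint idea concrete, here is a minimal sketch of the routing step at the core of a gateway. One OpenAI-style request comes in, and the gateway picks the upstream provider from the model name. The `provider/model` naming convention, the routing table, and the `provider-a.example` hosts are hypothetical and only illustrate the concept. This is not how the Opik or Kong gateways are implemented.

```python
from dataclasses import dataclass

# Hypothetical routing table mapping a provider prefix to an upstream base URL.
# The hosts are placeholders, not real provider endpoints.
UPSTREAMS = {
    "provider-a": "https://provider-a.example/v1",
    "provider-b": "https://provider-b.example/v1",
}


@dataclass
class ForwardedRequest:
    url: str
    body: dict


def route(request: dict) -> ForwardedRequest:
    """Choose an upstream from a 'provider/model' name and build the forwarded request.

    Clients always send the same OpenAI-style body to one endpoint; only the
    model name decides which provider receives it. A real gateway would also
    translate the body into each provider's native format where needed.
    """
    provider, _, model = request["model"].partition("/")
    if provider not in UPSTREAMS or not model:
        raise ValueError(f"unknown model: {request['model']!r}")
    # Strip the provider prefix so the upstream sees its own model name.
    body = {**request, "model": model}
    return ForwardedRequest(url=f"{UPSTREAMS[provider]}/chat/completions", body=body)


fwd = route({"model": "provider-a/small-model", "messages": [{"role": "user", "content": "Hi"}]})
print(fwd.url)  # https://provider-a.example/v1/chat/completions
```

Because every client uses the same request shape, adding a provider only means adding a routing entry on the gateway, not changing client code.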

The Opik platform includes a lightweight LLM gateway intended for development and testing. If you need a production-ready LLM gateway, we recommend the Kong AI Gateway.

The Opik LLM Gateway

The Opik LLM gateway is a lightweight proxy server that can be used to query different LLM APIs using the OpenAI request format.

To use the Opik LLM gateway, first configure your LLM provider credentials in the Opik UI. Once this is done, you can send requests to your LLM provider through the Opik gateway.

The gateway can be used with both Opik Cloud and self-hosted Opik deployments.
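The following is a minimal sketch of building an OpenAI-format chat completion request for the Opik gateway, using only the Python standard library. It builds the request without sending it. The endpoint path (`/api/v1/private/chat/completions`), the `authorization` and `Comet-Workspace` header names, and the example base URLs are assumptions; confirm them in the Opik documentation for your deployment. The function name `build_gateway_request` is hypothetical.

```python
import json
import urllib.request
from typing import Optional

# ASSUMPTION: endpoint path and header names are illustrative;
# confirm them against the Opik documentation for your deployment.
GATEWAY_PATH = "/api/v1/private/chat/completions"


def build_gateway_request(
    base_url: str,
    model: str,
    prompt: str,
    api_key: Optional[str] = None,
    workspace: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    # Opik Cloud requires authentication; a local self-hosted instance may not.
    if api_key is not None:
        headers["authorization"] = api_key
    if workspace is not None:
        headers["Comet-Workspace"] = workspace
    return urllib.request.Request(
        url=base_url.rstrip("/") + GATEWAY_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Hypothetical self-hosted base URL; the model must belong to a provider
# whose credentials are configured in the Opik UI.
req = build_gateway_request("http://localhost:5173", "gpt-4o-mini", "Hello!")
# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp))
```

Only the base URL and the optional credentials differ between a Cloud and a self-hosted setup in this sketch; the request body stays in the same OpenAI format either way.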

The Opik LLM gateway is currently in beta and is subject to change. We recommend using the Kong AI Gateway for production applications.

Kong AI Gateway

Kong is a popular open-source API gateway that has recently released an AI Gateway. If you are looking for a production-ready LLM gateway that supports common enterprise requirements (authentication mechanisms, load balancing, caching, etc.), this is the gateway we recommend.

You can learn more about the Kong AI Gateway in the Kong documentation.

We have developed a Kong plugin that allows you to log all the LLM calls from your Kong server to the Opik platform. The plugin is open source and available at comet-ml/opik-kong-plugin.

Once the plugin is installed, you need to enable it on your Kong server.
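As a minimal sketch, assuming the plugin is enabled per service through Kong's Admin API (`POST /services/{service}/plugins`, served on port 8001 by default), the request could be built as below. The plugin name `opik-log`, the `opik_api_key` and `opik_workspace` config fields, and the service name `my-llm-service` are assumptions for illustration; the opik-kong-plugin repository documents the actual values.

```python
import json
import urllib.parse
import urllib.request

# Kong's Admin API listens on port 8001 by default.
KONG_ADMIN_URL = "http://localhost:8001"


def build_enable_plugin_request(service: str, api_key: str, workspace: str) -> urllib.request.Request:
    """Build (but do not send) a Kong Admin API call that enables a plugin on a service."""
    payload = {
        # ASSUMPTION: plugin name and config keys; check the opik-kong-plugin README.
        "name": "opik-log",
        "config": {
            "opik_api_key": api_key,
            "opik_workspace": workspace,
        },
    }
    # Quote the service name or ID so it is safe to embed in the URL path.
    service_path = urllib.parse.quote(service, safe="")
    return urllib.request.Request(
        url=f"{KONG_ADMIN_URL}/services/{service_path}/plugins",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "my-llm-service" is a hypothetical Kong service that proxies your LLM traffic.
req = build_enable_plugin_request("my-llm-service", "<OPIK_API_KEY>", "<OPIK_WORKSPACE>")
# To actually send it:
# with urllib.request.urlopen(req) as resp:
#     print(resp.status)
```

Enabling the plugin on a single service keeps logging scoped to the routes that carry LLM traffic; Kong also allows plugins to be enabled globally or per route.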

You can find more information about the Opik Kong plugin in the opik-kong-plugin repository.

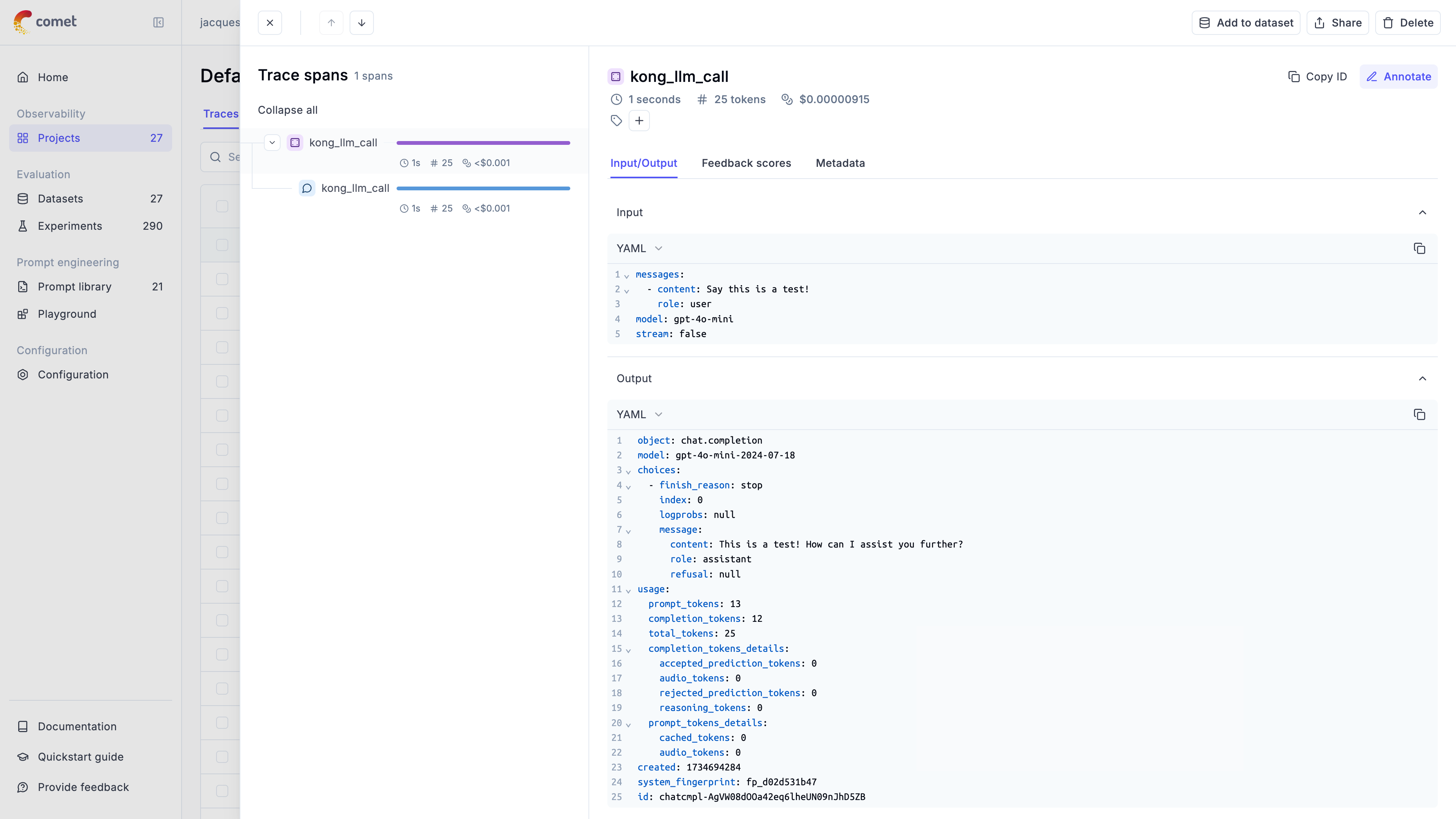

Once configured, you will be able to view all your LLM calls in the Opik dashboard: