Evaluate your LLM application

Step-by-step guide on how to evaluate your LLM application

Evaluating your LLM application gives you confidence in its performance. This guide walks through the process of evaluating complex applications such as LLM chains or agents.

If you only need to evaluate a single prompt, refer to the Evaluate A Prompt guide.

The evaluation is done in five steps:

- Add tracing to your LLM application

- Define the evaluation task

- Choose the Dataset that you would like to evaluate your application on

- Choose the metrics that you would like to evaluate your application with

- Create and run the evaluation experiment

1. Add tracking to your LLM application

While not required, we recommend adding tracking to your LLM application. This allows you to have full visibility into each evaluation run. In the example below we will use a combination of the track decorator and the track_openai function to trace the LLM application.
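A minimal sketch of this pattern, assuming the track decorator can be imported from the opik package. The function names and the canned model reply are hypothetical, and the fallback decorator exists only so the snippet runs where the SDK is not installed.

```python
try:
    from opik import track
except ImportError:
    # Assumption: without the SDK, fall back to a decorator that does nothing,
    # so the sketch still runs.
    def track(func):
        return func


def call_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call.

    With the OpenAI SDK you would instead wrap the client with track_openai
    (from opik.integrations.openai) so that its calls are traced as well.
    """
    return f"Echo: {prompt}"


@track
def retrieve_context(question: str) -> list[str]:
    # Hypothetical retrieval step; it is recorded as a nested step of the trace.
    return ["Paris is the capital of France."]


@track
def generate_answer(question: str, context: list[str]) -> str:
    prompt = f"Context: {' '.join(context)}\nQuestion: {question}"
    return call_model(prompt)


@track
def llm_application(question: str) -> str:
    # Top-level entry point: one trace per call, with both steps nested inside.
    context = retrieve_context(question)
    return generate_answer(question, context)
```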

Here we have added the track decorator so that this trace and all its nested steps are logged to the platform for further analysis.

2. Define the evaluation task

Once you have added instrumentation to your LLM application, you can define the evaluation task. The evaluation task takes a dataset item as input and must return a dictionary whose keys match the parameters expected by the metrics you are using. In this example we can define the evaluation task as follows:
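A minimal sketch of such a task. The dataset field user_question, the returned keys input and output, and the stand-in application are all assumptions made for illustration; use the names your own dataset and metrics expect.

```python
def llm_application(question: str) -> str:
    """Hypothetical stand-in for the application under test."""
    return f"Echo: {question}"


def evaluation_task(dataset_item: dict) -> dict:
    # Read the field this (hypothetical) dataset stores for each test case.
    question = dataset_item["user_question"]
    # The returned keys must line up with the metric parameters; "input" and
    # "output" are assumed names here.
    return {
        "input": question,
        "output": llm_application(question),
    }
```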

If the returned dictionary does not match the parameters expected by the metrics, you will get inconsistent evaluation results or a ScoreMethodMissingArguments exception (see "Missing arguments for scoring methods" below).

3. Choose the evaluation Dataset

In order to create an evaluation experiment, you will need to have a Dataset that includes all your test cases.

If you have already created a Dataset, you can use the Opik.get_or_create_dataset function to fetch it:

If you don’t have a Dataset yet, you can insert dataset items using the Dataset.insert method. You can call this method multiple times as Opik performs data deduplication before ingestion:
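Both calls can be sketched in one small helper. It takes the client as an argument so it can be read and exercised without a running backend; the dataset name and item fields are hypothetical.

```python
def load_dataset(client, name: str, items: list[dict]):
    """Fetch the dataset (creating it if needed) and insert the given items."""
    dataset = client.get_or_create_dataset(name=name)
    dataset.insert(items)
    return dataset


# Hypothetical test cases; the field names are illustrative.
TEST_CASES = [
    {"user_question": "What is the capital of France?", "expected_output": "Paris"},
    {"user_question": "What is the capital of Italy?", "expected_output": "Rome"},
]
```

With the SDK installed and configured, this would be called as load_dataset(opik.Opik(), "my-dataset", TEST_CASES). Because of the deduplication described above, calling it again with the same items should not create duplicates.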

4. Choose evaluation metrics

Opik provides a set of built-in evaluation metrics that you can choose from. These are broken down into two main categories:

- Heuristic metrics: These metrics are deterministic in nature, for example equals or contains

- LLM-as-a-judge: These metrics use an LLM to judge the quality of the output; typically these are used for detecting hallucinations or context relevance

In the same evaluation experiment, you can use multiple metrics to evaluate your application:
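To make the heuristic category concrete, here is a plain-Python sketch of what equals-style and contains-style checks compute, applied together to one task output. These functions are illustrative stand-ins, not Opik's built-in metric classes, and the parameter names output and reference are assumptions.

```python
def equals_score(output: str, reference: str) -> float:
    """1.0 when the output matches the reference exactly, else 0.0."""
    return 1.0 if output == reference else 0.0


def contains_score(output: str, reference: str) -> float:
    """1.0 when the reference appears in the output (case-insensitive), else 0.0."""
    return 1.0 if reference.lower() in output.lower() else 0.0


def score_all(task_output: dict, metrics: dict) -> dict:
    """Apply every metric to the same task output and collect the scores by name."""
    return {name: metric(**task_output) for name, metric in metrics.items()}


scores = score_all(
    {"output": "The capital of France is Paris.", "reference": "Paris"},
    {"equals": equals_score, "contains": contains_score},
)
print(scores)  # {'equals': 0.0, 'contains': 1.0}
```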

Each metric expects the data in a certain format. You will need to ensure that the task you defined in step 2 returns the data in the correct format.

5. Run the evaluation

Now that we have the task we want to evaluate, the dataset to evaluate on, and the metrics we want to evaluate with, we can run the evaluation:
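A sketch of the call, assuming evaluate is imported from opik.evaluation and accepts the keyword arguments shown. The configuration values are hypothetical, and the wrapper function is only there to keep the SDK import out of module load.

```python
# Hypothetical values describing this run.
EXPERIMENT_CONFIG = {
    "model": "gpt-4o-mini",
    "temperature": 0.0,
    "prompt_template": "Answer the question: {question}",
}


def run_experiment(dataset, task, metrics):
    """Run one evaluation experiment and return whatever evaluate returns."""
    # Imported here so the module can be loaded without the SDK installed.
    from opik.evaluation import evaluate

    return evaluate(
        dataset=dataset,
        task=task,
        scoring_metrics=metrics,
        experiment_config=EXPERIMENT_CONFIG,
    )
```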

You can use the experiment_config parameter to store information about your evaluation task. Typically we see teams store information about the prompt template, the model used and model parameters used to evaluate the application.

Advanced usage

Using async evaluation tasks

The evaluate function does not support async evaluation tasks. If you pass an async task, you will get an error similar to:

As it is not always possible to remove async logic from your LLM application, we recommend using asyncio.run within the evaluation task:
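A minimal sketch of this wrapper. The async application is a hypothetical stand-in, and the user_question field is an assumed dataset key.

```python
import asyncio


async def async_llm_application(question: str) -> str:
    """Hypothetical async application; the sleep stands in for awaited I/O."""
    await asyncio.sleep(0)
    return f"Echo: {question}"


def evaluation_task(dataset_item: dict) -> dict:
    # The task itself stays synchronous; asyncio.run drives the coroutine to
    # completion on a fresh event loop and returns its result.
    output = asyncio.run(async_llm_application(dataset_item["user_question"]))
    return {"output": output}
```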

This should solve the issue and allow you to run the evaluation.

If you are running in a Jupyter notebook, you will need to add the following line to the top of your notebook:

otherwise you might get the error RuntimeError: asyncio.run() cannot be called from a running event loop

The evaluate function uses multi-threading under the hood to speed up the evaluation run. Using both asyncio and multi-threading can lead to unexpected behavior and hard-to-debug errors.

If you run into any issues, you can disable the multi-threading in the SDK by setting task_threads to 1:

Missing arguments for scoring methods

The opik.exceptions.ScoreMethodMissingArguments exception means that the dataset item and task output dictionaries do not contain all the arguments expected by the scoring method. The evaluate function merges the dataset item and task output dictionaries and passes the result to the scoring method. For example, if the dataset item contains the keys user_question and context while the evaluation task returns a dictionary with the key output, the scoring method will be called as scoring_method.score(user_question='...', context='...', output='...'). The exception is raised if the scoring method requires an argument that is not in the merged dictionary.

You can solve this by either updating the dataset item or evaluation task to return the missing arguments or by using the scoring_key_mapping parameter of the evaluate function. In the example above, if the scoring method expects input as an argument, you can map the user_question key to the input key as follows:
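The merge-then-map behavior can be modeled in a few lines of plain Python. This is a simplified illustration, not the SDK's implementation: the helper, the scoring function, and the rule that task output wins on a key clash are all assumptions.

```python
def build_scoring_kwargs(dataset_item, task_output, scoring_key_mapping=None):
    """Simplified model of the merge step, not the SDK's actual code."""
    # Assumption: on a key clash the task output wins.
    merged = {**dataset_item, **task_output}
    # Each entry reads {argument expected by the metric: key present in the data}.
    for metric_arg, source_key in (scoring_key_mapping or {}).items():
        merged[metric_arg] = merged[source_key]
    return merged


def score(input, output, **ignored_kwargs):
    """Hypothetical scoring method that requires `input` and `output`."""
    return 1.0 if input and output else 0.0


dataset_item = {"user_question": "What is the capital of France?", "context": "..."}
task_output = {"output": "Paris"}

# Without a mapping, `input` is missing and the call fails.
try:
    score(**build_scoring_kwargs(dataset_item, task_output))
except TypeError as error:
    print(f"missing argument: {error}")

# With the mapping, user_question is also passed as `input`.
kwargs = build_scoring_kwargs(dataset_item, task_output, {"input": "user_question"})
print(score(**kwargs))  # 1.0
```

In the real call, the same dictionary would be passed as evaluate(..., scoring_key_mapping={"input": "user_question"}).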

Linking prompts to experiments

The Opik prompt library can be used to version your prompt templates.

When creating an Experiment, you can link the Experiment to a specific prompt version:
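A sketch of the linking step, assuming the SDK exposes a Prompt class that takes a name and a template, and that evaluate accepts a prompt argument; check both against your installed version. The prompt name and template are hypothetical.

```python
# Hypothetical prompt name and template.
PROMPT_NAME = "qa-prompt"
PROMPT_TEMPLATE = "Answer the following question: {{question}}"


def run_linked_experiment(dataset, task, metrics):
    """Create (or reuse) a prompt version and link the experiment to it."""
    # Imported here so the module can be loaded without the SDK installed.
    import opik
    from opik.evaluation import evaluate

    prompt = opik.Prompt(name=PROMPT_NAME, prompt=PROMPT_TEMPLATE)
    return evaluate(
        dataset=dataset,
        task=task,
        scoring_metrics=metrics,
        prompt=prompt,
    )
```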

The experiment will now be linked to the prompt allowing you to view all experiments that use a specific prompt:

Logging traces to a specific project

You can use the project_name parameter of the evaluate function to log evaluation traces to a specific project:

Evaluating a subset of the dataset

You can use the nb_samples parameter to specify the number of samples to use for the evaluation. This is useful if you only want to evaluate a subset of the dataset.

Disabling threading

To evaluate datasets more efficiently, Opik uses multiple background threads. If this causes issues, you can disable them by setting task_threads and scoring_threads to 1, which leads Opik to run all calculations in the main thread.
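The optional arguments from the last three sections can be combined in a single call. The sketch below uses hypothetical values and passes only project_name, nb_samples and task_threads; whether your SDK version also accepts scoring_threads should be checked against its evaluate signature.

```python
# Hypothetical values for the optional arguments described above.
EVALUATE_OPTIONS = {
    "project_name": "my-evaluation-project",  # log evaluation traces to this project
    "nb_samples": 10,                         # evaluate only 10 dataset samples
    "task_threads": 1,                        # disable multi-threading for tasks
}


def run_experiment(dataset, task, metrics):
    """Run the evaluation with the options above."""
    # Imported here so the module can be loaded without the SDK installed.
    from opik.evaluation import evaluate

    return evaluate(
        dataset=dataset,
        task=task,
        scoring_metrics=metrics,
        **EVALUATE_OPTIONS,
    )
```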

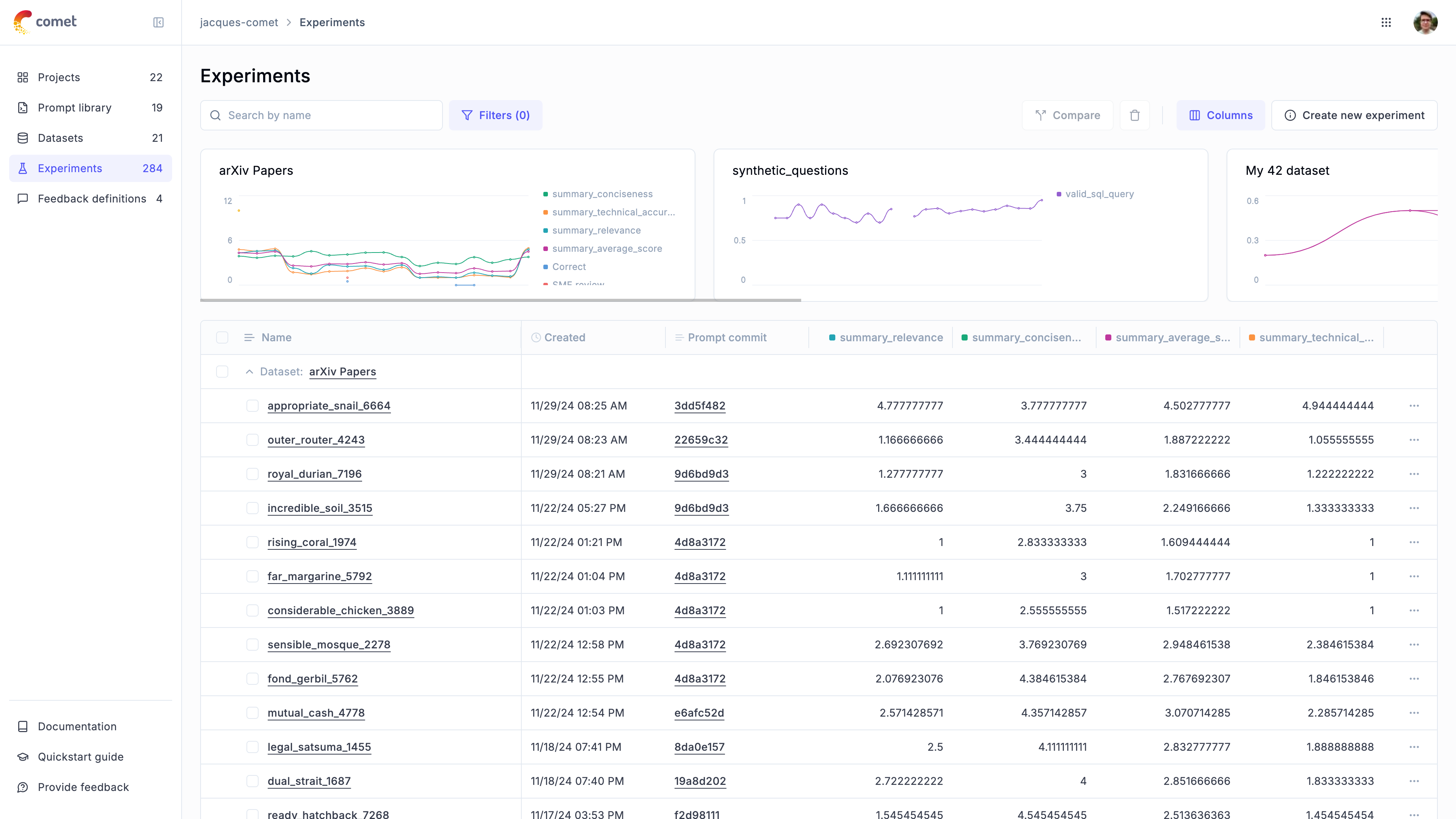

Accessing logged experiments

You can access all the experiments logged to the platform from the SDK with the Opik.get_experiments_by_name and Opik.get_experiment_by_id methods:
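A minimal sketch of both lookups. The client is passed in as an argument so the helper can be read without a running backend; the helper name is hypothetical.

```python
def fetch_experiments(client, name: str, experiment_id: str):
    """Look up all experiments with a given name and one experiment by id."""
    experiments_by_name = client.get_experiments_by_name(name)
    experiment = client.get_experiment_by_id(experiment_id)
    return experiments_by_name, experiment
```

With the SDK installed and configured, client would be an opik.Opik() instance, and the name and id are the ones recorded when the experiment ran.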