Agent Optimization

Opik Agent Optimizer is a turnkey, open-source agent and prompt optimization SDK. It automatically tunes prompts, tools, and agent workflows using the datasets, metrics, and traces you already log to Opik. Instead of hand-editing instructions and re-running evaluations, pick an optimizer (MetaPrompt, HRPO, Evolutionary, GEPA, etc.) and let it iterate for you online or fully offline inside Docker and Kubernetes.

Why teams choose Opik Agent Optimizer

- Automatic prompt optimization – end-to-end workflow that installs in minutes and runs locally or in your stack.

- Open-source and framework agnostic – no lock-in, use Opik’s first-party optimizers or community favorites like GEPA in the same SDK.

- Agent-aware – optimize beyond system prompts, including MCP tool signatures, function-calling schemas, and multi-step agent workflows.

- Deep observability – every trial logs prompts, tool calls, traces, and metric reasons to Opik so you can explain and ship changes confidently.

Key capabilities

MetaPrompt, HRPO, Few-Shot Bayesian, Evolutionary, GEPA, Parameter tuning. Swap optimizers without changing your workflow.

Optimize full agent systems with multiple prompts, tools, and orchestration logic, not just a single system message.

Optimize tool schemas and function calling alongside prompt text with the same metrics and datasets.

Track trials, candidates, datasets, and trace-level evidence to explain and ship improvements confidently.

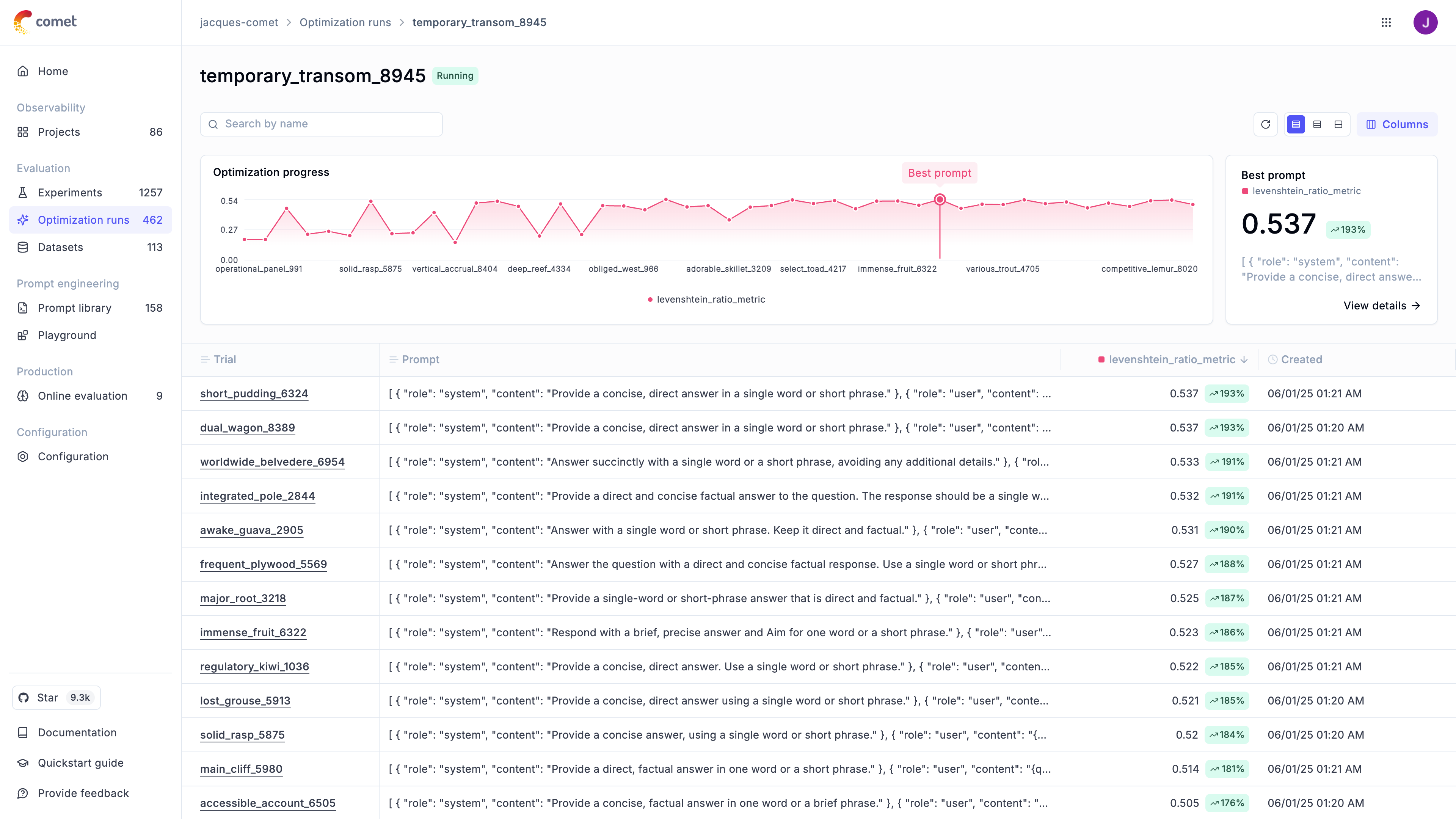

Run optimizer workflows directly from the UI with no-code configuration and result review.

Run the SDK locally or inside Opik Docker to keep data inside your network.

How it works

1. Prepare data & metrics

Use Opik datasets (CSV upload, API, or trace exports) plus deterministic metrics/ScoreResult functions. See Define datasets and Define metrics.

2. Pick an optimizer

Choose the best algorithm for your task (see Optimization algorithms). All optimizers expose the same API, so you can swap them easily or chain runs.

Start fast

- Want a no-code workflow? Use Optimization Studio to run optimizations from the Opik UI.

- Follow the Quickstart to run your first optimization locally.

- Prefer notebooks? Launch the Quickstart notebook.

- Need scenario-specific guidance? Explore the Cookbooks.

Optimization Algorithms

The optimizer implements both proprietary and open-source optimization algorithms. Each one has its strengths and weaknesses, we recommend first trying out either GEPA or HRPO (Hierarchical Reflective Prompt Optimizer) as a first step:

Want to see numbers? Check the new optimizer benchmarks page for the latest performance table and instructions for running the benchmark suite yourself.

Next Steps

- Explore specific Optimizers for algorithm details.

- Refer to the FAQ for common questions and troubleshooting.

- Refer to the API Reference for detailed configuration options.

🚀 Want to see Opik Agent Optimizer in action? Check out our Example Projects & Cookbooks for runnable Colab notebooks covering real-world optimization workflows, including HotPotQA and synthetic data generation.