Pytest integration

Ensuring your LLM application is working as expected is a crucial step before deploying to production. Opik provides a Pytest integration so that you can easily track both the overall pass/fail rate of your test suite and the pass/fail rate of each individual test.

Using the Pytest Integration

We recommend wrapping your tests with the llm_unit decorator so that Opik can track their results and provide you with a detailed report. It also works well in conjunction with the track decorator, which is used to trace your LLM application.
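The pattern can be sketched as follows. This is a minimal illustration, assuming an Opik Python SDK that exports both llm_unit and track from the top-level opik package; llm_application and its hard-coded answer are hypothetical placeholders for your real LLM call. The try/except fallback is illustration-only, so the file still runs when the SDK is not installed; in a real project you would import the decorators directly.

```python
from typing import Any, Callable

try:
    # Assumption: the Opik SDK exports both decorators from the top-level package.
    from opik import llm_unit, track
except ImportError:
    # Illustration-only fallback: no-op stand-ins used when Opik is not installed.
    def track(func: Callable[..., Any]) -> Callable[..., Any]:
        return func

    def llm_unit(*_args: Any, **_kwargs: Any) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
            return func

        return decorator


@track  # traces each call to the application
def llm_application(user_question: str) -> str:
    # Hypothetical placeholder: replace with a call to your LLM.
    return "Paris"


@llm_unit()  # lets Opik record this test's pass/fail result
def test_simple_passing_test() -> None:
    user_question = "What is the capital of France?"
    response = llm_application(user_question)
    assert response == "Paris"
```

Run the file with pytest as you normally would; the decorated test is collected like any other test function.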

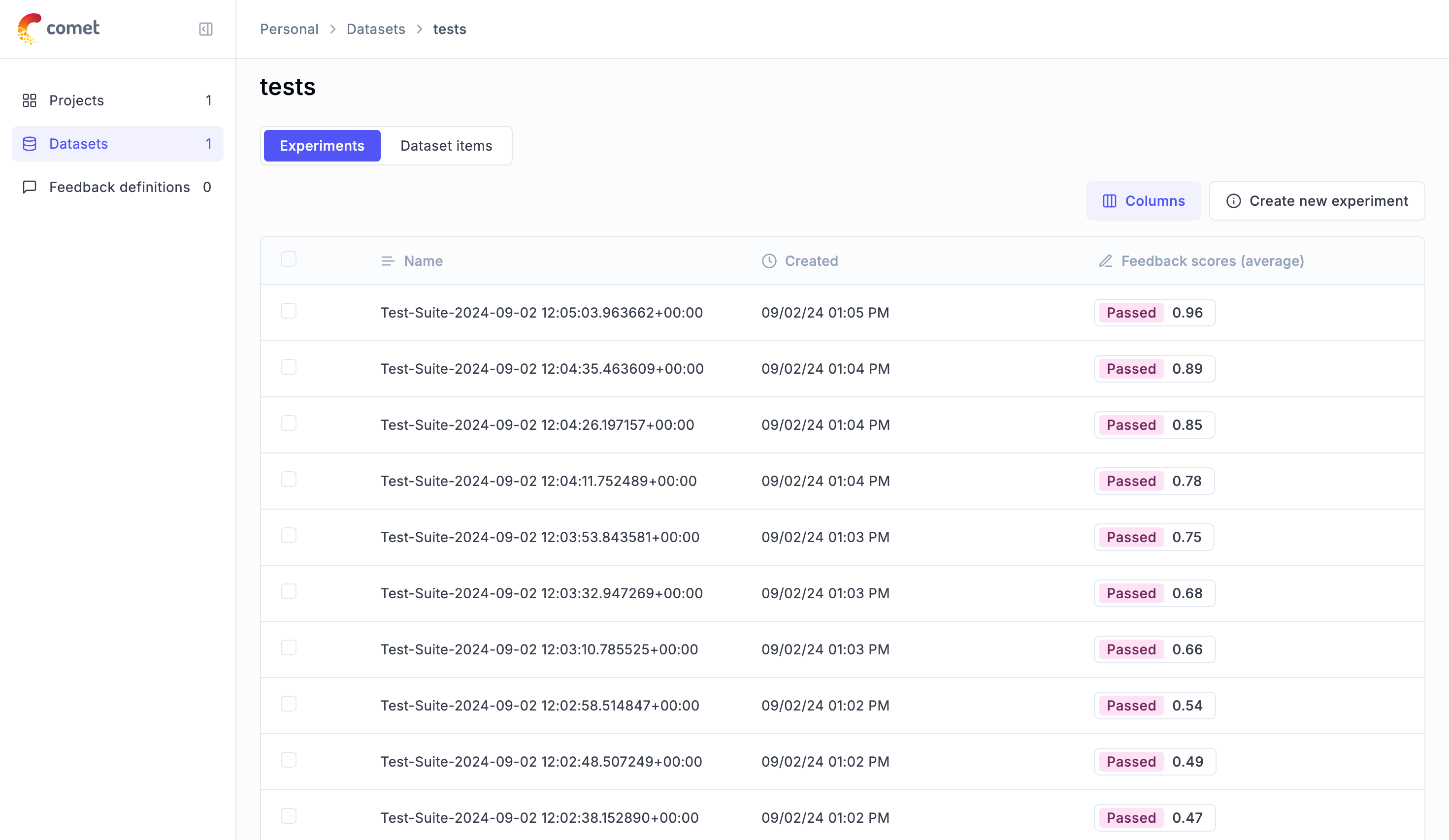

When you run the tests, Opik creates a new experiment for that run and logs each test result to it. If you navigate to the tests dataset, you will see one experiment per test run.

If you are evaluating your LLM application during development, we recommend using the evaluate function instead, as it provides a more detailed report. You can learn more about the evaluate function in the evaluation documentation.

Advanced Usage

The llm_unit decorator also works well in conjunction with the Pytest parametrize decorator (pytest.mark.parametrize), which lets you run the same test with different inputs:
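A possible sketch of this combination is shown below. It assumes llm_unit accepts an expected_output_key argument naming the test parameter that holds the expected answer; treat that parameter as an assumption to check against the SDK reference. The lookup-table llm_application and the test name test_capital_cities are hypothetical, and the try/except fallback is illustration-only so the file runs without Opik installed. llm_unit is placed above pytest.mark.parametrize so that it wraps the already-parametrized test.

```python
from typing import Any, Callable

import pytest

try:
    # Assumption: the Opik SDK exports both decorators from the top-level package.
    from opik import llm_unit, track
except ImportError:
    # Illustration-only fallback: no-op stand-ins used when Opik is not installed.
    def track(func: Callable[..., Any]) -> Callable[..., Any]:
        return func

    def llm_unit(*_args: Any, **_kwargs: Any) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
        def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
            return func

        return decorator


@track
def llm_application(user_question: str) -> str:
    # Hypothetical stand-in for a real LLM call: a small lookup table.
    answers = {
        "What is the capital of France?": "Paris",
        "What is the capital of Germany?": "Berlin",
    }
    return answers.get(user_question, "I don't know")


# Assumption: expected_output_key names the argument holding the expected answer.
@llm_unit(expected_output_key="expected_output")
@pytest.mark.parametrize(
    "user_question, expected_output",
    [
        ("What is the capital of France?", "Paris"),
        ("What is the capital of Germany?", "Berlin"),
    ],
)
def test_capital_cities(user_question: str, expected_output: str) -> None:
    response = llm_application(user_question)
    assert response == expected_output
```

When you run pytest on this file, test_capital_cities executes once per tuple in the parametrize list, so each input/expected-output pair is reported as a separate test case.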