Observability for OpenAI (Python) with Opik

If you are using OpenAI’s Agents framework, we recommend using the OpenAI Agents integration instead.

This guide explains how to integrate Opik with the OpenAI Python SDK. By wrapping your client with the track_openai function provided by opik, you can track and evaluate your OpenAI API calls within your Opik projects: Opik automatically logs the input prompt, the model used, token usage, and the generated response.

Account Setup

Comet provides a hosted version of the Opik platform: simply create an account and grab your API key.

You can also run the Opik platform locally; see the installation guide for more information.

Getting Started

Installation

First, ensure you have both the opik and openai packages installed, for example by running pip install opik openai.

Configuring Opik

Configure the Opik Python SDK for your deployment type. See the Python SDK Configuration guide for detailed instructions on:

- CLI configuration: opik configure

- Code configuration: opik.configure()

- Self-hosted vs Cloud vs Enterprise setup

- Configuration files and environment variables
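As a minimal sketch of the environment-variable route, the snippet below sets Opik's settings from Python before the SDK is used. The variable names (OPIK_API_KEY, OPIK_WORKSPACE, OPIK_PROJECT_NAME, OPIK_URL_OVERRIDE) are assumed to match the Python SDK Configuration guide, and every value, including the project name, is a placeholder; running opik configure or calling opik.configure() remains the simpler option.

```python
import os

# Assumed variable names from the Opik Python SDK Configuration guide.
# The values are placeholders; replace them with your own.
OPIK_SETTINGS = {
    "OPIK_API_KEY": "<your-opik-api-key>",           # Comet-hosted Opik
    "OPIK_WORKSPACE": "<your-workspace-name>",       # Comet-hosted Opik
    "OPIK_PROJECT_NAME": "openai-integration-demo",  # hypothetical project name
}
# A self-hosted deployment would instead set OPIK_URL_OVERRIDE to the URL of
# its Opik API and typically needs no API key.


def apply_opik_settings(settings: dict) -> None:
    # setdefault never overwrites a value already exported in the shell.
    for name, value in settings.items():
        os.environ.setdefault(name, value)


apply_opik_settings(OPIK_SETTINGS)
```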

Configuring OpenAI

To configure OpenAI, you need an OpenAI API key. You can find or create your API key in your OpenAI account dashboard.

You can set it as the OPENAI_API_KEY environment variable, for example by running export OPENAI_API_KEY="<your-api-key>" in your shell.

Or set it programmatically from Python before creating the OpenAI client.
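One way to set the key programmatically, sketched below, is to prompt for it with Python's getpass module only when OPENAI_API_KEY is not already set. The helper name ensure_openai_api_key is hypothetical.

```python
import getpass
import os


def ensure_openai_api_key() -> str:
    # Reuse the key if it is already exported; otherwise prompt for it
    # without echoing it to the terminal.
    if "OPENAI_API_KEY" not in os.environ:
        os.environ["OPENAI_API_KEY"] = getpass.getpass("Enter your OpenAI API key: ")
    return os.environ["OPENAI_API_KEY"]


# Call ensure_openai_api_key() once at startup, before creating the client.
```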

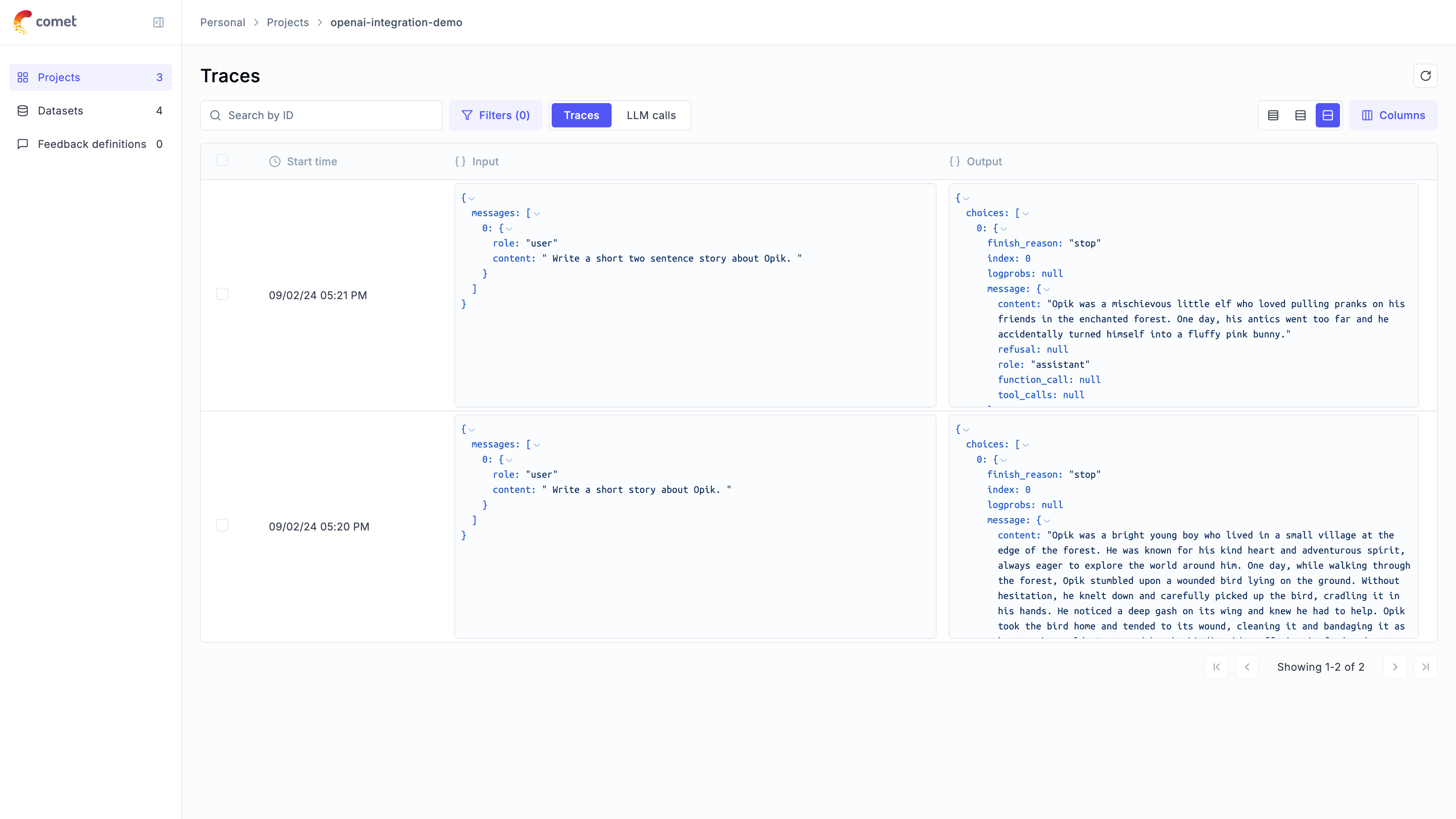

Logging LLM calls

To log LLM calls to Opik, wrap the OpenAI client with track_openai. Every supported call made through the wrapped client is then logged to Opik.

Advanced Usage

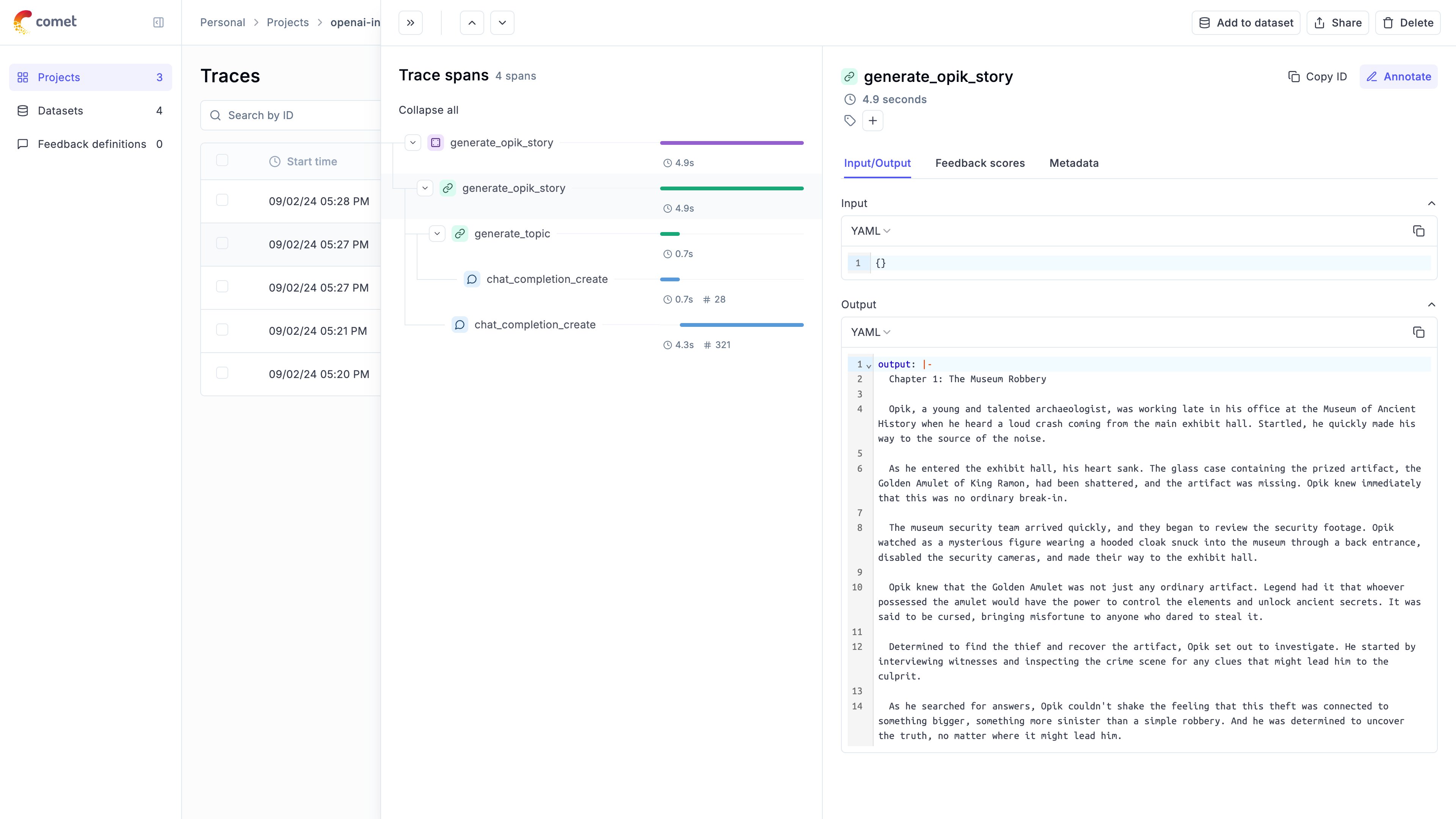

Using with the @track decorator

If you have multiple steps in your LLM pipeline, you can use the @track decorator to log each step. Any OpenAI call made within one of these steps is then associated with that step.

The resulting trace can be viewed in the Opik UI, with hierarchical spans showing the relationship between the different steps.

Using Azure OpenAI

The OpenAI integration also supports Azure OpenAI Service. To use Azure OpenAI, initialize your client with your Azure configuration and wrap it with track_openai, just as you would the standard OpenAI client.

Cost Tracking

The track_openai wrapper automatically tracks token usage and cost for all supported OpenAI models.

Cost information is captured and displayed in the Opik UI, including:

- Token usage details

- Cost per request based on OpenAI pricing

- Total trace cost

View the complete list of supported models and providers on the Supported Models page.
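Opik computes these figures itself from its own pricing data, so no code is required. To make the per-request arithmetic concrete, the sketch below multiplies token counts by per-million-token prices and sums request costs into a trace total. The prices are hypothetical, not OpenAI's actual rates, and the helper names are illustrative; the token counts would come from response.usage.prompt_tokens and response.usage.completion_tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    # Hypothetical USD prices per one million tokens; real prices vary by model.
    input_per_million: float
    output_per_million: float


def estimate_cost(prompt_tokens: int, completion_tokens: int, pricing: Pricing) -> float:
    # cost = prompt_tokens * input price + completion_tokens * output price
    return (
        prompt_tokens * pricing.input_per_million
        + completion_tokens * pricing.output_per_million
    ) / 1_000_000


def trace_cost(request_costs: list) -> float:
    # Conceptually, a trace's total is the sum of the costs of its LLM calls.
    return sum(request_costs)
```

For example, 1,000 prompt tokens and 500 completion tokens at hypothetical prices of $0.15 and $0.60 per million tokens cost 1,000 × 0.15 / 1,000,000 + 500 × 0.60 / 1,000,000 = $0.00045.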

Grouping traces into conversational threads using thread_id

Threads in Opik are collections of traces that are grouped together using a unique thread_id.

The thread_id can be passed to the OpenAI client as a parameter; all traces logged with the same thread_id are grouped into a single thread.

More information on logging chat conversations can be found in the Log conversations section.

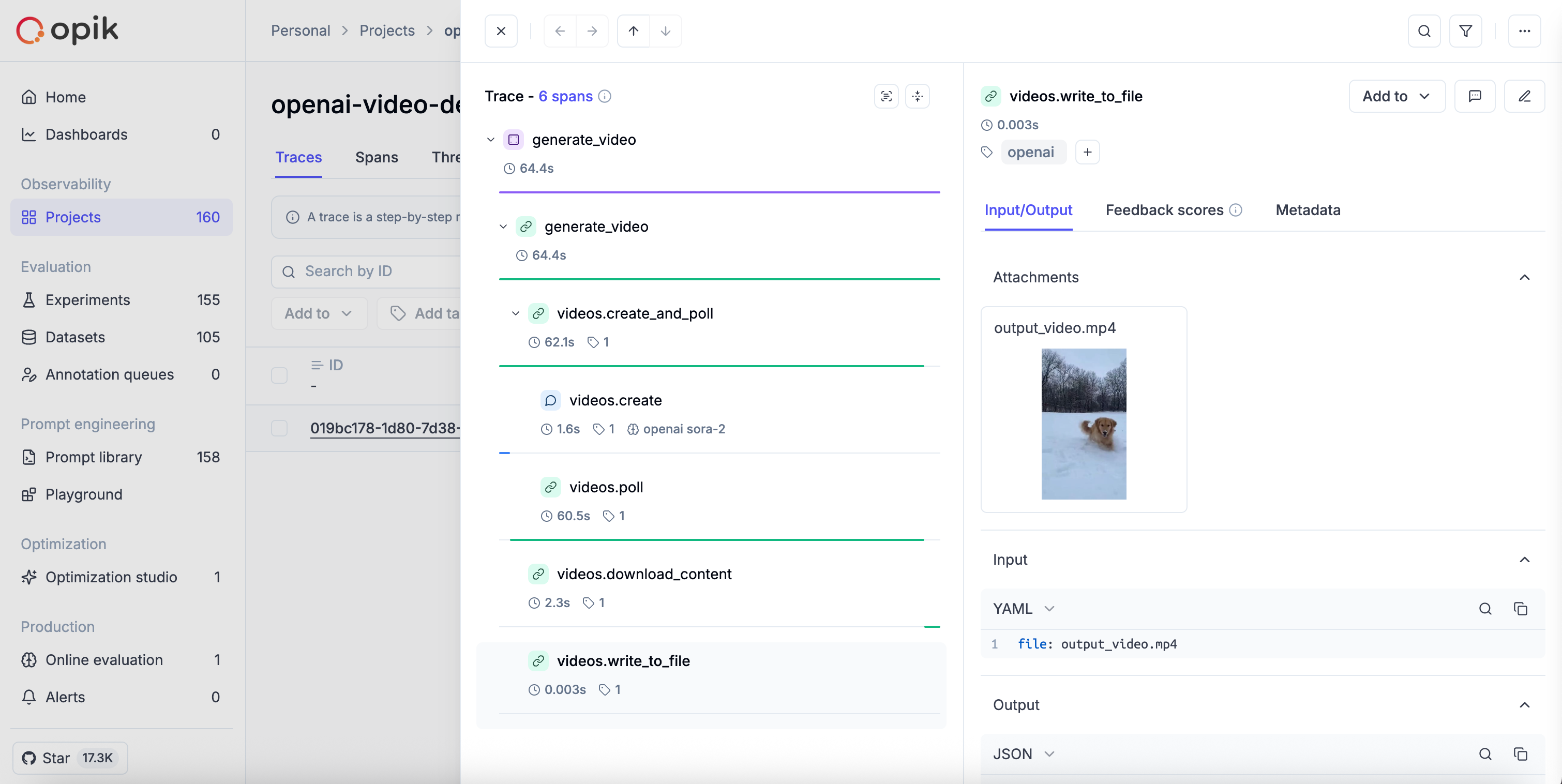

Video Generation (Sora)

The track_openai wrapper also supports OpenAI’s video generation API (Sora). When you generate videos, Opik automatically tracks the video creation process and logs the generated video as an attachment when you download it via the client API.

The trace shows the full video generation workflow, including the video creation, polling and download steps, with the generated video as an attachment.

The videos.retrieve method is intentionally not tracked, because the poll method calls retrieve many times internally and would create excessive noise in your traces. If you need to track retrieve calls (for example, if you check progress manually instead of using poll), you can decorate the method yourself.

Supported OpenAI methods

The track_openai wrapper supports the following OpenAI methods:

- openai_client.chat.completions.create(), including support for stream=True mode

- openai_client.beta.chat.completions.parse()

- openai_client.beta.chat.completions.stream()

- openai_client.responses.create()

- openai_client.videos.create()

- openai_client.videos.create_and_poll()

- openai_client.videos.poll()

- openai_client.videos.download_content()

- openai_client.videos.list()

- openai_client.videos.delete()

If you would like to track another OpenAI method, please let us know by opening an issue on GitHub.