Using Opik with Instructor

Instructor is a Python library, built on top of Pydantic, for working with structured outputs from LLMs. It provides a simple way to manage schema validation, retries and streaming responses.

Creating an account on Comet.com

Comet provides a hosted version of the Opik platform. Simply create an account and grab your API key.

You can also run the Opik platform locally. See the installation guide for more information.

Opik Config

Configure your development environment. (If you click the key icon on the left side, you can set API keys that are reusable across notebooks.)

For this demo we are going to use OpenAI, Anthropic and Gemini, so we will need to configure our API keys:

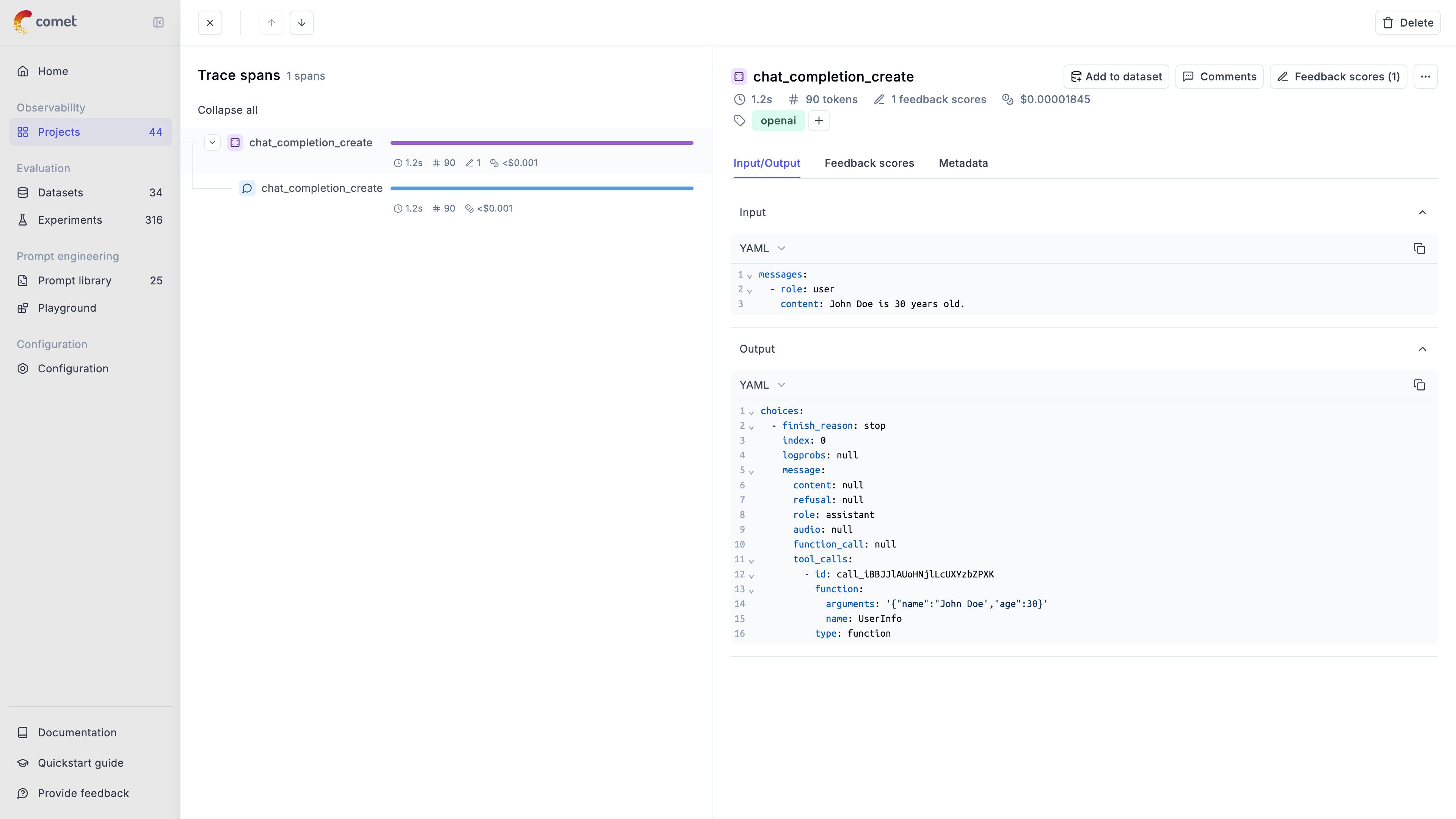
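The key setup described above might look like the following minimal sketch. The helper names `ensure_api_key` and `configure_provider_keys` are hypothetical, and the environment variable names are assumptions based on what each provider's SDK conventionally reads, so check them against the provider documentation.

```python
import getpass
import os

# Assumed variable names; verify them against each provider SDK's documentation.
PROVIDER_KEY_VARS = ("OPENAI_API_KEY", "ANTHROPIC_API_KEY", "GOOGLE_API_KEY")


def ensure_api_key(var_name: str) -> str:
    """Return the key stored in `var_name`, prompting only if it is missing or empty."""
    if not os.environ.get(var_name):
        # getpass hides the typed value so the key does not end up in notebook output.
        os.environ[var_name] = getpass.getpass(f"Enter your {var_name}: ")
    return os.environ[var_name]


def configure_provider_keys() -> None:
    """Make sure a key is available for every provider used in this demo."""
    for var_name in PROVIDER_KEY_VARS:
        ensure_api_key(var_name)
```

Calling `configure_provider_keys()` once at the top of the notebook prompts only for keys that are not already set, so keys stored through the notebook's secrets panel or the shell environment are left untouched.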

Using Opik with the Instructor library

To log traces from Instructor into Opik, we are going to patch the Instructor library. This will log each LLM call to the Opik platform.

For all the integrations, we will first add tracking to the LLM client and then pass it to the Instructor library:
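As a rough sketch of that two-step pattern for OpenAI: the helper names `build_openai_client` and `extract`, the `UserDetail` schema in the usage comment, and the model name `gpt-4o-mini` are all illustrative assumptions rather than part of either library. Third-party imports are kept inside the function so the sketch loads even where the SDKs are not installed.

```python
from typing import Any, Type


def build_openai_client() -> Any:
    """Return an Instructor client whose OpenAI calls are logged to Opik."""
    # Local imports: the module can be loaded without the SDKs installed.
    import instructor
    from openai import OpenAI
    from opik.integrations.openai import track_openai

    tracked_openai = track_openai(OpenAI())  # step 1: add Opik tracking to the LLM client
    return instructor.from_openai(tracked_openai)  # step 2: pass it to Instructor


def extract(client: Any, response_model: Type[Any], text: str,
            model: str = "gpt-4o-mini") -> Any:
    """Request a structured response validated against `response_model`."""
    return client.chat.completions.create(
        model=model,  # assumed model name; use whichever model you have access to
        response_model=response_model,
        messages=[{"role": "user", "content": text}],
    )


# Example usage (needs the SDKs installed and OPENAI_API_KEY set):
#
#   from pydantic import BaseModel
#
#   class UserDetail(BaseModel):  # hypothetical schema
#       name: str
#       age: int
#
#   client = build_openai_client()
#   user = extract(client, UserDetail, "Extract: Jason is 25 years old")
```

Keeping the tracking step separate from the Instructor step means the same `extract` helper can be reused unchanged with any client that exposes `chat.completions.create`.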

Thanks to the track_openai method, all the calls made to OpenAI will be logged to the Opik platform. This approach also works well if you are using the opik.track decorator, as it will automatically log the LLM call made with Instructor to the relevant trace.

Integrating with other LLM providers

The Instructor library supports many LLM providers beyond OpenAI, including Anthropic, AWS Bedrock and Gemini. Opik supports the majority of these providers as well.

Here are two additional code snippets needed for the integration.
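A possible shape for the Anthropic and Gemini variants is sketched below, following the same track-then-wrap pattern. The function names are hypothetical, and the sketch assumes recent versions of the `anthropic`, `google-genai`, `instructor` and `opik` packages. In particular, `instructor.from_genai` and `opik.integrations.genai.track_genai` may not be available in older releases, so treat this as a starting point to check against the installed versions.

```python
from typing import Any


def build_anthropic_client() -> Any:
    """Return an Instructor client whose Anthropic calls are logged to Opik."""
    # Local imports: the module can be loaded without the SDKs installed.
    import instructor
    from anthropic import Anthropic
    from opik.integrations.anthropic import track_anthropic

    tracked_anthropic = track_anthropic(Anthropic())  # add Opik tracking first
    return instructor.from_anthropic(tracked_anthropic)  # then hand over to Instructor


def build_gemini_client() -> Any:
    """Return an Instructor client whose Gemini calls are logged to Opik."""
    # Assumes the google-genai SDK and an Instructor release that ships from_genai.
    import instructor
    from google import genai
    from opik.integrations.genai import track_genai

    tracked_gemini = track_genai(genai.Client())  # add Opik tracking first
    return instructor.from_genai(tracked_gemini)  # then hand over to Instructor
```

Both clients are then used like the OpenAI one, by passing a Pydantic model as `response_model`. Note that Anthropic requests also need a `max_tokens` argument.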