Using Opik with Ollama

Ollama allows users to run, interact with, and deploy AI models locally on their machines without the need for complex infrastructure or cloud dependencies.

In this notebook, we show how to log Ollama LLM calls to Opik using either the OpenAI or the LangChain library.

Getting started

Configuring Ollama

To interact with Ollama from Python, Ollama must be running on your machine. You can learn more about how to install and run Ollama in the quickstart guide.
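It can help to confirm that the Ollama server is reachable from Python before going further. The sketch below uses only the standard library. It assumes Ollama listens on its default address, `http://localhost:11434`, and queries the `/api/tags` endpoint, which lists the models you have pulled locally. The helper names `parse_model_names` and `list_local_models` are hypothetical.

```python
import json
import urllib.error
import urllib.request
from typing import List, Optional

# Assumption: Ollama runs on its default host and port; adjust if yours differs.
OLLAMA_BASE_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> List[str]:
    """Extract model names from an /api/tags response: {"models": [{"name": ...}, ...]}."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 2.0) -> Optional[List[str]]:
    """Return locally available model names, or None if no Ollama server answers."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
            payload = json.load(response)
    except (OSError, ValueError):
        # OSError covers refused connections, HTTP errors and timeouts (URLError subclasses it);
        # ValueError covers a non-JSON reply from a server that is not Ollama.
        return None
    return parse_model_names(payload)


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print(f"No Ollama server found at {OLLAMA_BASE_URL}; start it with `ollama serve`.")
    else:
        print(f"Ollama is running with {len(models)} local model(s): {models}")
```

If the function returns `None`, start the server (for example with `ollama serve`) and pull a model before running the tracing examples.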

Configuring Opik

Opik is available either as a fully open-source local installation or as a hosted solution on Comet.com. The easiest way to get started with Opik is by creating a free Comet account at comet.com.

If you’d like to self-host Opik, you can learn more about the self-hosting options in the Opik documentation.

In addition, you will need to install the Opik Python package (for example with `pip install opik`) and configure it.
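Here is a minimal sketch of this step, assuming the package was installed with `pip install opik`. `opik.configure(use_local=False)` targets the hosted Opik on Comet and may prompt for your API key if none is configured yet. `use_local=True` targets a self-hosted deployment instead. The wrapper `configure_opik` and its `self_hosted` flag are hypothetical conveniences. The `opik` import is deferred so the module loads even before Opik is installed.

```python
import importlib.util


def opik_is_installed() -> bool:
    """Return True if the `opik` package can be imported in this environment."""
    return importlib.util.find_spec("opik") is not None


def configure_opik(self_hosted: bool = False) -> None:
    """Point the Opik SDK at Comet's hosted Opik (default) or at a self-hosted instance."""
    if not opik_is_installed():
        raise RuntimeError("Opik is not installed; run `pip install opik` first.")
    import opik  # deferred import: only needed once we actually configure the SDK

    # use_local=False -> hosted Opik on comet.com (may prompt for an API key);
    # use_local=True  -> a self-hosted Opik deployment.
    opik.configure(use_local=self_hosted)


if __name__ == "__main__":
    configure_opik(self_hosted=False)
```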

Tracking Ollama calls made with OpenAI

Ollama exposes an OpenAI-compatible API, so it can be used with the OpenAI Python library. You can therefore use Opik's OpenAI integration to trace your Ollama calls.
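Here is a minimal sketch under these assumptions:

- `pip install opik openai` has been run.
- An Ollama server is running on the default port.
- A model has already been pulled with `ollama pull llama3.1`. The model name is only an example.

Ollama serves its OpenAI-compatible API under `/v1`. Ollama ignores the API key, but the OpenAI client raises an error if none is supplied, so a placeholder is passed. Wrapping the client with `track_openai` from `opik.integrations.openai` is what sends each call to Opik. The helper names are hypothetical, and the third-party imports are deferred into the function.

```python
from typing import Dict, List

# Assumptions: Ollama on its default port, and "llama3.1" already pulled locally.
OLLAMA_OPENAI_BASE_URL = "http://localhost:11434/v1"
MODEL = "llama3.1"


def build_messages(prompt: str) -> List[Dict[str, str]]:
    """Build a single-turn chat in the OpenAI messages format."""
    return [{"role": "user", "content": prompt}]


def traced_ollama_chat(prompt: str, model: str = MODEL) -> str:
    """Send one chat completion to Ollama through an Opik-tracked OpenAI client."""
    from openai import OpenAI
    from opik.integrations.openai import track_openai

    # Ollama does not check the key, but the OpenAI client errors out if no key is given.
    client = OpenAI(base_url=OLLAMA_OPENAI_BASE_URL, api_key="ollama")
    client = track_openai(client)  # calls made through this wrapped client are logged to Opik

    response = client.chat.completions.create(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content


if __name__ == "__main__":
    print(traced_ollama_chat("Say this is a test"))
```

Only the client object changes. The `chat.completions.create` call is the same as it would be against OpenAI itself.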

Your LLM call is now traced and logged to the Opik platform.

Tracking Ollama calls made with LangChain

To trace Ollama calls made with LangChain, first install the `langchain-ollama` package, for example with `pip install langchain-ollama`.

You can then use the `OpikTracer` class to log all your Ollama calls made with LangChain to Opik.
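Here is a minimal sketch, assuming `pip install opik langchain-ollama`, a running Ollama server and a locally pulled `llama3.1` model (an example name). `OpikTracer` from `opik.integrations.langchain` is a LangChain callback handler. It is therefore passed through the standard `callbacks` entry of the run config. The helper names and the translation prompt are illustrative.

```python
from typing import List, Tuple

MODEL = "llama3.1"  # assumption: any model you have pulled with `ollama pull` works here


def build_translation_messages(sentence: str) -> List[Tuple[str, str]]:
    """Build chat messages as (role, content) tuples, a format LangChain chat models accept."""
    return [
        ("system", "You are a helpful assistant that translates English to French."),
        ("human", sentence),
    ]


def traced_translation(sentence: str, model: str = MODEL) -> str:
    """Run a ChatOllama call with the Opik tracer attached as a callback."""
    from langchain_ollama import ChatOllama
    from opik.integrations.langchain import OpikTracer

    opik_tracer = OpikTracer()
    llm = ChatOllama(model=model, temperature=0)

    # Callbacks passed in the run config receive this run's events, so Opik logs the trace.
    ai_msg = llm.invoke(build_translation_messages(sentence), config={"callbacks": [opik_tracer]})
    return ai_msg.content


if __name__ == "__main__":
    print(traced_translation("I love programming."))
```

Passing the tracer per call keeps the model object reusable. The same tracer can also be attached to a whole chain in the same way.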

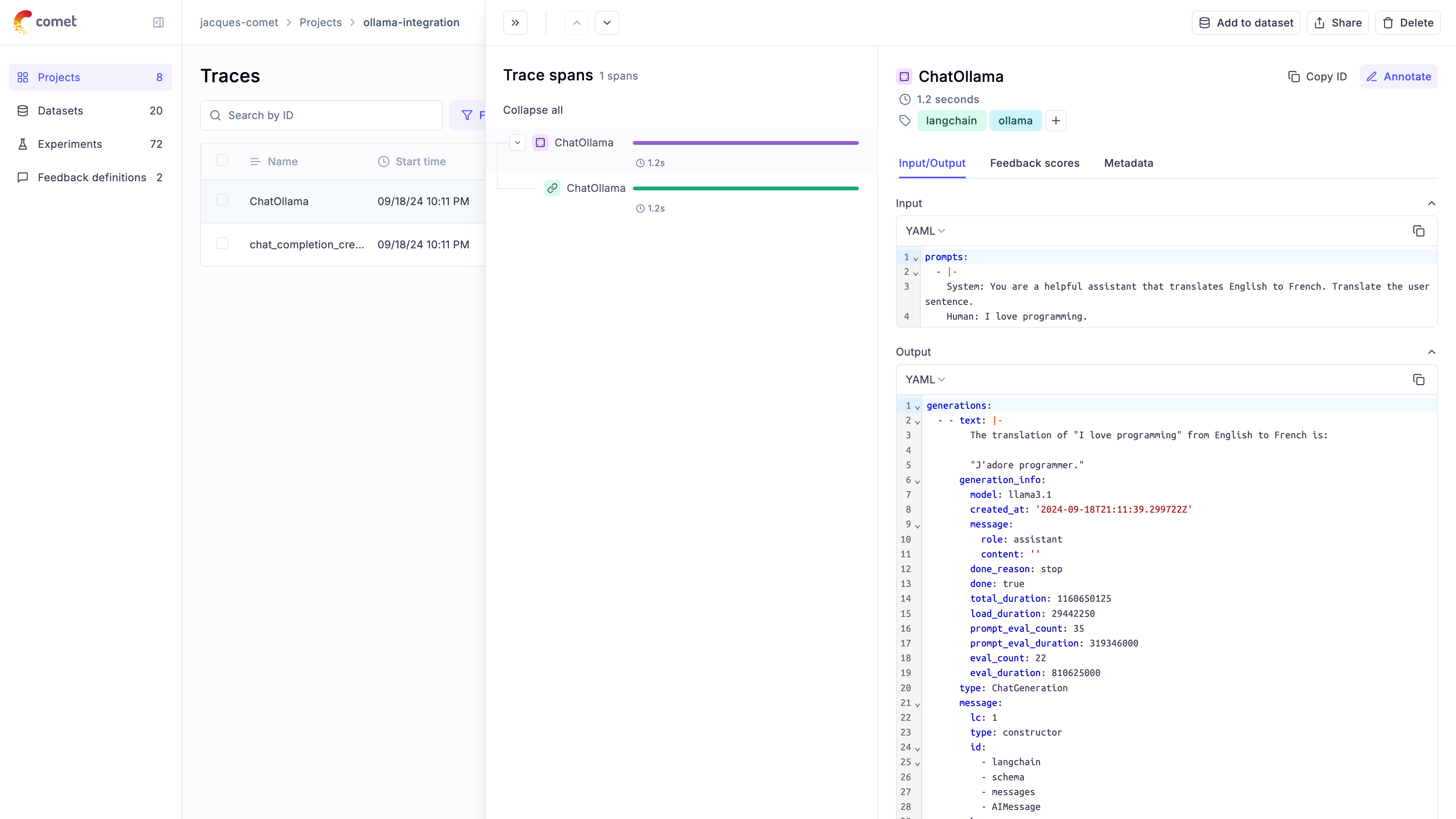

You can now go to the Opik app to see the trace: