Putting Machine Learning Models Successfully into Production

Lessons from speakers at Convergence, a new machine learning industry conference

Machine learning applications are becoming a key way for organizations to innovate and gain a competitive advantage. By applying AI to their data, companies can automate processes, deliver better user experiences, and create business value with production-level machine learning initiatives.

However, if you’ve been paying attention to the conversation in the industry over the past couple of years, you have likely heard some version of this sentiment: deploying models successfully and driving business value with them is hard work.

More specifically, managing ML projects effectively goes beyond the development of traditional software, and most models never make it to production. Accessible data, close collaboration between data science and engineering teams, and many other factors are essential for successful model deployment.

We had the opportunity to sit down with experienced data scientists and industry leaders speaking at the upcoming Convergence 2022 Conference, and we asked them about the biggest challenges and best practices to successfully put machine learning models into production.

If what these experts have to say piques your interest, then be sure to join us on March 2, 2022, for Convergence: a one-day, free-to-attend virtual conference featuring ML practitioners and leaders from amazing organizations like AI2, Slice, Hugging Face, GitLab, Mailchimp, and (of course) Comet!

What do you consider the biggest challenge when putting machine learning models successfully into production?

The challenges behind successfully deploying production-level machine learning models are myriad, and they span the entire model development life cycle, from building reliable datasets, to model development, to deployment and beyond.

The Convergence speakers had some interesting takes on these challenges:

“The biggest challenge is when the deployed model does not work as expected during the model building stage. A large part of it is attributed to the black-box nature of ML models, i.e. when data scientists are not able to reason out the model output. The model once deployed calls for periodic model monitoring and data drift to ensure the model is performing as intended.” —Vidhi Chugh, Staff Data Scientist at Walmart Global Tech
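To make the monitoring point concrete, here is a minimal sketch of one common drift check, the Population Stability Index (PSI), for a single numeric feature. It is not taken from the speakers' own tooling. The function name, the bin count, and the synthetic data are all assumptions for illustration, and the often-quoted reading of PSI (below roughly 0.1 as stable, above roughly 0.25 as a significant shift) is a rule of thumb rather than a standard.

```python
import math
import random


def population_stability_index(reference, live, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the feature is constant

    def bucket_shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = bucket_shares(reference), bucket_shares(live)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Synthetic data (an assumption): training-time values vs. two production samples.
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(5000)]
production_same = [rng.gauss(0.0, 1.0) for _ in range(5000)]
production_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

psi_same = population_stability_index(training, production_same)
psi_shifted = population_stability_index(training, production_shifted)
```

In practice a check like this would run on a schedule for each important feature, with an alert when the score crosses whatever threshold the team has agreed on.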

“Testing models and surrounding predictive feature engineering code is intricate, difficult, and time consuming. At the most basic level, testing probabilistic code is an art in and of itself. Integration testing with these heavy, complex, sometimes hardware specific systems (GPU/TPU) is also quite involved.” —Emily Curtin, Senior Machine Learning Engineer at Mailchimp
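One way to approach testing probabilistic code is to combine two kinds of checks: an exact test under a fixed seed, and a statistical test with an explicit tolerance. The sketch below assumes a hypothetical `noisy_score` function standing in for a stochastic model; the seeds, sample size, and five-standard-error tolerance are illustrative choices, not a recommendation from the speakers.

```python
import random
import statistics


def noisy_score(x, rng):
    """Hypothetical stochastic model: true signal 2*x plus Gaussian noise (sd 0.5)."""
    return 2.0 * x + rng.gauss(0.0, 0.5)


def test_same_seed_is_reproducible():
    # Two generators with the same seed must produce identical outputs.
    rng_a, rng_b = random.Random(42), random.Random(42)
    run_a = [noisy_score(x, rng_a) for x in range(5)]
    run_b = [noisy_score(x, rng_b) for x in range(5)]
    assert run_a == run_b


def test_mean_is_within_tolerance():
    # The average of many samples should sit close to the true signal.
    rng = random.Random(7)
    n = 2000
    samples = [noisy_score(3.0, rng) for _ in range(n)]
    standard_error = 0.5 / n ** 0.5
    assert abs(statistics.fmean(samples) - 6.0) < 5 * standard_error
```

Injecting the random generator as an argument, rather than relying on global state, is what keeps both tests deterministic from run to run.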

“The hardest part is not deploying the model for the first time. It’s the second, third, fourth, and n-th times after that. For most projects I’ve worked on, it’s not easy to figure out how to iterate on your model or whether you can trust that a new model you deploy is clearly better than previous models.” —Peter Gao, CEO at Aquarium

What’s your recipe to get ML models deployed successfully? What has worked for you?

As discussed above, successfully deploying machine learning models is challenging; however, there are some key things you and your team can focus on to increase the chances of putting an ML project successfully into production.

Following a strategic approach when starting an ML project is one of them, according to Vidhi Chugh: “A clear understanding of the business requirements and mapping them with machine learning capabilities is a very crucial preliminary step. Quite often, the organizations fail to address what the limitations of the ML model are and whether it is acceptable by business, commonly called a feasibility check, and is often ignored. Hence, comprehensive workshops with a clear indication of business objectives, data availability, pros, and cons of using ML algorithms early on in the projects prove to be very effective in successful model building and deployment.”

Another key to success is getting (and building processes to act upon) feedback from models in production. Peter Gao of Aquarium noted: “Always deploy a model with some level of monitoring or feedback from production. Get into a steady habit of auditing your production performance and going through a retrain + validation + release of a new model.”
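As a rough illustration of the validation step in that retrain, validate, and release loop, the sketch below uses a paired bootstrap to ask how often a candidate model beats the current one on the same labeled examples. The function names, the 0.95 threshold, and the toy predictions are assumptions made for this example; it is one possible release gate, not a description of how Aquarium or any speaker does it.

```python
import random


def candidate_win_rate(labels, current_preds, candidate_preds, rounds=1000, seed=0):
    """Share of bootstrap resamples in which the candidate is strictly more accurate."""
    rng = random.Random(seed)
    n = len(labels)
    wins = 0
    for _ in range(rounds):
        # Resample example indices with replacement; both models see the same resample.
        idx = [rng.randrange(n) for _ in range(n)]
        current_hits = sum(current_preds[i] == labels[i] for i in idx)
        candidate_hits = sum(candidate_preds[i] == labels[i] for i in idx)
        wins += candidate_hits > current_hits
    return wins / rounds


def should_release(labels, current_preds, candidate_preds, min_win_rate=0.95):
    """Release only if the candidate wins in at least min_win_rate of resamples."""
    return candidate_win_rate(labels, current_preds, candidate_preds) >= min_win_rate


# Toy data (an assumption): the current model errs on every 4th example,
# the candidate on every 10th.
labels = [i % 2 for i in range(200)]
current = [1 - y if i % 4 == 0 else y for i, y in enumerate(labels)]
candidate = [1 - y if i % 10 == 0 else y for i, y in enumerate(labels)]

release_candidate = should_release(labels, current, candidate)
release_identical = should_release(labels, current, current)
```

A gate like this answers a narrower question than "is the new model better?", but it turns that judgment into a repeatable check that can run before every release.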

Emily Curtin stresses the importance of shared configuration: “Cattle, not pets. This is a classic DevOps principle originally describing server configuration that applies perfectly to model deployment. Models should be treated as commodities, should share as much configuration as possible, and should not be given special treatment.”
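One way to picture "cattle, not pets" for models is a single set of serving defaults that every model inherits, with per-model overrides kept small and explicit. The sketch below is only an illustration: `ServingConfig`, its fields, and the model names are hypothetical and do not come from Mailchimp's setup.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ServingConfig:
    """Hypothetical deployment settings shared by every model."""
    replicas: int = 2
    timeout_ms: int = 200
    log_predictions: bool = True
    monitoring_enabled: bool = True


DEFAULTS = ServingConfig()

# Per-model overrides stay short; anything not listed is inherited from DEFAULTS.
OVERRIDES = {
    "churn": {},
    "recommendations": {"replicas": 6},
}


def config_for(model_name):
    """Build a model's config from the shared defaults plus its overrides."""
    return replace(DEFAULTS, **OVERRIDES.get(model_name, {}))


churn_config = config_for("churn")
recs_config = config_for("recommendations")
```

When the override table starts growing for one model, that is a useful signal that it is turning into a pet.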

If you could give one piece of advice to organizations trying to build data science/ML teams and/or applications, what would it be?

According to Emily Curtin, quality should come before quantity when embracing ML: “It’s up to the organization to set up the right incentives for quality ML. Nobody wants to ship crap, but if the organization implicitly values having more ML over having good ML, corners will get cut because good ML is really, really hard. When ML orgs are encouraged to ship more models faster, you could end up with models that might be untested, trained on unknown data, or lacking any kind of observability. Incentivize quality by measuring it: get a big dashboard with your test coverage percentages, your system uptime, your model response time, and watch those pieces start to get prioritized.”

Another piece of advice, from Vidhi Chugh, centers on the importance of organizational agility: “The organizations are bound to be process-driven and thus end up working in silos. The time-consuming approval process to enable data pipelines is the primary deterrent to building ML PoCs. Hence, the organizations need to be agile and act fast in terms of trying multiple AI/ML approaches in time, and that can only happen if the time-consuming process overhead is reduced significantly.”

Moreover, operations can enhance the chances of successful ML projects, says Peter Gao: “The constraining resource in building out ML pipelines is invariably engineering bandwidth. This is the time where bringing on non-technical team members can add a lot of value in shipping better models faster. With the right tools, an operations person can help maintain and improve ML pipelines without needing a bunch of engineering attention.”

What are your preferred tools and frameworks for building and deploying machine learning models?

Having the right combination of tools in place is essential when bringing machine learning to life. Here are the speakers’ favorite tools and frameworks that could help you succeed with your projects:

“As a MLOps professional it’s part of my job to deal with the problems that each of these tools and frameworks present. This is a young field in a fast-changing ecosystem, where very little is standardized. That means there are some exciting tools coming of age that are providing value; with that there’s some that are simply creating noise and hype. Discernment is important. To me, a hallmark of a good tool is that it covers more than one ML library. I’ve seen plenty of Awesome Testing Frameworks for only Sci-Kit models or Really Great Experiment Tracking just for TensorFlow. One good ol’ standby is Spark. It certainly has warts, as all distributed computing frameworks do, but it really can’t be beaten for providing a user-friendly API to Data Scientists for doing feature engineering and batch inference on huge datasets.” —Emily Curtin

“AWS Sagemaker is a very effective tool to build, train and deploy highly scalable ML models. MLflow is an open-source tool that facilitates experimentation, reproducibility, and deployment. MLflow is flexible with respect to programming and different machine learning libraries.” —Vidhi Chugh

“Favorite is different than effective! PyTorch was pretty fun but wasn’t critical for us. Google Dataflow and TensorRT were useful but definitely not fun. Majority of my truly favorite tools were internal to Cruise.” —Peter Gao, CEO at Aquarium

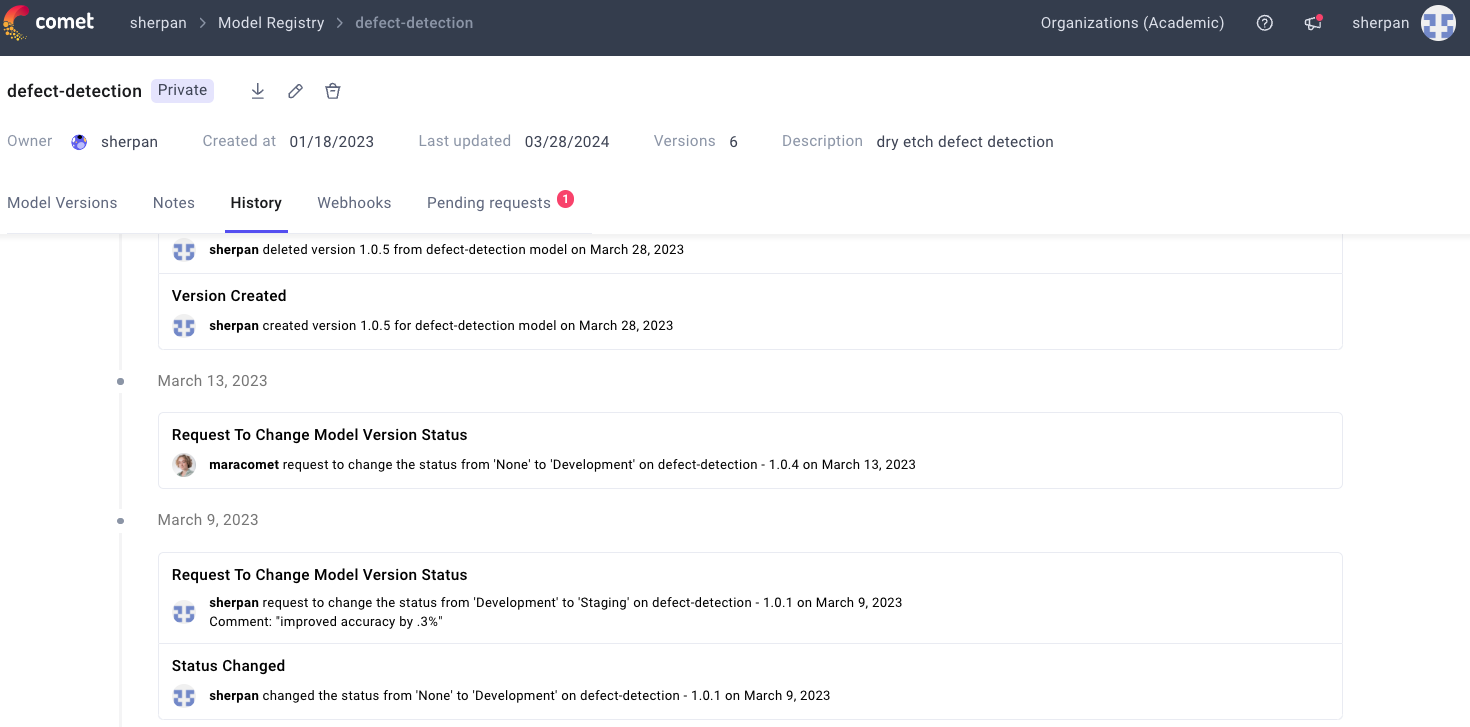

“(An) AB Testing Framework and a Model Registry are must. I prefer the pipeline orchestration to be the one your organization already uses (Airflow, Argo, etc) to avoid maintaining a specific stack.” —Eduardo Bonet, Staff Full Stack Engineer at MLOps at GitLab

Join These Speakers and Others at Convergence!

While there’s a lot to take away from the insights above, they are just a small sample of what’s to come during Convergence. We’d love for you to join us virtually to learn more about how these leaders and others embrace machine learning to create business value, and to discover what’s needed to manage a machine learning project effectively from start to finish.

Enjoy both business and technical tracks, with expert presentations from senior data practitioners and leaders who share their best practices and insights on developing and implementing enterprise machine learning strategies. Check out the full speaker lineup and event agenda, and register right here for free!