OPEN-SOURCE LLM EVALUATION

Track. Evaluate. Test. Ship. Repeat.

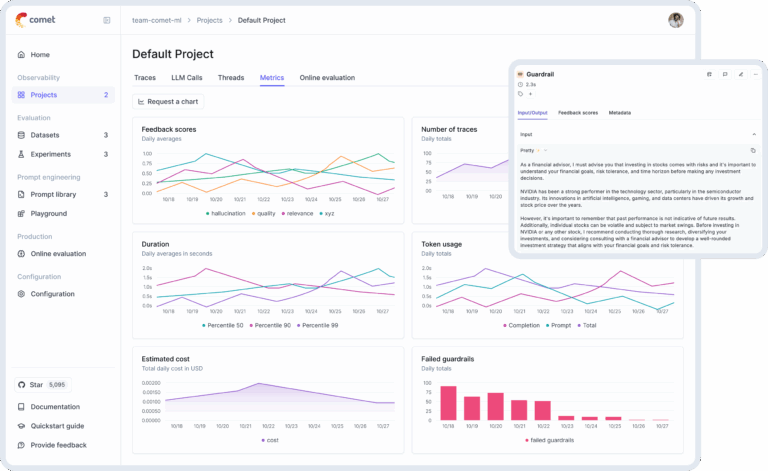

Debug, evaluate, and monitor your LLM applications, RAG systems, and agentic workflows with tracing, eval metrics, and production-ready dashboards.

Now with automated agent optimization and built-in guardrails.

Optimize and Benchmark Your LLM Applications With Ease

Log traces and spans, define and compute evaluation metrics, score LLM outputs, compare performance across app versions, and more.

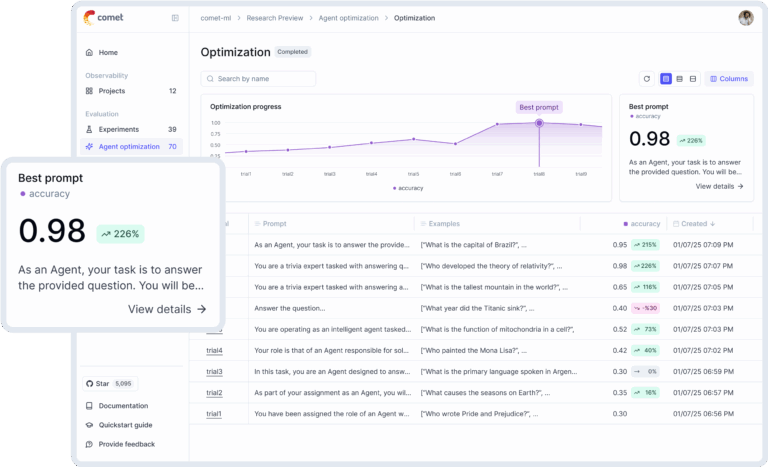

Automatically Optimize Prompts & Agents

- Automate prompt engineering for agents and tools based on your LLM eval metrics.

- Iterate toward top-performing system prompts and freeze them into reusable, production-ready assets.

- Run four powerful optimizers: Few-shot Bayesian, MIPRO, Evolutionary, and LLM-powered MetaPrompt.

Maximize Trust & Safety With Guardrails

- Screen user inputs and LLM outputs to stop unwanted content in its tracks.

- Detect and redact PII, competitor mentions, off-topic discussions, and more.

- Choose Opik’s powerful built-in models or your favorite third-party guardrail libraries.
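To illustrate the idea behind a PII guardrail, here is a minimal sketch that screens text with regular expressions and redacts matches before the text reaches the model or the user. It is not Opik's guardrail API: the `redact_pii` function and its two patterns are hypothetical, and regexes only catch simple, well-formatted cases, which is why production guardrails typically add trained detection models.

```python
import re

# Hypothetical patterns for two common PII types; real guardrails cover many more.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Replace each PII match with a placeholder and report which types were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    clean, types = redact_pii("Reach me at jane.doe@example.com or 555-123-4567.")
    print(clean)  # Reach me at [EMAIL] or [PHONE].
    print(types)  # ['EMAIL', 'PHONE']
```

The same check can run on both sides of the model: once on the user input and once on the LLM output.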

Log Traces & Spans

- Record, sort, search, and understand each step your LLM app takes to generate a response.

- Manually annotate, view, and compare LLM responses in a user-friendly table.

- End-to-end LLM observability: log traces during development and in production.
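Conceptually, a trace records one end-to-end request, and each span records one step inside it, such as a retrieval or an LLM call. The minimal sketch below shows that structure with a hypothetical in-memory `Tracer` built on a context manager; it is an illustration only and does not use the Opik SDK.

```python
import time
from contextlib import contextmanager
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    start: float
    end: float = 0.0
    metadata: dict = field(default_factory=dict)


@dataclass
class Trace:
    name: str
    spans: list[Span] = field(default_factory=list)


class Tracer:
    """Hypothetical in-memory tracer: one Trace per request, one Span per step."""

    def __init__(self) -> None:
        self.traces: list[Trace] = []

    def start_trace(self, name: str) -> Trace:
        trace = Trace(name)
        self.traces.append(trace)
        return trace

    @contextmanager
    def span(self, trace: Trace, name: str, **metadata):
        s = Span(name, start=time.perf_counter(), metadata=metadata)
        try:
            yield s
        finally:
            # Record the end time and keep the span even if the step raised.
            s.end = time.perf_counter()
            trace.spans.append(s)


def answer(tracer: Tracer, question: str) -> str:
    trace = tracer.start_trace("rag_query")
    with tracer.span(trace, "retrieve", query=question) as s:
        docs = ["Opik logs traces and spans."]  # stand-in for a vector search
        s.metadata["num_docs"] = len(docs)
    with tracer.span(trace, "generate"):
        reply = f"Based on {len(docs)} document(s): {docs[0]}"  # stand-in for an LLM call
    return reply


if __name__ == "__main__":
    tracer = Tracer()
    print(answer(tracer, "What does Opik log?"))
    print([s.name for s in tracer.traces[0].spans])  # ['retrieve', 'generate']
```

Because every step is a separate span with its own timing and metadata, you can see which step of a response was slow or produced bad intermediate data.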

Evaluate Your LLM Application’s Performance

- Run experiments with different prompts and evaluate against a test set.

- Choose and run pre-configured evaluation metrics, or define your own with our convenient SDK.

- Use built-in LLM judges for complex checks such as hallucination detection, factuality, and moderation.
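At its core, a custom evaluation metric is a function that takes an LLM output (often with a reference answer) and returns a score plus an explanation. The sketch below implements a simple token-overlap F1 metric and averages it over a tiny test set. The `ScoreResult` class and `token_f1` function are hypothetical names for illustration, not the Opik SDK's metric interface.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ScoreResult:
    name: str
    value: float
    reason: str


def token_f1(output: str, reference: str) -> ScoreResult:
    """Token-overlap F1 between an LLM output and a reference answer (case-insensitive)."""
    out_tokens = output.lower().split()
    ref_tokens = reference.lower().split()
    # Count shared tokens, respecting how often each one appears.
    overlap = sum((Counter(out_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return ScoreResult("token_f1", 0.0, "No tokens in common with the reference.")
    precision = overlap / len(out_tokens)
    recall = overlap / len(ref_tokens)
    f1 = 2 * precision * recall / (precision + recall)
    return ScoreResult("token_f1", f1, f"precision={precision:.2f}, recall={recall:.2f}")


test_set = [
    {"output": "Paris is the capital of France", "reference": "The capital of France is Paris"},
    {"output": "Berlin", "reference": "Madrid"},
]
scores = [token_f1(item["output"], item["reference"]).value for item in test_set]
print(sum(scores) / len(scores))  # 0.5
```

Heuristic metrics like this are cheap and deterministic; LLM judges are better suited to subjective checks such as hallucination or moderation.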

Confidently Test Within Your CI/CD Pipeline

- Establish reliable performance baselines with Opik’s LLM unit tests, built on pytest.

- Build comprehensive test suites to evaluate your entire LLM pipeline on every deploy.
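pytest collects any function whose name starts with `test_`, so an LLM unit test can be an ordinary assertion over a model response. The minimal sketch below assumes a hypothetical `generate_answer` function standing in for your LLM app and a hypothetical keyword check `contains_all`; it uses plain pytest conventions rather than Opik's own testing helpers.

```python
# test_llm_app.py (hypothetical file name): pytest collects files and functions named test_*.


def generate_answer(question: str) -> str:
    """Hypothetical stand-in for your LLM application; replace with a real model call."""
    canned = {
        "What is the capital of France?": "The capital of France is Paris.",
        "What is 2 + 2?": "2 + 2 equals 4.",
    }
    return canned.get(question, "I don't know.")


def contains_all(response: str, keywords: list[str]) -> bool:
    """Hypothetical check: every expected keyword appears in the response (case-insensitive)."""
    text = response.lower()
    return all(k.lower() in text for k in keywords)


# Each case pairs an input with keywords that a correct answer must mention.
CASES = [
    ("What is the capital of France?", ["Paris"]),
    ("What is 2 + 2?", ["4"]),
]


def test_answers_mention_expected_facts():
    for question, keywords in CASES:
        response = generate_answer(question)
        assert contains_all(response, keywords), f"{question!r} -> {response!r}"


def test_unknown_question_admits_uncertainty():
    # Baseline behavior: the app says it does not know instead of inventing an answer.
    assert "don't know" in generate_answer("Who won the 3024 World Cup?").lower()
```

A failing assertion makes `pytest` exit with a non-zero status, which most CI systems treat as a failed build, so a regression blocks the deploy.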

Monitor & Analyze Production Data

- Log all your production traces to quickly identify and diagnose issues.

- Understand your models’ performance on unseen data in production and generate datasets for new dev iterations.

Open Source & Ready to Run

Opik is a true open-source project: its full LLM evaluation feature set is included, free, in the source code. Download the code from GitHub and run it locally, or choose the highly scalable, industry-compliant version built for enterprise teams.

Iterate Across Your LLM App Development Lifecycle

Opik helps analyze the quality of LLM responses at every step of the app development lifecycle so you can debug and optimize with confidence.

Understand Cause & Effect in Complex LLM Systems

With multiple components influencing model behavior and countless outputs generated during development, manual review and vibe checks don’t cut it.

With Opik, you can log traces, compute scores in aggregate, and drill down to the individual prompts and responses that need attention.
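Scoring in aggregate and then drilling down amounts to summarizing a metric across all logged traces and filtering for the traces that land on the wrong side of a threshold. The short sketch below does this over a hypothetical list of scored traces; the field names and the 0.5 threshold are illustrative assumptions, not Opik's data model.

```python
from statistics import mean

# Hypothetical scored traces, e.g. exported after an evaluation run.
scored_traces = [
    {"id": "t1", "input": "Refund policy?", "hallucination": 0.1, "relevance": 0.9},
    {"id": "t2", "input": "Store hours?", "hallucination": 0.7, "relevance": 0.4},
    {"id": "t3", "input": "Shipping cost?", "hallucination": 0.2, "relevance": 0.8},
]


def summarize(traces, metric):
    """Aggregate view: the average of one metric across all traces."""
    return mean(t[metric] for t in traces)


def needs_attention(traces, metric, threshold, higher_is_better=True):
    """Drill-down view: traces whose score is on the wrong side of the threshold."""
    if higher_is_better:
        return [t for t in traces if t[metric] < threshold]
    return [t for t in traces if t[metric] > threshold]


print(round(summarize(scored_traces, "relevance"), 2))  # 0.7
print([t["id"] for t in needs_attention(scored_traces, "relevance", 0.5)])  # ['t2']
print([t["id"] for t in needs_attention(scored_traces, "hallucination", 0.5, higher_is_better=False)])  # ['t2']
```

The aggregate number tells you whether a change helped overall; the filtered list tells you which specific responses to read.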

Built for developers first. Trusted by the world’s largest enterprise teams.

Integrate With Your Existing LLM Workflow

Opik is compatible with any LLM you choose, and it comes with direct, out-of-the-box integrations to get you up and running fast.

Try Opik in Your LLM System

Opik is free to try and fast to configure. Choose the implementation that’s right for your team and start logging your first trace.

Get started today, free.

You don’t need a credit card to sign up, and your Comet account comes with a generous free tier you can actually use, for as long as you like.