Machine Learning Operations

Best ML Tools for Your Next Project

Comet’s Comprehensive Guide on Machine Learning Tools and Their Impact On Your Enterprise

ML tools play an essential role in getting your ML models from training to production monitoring. Here we explore what ML tools are, why they are essential, and how you can integrate them into your existing workflow.

Table of Contents

- Welcome to Comet ML

- Types of Machine Learning

- Stages of an ML Workflow

- Challenges of Manual Machine Learning Workflows

- How Can ML Tools Help?

- What ML Tools Should You Use?

- Top ML Tools for Data Preparation

- Top ML Tools for Model Training

- Top ML Tools for Experiment Tracking

- Top ML Tools for Model Monitoring

- All Your Favorite ML Tools in One Platform

- FAQs

Introduction: Will this guide be helpful to me?

This guide will be helpful to you if you are:

- Learn more about the machine learning process and ML tools to help you work efficiently and effectively.

- Discover other ML tools and platforms to assist you in your existing or future ML projects.

- Optimize your ML workflow using the information that we provide in this article.

Welcome to Comet ML

Machine learning (ML) is an artificial intelligence subfield (AI). The objective of machine learning, in general, is to comprehend the structure of data and fit that data into models that people can understand and use.

And to make it possible for ML teams to track, compare, explain and optimize experiments and models, Comet offers an all-encompassing platform. Comet provides insights and data to build more accurate AI models while improving productivity, collaboration, and visibility across teams.

- Scripts for carrying out the experiment

- Evaluation metrics

- Parameter configurations

- Environment configuration files

- Model weights

- Scripts used for running the experiment

- Examples of validation set predictions (common in computer vision)

- Visualizations of performance (confusion matrix, ROC curve)

Types of Machine Learning

Machine learning is divided into three categories – supervised, unsupervised, and reinforcement. Let’s review each type of ML in detail.

Supervised Machine Learning

Supervised learning is the process of training a model using labeled data. It’s a frequent method for forecasting a result. Assume we want to forecast who is likely to open an email we send. We may leverage data from previous mailings, as well as the “label” indicating whether or not the recipient opened the email.

From there, we can create a training data set that includes information on the receiver (location, demographics, historical email engagement behavior) as well as the label. The ML model learns by experimenting with several methods for predicting the label based on the other data points until it discovers the optimal one. Now, we can use that model to forecast who will open the next email we send.

Unsupervised Machine Learning

Unsupervised learning, as opposed to supervised learning, does not require labeled data. Instead, it seeks to uncover hidden correlations and patterns in data. This is ideal for when we are unsure of what we are looking for.

Clustering algorithms, the most typical form of unsupervised learning, take a huge amount of data points and locate groupings within them. For example, suppose we wish to divide our consumers into groups but don’t know how to do so. They can be identified using clustering methods.

Reinforcement Machine Learning

Reinforcement ML involves a feedback loop. First, the algorithm decides on an action and then examines data from the outside to see its effect. As this occurs again, the model learns the adequate way to respond.

This is comparable to how we learn via trial and error. When learning to walk, for example, we may begin by acting on our legs while collecting input from the surroundings and modifying our actions to optimize the reward (walking).

Choosing which advertisements to display on a website is an example of reinforcement learning in action. In this scenario, we wish to maximize participation. However, with so much advertising to pick from and uncertain payouts, how can we decide?

The reinforcement learning method is similar to doing A/B tests, except in this instance, we allow the reinforcement learning algorithm to choose the variation most likely to win based on user feedback and response to changing conditions.

Stages of an ML Workflow

ML workflow is divided into several stages, which we’ll review in detail below.

1. Data Collection

Machines, as you may know, first learn from the data that you provide them. That’s why it’s critical to acquire reliable data so that your machine learning model can identify the right patterns. The accuracy of your model is determined by the quality of the data you provide the machine. If you use erroneous or obsolete data, you will get incorrect or irrelevant results or forecasts.

Use data from a trusted source because it will have a direct impact on the output of your model. Good data is meaningful, has few missing or duplicated numbers, and accurately represents the numerous subcategories/classes available.

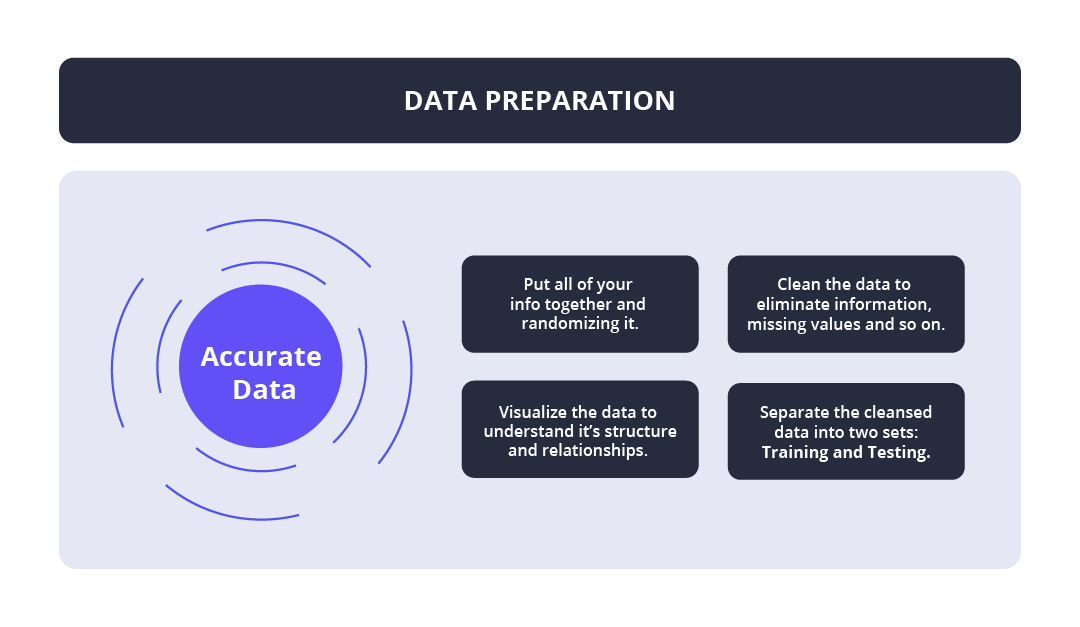

2. Data Preparation

After collecting accurate data, you must prepare it by:

- Putting all of your info together and randomizing it. This ensures that data is distributed evenly and that the ordering does not interfere with the learning process.

- Cleaning the data to eliminate unnecessary information, missing values, duplicate values, columns and rows, data type conversion, and so on. You may need to rearrange the dataset and modify the rows and columns or the index of rows and columns.

- Visualize the data to understand its structure and relationships between the many variables and classifications.

- Separating the cleansed data into two sets: training and testing. The training set is the set from which your model learns. A testing set is used to validate your model after training.

3. Model Selection

A machine learning model is a result of executing a machine learning algorithm on acquired data. It’s critical to select a model that is appropriate for the work at hand. Over time, scientists and engineers created many models for diverse tasks such as speech recognition, picture recognition, prediction, and so on.

Aside from that, you must determine if your model is best suited for numerical or categorical data and pick appropriately.

4. Model Training

The most critical phase in machine learning is training. During training, you feed prepared data to your machine learning model, which looks for patterns and makes predictions. As a consequence, the model learns from the data to complete the goal assigned. The model improves in predicting over time as it is trained.

5. Model Evaluation

After training your model, you must evaluate its performance. This is accomplished by assessing the model’s performance with previously unknown data. The unseen data utilized is the testing set that you previously divided our data into. If testing is done on the same data that was used for training, you will not receive an accurate measure since the model is already familiar with the data and sees the same patterns in it as it did before. This will provide you with a disproportionately high level of precision.

When applied to testing data, you obtain an accurate estimate of how your model will perform and how fast it will run.

6. Parameter Tuning

After you’ve constructed and tested your model, consider whether its accuracy may be enhanced in any way. This is accomplished by fine-tuning the parameters in your model. Parameters are the variables in the model that are normally determined by the programmer. The accuracy will be highest at a certain value of your parameter. Finding these settings is referred to as parameter tuning.

7. Model Conversion

Now that we have refined our model, it’s time to deploy it on devices where it may be used.

Model conversion is a necessary step when designing models for usage in edge devices such as mobile phones or IoT devices.

8. Model Deployment

The final step in all of the indicated phases is to deploy our final trained model. Integrating our model into a larger ecosystem of applications or tools, or just creating an interactive web interface around our model is a necessary step in model deployment.

9. Model Production Monitoring

Nevertheless, the ML workflow process doesn’t end at model deployment. After a successful model deployment, we need to monitor its production. We need this stage to assess the model performance in the production environment.

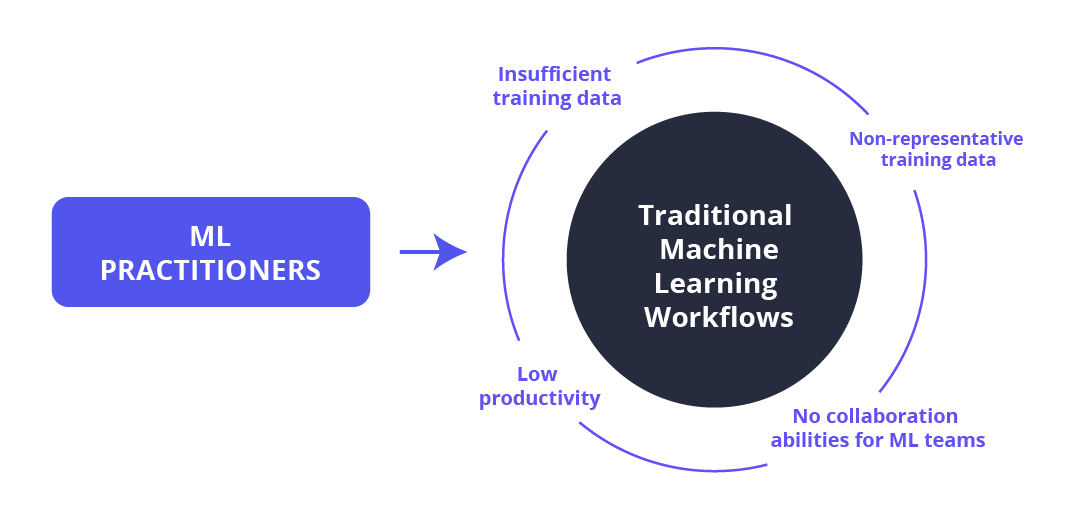

Challenges of Traditional Machine Learning Workflows

ML practitioners usually face various challenges associated with traditional machine learning workflows. Here are some of the most common ones:

- Insufficient training data

- Non-representative training data

- Low productivity

- No collaboration abilities for ML teams

The Fix: Machine Learning Tools

Fortunately, machine learning tools are there to fix the challenges of traditional ML workflows. These tools are specifically developed to help you avoid those challenges.

What Are Machine Learning Tools?

Machine Learning tools are software packages that aid developers in building, training, testing, and deploying machine learning models better.

How Can Machine Learning Tools Help?

To mention a few, ML tools may assist in:

- Predictive analysis

- Identification of dependencies

- Intelligent and data-driven forecasting

- Possible risks

- Monitoring of the critical path

- Impact analysis

They also contribute to better debates by providing data and facts, as well as enhancing cooperation and communication.

What ML Tools Should You Use?

Some of the most popular ML tools you should use include:

- Comet

- Scikit-Learn

- Knime

- Tensorflow

- Weka

- Pytorch

- Rapid Miner

- Google Cloud AutoML

Top ML Tools for Data Preparation

Data Preparation is an essential first step in any machine learning experimentation. Here are our top 3 most recommended data preparations tools:

1. Microsoft Power BI

An industry staple, Power BI is a powerful analytical, data wrangling, and visualization application. By offering drag and drop features for data transformation, this robust ETL tool gives machine learning teams the flexibility to perform analytics and data engineering.

2. Tableau Desktop

Tableau Desktop aids in the creation of data samples and the classification of the data according to various requirements. It assists in combining and integrating data from diverse data sources so that it may be saved in the Tableau Engine in a single format.

3. Alteryx APA Platform

This platform includes extraordinary features for data preparation, exploration, and profiling. With its strong data preparation and model handling capabilities, teams can easily organize data, exchange data reports, and utilize data to transform their MLOps.

4. Kangas

Kangas is a tool for exploring, analyzing, and visualizing large-scale multimedia data. It provides a straightforward Python API for logging large tables of data, along with an intuitive visual interface for performing complex queries against your dataset. It also has integrated computer vision support where you can visualize and filter bounding boxes, labels, and metadata without any extra setup.

Top ML Tools for Model Training

The top 3 model training tools in the ML industry are listed below. You can use them to assess if your needs match the characteristics given by the tool.

1. TensorFlow

With TensorFlow, developers can gain full control and train models from scratch. It also offers prebuilt models that you can use for simpler solutions. One of the most appealing features TensorFlow offers is Dataflow charts, which are particularly useful when developing complicated models.

2. PyTorch

PyTorch offers two notable features: tensor computation with GPU acceleration and neural networks built on a tape-based auto diff mechanism. PyTorch also supports multiple ML libraries and tools that may be used to support a range of solutions.

3. Scikit-learn

Scikit-learn is one of the best open-source machine learning frameworks for beginners. It includes high-level wrappers that allow users to experiment with various techniques and study a wide range of classification, clustering, and regression models.

Top ML Tools for Experiment Tracking

The top three experiment tracking software platforms in the ML industry are listed below. You can use them to track and manage your ML workflows.

1. Comet

Comet is an ML platform that assists data scientists in tracking, comparing, explaining, and optimizing experiments and models throughout the model’s lifespan, from training to production. Data scientists can register datasets, code modifications, experimentation histories, and models for experiment tracking.

2. Weight & Biases

Weight & Biases is an ML platform that allows for the monitoring of experiments, the versioning of datasets, and the maintenance of models. The experiment tracking section’s major goal is to assist Data Scientists in recording every step of the model training process, visualizing models, and comparing experiments.

3. MLflow

MLflow is an open-source platform that aids in the management of the whole machine learning lifecycle. Experimentation is included, but so are model storage, repeatability, and deployment. Tracking, Model Registry, Projects, and Models are each represented by a single MLflow component.

Top ML Tools for Model Monitoring

The top three experiment tracking software platforms in the ML industry are listed below. You can use them to track and manage your ML workflows.

1. Anodot

Anodot is an AI monitoring tool that automatically interprets your data. It can track numerous things at once, including customer experience, partners, revenue, and Telco networking. The program is designed from the ground up to read, analyze, and correlate data to improve your company’s performance.

2. Fiddler

Fiddler has a nice, user-friendly design that is simple to use and understand. You can use Fiddler to debug predictions, explain them, examine model behavior, manage datasets, and so forth.

3. Google Cloud AI Platform

Google Cloud AI Platform is ideal for those seeking a complete and dependable experience. Google blends its autoML, MLOps, and AI Platform to provide a complete experience. It provides both code-based and no-code-based tools to make machine learning easier. The integrated platform is excellent since it assesses user ability level and provides advanced model optimization as well as the point-and-click data science AutoML.

4. Comet

Comet monitors the performance of models in production by tracking data drift and accuracy or error metrics. Its purpose-built model monitoring feature allows you to track and visualize model performance at any scale. You can report on metrics observed in production more quickly and accurately by using data gathered during training as a benchmark. Thanks to its custom features and flexibility, it is an excellent tool for enterprise teams and solo practitioners.

Try Comet’s Machine Learning Tools Today

Comet is a comprehensive ML tool that provides necessary insights and data to build better and more accurate AI models while improving productivity, collaboration, and visibility across teams.

Here’s how to get started:

- Create your FREE Comet account.

- Start integrating our ML tools into your workflow.

- Check out the documents.

- Explore with a sample project.

Frequently Asked Questions (FAQs)

What tools do you use for machine learning?

Some of the most popular ML tools you should use are Scikit-Learn, Knime, Tensorflow, Weka, Pytorch, Rapid Miner, and Google Cloud AutoML.

What is a good testing tool for machine learning products?

4 best testing tools for machine learning products are:

- RPA (robotic process automation)

- AT (autonomous testing)

- MBTA (Model-based test automation)

- NLP (natural language processing)

Do AI/Machine Learning tools help in sales? How does it impact businesses in general?

Artificial intelligence (AI) is assisting businesses in increasing lead volume, improving close rate, and boosting overall sales performance. This is because ML can automate and augment much of the sales process. As a result, salespeople can focus on what really matters: closing the sale.

What are the best tools for 3D deep learning?

PyTorch, Caffe, TensorFlow, and Keras are the best tools for 3D deep learning.

What are the best data annotation tools for machine learning?

Best data annotation tools for ML are ClickUp, Prodigy, Annotate, Filestage, PDF Annotator, Ink2Go.

What machine learning frameworks and tools do Google use?

Eigen and TensorFlow are the two of the tools that are known that Google uses.

What are good data set tagging tools for machine learning?

The list of good set tagging tools for ML are Amazon SageMaker Ground Truth, Label Studio, Sloth, Tagtog, Labelbox, Dataturk, and LightTag.

What are the best tools for data analysis in Python?

The best tools for data analysis in Python are SciPy, Pandas, Keras, PyTorch, BeautifulSoup, Matplotlib, Scrapy, and SciKit-Learn.

What are great feature engineering tools for machine learning?

AutoFeat is one of the great engineering tools for ML. It automates feature selection and feature synthesis, and fits a linear ML model.

Learn More

Wondering how to implement MLOps and experiment management best practices to increase the efficiency of your ML team? Read the comprehensive MLOps guide to learn more and boost your ML project performance.