Join us as we explore

GenAI Engineering: One Line at a Time

At Comet’s Annual Convergence Conference

Virtual Event | May 13-14, 2025

+ Networking Reception, San Francisco

2 Days of content

25+ Speakers

3,500+ Attendees online

As the industry transitions from traditional machine learning to generative AI, new challenges unique to LLMs are emerging. Convergence 2025 provides a platform to explore these shifts, with sessions on advanced LLM evaluation techniques, the potential of agentic AI, and the responsible use of generative AI. Join us to dive into the complexities of building and deploying LLM-based applications, and connect with others shaping the future of this rapidly evolving field.

About Convergence

The fourth edition of Comet’s Convergence Conference, on May 13-14, 2025, will explore the theme GenAI Engineering: One Line at a Time. This two-day virtual event features over 25 expert-led sessions, including in-depth talks, technical panels, and interactive workshops. Connect with our global community at an in-person networking reception in San Francisco. Engage with leading ML developers, data scientists, and industry experts as we address the latest developments in LLM technology and their impact on the wider AI field. Be at the forefront of shaping an ethical and advanced AI landscape.

Two Days x Two Talk Tracks

We’re excited to offer two different tracks for Convergence 2025! Choose the track that best fits your interests and experience, or mix and match sessions from each!

Track 1: GenAI Foundations

For those learning how to build reliable GenAI systems, one line at a time.

Learn the fundamentals of building GenAI systems—from architecture and optimization to debugging and evaluation. This track dives into the mechanics behind reliable LLM-powered apps, with practical, technical talks on RAG, evals, agents, and more.

Track 2: GenAI in Practice

For those looking to go beyond the fundamentals and into the field.

Explore real-world GenAI applications and learn from the engineers and leaders deploying them as they share how they scale infrastructure, address safety and evaluation challenges, and navigate edge cases and changing regulations.

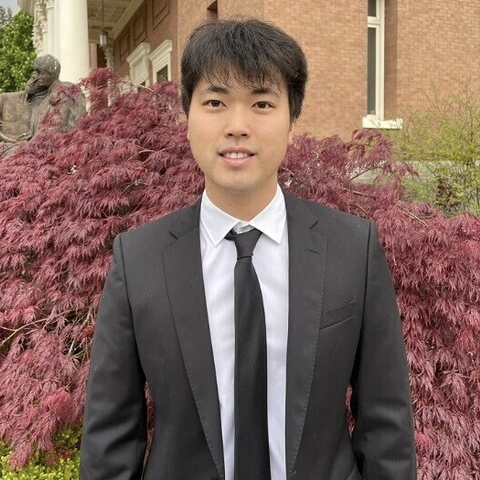


Schedule

Day 1 | Tuesday, May 13

12:00PM ET

INTRODUCTION

12:05PM ET

Escaping Demo Hell: Shipping Responsible AI from Prototype to Production with Eval-Driven Development

Track 1: GenAI Foundations

Albert Lie – Co-Founder at Forward Labs

12:05PM ET

Evaluation-Driven Development: Building Reliable AI Systems

Track 2: GenAI in Practice

Hugo Bowne-Anderson – Data Science Educator and Vanishing Gradients Host

12:35PM ET

Crafting Effective Agents – From Design to Observability

Track 1: GenAI Foundations

Tony Kipkemboi – Senior Developer Advocate at CrewAI

12:35PM ET

Practical Approaches to Building Safer AI Systems

Track 2: GenAI in Practice

Allegra Guinan – Co-Founder & CTO at Lumiera

1:05PM ET

AI Coding Agents and How to Code Them

Track 1: GenAI Foundations

Alex Shershebnev – ML/DevOps Lead at Zencoder

1:05PM ET

Simpler, Faster, Better: Challenging GenAI Implementation Assumptions

Track 2: GenAI in Practice

Carolyn Olsen – VP of Data Science at Clearcover Insurance

1:35PM ET

Scaling Intelligence: Engineering Challenges Behind Vector Databases in the Age of AI

Track 1: GenAI Foundations

Parker Duckworth – Director of Database Engineering at Weaviate

1:35PM ET

You Don’t Train for a Marathon in a Lab

Track 2: GenAI in Practice

Sawan Ruparel – Vice President of Engineering at BCGX

2:05PM ET

15 MIN BREAK

2:20PM ET

A Panel Discussion: The Rise of AI Agents: From Demos to Deployment

Gideon Mendels – CEO at Comet

Tharun Tej Tammineni – Senior Partner Engineer at Meta

Jeremy Mumford – Lead AI Engineer at Pattern

João Moura – CEO at CrewAI

3:05PM ET

From Hallucination to Accuracy: Evaluating Context Retrieval in RAG Systems

Track 1: GenAI Foundations

Jasleen Singh – Senior Principal Software Engineer at Dell

3:05PM ET

Uber’s Oncall Copilot Accuracy Journey

Track 2: GenAI in Practice

Paarth Chothani – Staff Software Engineer at Uber

Xandra Zhu – Machine Learning Engineer at Uber

Jonathan Li – Software Engineer at Uber

3:35PM ET

15 MIN BREAK

3:50PM ET

Smaller, Smarter, Sustainable: Optimizing LLMs by Leveraging White Box Knowledge Distillation Algorithms

Track 1: GenAI Foundations

Ram Ganesh – Senior Data Scientist at Mastercard

3:50PM ET

AI-Powered Payment Gateways for Green Finance: Using LLM Agents to Drive Sustainable Transactions

Track 2: GenAI in Practice

Nikhil Kassetty – Senior Software Engineer – AI and Fintech Expert at Intuit

4:20PM ET

Optimizing LLM Performance: Scaling Strategies for Efficient Model Deployment

Track 1: GenAI Foundations

Rajarshi Tarafdar – Senior Software Engineer at JP Morgan Chase

4:20PM ET

Optimizing Large Language Models: Techniques for Efficiency and Performance

Track 2: GenAI in Practice

Kailash Thiyagarajan – Senior Machine Learning Engineer at Apple

4:50PM ET

DAY 1 WRAP UP

Day 2 | Wednesday, May 14

12:00PM ET

INTRODUCTION

12:00PM ET

Workshop | LLM & RAG Evaluation Playbook for Production Apps

Paul Iusztin – Senior AI Engineer / Founder at Decoding ML

1:00PM ET

15 MIN BREAK

1:15PM ET

Workshop | Precision Prompting: Tuning LLM Performance Through Algorithmic Optimization

Vincent Koc – Lead AI Research Engineer at Comet

1:45PM ET

Workshop | Smarter Prompting, Faster: Introducing Opik’s Agent Optimizers

Doug Blank, Ph.D. – Head of Research at Comet

2:45PM ET

15 MIN BREAK

3:00PM ET

Workshop | Navigating Uncharted Metrics: Reference-Free LLM Evaluation with G-Eval

Leonardo Gonzalez – VP of AI Center of Excellence at Trilogy

4:25PM ET

DAY 2 WRAP UP


Some Topics Covered

Federated Machine Learning

AI Governance

Diffusion Models

Scalability and LLMOps

LLM Security

Vector Databases/Search

LLMs in Production

Vision Transformers

Multilingual LLMs

Model Pruning &

Why Attend Convergence?

Attending Convergence is the perfect opportunity to be at the forefront of GenAI Engineering. For ML professionals, this annual conference offers a deep dive into the technical intricacies of LLMs, equipping you with the latest tools and methods in prompt engineering and LLM evaluation. Software engineers entering the field will find accessible sessions that provide the foundational knowledge needed to start building and deploying generative AI applications effectively. It’s an essential platform for those looking to deepen their expertise, keep pace with rapid advancements in AI, and contribute to the ethical development of new AI technologies.

Register for Convergence 2025

Registration is now closed