Web Scraping and Sentiment Analysis Using Twint Library

Introduction

Twitter is a social networking and news website where users exchange short messages known as tweets.

The ease with which Twitter may be scanned is one of its biggest selling points. Hundreds of millions of intriguing individuals can be followed, and their material can be read quickly, which is convenient in today’s attention-deficit society.

Web scraping is a widely-recognized process of extracting data from a website (like Twitter). After this website data is gathered, it is then exported into a format that is more user-friendly for analysis and modeling. Python libraries such as Beautiful Soup, Selenium, and many others can assist with the web scraping process. However, one disadvantage of some of these libraries is that many require familiarity with HTML, which is not necessarily a skill you’d find in the average data analyst’s toolbox.

To access Twitter data through official channels, you need a Twitter Developer account. This account allows you to create and manage your own projects and apps built on data from the site, but it comes with some serious limitations, which are outlined in detail in the Twitter FAQ section.

In this article, we will explore how to scrape Twitter data:

- Without using the Twitter API

- Without having to create a Twitter Developer account

- Without domain knowledge of web development and HTML

Twint

Twint is an advanced Twitter-scraping tool written in Python that allows you to scrape Tweets from Twitter profiles without using Twitter’s API or creating a Twitter Developer account.

Twint makes use of Twitter’s search operators, which allow you to scrape Tweets from specific individuals, scrape Tweets referring to specific themes, hashtags, and trends, and pick out Tweets that may contain sensitive information such as e-mail addresses and phone numbers.

Twint also creates unique Twitter queries that allow you to scrape a Twitter user’s followers, Tweets they’ve liked, and who they follow, all without having to utilize any login, API, Selenium, or browser emulation.
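To make the idea of search operators concrete, here is a minimal sketch that composes a Twitter search string from a keyword plus the `from:`, `since:`, and `until:` operators. The helper name `build_search_query` and its parameters are hypothetical and are not part of Twint; the sketch only illustrates the kind of query string such operators produce.

```python
from datetime import date
from typing import Optional


def build_search_query(
    keyword: str,
    from_user: Optional[str] = None,
    since: Optional[date] = None,
    until: Optional[date] = None,
) -> str:
    """Compose a search string from a keyword and optional search operators."""
    parts = [keyword]
    if from_user:
        # Accept handles written with or without the leading '@'
        parts.append(f"from:{from_user.lstrip('@')}")
    if since:
        parts.append(f"since:{since.isoformat()}")
    if until:
        parts.append(f"until:{until.isoformat()}")
    return " ".join(parts)


query = build_search_query(
    "#StandStrong", since=date(2022, 5, 1), until=date(2022, 6, 29)
)
print(query)  # "#StandStrong since:2022-05-01 until:2022-06-29"
```

A string like this is what you would type into Twitter’s own search box; Twint exposes the same filters through its configuration options, so you rarely need to build the string by hand.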

Twint Requirements

- Python 3.6

- aiohttp

- aiodns

- beautifulsoup4

- cchardet

- elasticsearch

- pysocks

- pandas (≥0.23.0)

- aiohttp_socks

- schedule

- geopy

- fake-useragent

- py-googletransx

Benefits of Using Twint

- The Twitter API only lets you retrieve a user’s most recent 3,200 Tweets. Twint, on the other hand, can theoretically retrieve an unlimited number of Tweets.

- Twint provides various formats into which you can store your scraped data, including CSV, JSON, SQLite database, and Elasticsearch database.

- Twint has a quick initial setup.

- Twint can be used anonymously and without a Twitter account.

- There are no rate restrictions with Twint.


Installation

Twint can be installed with pip or directly from Git. Installing from Git is recommended because it tends to cause fewer issues.

Git Installation:

git clone https://github.com/twintproject/twint.git

cd twint

pip3 install . -r requirements.txt

Using Pip:

pip3 install twint

— or —

pip3 install --user --upgrade -e git+https://github.com/twintproject/twint.git@origin/master#egg=twint

Pipenv:

pipenv install -e git+https://github.com/twintproject/twint.git#egg=twint

In this article we will be accessing Twint in two places:

- Command line: cmd or Powershell

- Python IDE: We will be using a Jupyter notebook, but you can use the IDE of your choice (preferably a Python-based one).

From your command line (cmd) you will need to navigate to the twint directory:

C:\Users\>cd twint

From the command line, we will search for the hashtag #StandStrong and save the results, first as a JSON file and then as a CSV file:

C:\Users\twint>twint -s "#StandStrong" -o "C:/Users/Documents/Python Scripts/standstrong.json" --json

C:\Users\twint>twint -s "#StandStrong" -o "C:/Users/standstrong.csv" --csv --since 2022-06-01

The second command scrapes Tweets posted since 2022-06-01 and saves them as a CSV file.
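Once a JSON file has been written, you will want to load it back into Python. The sketch below assumes the file holds one JSON object per line, which is worth confirming by opening your own output file first. The two records and their field names (`date`, `username`, `tweet`) are made up for illustration, and `read_json_lines` is a hypothetical helper.

```python
import io
import json

# Two made-up records standing in for a scraped file.
# A real file would be opened with open(path, encoding="utf-8") instead.
sample_file = io.StringIO(
    '{"date": "2022-06-01", "username": "user_a", "tweet": "Loving #StandStrong"}\n'
    '{"date": "2022-06-02", "username": "user_b", "tweet": "#StandStrong on repeat"}\n'
)


def read_json_lines(handle):
    """Parse one JSON object per non-empty line into a list of dicts."""
    return [json.loads(line) for line in handle if line.strip()]


records = read_json_lines(sample_file)
print(len(records), records[0]["username"])
```

If you prefer pandas, `pd.read_json(path, lines=True)` reads the same line-delimited layout directly into a DataFrame.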

Code Walkthrough and Project Building

David Adeleke (aka ‘Davido’) announced the release of his new single “STAND STRONG” FT THE SAMPLES on May 13th, 2022. Around the world, there have been some very conflicting feelings about this release, as it shows a very different aspect of Nigeria’s multi-talented artists.

For this project, we are going to scrape data from Twitter that relates to this new song and apply sentiment analysis to it. The keyword #StandStrong will be our base search term.

The full code of this project can be found in my Github Repository. Please feel free to fork the repo and use it to build any of your future projects!

We will start this project by importing all the libraries needed for scraping Tweets with Twint, performing sentiment analysis, and building visualizations.

import pandas as pd
import numpy as np
import re
import matplotlib.pyplot as plt
plt.style.use('fivethirtyeight')

import twint
import nest_asyncio
nest_asyncio.apply()  # lets Twint's asyncio event loop run inside Jupyter's already-running loop

import nltk
nltk.download('punkt')
nltk.download('wordnet')
from nltk import sent_tokenize, word_tokenize
from nltk.stem.snowball import SnowballStemmer
from nltk.stem.wordnet import WordNetLemmatizer
from nltk.corpus import stopwords

from textblob import TextBlob
from wordcloud import WordCloud, STOPWORDS

Search for Tweet to Scrape:

For the code below we are going to be scraping for the keyword #StandStrong :

# Configure the search
s = twint.Config()
s.Search = "#StandStrong"
s.Lang = "en"
# s.Limit = 1000  # optional
s.Since = "2022-05-01"
s.Until = "2022-06-29"

# Store the results in a CSV file
s.Store_csv = True
s.Output = "C:/Users/Twint_vv3.csv"

twint.run.Search(s)

The parameters used in the above code are defined as follows:

- Lang: Specify the language of the Tweets you want to scrape.

- Since: Collect Tweets posted since this point in time, given in %Y-%m-%d %H:%M:%S format (the time part is optional).

- Until: Collect Tweets posted up until this point in time.

- Store_csv: Save the scraped Tweet data in CSV format.
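Because the date parameters are plain strings, a typo can go unnoticed until the scrape fails or returns nothing. The sketch below validates a date window before it is handed to the scraper. The helpers `parse_bound` and `check_window` are hypothetical, and the two accepted formats are an assumption based on the parameter description above.

```python
from datetime import datetime

# Assumed formats: a date followed by a time, or a bare date.
ACCEPTED_FORMATS = ("%Y-%m-%d %H:%M:%S", "%Y-%m-%d")


def parse_bound(value: str) -> datetime:
    """Return a datetime for a date string, or raise ValueError if it is malformed."""
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date string: {value!r}")


def check_window(since: str, until: str) -> None:
    """Raise ValueError unless 'since' is strictly earlier than 'until'."""
    if parse_bound(since) >= parse_bound(until):
        raise ValueError("Since must be earlier than Until")


check_window("2022-05-01", "2022-06-29")  # a valid window passes silently

try:
    # Typographic en dashes (U+2013), as often pasted from a web page
    parse_bound("2022\u201305\u201301")
except ValueError as err:
    print(err)
```

The last call shows a common pitfall: dates copied from a formatted article may contain en dashes instead of ASCII hyphens, and they will not parse.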

Data Cleaning

We will need to do a lot of cleaning to remove unnecessary text (and other characters) from the data before we can perform sentiment analysis on it. The practice of correcting or deleting incorrect, corrupted, improperly formatted, duplicate, or incomplete data from a dataset is known as data cleaning.

stand_df = pd.read_csv("Twint_vv3.csv")

Tweet = stand_df.filter(['tweet'])

Tweet.head(10)

Next, a function is created to remove some characters, emojis, text, and hyperlinks that will not be needed when performing sentiment analysis on the Tweet column data:

# Create a function to clean the tweets
def cleanTxt(text):
    # Remove @mentions
    text = re.sub(r'@[A-Za-z0-9]+', '', text)
    # Remove the '#' symbol
    text = re.sub(r'#', '', text)
    # Remove the RT (retweet) marker
    text = re.sub(r'RT[\s]+', '', text)
    # Remove hyperlinks
    text = re.sub(r'https?:\/\/\S+', '', text)
    return text

# Clean the text
Tweet['tweet'] = Tweet['tweet'].apply(cleanTxt)

# Show the clean text
Tweet

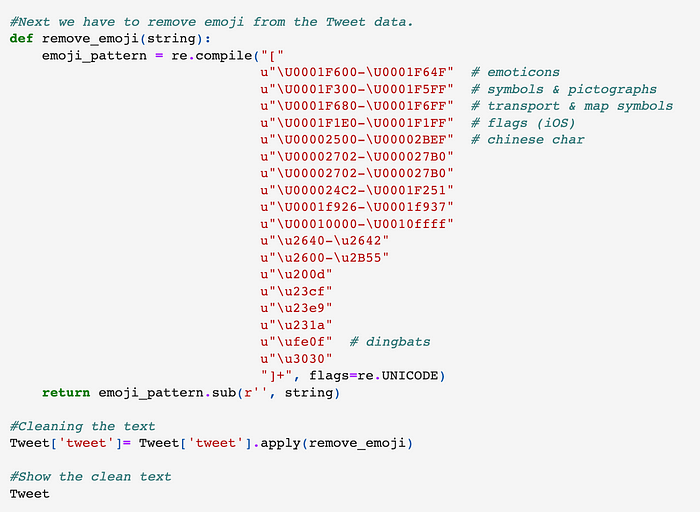

A second function is then created to remove emojis and other non-text Unicode characters from the tweet column.
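One possible way to write such a function is sketched below; it is not necessarily the version used in the original project. The name `remove_emoji` is hypothetical, and the Unicode ranges are an illustrative selection rather than an exhaustive list of emoji code points.

```python
import re

# Illustrative selection of emoji-related Unicode ranges (not exhaustive).
EMOJI_PATTERN = re.compile(
    "["
    "\U0001F600-\U0001F64F"  # emoticons
    "\U0001F300-\U0001F5FF"  # symbols and pictographs
    "\U0001F680-\U0001F6FF"  # transport and map symbols
    "\U0001F1E0-\U0001F1FF"  # regional indicator (flag) letters
    "\U00002700-\U000027BF"  # dingbats
    "\U0001F900-\U0001F9FF"  # supplemental symbols and pictographs
    "]+"
)


def remove_emoji(text: str) -> str:
    """Delete every character that falls inside the ranges above."""
    return EMOJI_PATTERN.sub("", text)


# U+1F525 (fire) and U+1F64C (raised hands) are both removed
print(remove_emoji("Stand Strong \U0001F525\U0001F64C is out"))
```

It could be applied to the data in the same way as the first cleaning function, for example with `Tweet['tweet'].apply(remove_emoji)`.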

Get Subjectivity and Polarity of Tweet

The process of sentiment analysis boils down to determining the attitude or the emotion of the writer (e.g., ‘positive’, ‘negative’, or ‘neutral’).

- Polarity: how positive or negative an opinion is. TextBlob reports it as a number from -1.0 (most negative) to 1.0 (most positive), with 0 meaning neutral. Text expressing a strong good feeling, such as admiration, trust, or love, scores close to 1.0.

- Subjectivity: the degree to which a statement reflects personal opinion, feeling, or experience rather than fact. TextBlob reports it as a number from 0.0 (very objective) to 1.0 (very subjective).
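To build intuition for these two scores, here is a toy lexicon-based scorer. It is a simplified illustration only and not TextBlob’s implementation: the word list, the scores in `TOY_LEXICON`, and the function `toy_sentiment` are all invented for this sketch.

```python
# Hypothetical lexicon: word -> (polarity in [-1, 1], subjectivity in [0, 1])
TOY_LEXICON = {
    "love": (0.5, 0.6),
    "great": (0.8, 0.75),
    "boring": (-1.0, 1.0),
    "bad": (-0.7, 0.67),
}


def toy_sentiment(text):
    """Average the scores of the known words; unknown words are ignored."""
    scores = [TOY_LEXICON[w] for w in text.lower().split() if w in TOY_LEXICON]
    if not scores:
        # No opinion words found: treat the text as neutral and objective
        return 0.0, 0.0
    polarity = sum(p for p, _ in scores) / len(scores)
    subjectivity = sum(s for _, s in scores) / len(scores)
    return polarity, subjectivity


print(toy_sentiment("great song"))              # one positive word
print(toy_sentiment("love it but boring"))      # mixed opinion
print(toy_sentiment("the song dropped today"))  # no opinion words
```

Note how a text with no opinion words gets a polarity of exactly 0. Short, factual Tweets often behave the same way in real sentiment tools, which is worth keeping in mind when reading the results later on.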

# Create a function to determine the subjectivity
def getSubjectivity(text):
    return TextBlob(text).sentiment.subjectivity

# Create a function to determine the polarity
def getPolarity(text):
    return TextBlob(text).sentiment.polarity

# Create two new columns and add them to the Tweet dataframe
Tweet['Subjectivity'] = Tweet['tweet'].apply(getSubjectivity)
Tweet['Polarity'] = Tweet['tweet'].apply(getPolarity)

# Display data
Tweet

Grouping Polarity Score of Our Data

At this stage we will create a function to help group our data into negative, neutral and positive comments.

#Group the range of polarity to different categories
def getInsight(score):
    if score < 0:
        return "Negative"
    elif score == 0:
        return "Neutral"
    else:
        return "Positive"

Tweet["Insight"] = Tweet["Polarity"].apply(getInsight)

Tweet.head(50)
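As a quick sanity check of this grouping rule, the sketch below applies the same thresholds to a handful of made-up polarity scores and tallies the labels. The function name `label_polarity` and the sample values are hypothetical.

```python
from collections import Counter


def label_polarity(score: float) -> str:
    """Map a polarity score to a category using the same thresholds as above."""
    if score < 0:
        return "Negative"
    if score == 0:
        return "Neutral"
    return "Positive"


sample_scores = [-0.4, 0.0, 0.0, 0.25, 0.8, 0.0]  # made-up polarity values
labels = [label_polarity(score) for score in sample_scores]
print(Counter(labels))
```

Because only a score of exactly 0 counts as neutral, every Tweet in which the sentiment tool finds no opinion words lands in the Neutral group, while even a faintly positive or negative score is assigned to one of the other two groups.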

Data Visualization and Insight

#Get value counts

Tweet["Insight"].value_counts()

#Plot the value counts of each sentiment category
Tweet["Insight"].value_counts().plot(kind="barh", color="gray")
plt.title("StandStrong Sentiment Score")
plt.xlabel("Values")
plt.ylabel("Sentiment")
plt.show()

From the bar chart above, you may notice that Tweets about #StandStrong were far more often neutral than positive or negative.

#Plot the 20 users who tweeted the keyword most often
stand_df["username"].value_counts()[:20].plot(kind="barh", color="gray")
plt.title("Top Fans")
plt.xlabel("Values")
plt.ylabel("Users")
plt.show()

Above we plotted the top 20 fans of the song, based on the number of times each user tweeted the keyword.

import seaborn as sns

#Change the datatype from datetime to date only
stand_df["date"] = pd.to_datetime(stand_df["date"]).dt.date

sns.countplot(x=stand_df["date"])
plt.xticks(rotation=45)
plt.show()

Plotted above is the frequency of people tweeting the keyword per day.

Before we create our final visualization, let’s examine our list of stopwords:

stopwords = STOPWORDS

print(stopwords)

Word Cloud

A word cloud is a grouping of words displayed in various sizes to depict how frequently each term appears in a document or corpus. The more often a word appears, the larger and bolder it is drawn. You will notice that the word StandStrong appears largest in our word cloud below because it is the most used word, followed by Davido, the artist who sang the song.
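The sizing logic behind a word cloud boils down to counting words after stopwords are removed. The sketch below shows that counting step on three made-up Tweets; the tiny stopword set `STOP` is illustrative only and is much smaller than the list shipped with the wordcloud package.

```python
import re
from collections import Counter

# Tiny illustrative stopword set
STOP = {"the", "is", "a", "by", "on", "and", "to", "of"}

tweets = [  # made-up examples
    "StandStrong by Davido is on repeat",
    "Davido and the Samples on StandStrong",
    "StandStrong is a moment",
]

# Lowercase everything, split into alphabetic words, then drop stopwords
words = re.findall(r"[a-z]+", " ".join(tweets).lower())
counts = Counter(word for word in words if word not in STOP)

print(counts.most_common(2))  # [('standstrong', 3), ('davido', 2)]
```

The words with the highest counts are the ones a word cloud would draw in the largest font.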

#Let's create a wordcloud for a visual representation of the data:
Tweet_Word = ' '.join([twts for twts in stand_df['tweet']])

wc = WordCloud(
    background_color = "black",
    stopwords = stopwords,
    height = 500,
    width = 800,
    random_state = 21,
    max_font_size = 120
)

wc.generate(Tweet_Word)
plt.imshow(wc, interpolation = 'bilinear')
plt.axis('off')
plt.show()

Conclusion

In conclusion, Twint is a fantastic package for creating social media monitoring apps that aren’t restricted by the Twitter API. In this article, we learned how to use Twint to pull data from Twitter. We also performed sentiment analysis to explore people’s opinions of the keyword.

Social Media

You can follow me on social media:

- Twitter: @kiddojazz.

- LinkedIn: Temidayo Omoniyi.

- Github: Kiddojazz.