Train Neural Networks Using a Genetic Algorithm in Python with PyGAD

The genetic algorithm (GA) is a biologically inspired optimization algorithm. It has gained importance in recent years because it is simple yet able to solve complex problems such as travel route optimization, training machine learning algorithms, single- and multi-objective optimization, game playing, and more.

Deep neural networks are inspired by how the biological brain works. A neural network is a universal function approximator, capable of approximating a very wide range of functions, and neural networks are now used to solve some of the most complex problems in machine learning. What’s more, they can work with many types of data (images, audio, video, and text).

Genetic algorithms (GAs) and neural networks (NNs) are alike in that both are biologically inspired techniques. This similarity motivates us to create a hybrid of the two to see whether a GA can train NNs with high accuracy.

This tutorial uses PyGAD, a Python library that supports building and training NNs using a GA. PyGAD offers both classification and regression NNs. Before starting, install PyGAD using pip.

This is the outline:

- Genetic Algorithm Overview

- Build a Population of Neural Networks

- Build the Fitness Function

- Build a Genetic Algorithm

- Train Network using Genetic Algorithm

- Complete Code for Classification Neural Network

- Regression Neural Networks

- About the Author

Genetic Algorithm Overview

This tutorial does not discuss the genetic algorithm in detail. Only a brief overview, enough for the purposes of this tutorial, is provided below. To dive deeper into how GAs work, check out this tutorial.

Assume the problem to be solved using a GA is represented according to the following equation, where Y is the output, X is the input, and a & b are the parameters. Given that X=2 and Y=4, how is a GA used to find the values of the parameters a and b to solve the problem?

Y = a * X + b, X=2 and Y=4

The next figure lists the steps in the pipeline of the genetic algorithm. The first step is to create an initial population of solutions. According to the above equation, the solution consists of the 2 parameters: a and b. By creating an initial population of solutions, we select different values for the 2 parameters a and b. Here are 5 random solutions:

| Solution | a | b |
|---|---|---|
| 1 | -4 | 1 |
| 2 | 8 | 4 |
| 3 | 0.3 | 8 |
| 4 | 2 | 3 |
| 5 | 4 | 7 |

After the initial population is created, a fitness function is used to calculate a fitness value for each solution. The fitness function changes based on the problem being solved. For the equation Y = a * X + b, a fitness function can measure how close the prediction is to the target output Y=4 using the following equation. The higher the fitness value, the better the solution.

fitness = 1/|predicted-4|

Before the fitness is calculated, the predicted output is calculated according to the parameters of each solution, using X=2. After that, the fitness value is calculated as 1/|predicted-4|. Here are the predicted outputs and fitness values of all solutions. The highest fitness value is 0.333, and the lowest is 0.0625.

| Solution | a | b | Prediction | Fitness |
|---|---|---|---|---|
| 1 | -4 | 1 | -7 | 0.091 |
| 2 | 8 | 4 | 20 | 0.0625 |
| 3 | 0.3 | 8 | 8.6 | 0.217 |
| 4 | 2 | 3 | 7 | 0.333 |
| 5 | 4 | 7 | 15 | 0.091 |

The solutions with the highest fitness values are selected as parents. Here is the order of the 5 solutions according to their fitness value, in descending order:

Solution 4

Solution 3

Solution 1

Solution 5

Solution 2
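The fitness calculation and ranking described above can be reproduced with a few lines of NumPy. This is only a sketch of the arithmetic for the equation Y = a * X + b with X=2 and Y=4. The variable names are illustrative and are not part of PyGAD.

```python
import numpy as np

# The 5 candidate solutions from the text, one row per solution: [a, b].
population = np.array([
    [-4.0, 1.0],
    [8.0, 4.0],
    [0.3, 8.0],
    [2.0, 3.0],
    [4.0, 7.0],
])

X, Y = 2.0, 4.0

# Predicted output of every solution: a * X + b.
predictions = population[:, 0] * X + population[:, 1]

# Fitness = 1 / |predicted - target|; a higher value means a better solution.
fitness = 1.0 / np.abs(predictions - Y)

# Rank the solutions from best to worst (1-based solution numbers).
# A stable sort keeps the original order when two solutions tie.
ranking = np.argsort(-fitness, kind="stable") + 1

for number, (p, f) in enumerate(zip(predictions, fitness), start=1):
    print(f"solution {number}: prediction={p:.1f}, fitness={f:.4f}")
print("ranking:", ranking.tolist())
```

Note that this fitness function divides by zero if a solution predicts exactly 4, so a practical version would usually add a tiny constant to the denominator.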

By selecting some of the top solutions as parents, the GA applies crossover and mutation operations between them to create new solutions.
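As a rough illustration of these two operations, the sketch below applies single-point crossover to two of the parents from the example and then mutates one gene of the child. The helper names `single_point_crossover` and `mutate` are made up for this example, and the exact operators PyGAD applies depend on how it is configured.

```python
import numpy as np

rng = np.random.default_rng(seed=0)  # seeded only to make the sketch repeatable

def single_point_crossover(parent_a, parent_b, point):
    """Child takes genes [0, point) from parent_a and the rest from parent_b."""
    return np.concatenate([parent_a[:point], parent_b[point:]])

def mutate(solution, rng, scale=1.0):
    """Return a copy with one randomly chosen gene shifted by a random amount."""
    mutated = solution.copy()
    gene = rng.integers(len(mutated))
    mutated[gene] += rng.uniform(-scale, scale)
    return mutated

parent_a = np.array([2.0, 3.0])  # solution 4: a=2, b=3
parent_b = np.array([0.3, 8.0])  # solution 3: a=0.3, b=8

# The child inherits a from solution 4 and b from solution 3.
child = single_point_crossover(parent_a, parent_b, point=1)
mutated_child = mutate(child, rng)

print("child:", child)
print("mutated child:", mutated_child)
```

Crossover recombines genes that already exist in the population, while mutation introduces new values that no parent had.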

The previous discussion can be summarized into the following 2 points:

- Creating an initial population of solutions.

- Creating a fitness function.

The next section discusses how to build an initial population of neural networks using PyGAD.

Build a Population of Neural Networks

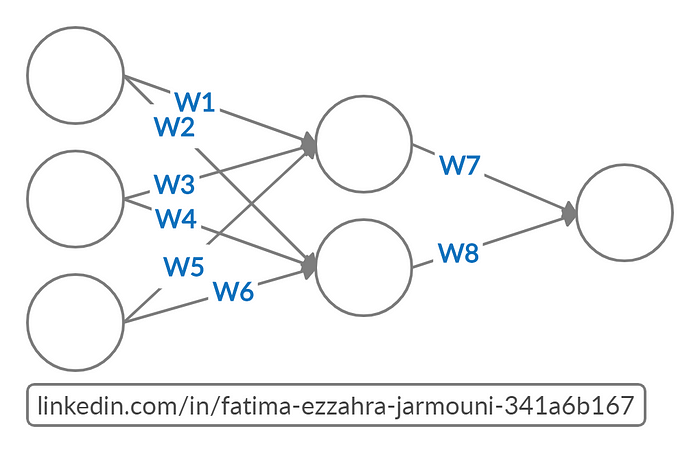

Similar to the 2 parameters a and b in the previously-mentioned equation Y = a * X + b, a neural network has its own parameters (i.e. weights). For example, the network in the next figure has 8 parameters (W1 to W8). Using these parameters, the network can make predictions.

One solution in the genetic algorithm has values for the 8 parameters. Usually, the population consists of several solutions. How, then, do we create a population of neural nets?

The PyGAD library has a module named gann (Genetic Algorithm – Neural Network) that builds an initial population of neural networks using its class named GANN. To create a population of neural networks, just create an instance of this class.

The constructor of the GANN class has the following parameters:

- num_neurons_input: Number of inputs to the network.

- num_neurons_output: Number of outputs (i.e. number of neurons in the output layer).

- num_neurons_hidden_layers: Number of neurons in the hidden layer(s).

- output_activation: The activation function at the output layer.

- hidden_activations: A list of activation functions for the hidden layers.

- num_solutions=None: Number of solutions in the population. It must be at least 2.

The first example discussed centers on building a classification neural network for the XOR (Exclusive OR logic gate) problem. The inputs and outputs of this problem are prepared as NumPy arrays, as shown below:

import numpy

data_inputs = numpy.array([[1, 1],

[1, 0],

[0, 1],

[0, 0]])

data_outputs = numpy.array([0,

1,

1,

0])

The XOR problem has 4 samples, where each sample has 2 inputs and 1 output. As a result, the num_neurons_input parameter should be assigned a value of 2. The value of the num_neurons_output parameter should be 2 because there are 2 classes.

num_neurons_input = 2

num_neurons_output = 2

The num_neurons_hidden_layers parameter accepts a list representing the number of neurons in the hidden layer. For example, num_neurons_hidden_layers=[5, 2] means there are 2 hidden layers, where the first layer has 5 neurons and the second layer has 2 neurons. For the XOR problem, a single hidden layer with 2 neurons is enough.

num_neurons_hidden_layers = [2]
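To see why 2 hidden neurons can be enough, here is a small NumPy sketch of a 2-2-2 network with hand-picked weights that reproduces the XOR outputs. It assumes a ReLU hidden layer, a softmax output layer, and no bias terms. The weights and the `predict` helper are hypothetical and are not what the GA would necessarily find, nor are they PyGAD code.

```python
import numpy as np

def relu(x):
    return np.maximum(0.0, x)

def softmax(x):
    # Subtracting the row maximum keeps the exponentials numerically stable.
    exps = np.exp(x - np.max(x, axis=1, keepdims=True))
    return exps / np.sum(exps, axis=1, keepdims=True)

def predict(inputs, w_hidden, w_output):
    """Forward pass: inputs -> ReLU hidden layer -> softmax output -> class index."""
    hidden = relu(inputs @ w_hidden)
    probabilities = softmax(hidden @ w_output)
    return np.argmax(probabilities, axis=1)

data_inputs = np.array([[1, 1], [1, 0], [0, 1], [0, 0]])
data_outputs = np.array([0, 1, 1, 0])

# Hand-picked weights (8 values in total, 4 per layer).
# Hidden neuron 1 fires when x1 > x2, hidden neuron 2 fires when x2 > x1.
w_hidden = np.array([[1.0, -1.0],
                     [-1.0, 1.0]])
# Any active hidden neuron pushes the score of class 1 up.
w_output = np.array([[0.0, 1.0],
                     [0.0, 1.0]])

predictions = predict(data_inputs, w_hidden, w_output)
print(predictions)  # [0 1 1 0]
```

For the inputs [1, 1] and [0, 0] both class scores are equal, and `argmax` resolves the tie in favor of class 0, which happens to be the correct XOR output.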

The architecture of the network that simulates the XOR gate is shown below.

The gann module supports 3 types of activation functions:

- Sigmoid

- Rectified linear unit (ReLU)

- Softmax: Used at the output layer to make predictions.
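For reference, the 3 functions can be written in NumPy as follows. This is a generic sketch of the standard definitions, not PyGAD's internal implementation.

```python
import numpy as np

def sigmoid(x):
    """Squashes each value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    """Keeps positive values and replaces negative values with 0."""
    return np.maximum(0.0, x)

def softmax(x):
    """Turns each row of scores into probabilities that sum to 1."""
    shifted = x - np.max(x, axis=-1, keepdims=True)  # for numerical stability
    exps = np.exp(shifted)
    return exps / np.sum(exps, axis=-1, keepdims=True)

scores = np.array([[2.0, -1.0],
                   [0.0, 0.0]])

print(sigmoid(scores))
print(relu(scores))
print(softmax(scores))
```

Because softmax outputs sum to 1 per sample, the predicted class is simply the index of the largest output.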

The activation function for the hidden layer’s neurons is set to relu, according to the next line. The hidden_activations parameter accepts a list of the names of each hidden layer’s activation function.

hidden_activations=["relu"]

If there are multiple hidden layers, then the activation function of each layer is listed.

For the output layer, softmax is used.

output_activation = "softmax"

The last parameter to prepare is num_solutions, which accepts the number of solutions in the population. Let’s set it to 5. As a result, there are 5 different value combinations for the parameters in the neural network.

num_solutions = 5

After preparing all the parameters, here is the Python code that creates an instance of the GANN class to build the initial population for networks solving the XOR problem.

import pygad.gann

GANN_instance = pygad.gann.GANN(num_solutions=5,
                                num_neurons_input=2,
                                num_neurons_hidden_layers=[2],
                                num_neurons_output=2,
                                hidden_activations=["relu"],
                                output_activation="softmax")

There is an attribute called population_networks inside the GANN class that returns references to the networks within the population. Here is how the networks’ references are returned.

print(GANN_instance.population_networks)

Result:

[

<pygad.nn.nn.DenseLayer at 0x2725e28b630>,

<pygad.nn.nn.DenseLayer at 0x2725e28b9b0>,

<pygad.nn.nn.DenseLayer at 0x2725e190ac8>,

<pygad.nn.nn.DenseLayer at 0x2725e27eb00>,

<pygad.nn.nn.DenseLayer at 0x2725e27e940>

]

There is also a function called population_as_vectors() in the gann module that returns a list of the solutions’ parameters.

population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)
print(population_vectors)

Here are the parameters of the 5 solutions.

[

array([-0.01812899, -0.02474208, -0.07105532, -0.01654875, -0.04692194, -0.02432995]),

array([-0.05717154, 0.04262176, 0.01157643, 0.09404094, -0.04565558, -0.01055631]),

array([-0.03654666, -0.05663649, -0.01808185, 0.04675663, 0.01162651, 0.02960824]),

array([ 0.03844111, 0.09247692, -0.0251123 , -0.07804498, -0.04800328, 0.09069625]),

array([-0.07784676, -0.09123247, 0.03205176, -0.00280736, 0.03818657, -0.07136233])

]
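The idea behind representing each network as a flat vector can be sketched in plain NumPy: the weight matrices of all layers are flattened and concatenated into one list of genes, and the reverse operation cuts the vector back into matrices. The helpers `matrices_to_vector` and `vector_to_matrices` below are hypothetical names for illustration only, and the sketch assumes a 2-2-2 network without bias terms.

```python
import numpy as np

# Weight-matrix shapes for a 2-input, 2-hidden, 2-output network (no biases).
layer_shapes = [(2, 2), (2, 2)]

def matrices_to_vector(matrices):
    """Flatten every weight matrix and join them into a single 1-D vector."""
    return np.concatenate([m.ravel() for m in matrices])

def vector_to_matrices(vector, shapes):
    """Cut a flat vector back into weight matrices with the given shapes."""
    matrices, start = [], 0
    for rows, cols in shapes:
        size = rows * cols
        matrices.append(vector[start:start + size].reshape(rows, cols))
        start += size
    return matrices

rng = np.random.default_rng(seed=1)
weights = [rng.uniform(-0.1, 0.1, size=shape) for shape in layer_shapes]

vector = matrices_to_vector(weights)
restored = vector_to_matrices(vector, layer_shapes)

print(vector.shape)  # (8,)
```

The GA only ever sees the flat vector, so crossover and mutation work on individual genes without knowing which layer a weight belongs to.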

Now, the population of networks is prepared. The next section discusses building the genetic algorithm.

Build the Genetic Algorithm

The PyGAD library has a module named pygad that builds instances of the genetic algorithm using its GA class. This class accepts more than 20 parameters, but the ones needed in this tutorial are listed below:

- num_generations: Number of generations.

- num_parents_mating: Number of parents selected from the population.

- initial_population: The initial population.

- fitness_func: The fitness function, by which each solution is assessed.

- mutation_percent_genes: Percentage of genes to mutate.

- callback_generation: A function called after each generation.

num_generations is assigned an integer representing the number of iterations/generations within which the solutions are evolved. It’s set to 50 in this example.

num_generations = 50

Given that there are 5 solutions within the population, the num_parents_mating could be set to 3.

num_parents_mating = 3

The initial population was previously returned into the population_vectors variable, which is assigned to the initial_population parameter.

initial_population = population_vectors.copy()

The fitness_func parameter is critical and must be designed carefully. It accepts a user-defined function that returns the fitness value of a single solution. This function must accept 2 parameters:

- solution: The solution to calculate its fitness value.

- sol_idx: Index of the solution within the population.

To measure the classification accuracy of a solution within the population, here’s the implementation of the fitness function. It uses the predict() function from the pygad.nn module to make predictions based on the current solution’s parameters. This function accepts the following parameters:

- last_layer: A reference to the last layer in the neural network.

- data_inputs: The input samples to predict their outputs.

- problem_type: Type of the problem, which can be either "classification" (default) or "regression".

Based on the network predictions returned by the pygad.nn.predict() function, the classification accuracy is calculated and saved into the solution_fitness variable.

def fitness_function(solution, sol_idx):
    global GANN_instance, data_inputs, data_outputs
    # Predict the outputs using the network that corresponds to this solution.
    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                   data_inputs=data_inputs,
                                   problem_type="classification")
    # The fitness is the classification accuracy as a percentage.
    correct_predictions = numpy.where(predictions == data_outputs)[0].size
    solution_fitness = (correct_predictions/data_outputs.size)*100
    return solution_fitness

The above function is assigned to the fitness_func parameter.

fitness_func = fitness_function
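To make the accuracy calculation concrete, the sketch below applies the same 2 lines to a hypothetical set of predictions in which 3 of the 4 XOR samples are classified correctly. The `predictions` array is made up for illustration and does not come from a trained network.

```python
import numpy as np

data_outputs = np.array([0, 1, 1, 0])
predictions = np.array([0, 1, 0, 0])  # hypothetical: the third sample is wrong

# Count the positions where the prediction matches the label.
correct_predictions = np.where(predictions == data_outputs)[0].size

# Accuracy as a percentage, which is used directly as the fitness value.
solution_fitness = (correct_predictions / data_outputs.size) * 100

print(solution_fitness)  # 75.0
```

With only 4 samples, the fitness can take just 5 distinct values (0, 25, 50, 75, and 100), so many different solutions share the same fitness.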

The next parameter is mutation_percent_genes, which accepts the percentage of genes to mutate. It’s recommended to set a small percentage. This is why 5% is used.

mutation_percent_genes = 5

Finally, the callback_generation parameter accepts a function that’s called after each generation. For training a neural network, the purpose of this function is to update the network weights by those evolved using the GA. Here is the implementation of this function.

def callback_generation(ga_instance):
    global GANN_instance
    # Convert the evolved solution vectors back into weight matrices.
    population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                            population_vectors=ga_instance.population)
    # Update the networks with the evolved weights.
    GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)
    print("Generation = {generation}".format(generation=ga_instance.generations_completed))
    print("Accuracy = {fitness}".format(fitness=ga_instance.best_solution()[1]))

The function above is assigned to the callback_generation parameter.

callback_generation = callback_generation

After preparing all the parameters of the GA class, here is how we create an instance.

ga_instance = pygad.GA(num_generations=50,

num_parents_mating=3,

initial_population=population_vectors.copy(),

fitness_func=fitness_func,

mutation_percent_genes=5,

callback_generation=callback_generation)

At this time, the neural network and the genetic algorithm are both created. The next section discusses running the genetic algorithm to train the network.

Train a Network using the Genetic Algorithm

To start evolving the GA, the run() method is called. This method applies the pipeline of the genetic algorithm by calculating the fitness values of the solutions, selecting the parents, mating the parents by applying the mutation and crossover operations, and producing a new population. This process lasts for the specified number of generations.

ga_instance.run()
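The pipeline described above can be sketched as a plain loop. The code below is a deliberately simplified stand-in that evolves the 2 parameters of Y = a * X + b from the overview section. It is not PyGAD's implementation: the function names, the value ranges, and the choice of operators are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(seed=42)
X, Y = 2.0, 4.0

def fitness(solution):
    a, b = solution
    # A tiny constant avoids division by zero when the prediction is exact.
    return 1.0 / (abs(a * X + b - Y) + 1e-9)

def run_ga(num_generations=50, num_solutions=5, num_parents_mating=3):
    # Initial population: random values for the genes a and b.
    population = rng.uniform(-10.0, 10.0, size=(num_solutions, 2))
    best_per_generation = []

    for _ in range(num_generations):
        # 1. Calculate the fitness of every solution.
        scores = np.array([fitness(s) for s in population])

        # 2. Select the fittest solutions as parents.
        parent_idx = np.argsort(scores)[::-1][:num_parents_mating]
        parents = population[parent_idx]
        best_per_generation.append(scores[parent_idx[0]])

        # 3. Mate the parents: crossover followed by mutation.
        offspring = []
        for k in range(num_solutions - num_parents_mating):
            p1 = parents[k % num_parents_mating]
            p2 = parents[(k + 1) % num_parents_mating]
            child = np.array([p1[0], p2[1]])  # a from p1, b from p2
            child[rng.integers(2)] += rng.uniform(-1.0, 1.0)  # mutate one gene
            offspring.append(child)

        # 4. The new population keeps the parents and adds the offspring.
        population = np.vstack([parents, np.array(offspring)])

    scores = np.array([fitness(s) for s in population])
    return population[np.argmax(scores)], best_per_generation

best_solution, history = run_ga()
print("best solution:", best_solution)
print("prediction:", best_solution[0] * X + best_solution[1])
```

Because the parents are carried over unchanged into the next population, the best fitness in this sketch can never decrease from one generation to the next.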

After the run() method completes, the plot_result() method can be used to create a figure showing how the accuracy changes by generation.

ga_instance.plot_result()

According to the next figure, the GA found a solution that has 100% classification accuracy after around 14 generations.

Information about the best solution can be returned using the best_solution() method. It returns the solution itself, its fitness value, and its index within the population.

solution, solution_fitness, solution_idx = ga_instance.best_solution()
print(solution)
print(solution_fitness)
print(solution_idx)

Result:

[-1.42839466 0.02073534 0.02709985 -0.1430065 0.46980429 0.01294253 -0.37210115 0.03971092]
100.00

The best solution can be used to make predictions according to the code below. Because the accuracy is 100%, all the outputs are predicted correctly.

predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[solution_idx],

data_inputs=data_inputs,

problem_type="classification")

print("Predictions of the trained network : {predictions}".format(predictions=predictions))

Result:

Predictions of the trained network : [0. 1. 1. 0.]

Complete Code for Classification Neural Network

The complete code of building and training a neural network using the genetic algorithm is shown below.

import numpy

import pygad

import pygad.nn

import pygad.gann

def fitness_func(solution, sol_idx):
    global GANN_instance, data_inputs, data_outputs
    # Predict the outputs using the network that corresponds to this solution.
    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                   data_inputs=data_inputs)
    # The fitness is the classification accuracy as a percentage.
    correct_predictions = numpy.where(predictions == data_outputs)[0].size
    solution_fitness = (correct_predictions/data_outputs.size)*100
    return solution_fitness

def callback_generation(ga_instance):
    global GANN_instance
    # Convert the evolved solution vectors back into weight matrices.
    population_matrices = pygad.gann.population_as_matrices(population_networks=GANN_instance.population_networks,
                                                            population_vectors=ga_instance.population)
    # Update the networks with the evolved weights.
    GANN_instance.update_population_trained_weights(population_trained_weights=population_matrices)
    print("Generation = {generation}".format(generation=ga_instance.generations_completed))
    print("Accuracy = {fitness}".format(fitness=ga_instance.best_solution()[1]))

data_inputs = numpy.array([[1, 1],

[1, 0],

[0, 1],

[0, 0]])

data_outputs = numpy.array([0,

1,

1,

0])

GANN_instance = pygad.gann.GANN(num_solutions=5,

num_neurons_input=2,

num_neurons_hidden_layers=[2],

num_neurons_output=2,

hidden_activations=["relu"],

output_activation="softmax")

population_vectors = pygad.gann.population_as_vectors(population_networks=GANN_instance.population_networks)

ga_instance = pygad.GA(num_generations=50,

num_parents_mating=3,

initial_population=population_vectors.copy(),

fitness_func=fitness_func,

mutation_percent_genes=5,

callback_generation=callback_generation)

ga_instance.run()

ga_instance.plot_result()

solution, solution_fitness, solution_idx = ga_instance.best_solution()

print(solution)

print(solution_fitness)

print(solution_idx)

Regression Neural Networks

In addition to building neural networks for classification, PyGAD also supports regression networks. Compared to the previous code, there are 2 changes:

- Edit fitness_func() to calculate the regression error rather than classification accuracy.

- Set the problem_type parameter in the pygad.nn.predict() function to "regression" rather than "classification".

Here, some new training samples are used to train the NN:

data_inputs = numpy.array([[2, 5, -3, 0.1],

[8, 15, 20, 13]])

data_outputs = numpy.array([[0.1, 0.2],

[1.8, 1.5]])

In this example, the mean absolute error is used inside the fitness function. Here is its new code. Note how the problem_type argument is set to "regression".

def fitness_func(solution, sol_idx):
    global GANN_instance, data_inputs, data_outputs
    # Predict continuous outputs using the network that corresponds to this solution.
    predictions = pygad.nn.predict(last_layer=GANN_instance.population_networks[sol_idx],
                                   data_inputs=data_inputs,
                                   problem_type="regression")
    # The fitness is the reciprocal of the mean absolute error.
    solution_fitness = 1.0/numpy.mean(numpy.abs(predictions - data_outputs))
    return solution_fitness
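As a worked example of this fitness calculation, the sketch below evaluates the mean absolute error for a hypothetical set of predictions against the 2 training targets. The `predictions` array is made up for illustration and is not the output of a trained network.

```python
import numpy as np

data_outputs = np.array([[0.1, 0.2],
                         [1.8, 1.5]])

# Hypothetical network outputs for the 2 training samples.
predictions = np.array([[0.2, 0.2],
                        [1.6, 1.4]])

# Absolute errors are 0.1, 0.0, 0.2, and 0.1, so their mean is about 0.1.
mean_abs_error = np.mean(np.abs(predictions - data_outputs))

# A smaller error gives a larger fitness, here about 10.
solution_fitness = 1.0 / mean_abs_error

print(mean_abs_error, solution_fitness)
```

As with the classification example in the overview, a perfect prediction would make the error 0 and the division fail, so a small constant is often added to the denominator in practice.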

For the complete code of this example, check out this code:

About the Author

Fatima Ezzahra JARMOUNI is a Moroccan junior data scientist who received an M.Sc. degree in data science from L’École Nationale Supérieure d’Informatique et d’Analyse des Systèmes (ENSIAS).

Fatima Ezzahra is actively seeking a job in data science and data analysis. If there is an opportunity, use any of these contacts:

- LinkedIn: https://www.linkedin.com/in/fatima-ezzahra-jarmouni-341a6b167

- GitHub: https://github.com/JARMOUNI94

- E-mail: fatimaezzahrajarmouni at gmail dot com

- ResearchGate: https://www.researchgate.net/profile/Fatima_Ezzahra_Jarmouni2

- Academia: https://um5s.academia.edu/FatimaEzzahraJARMOUNI

Conclusion

This tutorial discussed how to build and train both classification and regression neural networks with the genetic algorithm using a Python library called PyGAD.

To summarize what we’ve covered: The library has a module named gann that creates a population of neural networks. The pygad module implements the genetic algorithm and uses the parameters in the population of neural networks as the initial population. The genetic algorithm then makes slight changes over many generations. For each generation, PyGAD updates the weights and uses them to make predictions with the neural network. This is a time-consuming process, especially for complex problems.

Thus, efforts are needed to speed up the implementation of PyGAD. Cython may be an option.