Issue 11: Announcing Comet Artifacts, the Case for Automating Morals

New Comet tools for more effective data management throughout ML experiment pipelines, an exploration of what it means to try to automate morality, interfaces for explaining Transformer models, and more

Welcome to issue #11 of The Comet Newsletter! To kick things off this week, we have a really special and exciting update to share with you. We’re thrilled to announce the release of Comet Artifacts, a new set of tools that provides ML teams a convenient way to log, version, and browse data from all parts of their experimentation pipelines.

But while our team has been hard at work on this set of new features, we’ve also been following the latest industry news and perspectives, so check out the abridged list of links at the end of this issue to see what our team has been paying attention to.

Like what you’re reading? Subscribe here.

And be sure to follow us on Twitter and LinkedIn — drop us a note if you have something we should cover in an upcoming issue!

Happy Reading,

Austin

Head of Community, Comet

FROM TEAM COMET

Announcing Comet Artifacts

Today, we’re thrilled to introduce Comet Artifacts, a new set of tools that provides ML teams with the capability to log, version, and access their data across entire experimentation workflows.

Why Artifacts?

Machine learning typically involves experimenting with different models, hyperparameters, and different versions of datasets.

In addition to the metrics and parameters that are being measured and tested, machine learning also involves keeping track of the inputs and outputs produced by an experiment. An experiment run can produce all sorts of interesting output data—files containing model predictions, model weights, and much more.

And often, the outputs from one experiment can be used as the inputs for other experiments. This can become complex to track without the right structure or a single source of truth.

What are Artifacts?

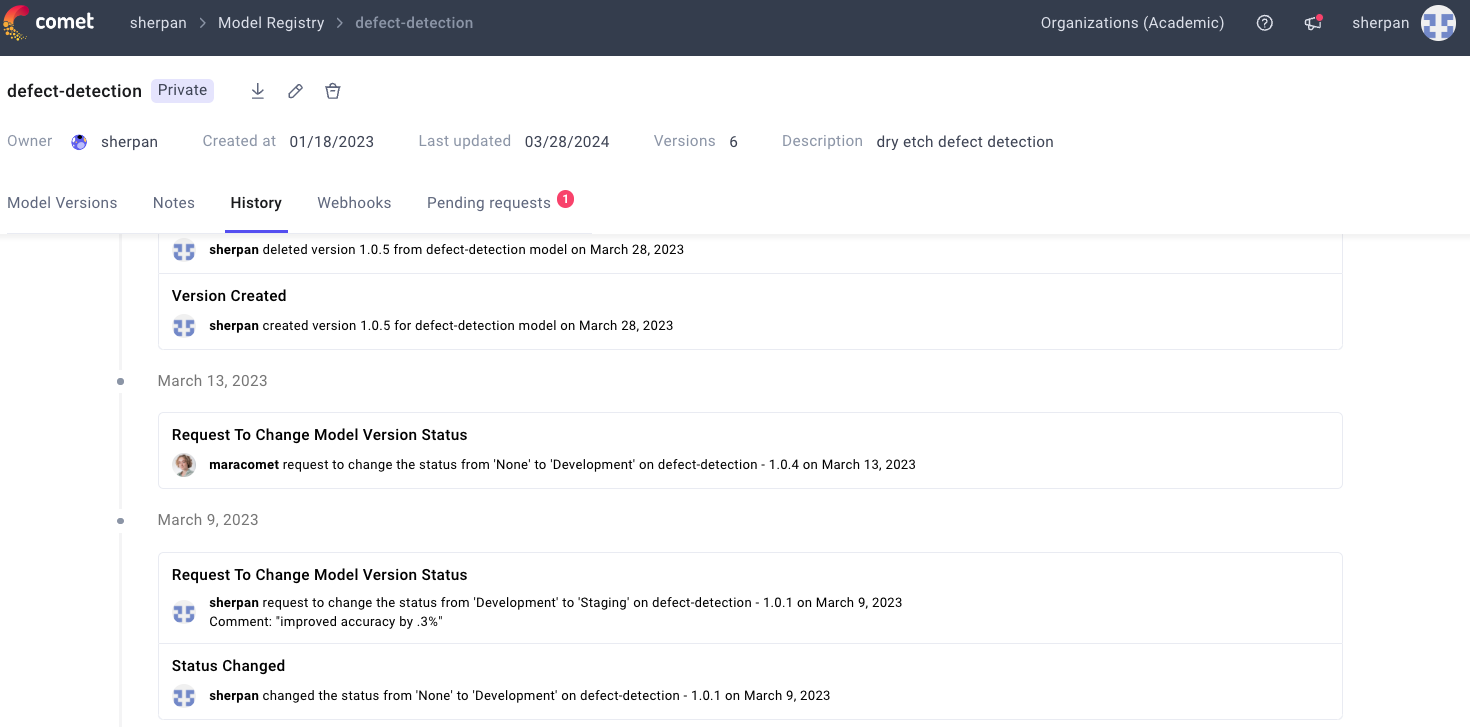

In Comet, an Artifact is a versioned object, where each version is an immutable snapshot of files and assets, arranged in a folder-like logical structure. This snapshot can be tracked using metadata, a version number, tags, and aliases. A version tracks which experiments consumed it, and which experiment produced it.

This means that with Artifacts, you can structure your experiments as multi-stage pipelines or DAGs (Directed Acyclic Graphs), and ensure centralized, managed, and versioned access to any of the intermediate data produced in the process.

Specifically, Artifacts enable you and your team to:

- Reuse data produced by intermediate or exploratory steps in experimentation pipelines, and allow it to be tracked, versioned, consumed, and analyzed in a managed way.

- Track and reproduce complex multi-experiment scenarios, where the output of one experiment is used as the input to another.

- Iterate on datasets over time, track which model used which version of the dataset, and schedule model re-training.
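To make the Artifact model described in this section concrete, here is a minimal, self-contained Python sketch of the idea: versioned, immutable snapshots that record which experiment produced them and which experiments consumed them. This is a toy illustration, not Comet's SDK. The class names, method names, integer version numbers, and experiment names (`exp-preprocess`, `exp-train`) are all assumptions made up for the example.

```python
from dataclasses import dataclass
from types import MappingProxyType
from typing import Dict, Iterable, List, Mapping, Optional, Union


@dataclass(frozen=True)
class ArtifactVersion:
    """One immutable snapshot: files keyed by folder-like logical paths."""
    version: int
    files: Mapping[str, bytes]
    produced_by: str
    metadata: Mapping[str, str]


class Artifact:
    """Toy versioned object (hypothetical, not the Comet API)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.aliases: Dict[str, int] = {}
        self._versions: List[ArtifactVersion] = []
        self._consumers: Dict[int, List[str]] = {}

    def log_version(
        self,
        files: Mapping[str, bytes],
        produced_by: str,
        metadata: Optional[Mapping[str, str]] = None,
        aliases: Iterable[str] = (),
    ) -> ArtifactVersion:
        """Store a new read-only snapshot and record its producer."""
        version = len(self._versions) + 1
        snapshot = ArtifactVersion(
            version=version,
            files=MappingProxyType(dict(files)),  # read-only copy
            produced_by=produced_by,
            metadata=MappingProxyType(dict(metadata or {})),
        )
        self._versions.append(snapshot)
        self._consumers[version] = []
        self.aliases["latest"] = version
        for alias in aliases:
            self.aliases[alias] = version
        return snapshot

    def get(self, ref: Union[int, str], consumed_by: str) -> ArtifactVersion:
        """Fetch a version by number or alias and record the consumer."""
        version = self.aliases[ref] if isinstance(ref, str) else ref
        if not 1 <= version <= len(self._versions):
            raise KeyError(f"{self.name} has no version {version}")
        self._consumers[version].append(consumed_by)
        return self._versions[version - 1]

    def consumers(self, version: int) -> List[str]:
        """Return the experiments that consumed a given version."""
        return list(self._consumers[version])


# A two-stage pipeline: a preprocessing experiment produces the data,
# and training experiments consume specific versions of it.
dataset = Artifact("training-data")
dataset.log_version(
    {"train/data.csv": b"a,b\n1,2\n"},
    produced_by="exp-preprocess",
    aliases=["baseline"],
)
dataset.log_version(
    {"train/data.csv": b"a,b\n1,2\n3,4\n"},
    produced_by="exp-preprocess-2",
)
baseline = dataset.get("baseline", consumed_by="exp-train")
newest = dataset.get("latest", consumed_by="exp-train-2")
```

Because each snapshot is read-only and carries its own lineage, a later experiment can ask for exactly the data an earlier one used, which is the property that makes multi-stage pipelines reproducible. For the real SDK calls, see the docs and Colab notebook linked in the next section.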

Getting Started with Comet Artifacts

For a deeper dive into working with Artifacts—or to jump right in and try it for yourself—check out these additional resources:

- Our full announcement

- A Colab Notebook, if you’d prefer to jump right in

- Docs and reference

And stay tuned! We’ll be exploring more of the use cases and capabilities of Comet Artifacts in the near future.

WHAT WE’RE READING

OpenAI Codex shows the limits of large language models

OpenAI Codex, which powers GitHub’s new “AI pair programmer” Copilot, has been pitched as the future of AI-assisted software development. Codex was built on the foundational GPT-3 architecture as a specialized implementation of it, but in their paper, researchers from OpenAI revealed that none of the other versions of GPT-3 were able to solve any of the code-based problems that Codex is designed to tackle.

The primary implication of this finding is that it challenges the premise on which the GPT-3 architecture, while quite powerful, was initially developed: that a large enough language model trained on a big enough text dataset would be able to match or outperform models designed for more specialized tasks.

In addition to this dynamic, author Ben Dickson covers some of the other key findings in the research, including areas like size vs. cost, text generation vs. understanding, and the responsible use and reporting of these kinds of models.

Read Ben Dickson’s full article here

Justitia ex Machina: The Case for Automating Morals

This compelling article by Rasmus Berg Palm and Pola Schwöbel discusses two essential and deeply complex concepts when it comes to how we evaluate ML models: fairness and transparency. The two authors wade into these challenging waters, touching on ML’s potential and pitfalls in decision making as it relates to race, gender, sexual orientation, and more.

They also pose another fundamental question that we’ll all likely grapple with for years to come—are humans actually better at making the kinds of decisions we’re attempting to automate?

Read the full essay in The Gradient

Interfaces for Explaining Transformer Models

An incredibly thorough and information-rich resource from Jay Alammar, who focuses on visualizing Transformer architectures via input saliency and neuron activation. This range of visualizations and the accompanying commentary provide a wide variety of entry points into one of the most popular deep learning architectures of the past few years.