Experimentation is the lifeblood of machine learning. It’s how we discover and refine models that power everything from recommendation systems to self-driving cars. However, running experiments, tracking their progress, and sharing results can be challenging, especially in interdisciplinary teams. This article will explore how two powerful tools, Comet and Gradio, simplify and enhance your machine learning journey.

Machine learning is dynamic and ever-evolving, requiring data scientists and machine learning engineers to iterate on models continually. This iterative process often involves experimenting with different hyperparameters, datasets, and algorithms. As a result, keeping track of machine learning experiments and sharing findings with team members is critical.

Two invaluable tools in this journey are Comet and Gradio. Comet allows data scientists to track their machine learning experiments at every stage, from training to production, while Gradio simplifies the creation of interactive model demos and GUIs with just a few lines of Python code. This article shows how to integrate the two so that your experiments are both tracked and easy to share interactively.

The Power of Experiment Tracking with Comet

Challenges in Experiment Tracking

Tracking machine learning experiments can be a daunting task. Consider a scenario where you’re experimenting with different neural network architectures for image classification. You might have various configurations, datasets, and training iterations. Manually keeping tabs on each experiment’s parameters, metrics, and results quickly becomes unmanageable.

Introducing Comet: Your Trusted MLOps Companion

Comet is an MLOps platform designed to tackle these challenges. It provides a unified platform for data scientists and teams to track, manage, and monitor machine learning experiments in one place. Here are some key features:

- Experiment Tracking: Easily log hyperparameters, metrics, code versions, and dataset information for every experiment.

- Collaboration: Share experiments with team members, making it a collaborative hub for your ML projects.

- Reproducibility: Ensure your experiments are reproducible by tracking code changes and dependencies.

- Visualization: Create interactive dashboards to visualize and compare experiment results.

Comet’s suite of tools lets data scientists focus on developing their models while the platform handles experiment tracking and management.
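To make the Experiment Tracking feature more concrete, here is a minimal sketch of logging hyperparameters and per-epoch metrics. It calls `log_parameters` and `log_metric`, which are methods of `comet_ml.Experiment`. Everything else is an assumption made for illustration: the `RecordingExperiment` stand-in (so the sketch runs without a Comet account), the `fake_loss` formula, and the hyperparameter values.

```python
from typing import Any, Dict, List, Optional, Tuple


class RecordingExperiment:
    """Hypothetical offline stand-in that mimics two comet_ml.Experiment methods."""

    def __init__(self) -> None:
        self.parameters: Dict[str, Any] = {}
        self.metrics: List[Tuple[str, float, Optional[int]]] = []

    def log_parameters(self, parameters: Dict[str, Any]) -> None:
        self.parameters.update(parameters)

    def log_metric(self, name: str, value: float, step: Optional[int] = None) -> None:
        self.metrics.append((name, value, step))


def fake_loss(learning_rate: float, epoch: int) -> float:
    """Placeholder for a real training step; the formula is made up."""
    return 1.0 / (1.0 + learning_rate * 100 * (epoch + 1))


def run_experiment(experiment: Any, hyperparameters: Dict[str, Any]) -> float:
    """Log the hyperparameters once, then one loss value per epoch."""
    experiment.log_parameters(hyperparameters)
    loss = float("inf")
    for epoch in range(hyperparameters["epochs"]):
        loss = fake_loss(hyperparameters["learning_rate"], epoch)
        experiment.log_metric("loss", loss, step=epoch)
    return loss


# With Comet installed and configured, a real experiment could be passed instead:
#   from comet_ml import Experiment
#   experiment = Experiment(project_name="YOUR_PROJECT_NAME")
experiment = RecordingExperiment()
final_loss = run_experiment(experiment, {"learning_rate": 0.01, "epochs": 3})
print(experiment.parameters, final_loss)
```

Because `run_experiment` only relies on those two methods, the same function should work unchanged whether it receives the stand-in or a real Comet experiment.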

Building Interactive Model Demos with Gradio

The Importance of Interactive Model Demos

In machine learning, it’s not just about building accurate models; it’s also about ensuring that these models are interpretable and usable by a broader audience, including non-technical stakeholders. That’s where Gradio steps in.

Gradio is an open-source Python library that simplifies the creation of interactive ML interfaces. Whether it’s image classification, text generation, or any other ML task, Gradio lets you build GUIs with just a few lines of code. Let’s dive into how it works using an example.

We will build an interactive question-answering system using Comet and Gradio.

Below is a step-by-step approach to building a question-answering system that combines a pre-trained NLP model with an interactive web interface and uses Comet LLM to log and analyze interactions.

1. Install Necessary Packages

- Purpose: Ensure all required libraries (transformers, gradio, comet-llm) are installed in the Python environment so the project can use them.

2. Import Libraries

- Purpose: Load the necessary Python libraries for the project, including comet_llm for interaction logging, gradio for creating web interfaces, and transformers for accessing pre-trained models and utilities.

3. Initialize Comet LLM

- Purpose: Set up Comet LLM with your API key, workspace, and project details. This step is crucial for logging the question-answering interactions for analysis and review.

4. Configure Model and Tokenizer

- Purpose: Select and load the DistilBERT model and its tokenizer. DistilBERT is chosen for its efficiency and effectiveness in handling natural language processing tasks, such as question answering.

5. Initialize QA Pipeline

- Purpose: Create a question-answering pipeline with the loaded model and tokenizer. This pipeline is responsible for processing the context and question to generate an answer.

6. Define Answering and Logging Function

- Purpose: Implement a function that takes a context and a question as input, uses the QA pipeline to find an answer, and logs the interaction (context, question, and answer) to Comet LLM. This function embodies the core functionality of your application.

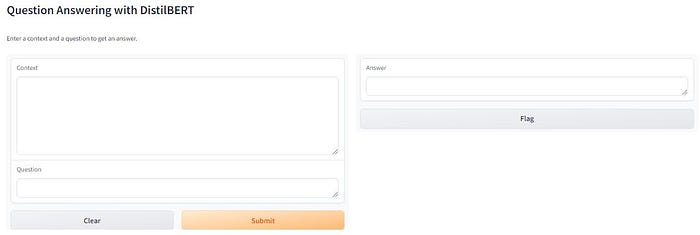

7. Setup Gradio Interface

- Purpose: Design and configure the Gradio interface to interact with users. Define inputs for context and question, and display the generated answer as output. Gradio simplifies deploying ML models with user-friendly web interfaces.

8. Launch Interface

- Purpose: Start the Gradio web server to make the question-answering system accessible to users. This step allows users to input their context and questions and receive answers in real-time.

With the blueprint in hand, let’s harness it to construct our Question Answering System, setting the stage for a seamless blend of technology and user interaction.

Let’s start coding…

# Install necessary packages for our project
!pip install transformers gradio comet-llm

# Import the necessary libraries
import comet_llm
import gradio as gr
from transformers import pipeline, AutoModelForQuestionAnswering, AutoTokenizer

# Initialize Comet LLM with provided API key and project details
comet_llm.init(api_key="YOUR_API_KEY", workspace="YOUR_WORKSPACE", project="YOUR_PROJECT_NAME")

# Configure the model and tokenizer using DistilBERT
MODEL_NAME = "distilbert-base-uncased-distilled-squad"
tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
model = AutoModelForQuestionAnswering.from_pretrained(MODEL_NAME)

# Initialize the pipeline for question-answering tasks
qa_pipeline = pipeline("question-answering", model=model, tokenizer=tokenizer)

# Define the function to answer questions and log to Comet LLM
def answer_question_and_log(context, question):
    # Generate the answer using the QA pipeline
    answer = qa_pipeline(question=question, context=context)['answer']

    # Log the prompt and output to Comet LLM
    comet_llm.log_prompt(
        prompt=f"Question: {question}\nContext: {context}",
        output=answer,
        workspace="YOUR_WORKSPACE",
        project="YOUR_PROJECT_NAME",
        # Keep the API key out of metadata: logged metadata is stored with the prompt
        metadata={
            "model": MODEL_NAME
        }
    )

    return answer

# Setup Gradio interface
iface = gr.Interface(
    fn=answer_question_and_log,
    inputs=[gr.Textbox(lines=7, label="Context"), gr.Textbox(label="Question")],
    outputs=gr.Textbox(label="Answer"),
    title="Question Answering with DistilBERT",
    description="Enter a context and a question to get an answer."
)

# Launch the interface
iface.launch()

The system runs successfully and the interface is created.

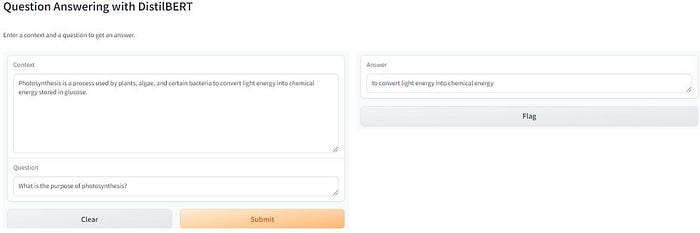

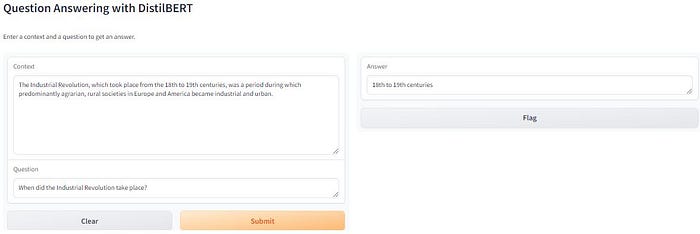

Next up, we’ll walk through two examples to show you how it all works. We’ll give the interface a “context” and a “question” and expect an answer in return.

Example 1

Example 2

Just like we hoped, our system nailed it, giving us the right answers for both examples based on the context we gave it.

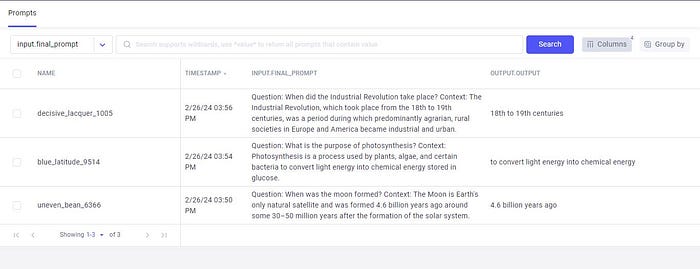

The Comet integration also recorded each interaction: the context, the posed question, and the model’s answer were all logged to the Comet LLM project.

Having these logs in one place makes it easier to review the model’s performance and how people use it, which in turn supports ongoing model refinement.
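Since everything that is logged is stored alongside the prompt, it can help to keep credentials out of the script and out of the logged metadata. The following is a minimal sketch of one way to do that. The helper names `load_comet_settings` and `build_log_kwargs`, the tag value, and the `context_chars` field are hypothetical, and the environment variable names follow Comet's usual `COMET_API_KEY` convention but should be treated as assumptions for your setup.

```python
import os
from typing import Any, Dict, Mapping


def load_comet_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read Comet settings from environment variables instead of source code."""
    api_key = env.get("COMET_API_KEY")
    if not api_key:
        raise RuntimeError("Set COMET_API_KEY before starting the app.")
    return {
        "api_key": api_key,
        "workspace": env.get("COMET_WORKSPACE", "YOUR_WORKSPACE"),
        "project": env.get("COMET_PROJECT_NAME", "YOUR_PROJECT_NAME"),
    }


def build_log_kwargs(context: str, question: str, answer: str, model_name: str) -> Dict[str, Any]:
    """Assemble keyword arguments for comet_llm.log_prompt, with no secrets in metadata."""
    return {
        "prompt": f"Question: {question}\nContext: {context}",
        "output": answer,
        "tags": ["question-answering"],
        "metadata": {"model": model_name, "context_chars": len(context)},
    }


# A dummy mapping is passed here so the sketch runs without real credentials.
settings = load_comet_settings({"COMET_API_KEY": "dummy-key-for-illustration"})
kwargs = build_log_kwargs(
    "Paris is the capital of France.",
    "What is the capital of France?",
    "Paris",
    "distilbert-base-uncased-distilled-squad",
)
print(sorted(settings), kwargs["metadata"])
# In the app, these would feed the calls used earlier:
#   comet_llm.init(**settings)
#   comet_llm.log_prompt(**kwargs)
```

Separating the payload construction from the logging call also makes it possible to check what would be logged without contacting Comet.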

Bridging the Gap: Comet and Gradio Integration

The Power of Integration

The magic happens when you combine Comet’s experiment tracking capabilities with Gradio’s interactive demos. Integrating these two tools simplifies the experimentation process and enhances collaboration within your ML team.
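One general way to combine the two tools is to wrap any prediction function so that every call is logged before the result is returned, and then hand the wrapped function to Gradio. The sketch below illustrates the pattern with the standard library only. The names `with_logging` and `shout` are hypothetical, and the in-memory `records` list stands in for a call to Comet.

```python
import functools
import time
from typing import Any, Callable, Dict, List


def with_logging(
    predict: Callable[..., Any],
    log: Callable[[Dict[str, Any]], None],
) -> Callable[..., Any]:
    """Wrap a prediction function so each call is also passed to `log`."""

    @functools.wraps(predict)  # preserves the name and signature seen by introspection
    def wrapper(*args: Any) -> Any:
        start = time.perf_counter()
        result = predict(*args)
        log({"inputs": args, "output": result, "seconds": time.perf_counter() - start})
        return result

    return wrapper


def shout(text: str) -> str:
    """Toy stand-in for a model."""
    return text.upper()


records: List[Dict[str, Any]] = []
logged_shout = with_logging(shout, records.append)
print(logged_shout("hello"))
# logged_shout could be passed as fn= to gr.Interface, and `log` could
# forward each record to comet_llm.log_prompt instead of a list.
```

Keeping the logging in a wrapper means the model function itself stays free of tracking code, so it can be reused or tested on its own.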

Real-World Applications

Integrating Comet and Gradio isn’t just theoretical; it can be used to make a real impact in machine learning. Here are a few examples of how this powerful combination can be used:

1. Healthcare Diagnostics

In healthcare, accurate and rapid diagnostics are critical for patient care. Medical professionals can utilize Comet and Gradio to build AI models to diagnose diseases. Here’s how it would work:

- Interactive Diagnostics: Doctors can interactively input patient data, including symptoms, medical history, and test results, into a Gradio-powered interface.

- Instant Predictions: The ML model, supported by Comet’s experiment tracking, processes this data and provides instant diagnostic predictions. These predictions can include disease classifications, severity assessments, and treatment recommendations.

- Enhanced Collaboration: Medical teams can collaborate effectively by sharing diagnostic sessions via the Comet platform. This allows for a collective review of patient cases and fine-tuning diagnostic models based on real-world data and expert insights.

The result? Faster and more accurate disease diagnosis, leading to improved patient outcomes and healthcare efficiency.

2. Financial Predictions

In the world of finance, predicting stock prices and market trends is both challenging and lucrative. Financial analysts and investors can leverage the integration of Comet and Gradio to create interactive models that aid in financial predictions. Here’s how they can use this dynamic duo:

- User-Friendly Financial Tools: Gradio’s intuitive interface design would enable financial experts to input various market indicators, economic data, and trading strategies effortlessly.

- Predictive Analytics: Behind the scenes, machine learning models, constantly updated and tracked using Comet, analyze this input data to predict stock prices, market trends, and investment opportunities.

- Accessible Insights: The power of integration shines when non-technical stakeholders, such as clients and decision-makers, can interact with these financial prediction models through Gradio’s user interface. They can explore different scenarios and better understand the financial landscape.

This integration would bring transparency and accessibility to complex financial models, fostering better-informed investment decisions and risk management.

3. Education

Education is transforming with the integration of AI. Educators can use Comet and Gradio to develop AI-powered educational tools that enhance the learning experience for students of all ages. Here’s how this technology could be applied in education:

- Interactive Learning: Gradio-powered interfaces would enable students to interact with AI models, such as language tutors, math problem solvers, and virtual science labs.

- Personalized Feedback: These AI models can provide instant feedback and guidance to students, helping them grasp concepts and refine their skills.

- Teacher Support: Educators can use Comet to track students’ progress and understand the most effective teaching methods and AI tools. This data-driven approach could empower teachers to tailor their instruction to individual student needs.

- Engagement and Accessibility: Interactive content would keep students engaged and make learning more effective. These tools can also improve accessibility for students with diverse learning needs.
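As a small illustration of the instant-feedback idea, the sketch below pairs a toy addition checker with a Gradio form. The `check_answer` rule, the labels, and the `build_demo` helper are assumptions made for this example, not part of any real educational tool. Gradio is imported only inside `build_demo`, so the checker can be tried without it.

```python
def check_answer(a: float, b: float, student_answer: float) -> str:
    """Give immediate feedback on an addition exercise."""
    expected = a + b
    if abs(student_answer - expected) < 1e-9:
        return "Correct!"
    hint = "too high" if student_answer > expected else "too low"
    return f"Not quite: your answer is {hint}. Try again."


def build_demo():
    """Create the Gradio interface; gradio is imported lazily on purpose."""
    import gradio as gr

    return gr.Interface(
        fn=check_answer,
        inputs=[
            gr.Number(label="First number"),
            gr.Number(label="Second number"),
            gr.Number(label="Your answer"),
        ],
        outputs=gr.Textbox(label="Feedback"),
        title="Addition practice",
    )


print(check_answer(2, 3, 5))
# build_demo().launch()  # uncomment to start the local web server
```

A teacher-facing version could additionally log each attempt to Comet to follow progress over time.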

Wrapping Up…

In machine learning, experimentation is the key to innovation. However, experimenting efficiently, tracking progress, and sharing findings collaboratively can be challenging. That’s where Comet and Gradio come to your rescue. Comet simplifies experiment tracking, while Gradio makes your models interactive. Together, they create a synergy that empowers you to build better models and easily share and understand them.

So, don’t hesitate to explore the possibilities of Comet and Gradio integration in your next machine learning project. By bridging the gap between experimentation and usability, you’re paving the way for more accessible, interpretable, and impactful machine learning models.

Additional Resources

Feel free to reach out and share your experiences with Comet and Gradio integration. Happy experimenting!