NLP for Text-to-Image Generators — Prompt Generation [Part 2]

In the previous tutorial, we analyzed a large dataset of prompts created by Midjourney users. Using natural language processing (NLP), we built a semantic search and modeled topics for the existing prompts. In this article, we dive deeper and explore ways of using language models to generate meaningful prompts.

To get the best results, the quality of the prompt describing the visual matters. Prompt engineering is the practice of crafting an input for a large language model so that it generates a complete prompt or phrase as the output. The generated output depends on the task that the underlying language model was trained on.

Language models like GPT-3 by OpenAI have a large number of parameters and can provide good results for general tasks like writing topics for articles. These large language models can also be fine-tuned on specific data so that they perform better on specific tasks. For our use case, we’ll fine-tune a large language generation model provided by Cohere so that it can generate prompts to feed into text-to-image generators.

Prerequisites

You need Python 3.6+ installed on your development machine to follow along. You also need an account on Cohere’s platform.

Cohere is a platform that provides access to advanced large language models and NLP tools through one easy-to-use API. The platform offers free credits that you can use to experiment with your NLP projects. Make sure you install the following dependencies.

pip install datasets cohere numpy pandas nltk

Data preparation

You will use the Midjourney prompt dataset available on Hugging Face. For this tutorial, you need to clean the prompts, add a separator, and export them in txt format, because you will use this data to fine-tune a language model on Cohere’s platform. To get started, load the dataset.

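from datasets import load_dataset
import pandas as pd

# Load the Midjourney prompts dataset from the Hugging Face Hub
dataset = load_dataset("succinctly/midjourney-prompts")

# Convert the training split to a pandas DataFrame
df = dataset['train'].to_pandas()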

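Next, clean the dataset by removing empty rows, duplicates, and short prompts. Empty rows and duplicates are dropped here; the length filter, which keeps only prompts longer than 10 words, is applied when the file is written. This ensures the training data is of high quality. The resulting dataset has 61k prompts.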

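# Drop rows whose text is empty or whitespace-only
df = df[df['text'].str.strip().astype(bool)]

# Drop duplicate prompts, keeping the first occurrence
df = df.drop_duplicates(subset=['text'], keep='first')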
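To see what these two filters do without downloading the dataset, here is a minimal plain-Python sketch on a toy list. The helper name `clean_prompts` and the sample prompts are made up for illustration; it mirrors the pandas calls above and is not meant to replace them.

```python
def clean_prompts(prompts):
    """Drop empty or whitespace-only prompts, then drop exact duplicates (keep the first)."""
    seen = set()
    cleaned = []
    for text in prompts:
        if not text.strip():   # same idea as .str.strip().astype(bool)
            continue
        if text in seen:       # same idea as drop_duplicates(keep='first')
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned


# Hypothetical sample data, not taken from the real dataset
sample = ["a red fox in the snow", "   ", "a red fox in the snow", "", "a castle at dusk"]
print(clean_prompts(sample))
```

Note that, like `drop_duplicates`, this only removes exact matches: two prompts that differ by a trailing space or by capitalization are both kept.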

Finally, write the prompts to a txt file, adding a separator such as “-- END PROMPT --” after each one so that individual prompts can be told apart.

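import nltk
from nltk.tokenize import word_tokenize

# Tokenizer data used by word_tokenize (newer NLTK releases may ask for 'punkt_tab' instead)
nltk.download('punkt')

with open('/path/to/folder/midjourney_prompts.txt', 'w', encoding='utf-8') as f:
    for index, row in df.iterrows():
        # Keep only prompts longer than 10 tokens
        if len(word_tokenize(row['text'])) > 10:
            f.write(row['text'])
            f.write('\n-- END PROMPT --\n')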
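Before uploading the file, it can be worth checking that it splits back into the prompts you expect. The sketch below is a stand-in, not the code used above: it counts words with `str.split()` instead of NLTK's `word_tokenize`, and `write_prompts`, `read_prompts`, and the sample prompts are hypothetical names and data.

```python
import os
import tempfile

SEPARATOR = "-- END PROMPT --"


def write_prompts(prompts, path, min_words=10):
    """Write prompts longer than `min_words` words, each followed by the separator."""
    with open(path, "w", encoding="utf-8") as f:
        for text in prompts:
            # Whitespace splitting is an assumption; the tutorial uses word_tokenize.
            if len(text.split()) > min_words:
                f.write(text)
                f.write("\n" + SEPARATOR + "\n")


def read_prompts(path):
    """Split the file on the separator and return the non-empty prompts."""
    with open(path, encoding="utf-8") as f:
        chunks = f.read().split(SEPARATOR)
    return [chunk.strip() for chunk in chunks if chunk.strip()]


# Invented sample prompts: one long enough to keep, one too short
prompts = [
    "a tiny robot watering a bonsai tree on a windowsill at golden hour, macro photo",
    "too short to keep",
]
path = os.path.join(tempfile.mkdtemp(), "midjourney_prompts.txt")
write_prompts(prompts, path)
recovered = read_prompts(path)
print(len(recovered), "prompt(s) recovered")
```

If the number of recovered prompts does not match what you wrote, the separator string is the first thing to check, since the fine-tuning upload relies on it to split samples.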

Fine-tuning Cohere’s generation model

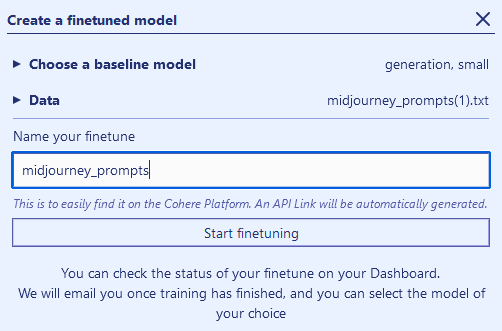

Cohere has a generation model that you’ll use as the base model and fine-tune with your training data. The process is simple and user-friendly. Log in to your Cohere account, head over to the dashboard, and click on ‘Create Finetune.’

Select ‘Generation (Generate)’ as your baseline model and ‘small’ as the model size. Smaller models are faster but perform worse, and larger models are the reverse. Next, upload the txt file that contains your training data and set the data separator to the one used in the file (-- END PROMPT --).

Click ‘Preview Data’ to confirm the samples are correct. If you are satisfied, click ‘Review Data.’

Give your fine-tuned model a name, then click ‘Start Fine-tuning’ to kick off the process.

Monitor the status of the training process. Once it changes to ‘Ready,’ you can start using the model.

Prompt generation

In this section, you will integrate your fine-tuned language model with your prompt generation process. This is where prompt engineering comes into play. By automating this process, you can generate up to five prompts with a single API call and iterate based on the results. You can also add various parameters to this API call to configure the generation process so that it suits your needs. Visit Cohere’s Generate Documentation for more information about these configuration options.

First, grab your API key from Cohere’s dashboard and initialize the client. Note that you need to specify the API version for some features to be available.

import cohere

# Replace {apiKey} with your own key; the second argument is the API version
co = cohere.Client('{apiKey}', '2021-11-08')
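Hardcoding the key is fine for a quick test, but you may prefer to read it from the environment. A minimal sketch, assuming you have exported the key under a variable named `COHERE_API_KEY` (the name is an arbitrary choice made here, not something the tutorial requires); `load_api_key` is a hypothetical helper.

```python
import os


def load_api_key(var_name="COHERE_API_KEY"):
    """Return the API key stored in the given environment variable."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


# Usage (assumes the variable is set in your shell):
# co = cohere.Client(load_api_key(), '2021-11-08')
```

Failing early with a clear message tends to be easier to debug than an authentication error returned by the first API call.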

Next, call the generate API. In this call, you will specify the following parameters:

- Specify the model using your fine-tuned language model’s full ID.

- Add the prompt you want the model to use to generate the complete prompt.

- Specify the number of prompts you want generated (a maximum of five is allowed).

- Configure the presence penalty, which discourages the model from repeating tokens.

- Set the maximum number of tokens to be generated.

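response = co.generate(
    model='<full-model-id>',            # full ID of your fine-tuned model
    prompt='a rockstar puppy in mars',  # seed text the model completes
    max_tokens=50,                      # upper bound on generated tokens
    temperature=0.9,
    num_generations=5,                  # five is the maximum
    presence_penalty=0,                 # 0 applies no penalty; raise it to discourage repeats
    stop_sequences=[],
    return_likelihoods='NONE')

# Print every generation, not only the first one
for i, generation in enumerate(response.generations, start=1):
    print('Prediction {}: {}'.format(i, generation.text))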
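Because the model was fine-tuned on separator-delimited text, a generation may run past the end of one prompt and include the separator, and several generations may turn out identical. The sketch below is one possible way to tidy the raw strings before using them; `clean_generations` is a hypothetical helper, and the sample strings are invented, not real model output.

```python
SEPARATOR = "-- END PROMPT --"


def clean_generations(texts, separator=SEPARATOR):
    """Cut each text at the first separator, normalize whitespace, drop empties and duplicates."""
    cleaned = []
    for text in texts:
        first = text.split(separator, 1)[0]   # keep only what precedes the separator
        first = " ".join(first.split())       # collapse newlines and repeated spaces
        if first and first not in cleaned:
            cleaned.append(first)
    return cleaned


# Invented examples standing in for [g.text for g in response.generations]
raw = [
    " playing guitar, neon lights\n-- END PROMPT --\na cat",
    " playing guitar,  neon lights",
    "\n-- END PROMPT --\n",
]
print(clean_generations(raw))
```

Passing the separator in `stop_sequences` is another option worth trying, since it asks the API to stop at the separator instead of trimming afterwards.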

When you run the code above with the input prompt as “a rockstar puppy in mars,” it will generate the following outputs:

Using the generated prompts, you can create artwork with Stable Diffusion and see what the renders look like.

Conclusion

Text-to-image generators are a lot of fun once you figure out how to create meaningful prompts, and you are limited only by your imagination. Cohere’s platform offers easy-to-use APIs to access advanced NLP tools. You can also use other large language models like GPT-3 to create your own fine-tuned model for generating prompts.

Don’t forget to check out the DALL-E 2 Prompt Book for additional information on how to come up with meaningful prompts. Happy creating!