Machine learning teams are moving their models out of solutions like GitHub and storing them in MLOps platforms like Comet. Comet’s Model Registry allows ML organizations to access the full training lineage of a model. However, the model lifecycle doesn’t end at a model store. Eventually, teams deploy a model to production and monitor it for drift. As models drift, teams re-train them on a new version of the data. Updating a model in production is an arduous task that raises a lot of questions. When does a model need to be updated? Can we verify that the re-trained model is better than the original? How can we automate the deployment of updated models? Who has the final say on moving a model to production? ML teams need a model storage solution like Comet that supports all of these model CI/CD workflows.

Detect the Need for Model Re-Training

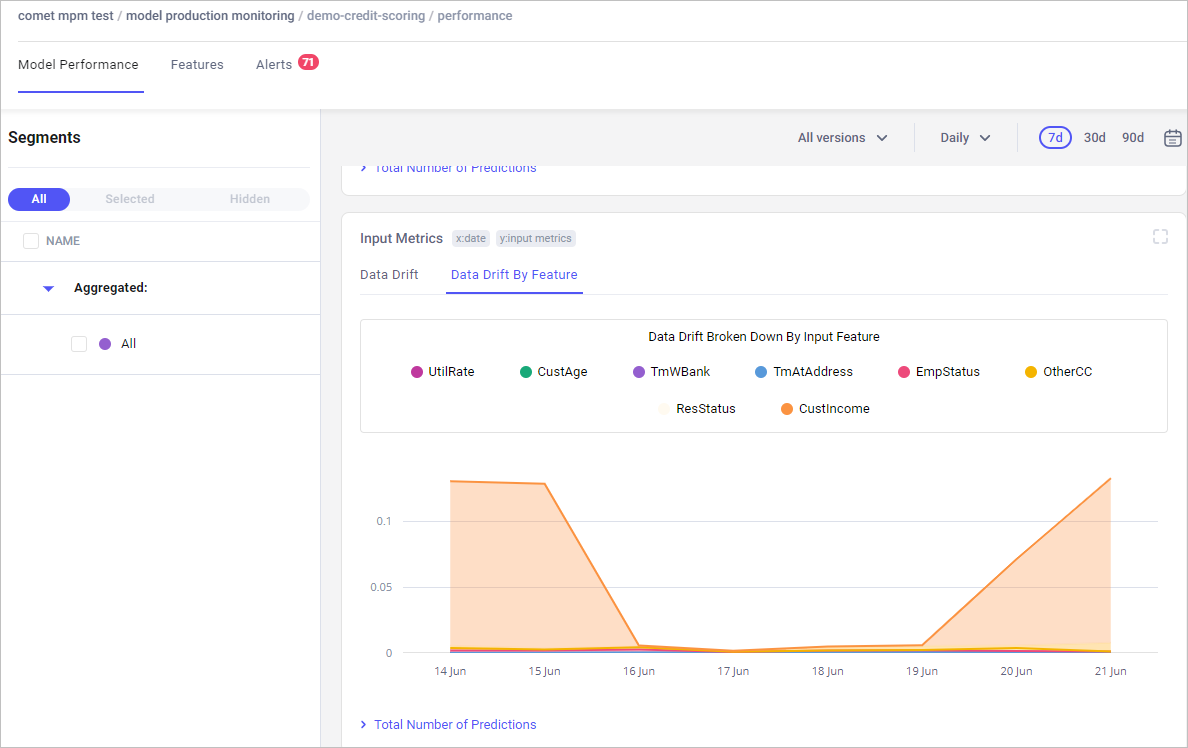

Machine learning models are rarely “set and forget”. As models start to underperform or “drift” in production, ML teams need to know as soon as possible so they can begin the re-training process. Comet’s Model Production Monitoring (MPM) solution stores and analyzes a model’s predictions to detect where and how a model is failing. Here are some of the ways MPM helps ML teams debug their models in production:

- Detect Model Output Drift

- Detect Model Input Feature Drift

- Detect Model Accuracy Drift

- Detect Data Drift from Training Distribution

- Detect Model Bias via Segmentation

Any of the occurrences listed above warrants re-training the model, ideally on a new dataset that includes some data collected during inference.
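To make the idea of input feature drift concrete, here is a minimal sketch that scores drift with the Population Stability Index (PSI), a common general-purpose statistic. This is not how MPM computes drift internally. The function names, the bin count, and the 0.2 threshold (a widely used rule of thumb) are all assumptions chosen for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    production: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a reference (training) sample and a production sample."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if the feature is constant.
    width = (hi - lo) / bins or 1.0

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp out-of-range production values into the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref = bin_fractions(reference)
    prod = bin_fractions(production)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


def needs_retraining(psi: float, threshold: float = 0.2) -> bool:
    """Flag a feature for re-training when its PSI exceeds the (assumed) threshold."""
    return psi > threshold
```

A team might run a check like this per feature on a schedule, comparing a window of recent inference inputs against the training data, and open a re-training task whenever `needs_retraining` returns `True`.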

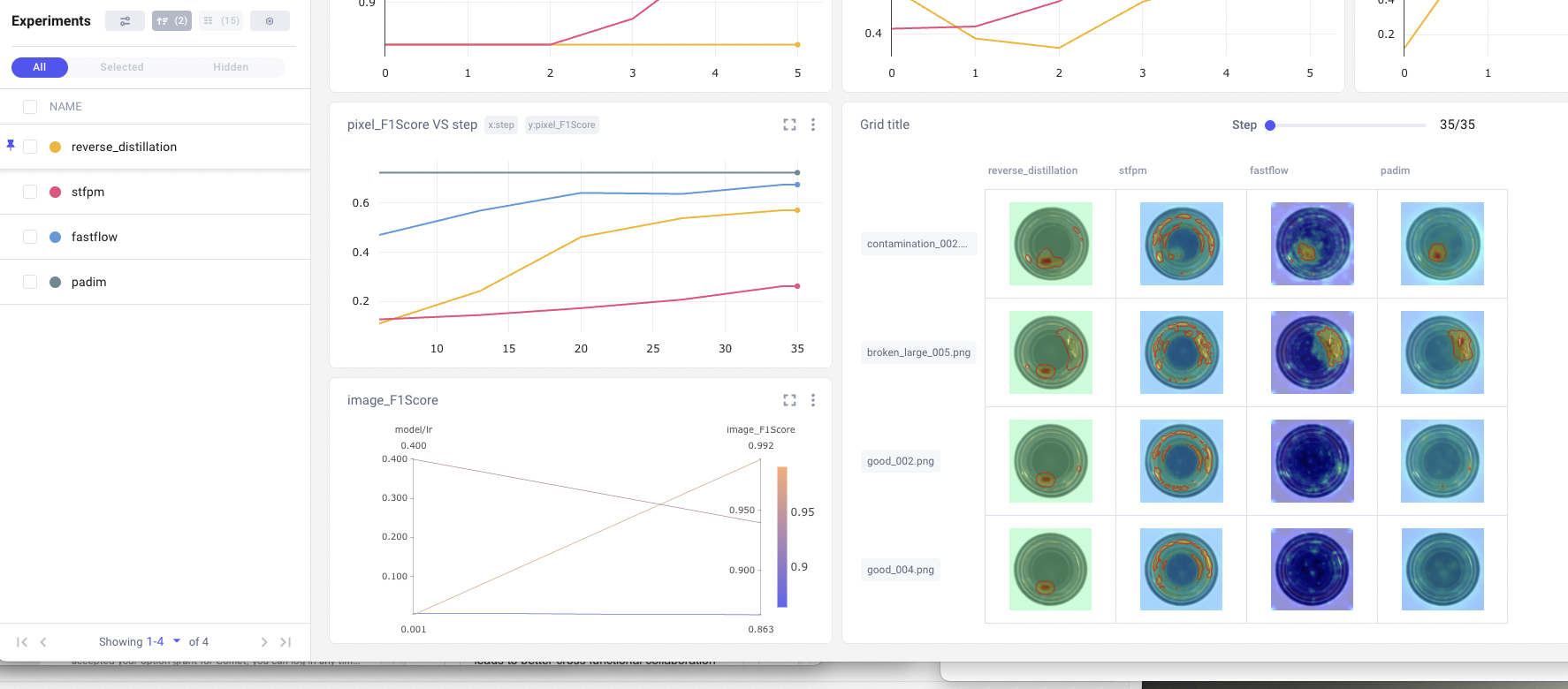

Compare and Contrast Model Versions

Comet’s Model Registry has full lineage to the Experiment Management platform. Users can access the experiment run associated with a particular model. Since Comet stores all the metadata needed to reproduce a model training run, re-training a model on a new dataset is often trivial. New and updated dataset versions are tracked via Comet Artifacts. Comet’s Built-In Panels can be used to compare model performance on high-level metrics such as loss, accuracy, mAP, and recall, and to compare model predictions on specific data samples.
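The same comparison can also be expressed as a simple programmatic gate. The sketch below assumes the metrics for each model version have already been exported into plain dictionaries. `compare_versions`, `candidate_is_better`, and the `LOWER_IS_BETTER` set are hypothetical helpers rather than part of the Comet SDK, and the numbers in the usage example are made up.

```python
from typing import Dict

# Metrics where a smaller value is better; all others are treated as larger-is-better.
LOWER_IS_BETTER = {"loss"}


def compare_versions(
    baseline: Dict[str, float], candidate: Dict[str, float]
) -> Dict[str, float]:
    """Return the signed gain of the candidate over the baseline for each shared metric."""
    gains = {}
    for name in baseline.keys() & candidate.keys():
        diff = candidate[name] - baseline[name]
        # Flip the sign for metrics like loss so that a positive gain always means "better".
        gains[name] = -diff if name in LOWER_IS_BETTER else diff
    return gains


def candidate_is_better(gains: Dict[str, float], min_gain: float = 0.0) -> bool:
    """Accept the candidate only if no shared metric regresses below min_gain."""
    return bool(gains) and all(gain >= min_gain for gain in gains.values())


# Illustrative values only.
production_v1 = {"loss": 0.42, "accuracy": 0.91, "recall": 0.88}
retrained_v2 = {"loss": 0.35, "accuracy": 0.93, "recall": 0.88}
gains = compare_versions(production_v1, retrained_v2)
print(candidate_is_better(gains))
```

Requiring every metric to hold or improve is a deliberately strict policy. Teams that accept trade-offs between metrics would likely replace `candidate_is_better` with a weighted score.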

Downstream Model Updates via Webhooks

A re-trained model is added to the Model Registry with an incremented model version number. Each model version has an associated status field that can be set to one of the following values: “Development”, “QA”, “Staging”, or “Production”. For comprehensive model management, Comet exposes changes in the Model Registry via Webhooks. Webhooks enable teams to automate their model promotion process. Here’s how Comet customers are leveraging this feature in their workflows today:

- Add/Delete a Model from the Registry -> Send an alert message on Slack/Teams

- Change a Model’s Status to “QA” -> Create an automatic Jira ticket for someone to review it

- Change a Model’s Status from “Staging” to “Production” -> Trigger your deployment CI/CD pipeline with SageMaker or GitHub Actions
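The routing logic behind the workflows above might look something like the sketch below. The payload fields (`event`, `old_status`, `new_status`) and the action names are hypothetical placeholders, not Comet’s actual webhook schema. A real receiver would parse whatever JSON body the webhook delivers and call your Slack, Jira, or CI/CD integrations in place of the returned strings.

```python
from typing import Any, Dict, List


def route_registry_event(payload: Dict[str, Any]) -> List[str]:
    """Map a (hypothetical) Model Registry webhook payload to downstream actions."""
    event = payload.get("event")
    old_status = payload.get("old_status")
    new_status = payload.get("new_status")

    # A model was added to or deleted from the registry: alert the team chat.
    if event in ("model_added", "model_deleted"):
        return ["notify_chat"]

    if event == "status_changed":
        # Moving to QA opens a review ticket for a human to pick up.
        if new_status == "QA":
            return ["create_review_ticket"]
        # Only the Staging -> Production transition kicks off a deployment.
        if old_status == "Staging" and new_status == "Production":
            return ["trigger_deployment"]

    # Anything else is ignored.
    return []


example = {"event": "status_changed", "old_status": "Staging", "new_status": "Production"}
print(route_registry_event(example))
```

Keeping the routing as a pure function makes it easy to unit test before wiring it into an HTTP endpoint.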

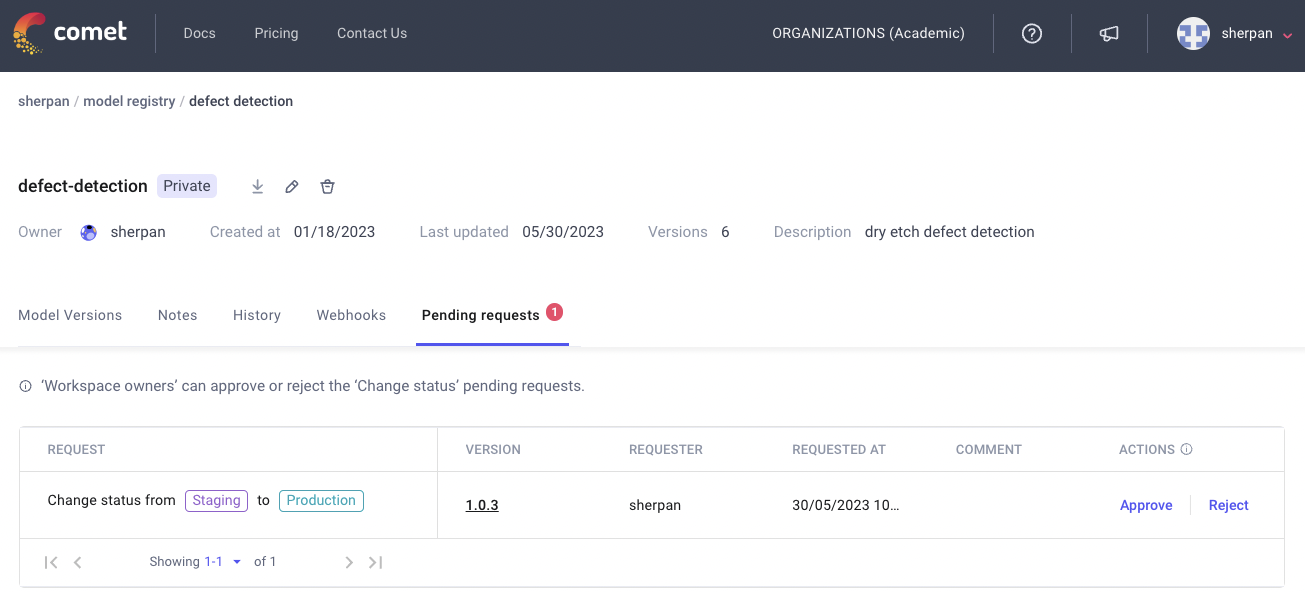

Approve Model Promotions

Machine learning models are making high-impact decisions for organizations across the globe: driving cars, analyzing medical images, recommending loan amounts, and detecting credit card fraud. A new model update can have a profound impact on an ML system. In the software world, pull requests are approved by a committee of engineers before they are merged into the main branch.

Because changing a model’s status can trigger downstream systems through Webhooks, ML engineers in Comet have to “request” a status change. Only Workspace Admins have the authority to “approve” or “reject” the request. For organizations where model auditing is a requirement, data scientists use Comet Reports to supplement a model status request.
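The request-and-approve flow can be thought of as a small state machine. The sketch below models it in plain Python to show the rules at play. The `StatusChangeRequest` class and the `review` function are hypothetical and do not mirror Comet’s internal implementation or SDK.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Set

VALID_STATUSES = ("Development", "QA", "Staging", "Production")


class RequestState(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class StatusChangeRequest:
    model_name: str
    version: str
    requested_status: str
    requested_by: str
    state: RequestState = RequestState.PENDING

    def __post_init__(self) -> None:
        # Reject requests for a status the registry does not define.
        if self.requested_status not in VALID_STATUSES:
            raise ValueError(f"Unknown status: {self.requested_status}")


def review(
    request: StatusChangeRequest, reviewer: str, admins: Set[str], approve: bool
) -> StatusChangeRequest:
    """Approve or reject a pending request; only workspace admins may review."""
    if reviewer not in admins:
        raise PermissionError(f"{reviewer} is not a workspace admin")
    if request.state is not RequestState.PENDING:
        raise ValueError("Request has already been reviewed")
    request.state = RequestState.APPROVED if approve else RequestState.REJECTED
    return request
```

In this model, an approved request is the only path to a status change, so any downstream action tied to that status fires only after an admin has signed off.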

Store Your Production Models in Comet

As teams deploy more models to production, a cohesive model CI/CD process becomes a “must have” rather than a “nice to have”. Comet is easy to integrate with your current ML workflows. Sign up for a free account today and try it out!