I wrote an article on how you can explore your data with YOLOPandas and Comet, and you can find the article here. Because prompt engineering is fundamentally different from training machine learning models, Comet has released a new SDK tailored to this use case: comet-llm.

Check out the Comet LLMOps tool.

In this article, you will learn how to log YOLOPandas prompts with comet-llm, track the cost of the tokens used in USD ($), and log your metadata. This is a helpful guide if you want to learn more about LLMs and how to use the new comet-llm tool.

Prerequisites

- YOLOPandas

- comet-llm

- A Comet account (you can sign up here).

YOLOPandas lets you specify commands with natural language and execute them directly on Pandas objects. On the other hand, comet-llm is a tool to log and visualize your LLM prompts and chains. You can use comet-llm to identify effective prompt strategies, streamline your troubleshooting, and ensure reproducible workflows!

Note: Experiment Tracking projects and LLM projects are mutually exclusive (for now). It is not possible to log prompts to an Experiment Management project using the comet_llm SDK, and it is not possible to log an experiment to an LLM project using the comet_ml SDK.

Installation

You can install the SDK and YOLOPandas with pip, the Python package installer:

```bash
# Install comet-llm
pip install comet_llm

# Install YOLOPandas
pip install yolopandas
```

Import libraries

After installation, import both the SDK and the library into your notebook.

Add your OpenAI API key as an environment variable; you can find your keys here.
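A minimal sketch of this step, assuming the key is read from the standard OPENAI_API_KEY environment variable, which the LangChain OpenAI integration used under the hood by YOLOPandas looks for by default. The helper name set_openai_api_key is hypothetical. For the imports themselves, the YOLOPandas README at the time of writing uses `from yolopandas import pd` (pandas with the `.llm` accessor registered) alongside `import comet_llm`.

```python
import os
from getpass import getpass


def set_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Hypothetical helper: prompt for the OpenAI key only if it is not already set."""
    key = os.environ.get(env_var)
    if not key:
        # getpass hides the input, so the key does not end up in the notebook output
        key = getpass(f"Enter your {env_var}: ")
        os.environ[env_var] = key
    return key
```

Call set_openai_api_key() once near the top of the notebook; if the variable is already exported in your shell, it is left untouched and no prompt appears.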

Initialize comet-llm

If you don’t have one already, create your free Comet account and grab your API key from the account settings page. You will also need to create a new project: set the project type to Large Language Models and click the Create button.
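A sketch of the initialization, assuming comet_llm.init accepts api_key, workspace and project keyword arguments as documented at the time of writing. The helper init_comet_llm and reading the key from COMET_API_KEY are our own choices, and the init_fn parameter exists only so the helper can be exercised without a Comet connection.

```python
import os
from typing import Callable, Optional


def init_comet_llm(
    project: str,
    workspace: Optional[str] = None,
    init_fn: Optional[Callable[..., None]] = None,
) -> None:
    """Hypothetical helper: point comet-llm at an LLM project.

    The API key is read from COMET_API_KEY so it never appears in the notebook.
    """
    api_key = os.environ.get("COMET_API_KEY")
    if not api_key:
        raise RuntimeError("Set COMET_API_KEY to the key from your Comet account settings")
    if init_fn is None:
        # Imported lazily so the helper can be defined before comet_llm is installed
        import comet_llm
        init_fn = comet_llm.init
    init_fn(api_key=api_key, workspace=workspace, project=project)
```

In a notebook you would call something like init_comet_llm(project="yolopandas-prompts"), where the project name is a placeholder for the LLM project you created.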

With comet-llm initialized in your notebook, let’s look at a quick example of how to log a prompt using the comet-llm SDK.

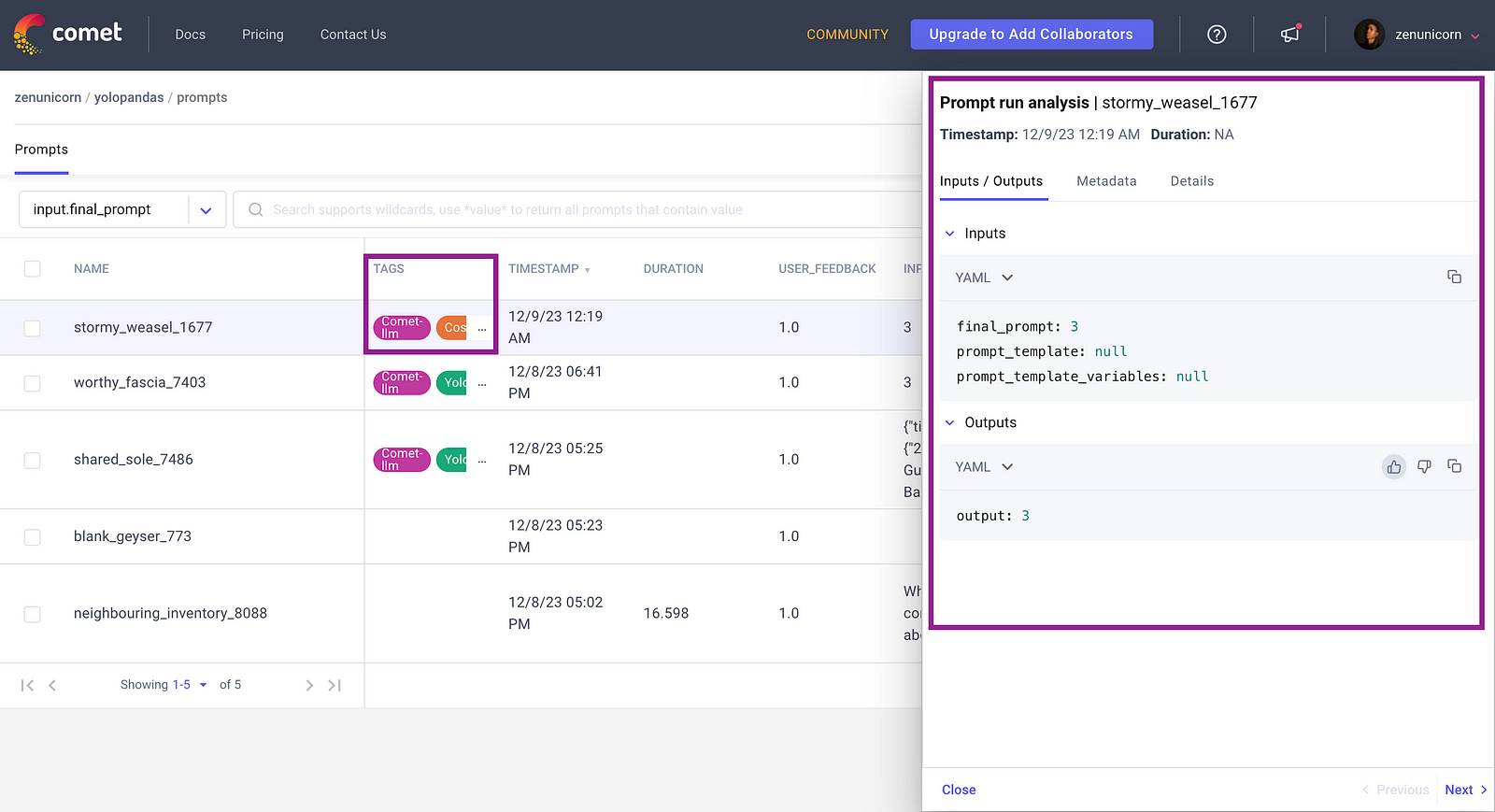

Through the log_prompt function, the prompt, its associated response, and metadata such as token usage, total tokens, and the model were logged to the LLM project dashboard.

Import dataset

The dataset [source: Kaggle] contains movie titles along with other information: title, type, description, release_year, age_certification, runtime, genres, production_countries, seasons, imdb_id, imdb_score, imdb_votes, tmdb_popularity, and tmdb_score.
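A sketch of loading the data, assuming the Kaggle file has been downloaded locally; the helper names and the file name titles.csv in the usage line are hypothetical. Checking the columns listed above before querying catches a wrong download before any tokens are spent.

```python
from typing import Iterable, List

# Columns listed in the dataset description above
EXPECTED_COLUMNS = [
    "title", "type", "description", "release_year", "age_certification",
    "runtime", "genres", "production_countries", "seasons", "imdb_id",
    "imdb_score", "imdb_votes", "tmdb_popularity", "tmdb_score",
]


def missing_columns(columns: Iterable[str]) -> List[str]:
    """Return the expected columns that are absent from a loaded file."""
    present = set(columns)
    return [c for c in EXPECTED_COLUMNS if c not in present]


def load_movie_reviews(path: str):
    """Load the CSV with YOLOPandas' pandas, which registers the .llm accessor."""
    # Lazy import, following the YOLOPandas README's `from yolopandas import pd`
    from yolopandas import pd
    df = pd.read_csv(path)
    missing = missing_columns(df.columns)
    if missing:
        raise ValueError(f"Dataset is missing columns: {missing}")
    return df
```

Usage: movie_reviews = load_movie_reviews("titles.csv").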

Let’s query the dataset: for each movie, we want to count the number of reviews and the average score, and show the five with the most reviews. Set yolo=True, which runs the generated code without asking for confirmation, and assign the result to a variable.

```python
# yolo=True runs the code YOLOPandas generates without a confirmation prompt
highest_reviews_prompt = movie_reviews.llm.query(
    "For each movie, count the number of reviews and their average score. "
    "Show the 5 with the highest reviews.",
    yolo=True,
)
```

We will create a function that accepts three parameters: user_prompt, tags, and metadata.

Finally, we will call the function, passing in the variables created above.
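A minimal sketch of such a function, assuming it runs the YOLOPandas query itself and logs the result with comet_llm.log_prompt (prompt, output, tags and metadata keyword arguments as documented at the time of writing). The name log_yolopandas_prompt is hypothetical, and the keyword-only df and log_fn parameters are our additions: df avoids relying on a global DataFrame, and log_fn lets the function be tested without a Comet connection. Because it runs the query, calling it spends tokens again even if you already ran the same query.

```python
from typing import Any, Callable, Dict, List, Optional


def log_yolopandas_prompt(
    user_prompt: str,
    tags: List[str],
    metadata: Dict[str, Any],
    *,
    df: Any,
    log_fn: Optional[Callable[..., Any]] = None,
) -> Any:
    """Run a natural-language query on df with YOLOPandas and log it to comet-llm."""
    # yolo=True executes the generated pandas code without asking for confirmation
    result = df.llm.query(user_prompt, yolo=True)
    if log_fn is None:
        import comet_llm  # lazy import so the helper loads without the SDK installed
        log_fn = comet_llm.log_prompt
    # The query result (often a DataFrame) is logged as its string representation
    log_fn(prompt=user_prompt, output=str(result), tags=tags, metadata=metadata)
    return result
```

A call might look like log_yolopandas_prompt(prompt_text, ["yolopandas"], {"dataset": "movies"}, df=movie_reviews), where the tag and metadata values are placeholders.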

Logging YOLOPandas cost

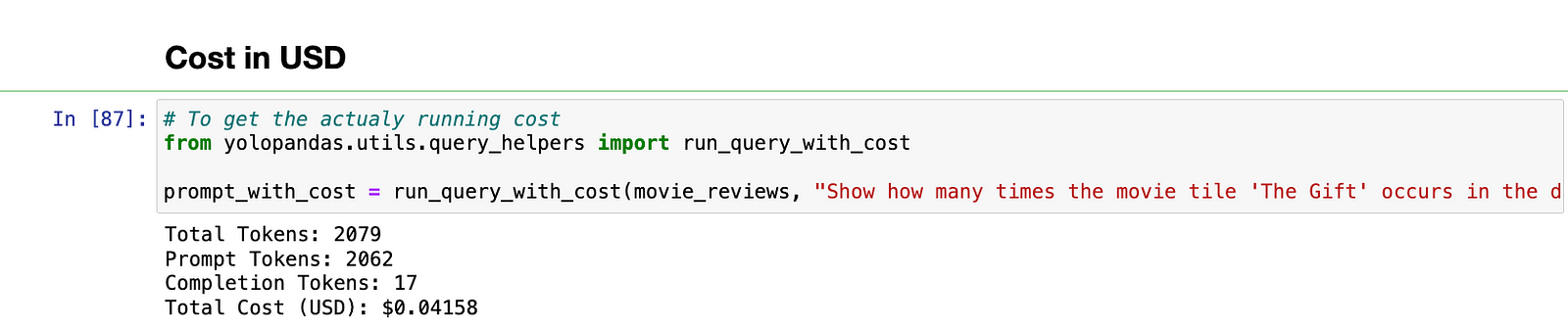

To get a better idea of how much each query costs, you can use the run_query_with_cost function from the YOLOPandas utils module, which computes the cost in USD broken down by prompt and completion tokens.
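The breakdown itself is simple arithmetic: prompt tokens and completion tokens are each billed at their own rate, expressed here per 1,000 tokens. A minimal sketch; the rates are passed in explicitly because OpenAI prices differ by model and change over time, and the numbers in the usage line are placeholders, not real prices. TokenPricing and query_cost_usd are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TokenPricing:
    """Per-1K-token prices in USD (supply your model's current rates)."""
    prompt_per_1k: float
    completion_per_1k: float


def query_cost_usd(prompt_tokens: int, completion_tokens: int, pricing: TokenPricing) -> dict:
    """Break a query's cost down into its prompt and completion parts."""
    prompt_cost = prompt_tokens / 1000 * pricing.prompt_per_1k
    completion_cost = completion_tokens / 1000 * pricing.completion_per_1k
    return {
        "total_tokens": prompt_tokens + completion_tokens,
        "prompt_cost_usd": prompt_cost,
        "completion_cost_usd": completion_cost,
        "total_cost_usd": prompt_cost + completion_cost,
    }
```

For example, query_cost_usd(800, 200, TokenPricing(0.02, 0.04)) bills 800 prompt tokens at $0.016 and 200 completion tokens at $0.008, for $0.024 in total.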

run_query_with_cost accepts three parameters: the dataset, your query, and yolo=True.

The run_query_with_cost function outputs the total tokens, prompt tokens, completion tokens, and total cost (USD). We will write a function to log the prompt to comet-llm and add these metrics as metadata.
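A sketch of that function. It reads the counts from LangChain's get_openai_callback context manager rather than from run_query_with_cost, on the assumption that run_query_with_cost prints its numbers instead of returning them and that YOLOPandas routes its OpenAI calls through LangChain; the langchain.callbacks import path matches older LangChain releases and may differ in yours. The function name and metadata keys are hypothetical, and log_fn and callback_factory are there so it can be tested offline.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple


def log_query_with_cost(
    df: Any,
    query: str,
    tags: Optional[List[str]] = None,
    log_fn: Optional[Callable[..., Any]] = None,
    callback_factory: Optional[Callable[[], Any]] = None,
) -> Tuple[Any, Dict[str, Any]]:
    """Run a YOLOPandas query, capture token usage, and log it to comet-llm as metadata."""
    if callback_factory is None:
        # Assumption: LangChain's OpenAI callback sees the calls YOLOPandas makes
        from langchain.callbacks import get_openai_callback
        callback_factory = get_openai_callback
    if log_fn is None:
        import comet_llm  # lazy import so the helper loads without the SDK installed
        log_fn = comet_llm.log_prompt

    # Token counters accumulate on cb while the query runs inside the block
    with callback_factory() as cb:
        result = df.llm.query(query, yolo=True)

    metadata = {
        "total_tokens": cb.total_tokens,
        "prompt_tokens": cb.prompt_tokens,
        "completion_tokens": cb.completion_tokens,
        "total_cost_usd": cb.total_cost,
    }
    log_fn(prompt=query, output=str(result), tags=tags or [], metadata=metadata)
    return result, metadata
```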

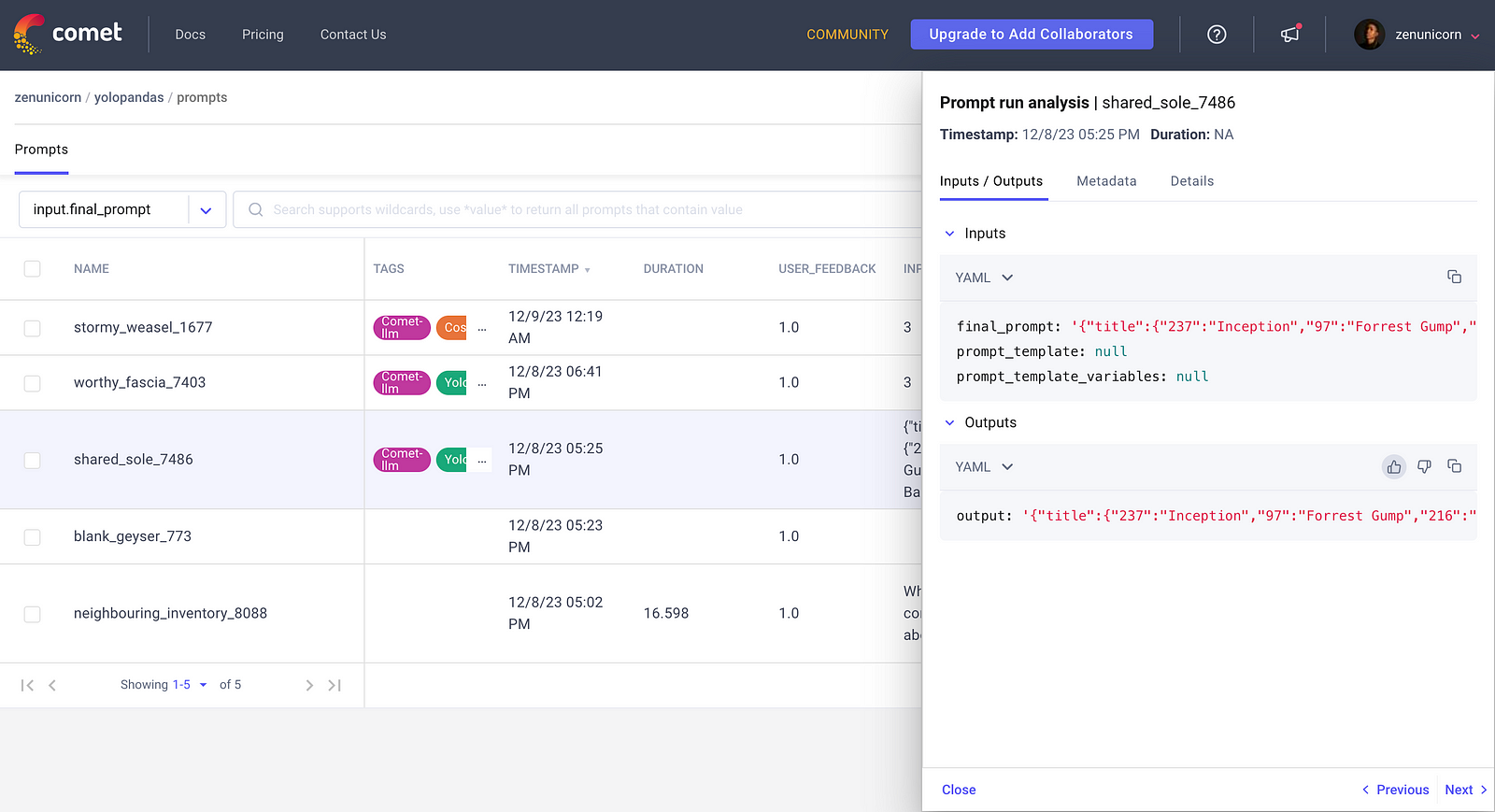

As you can see, we were able to log each YOLOPandas prompt, its metadata, and its running cost to Comet using the comet-llm SDK. You can also log your prompt template and variables, which can be viewed in the sidebar by clicking on a specific row of the table.
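A sketch of logging a template alongside the filled-in prompt, assuming comet_llm.log_prompt accepts prompt_template and prompt_template_variables keyword arguments as documented at the time of writing; verify them against your installed version. The helper name and the example template are hypothetical.

```python
from typing import Any, Callable, Dict, Optional


def log_templated_prompt(
    template: str,
    variables: Dict[str, Any],
    output: str,
    log_fn: Optional[Callable[..., Any]] = None,
) -> str:
    """Fill a prompt template and log the template and its variables with the prompt."""
    # str.format fills {placeholders} in the template from the variables dict
    prompt = template.format(**variables)
    if log_fn is None:
        import comet_llm  # lazy import so the helper loads without the SDK installed
        log_fn = comet_llm.log_prompt
    log_fn(
        prompt=prompt,
        output=output,
        prompt_template=template,
        prompt_template_variables=variables,
    )
    return prompt
```

Keeping the template separate from its variables makes it easy to compare runs that reuse the same template with different inputs.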

You can view a demo project here.