Hyperparameter tuning is one of the most important tasks in the lifecycle of a Data Science project because it strongly affects the performance of a Machine Learning model.

Many tools and strategies can be used to perform hyperparameter tuning, including (but not limited to) the following well-known Python libraries:

- Tree-based Pipeline Optimization Tool (TPOT)

- Hyperopt-Sklearn

- Auto-Sklearn

In this article, I focus on the Comet Optimizer, provided by Comet. Unlike the libraries listed above, the Optimizer is already integrated with Comet Experiments, so the results of each test can be visualized directly in Comet.

Comet is a platform for Machine Learning experimentation, which provides a variety of features. You can track experiments and their results, collaborate with other users, and optimize experiments using Comet’s algorithms. When using Comet, you can compare datasets and code changes as they relate to experiments. Trying to find the best dataset or the best model parameters? Comet can help.

In this article, I describe how to use Comet for hyperparameter tuning. The article is organized as follows:

- Overview of Comet Optimizer

- Configuration of Parameters

- Running Experiments

- Show Results

Overview of Comet Optimizer

The Comet Optimizer tunes the hyperparameters of a model by maximizing or minimizing a particular metric. It supports three optimization algorithms: Grid, Random, and Bayes. In addition, Comet provides a mechanism to specify your own optimization algorithm. For details about each optimization algorithm, you can refer to the official Comet documentation.
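To make the difference between the first two strategies concrete, here is a minimal sketch in plain Python. It is not Comet's implementation: the search space and the function names `grid_search` and `random_search` are hypothetical and exist only for illustration. Grid search enumerates every combination, while random search draws a fixed number of combinations at random. A Bayesian strategy, not sketched here, would additionally use the results of earlier trials to choose the next combination.

```python
import itertools
import random

# Hypothetical search space, used only for illustration.
space = {
    "n_neighbors": [3, 4, 5],
    "weights": ["uniform", "distance"],
}


def grid_search(space):
    """Yield every combination of values, in a fixed order."""
    names = list(space)
    for values in itertools.product(*(space[name] for name in names)):
        yield dict(zip(names, values))


def random_search(space, n_trials, seed=0):
    """Yield n_trials combinations, drawing each value independently at random."""
    rng = random.Random(seed)
    for _ in range(n_trials):
        yield {name: rng.choice(values) for name, values in space.items()}


grid_combos = list(grid_search(space))
random_combos = list(random_search(space, n_trials=4))

print(len(grid_combos))    # 3 values x 2 values = 6 combinations
print(len(random_combos))  # 4 combinations, possibly with repeats
```

Grid search is exhaustive but grows multiplicatively with each new parameter, whereas random search lets you fix the budget up front.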

The Optimizer class is the main class for hyperparameter tuning in Comet. I assume that you have already installed the comet_ml Python package. If you have not installed it yet, you can refer to my previous article.

Before creating an Optimizer, you should specify some configuration parameters, which include the optimization algorithm, the metric to be maximized/minimized, the number of trials, and the parameters to be tested:

    config = {
        "algorithm": "bayes",
        "spec": {
            "maxCombo": 0,
            "objective": "minimize",
            "metric": "loss",
            "minSampleSize": 100,
            "retryLimit": 20,
            "retryAssignLimit": 0,
        },
        "trials": 1,
        "parameters": my_params,
        "name": "MY-OPTIMIZER",
    }
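If you build several configurations, for example to compare algorithms, a small helper can catch typos before the dictionary reaches Comet. The sketch below is plain Python and does not call Comet. The function name `make_config` is hypothetical, the lowercase spellings `"grid"` and `"random"` are assumed by analogy with `"bayes"`, and the `spec` values are simply copied from the example above, so they may not suit every algorithm.

```python
def make_config(parameters, algorithm="bayes", metric="loss",
                objective="minimize", name="MY-OPTIMIZER", trials=1):
    """Build a configuration dictionary shaped like the example above."""
    # Assumed spellings; only "bayes" appears in the original example.
    if algorithm not in {"grid", "random", "bayes"}:
        raise ValueError(f"unknown algorithm: {algorithm!r}")
    if objective not in {"minimize", "maximize"}:
        raise ValueError(f"unknown objective: {objective!r}")
    return {
        "algorithm": algorithm,
        "spec": {
            "maxCombo": 0,
            "objective": objective,
            "metric": metric,
            "minSampleSize": 100,
            "retryLimit": 20,
            "retryAssignLimit": 0,
        },
        "trials": trials,
        "parameters": parameters,
        "name": name,
    }


# Example: maximize precision instead of minimizing a loss.
precision_config = make_config(
    parameters={"weights": {"type": "categorical", "values": ["uniform", "distance"]}},
    metric="precision",
    objective="maximize",
)
print(precision_config["spec"]["objective"])  # maximize
```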

Configuration of Parameters

Like other hyperparameter tuning tools, the Comet Optimizer requires you to specify the range of possible values for each parameter to be tested, with a syntax that depends on the parameter type (integer, categorical, and so on). For example, an integer parameter uses the following configuration:

    {"PARAMETER-NAME":
        {"type": "integer",
         "scalingType": "linear" | "uniform" | "normal" | "loguniform" | "lognormal",
         "min": MY-MIN-VALUE,
         "max": MY-MAX-VALUE,
        }
    }

And for a categorical parameter, the following configuration should be used:

    {"PARAMETER-NAME":
        {"type": "categorical",
         "values": ["LIST", "OF", "CATEGORIES"]
        }
    }
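Writing these dictionaries by hand is error-prone, so one option is to generate them with small helper functions. The following is only a sketch: `integer_param` and `categorical_param` are hypothetical names, not part of the Comet API, and they simply return dictionaries in the two formats shown above.

```python
# Scaling types listed in the integer template above.
SCALING_TYPES = {"linear", "uniform", "normal", "loguniform", "lognormal"}


def integer_param(min_value, max_value, scaling_type="linear"):
    """Return an integer parameter specification."""
    if scaling_type not in SCALING_TYPES:
        raise ValueError(f"unknown scalingType: {scaling_type!r}")
    if min_value > max_value:
        raise ValueError("min_value must not exceed max_value")
    return {
        "type": "integer",
        "scalingType": scaling_type,
        "min": min_value,
        "max": max_value,
    }


def categorical_param(values):
    """Return a categorical parameter specification."""
    values = list(values)
    if not values:
        raise ValueError("a categorical parameter needs at least one value")
    return {"type": "categorical", "values": values}


example_params = {
    "n_neighbors": integer_param(3, 8),
    "weights": categorical_param(["uniform", "distance"]),
}
print(example_params["n_neighbors"]["max"])  # 8
```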

For example, to tune a scikit-learn K-Neighbors Classifier you could use the following parameter configuration:

    my_params = {
        'n_neighbors': {
            "type": "integer",
            "scalingType": "linear",
            "min": 3,
            "max": 8,
        },
        'weights': {
            "type": "categorical",
            "values": ['uniform', 'distance'],
        },
        'metric': {
            "type": "categorical",
            "values": ['euclidean', 'manhattan', 'chebyshev', 'minkowski'],
        },
        'algorithm': {
            "type": "categorical",
            "values": ['ball_tree', 'kd_tree'],
        },
    }
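It can help to know how large this search space is before launching any experiment. The sketch below counts the distinct combinations in plain Python. The helper `count_values` is hypothetical, and it assumes that both `min` and `max` are inclusive for integer parameters, which you should check against the Comet documentation.

```python
import math

my_params = {
    "n_neighbors": {"type": "integer", "scalingType": "linear", "min": 3, "max": 8},
    "weights": {"type": "categorical", "values": ["uniform", "distance"]},
    "metric": {
        "type": "categorical",
        "values": ["euclidean", "manhattan", "chebyshev", "minkowski"],
    },
    "algorithm": {"type": "categorical", "values": ["ball_tree", "kd_tree"]},
}


def count_values(spec):
    """Number of distinct values a single parameter can take."""
    if spec["type"] == "integer":
        # Assumption: both bounds are inclusive.
        return spec["max"] - spec["min"] + 1
    if spec["type"] == "categorical":
        return len(spec["values"])
    raise ValueError(f"unsupported parameter type: {spec['type']!r}")


total = math.prod(count_values(spec) for spec in my_params.values())
print(total)  # 6 * 2 * 4 * 2 = 96
```

Under that assumption, an exhaustive search would need 96 experiments, which is a useful number to compare against your budget.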

Running Experiments

You can create an Optimizer object as follows:

    from comet_ml import Optimizer

    opt = Optimizer(config, api_key="MY-API-KEY", project_name="MY-PROJECT-NAME", workspace="MY-WORKSPACE")
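Hard-coding an API key in a script makes it easy to leak by accident. A common alternative is to read the credentials from environment variables and pass them on as keyword arguments. The sketch below shows the idea in plain Python. The helper `comet_settings` is hypothetical, and the variable names `COMET_API_KEY`, `COMET_PROJECT_NAME`, and `COMET_WORKSPACE` are assumptions chosen for this example.

```python
import os


def comet_settings(env=os.environ):
    """Collect the Optimizer keyword arguments from environment variables."""
    api_key = env.get("COMET_API_KEY")
    if not api_key:
        raise RuntimeError("Set COMET_API_KEY before creating the Optimizer")
    return {
        "api_key": api_key,
        "project_name": env.get("COMET_PROJECT_NAME", "MY-PROJECT-NAME"),
        "workspace": env.get("COMET_WORKSPACE", "MY-WORKSPACE"),
    }


# A fake environment keeps this example independent of your machine.
settings = comet_settings({"COMET_API_KEY": "MY-API-KEY"})
print(sorted(settings))  # ['api_key', 'project_name', 'workspace']

# With comet_ml installed, the settings could then be passed on like this:
# opt = Optimizer(config, **settings)
```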

A Comet Optimizer creates a different experiment for each combination of parameters to be tested. Thus, you can iterate over the list of experiments and fit a new model for each experiment.

For example, for the K-Neighbors classifier of the previous example, you can write the following code:

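    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.metrics import f1_score, precision_score, recall_score

    # X_train, y_train, X_test, and y_test are assumed to be defined already.
    for experiment in opt.get_experiments():
        # Build the model from the parameters chosen for this experiment.
        model = KNeighborsClassifier(
            n_neighbors=experiment.get_parameter("n_neighbors"),
            weights=experiment.get_parameter("weights"),
            metric=experiment.get_parameter("metric"),
            algorithm=experiment.get_parameter("algorithm")
        )
        model.fit(X_train, y_train)

        # fit() returns the fitted estimator, not a loss value. As a stand-in
        # (an assumption of this example), log the test error rate
        # (1 - accuracy) as the "loss" that the Optimizer minimizes.
        loss = 1 - model.score(X_test, y_test)
        experiment.log_metric("loss", loss)

        # Log additional metrics for later comparison in Comet.
        y_pred = model.predict(X_test)
        f1 = f1_score(y_test, y_pred)
        precision = precision_score(y_test, y_pred)
        recall = recall_score(y_test, y_pred)
        experiment.log_metric("f1", f1)
        experiment.log_metric("precision", precision)
        experiment.log_metric("recall", recall)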

In the previous example, for each experiment, precision, recall, and f1-score are calculated and logged through the log_metric() function.
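If you want to see what these three metrics measure, the following sketch computes them by hand for binary labels. It is a simplified illustration, not the scikit-learn implementation: the function name `binary_scores` and the toy labels are hypothetical, and the function returns 0.0 whenever a denominator would be zero.

```python
def binary_scores(y_true, y_pred, positive=1):
    """Return (precision, recall, f1) for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Precision: share of predicted positives that are correct.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: share of actual positives that were found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy labels: 3 true positives, 1 false positive, 1 false negative.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]

precision, recall, f1 = binary_scores(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```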

Show Results

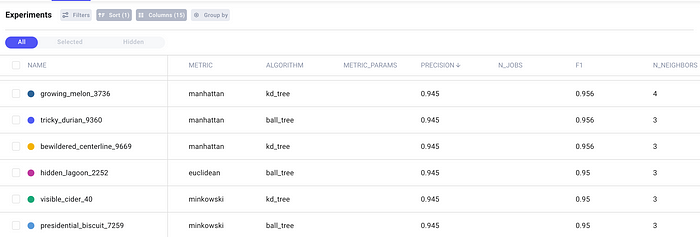

When all the experiments finish, you can view results in Comet. For each experiment, you can view the configuration parameter as well as the performance metric, as shown in the following Figure:

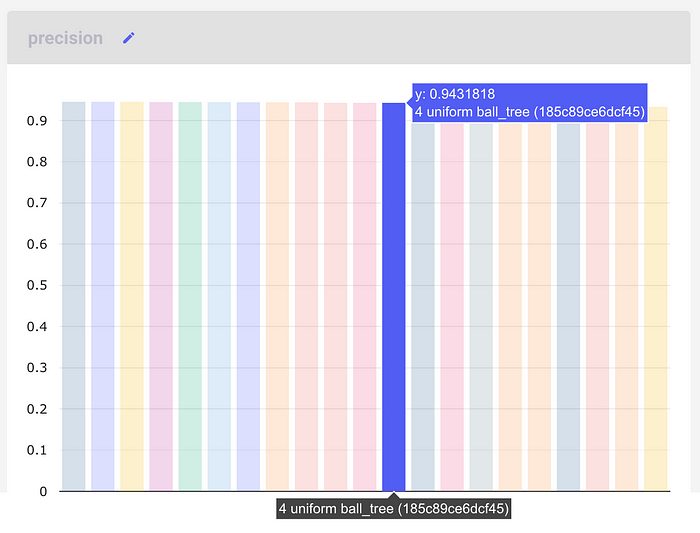

In addition, you can build a panel with a specific metric for all the experiments. For example, the following Figure shows the precision for all the experiments:

I have highlighted the specific bar with the following parameters:

- n_neighbors = 4

- weights = uniform

- algorithm = ball_tree

By comparing all the results, you can easily choose the best parameters for your model, and use them in production.
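The same comparison can be done programmatically once you have the parameters and metrics of each experiment in hand. The sketch below assumes you have collected them into a list of dictionaries. The records and their precision values are made-up numbers for illustration only, not results from the experiments in this article.

```python
# Hypothetical records: the numbers are invented for this example.
results = [
    {"params": {"n_neighbors": 3, "weights": "uniform"}, "precision": 0.70},
    {"params": {"n_neighbors": 5, "weights": "distance"}, "precision": 0.80},
    {"params": {"n_neighbors": 7, "weights": "uniform"}, "precision": 0.75},
]

# Precision should be as high as possible, so pick the maximum.
best = max(results, key=lambda record: record["precision"])

print(best["params"])  # {'n_neighbors': 5, 'weights': 'distance'}
```

For a metric that should be minimized, such as a loss, replace `max` with `min`.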

Summary

Congratulations! You have just learned how to tune hyperparameters in Comet using the ready-to-use Optimizer class. For more details, you can read the Comet documentation.

There are many other things you can do with Comet, such as using it in conjunction with GitLab, as I described in my previous article.

Now you just have to try it — have fun!