Overview

Let us start by asking ourselves some questions: Have you ever wondered how Google’s translation app can instantly convert entire paragraphs between two languages?

How do Netflix and YouTube know what movies or videos we like, and how do they provide suitable recommendations? Or how do autonomous vehicles even become a possibility?

Other practical examples of deep learning include virtual assistants, chatbots, robotics, image restoration, and natural language processing (NLP). Now that we have our questions, let us start answering them.

This article will discuss deep learning using Keras and, most importantly, how we will log our models to Comet.

The code for this tutorial is based on this notebook by Ken Jee from the Z by HP Unlocked Challenge 4: Image Classification.

What is Deep Learning?

Experience is the best teacher. The more we learn, the richer our experiences. The same is true for machines running AI hardware and software in the deep learning field of artificial intelligence (AI). The data that machines gather defines the experiences through which they can learn, and the quantity and quality of data determine how much they can learn.

Deep learning is a branch of machine learning. Many typical machine learning algorithms reach a limit in what they can learn regardless of how much data they receive. Deep learning systems, in contrast, can keep improving with access to more data, which is the machine equivalent of more experience. Once they have acquired sufficient experience through deep learning, machines can be trained to perform specific tasks such as driving a car, spotting weeds in a field of crops, diagnosing illnesses, and checking machinery for flaws.

Neural networks are inspired by the organization of the human brain. By repeatedly examining data according to a predetermined logical framework, deep learning systems try to reach the same conclusions as people. Deep learning does this with a multi-layered neural network.

We will be using Keras to build a CNN-based image classifier.

Keras is a user-friendly toolkit that substantially lowers the barrier to entry for deep learning research and development. The Keras team deliberately built this low barrier into its design to democratize machine learning. CNNs, a subset of deep neural networks made up of several layers of artificial neurons, are frequently used to analyze visual information.

Keras

Keras is an open-source deep learning library developed by François Chollet, a deep learning researcher at Google. Its user-friendly design principles let users move quickly from code to a working product. It was built to be effective, dependable, and accessible to a broad audience, and as a result it is used widely in both business and academia. It also offers comprehensive developer guides.

Convolutional neural networks (CNNs) are a type of artificial neural network that has become popular in many computer vision applications and is attracting interest in other domains.

A convolutional neural network is built from several kinds of components, including convolution, pooling, and fully connected layers. It is designed to learn spatial hierarchies of features automatically and adaptively through backpropagation.
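To make the convolution and pooling steps concrete, here is a minimal pure-Python sketch. It is an illustration under simplifying assumptions (a single channel, no bias, a hand-picked kernel instead of a learned one), not how Keras implements these layers. The function names, the toy image, and the kernel are all hypothetical. Like deep learning frameworks, it slides the kernel without flipping it.

```python
# Illustrative only: real CNN layers add channels, biases, and learned kernels.

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A 5x5 toy image with a vertical edge between columns 1 and 2 (made-up data).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A hand-picked kernel that responds to left-to-right increases in brightness.
edge_kernel = [[-1, 1], [-1, 1]]

features = conv2d_valid(image, edge_kernel)  # 4x4 feature map
pooled = max_pool2d(features)                # 2x2 map after pooling

print(features[0])  # [0, 2, 0, 0]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map is non-zero only where the edge sits, and pooling shrinks the map while keeping that response. In a trained CNN the kernel values are learned through backpropagation rather than chosen by hand.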


Prerequisites

To follow along with this article, we need the following installed on our local machine.

- Pandas

- NumPy

- TensorFlow

- KerasTuner (keras_tuner)

- Comet (comet_ml)

- Matplotlib
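Before going further, it can help to confirm that these libraries are importable. The following is a small sketch that assumes the import names used later in this article (for example keras_tuner and comet_ml, which to our knowledge are published on PyPI as keras-tuner and comet_ml). The helper find_missing is a hypothetical name introduced only for this illustration.

```python
import importlib.util

# Import names used in this tutorial; matplotlib is needed for the plots.
REQUIRED_MODULES = [
    "pandas", "numpy", "tensorflow", "keras_tuner", "comet_ml", "matplotlib",
]


def find_missing(module_names):
    """Return the module names that cannot be located in this environment."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


missing = find_missing(REQUIRED_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```

Anything reported as missing can then be installed with the package manager of your choice.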

With that said, let us get started by importing the libraries we need. We import Comet alongside the other libraries so that we can log important data as the project proceeds.

# Import comet_ml before TensorFlow/Keras, as Comet recommends for automatic logging
import comet_ml
import warnings
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers
from tensorflow.keras.preprocessing.image import ImageDataGenerator
import keras_tuner as kt

Next, to make the experiment reproducible, we set a seed value. We also turn off warnings to keep the notebook output clean.

seed = 1842

tf.random.set_seed(seed)

np.random.seed(seed)

warnings.simplefilter('ignore')
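Why does fixing a seed help? A pseudo-random generator started from the same seed produces the same sequence every time. The sketch below shows this with Python's standard random module as a stand-in; the same idea is what np.random.seed and tf.random.set_seed rely on. The helper draw_numbers is hypothetical, and full reproducibility of a training run can also depend on factors outside the seed, such as hardware and library versions.

```python
import random


def draw_numbers(seed, count=3):
    """Seed a private generator and return `count` pseudo-random integers."""
    rng = random.Random(seed)
    return [rng.randint(0, 999) for _ in range(count)]


first_run = draw_numbers(1842)
second_run = draw_numbers(1842)

# Same seed, same sequence: the two runs are identical.
print(first_run == second_run)  # True
```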

Data

We will load the data into our notebook. The task is to build a machine learning model that classifies pictures of the flower “La Eterna.” To get a baseline score, we will use a CNN model. An initial look indicates that the dataset is small for a deep learning task. The photos we use for training are in the data_cleaned/Train subfolder.

image_generator = ImageDataGenerator(rescale=1/255, validation_split=0.2)

#Train & Validation Split

train_dataset = image_generator.flow_from_directory(batch_size=32,

directory='data_cleaned/Train',

shuffle=True,

target_size=(224, 224),

subset="training",

class_mode='categorical')

validation_dataset = image_generator.flow_from_directory(batch_size=32,

directory='data_cleaned/Train',

shuffle=True,

target_size=(224, 224),

subset="validation",

class_mode='categorical')

#Organize data for our predictions

image_generator_prediction = ImageDataGenerator(rescale=1/255)

prediction_data = image_generator_prediction.flow_from_directory(

directory='data_cleaned/scraped_images',

shuffle=False,

target_size=(224, 224),

class_mode=None)
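Two arguments in the generator above do most of the preparation work: rescale=1/255 multiplies each pixel value by that factor so values fall between 0 and 1, and validation_split=0.2 reserves a fifth of the images for validation. The sketch below imitates both on plain Python lists. The file names are made up, and exactly which files Keras assigns to each subset is an assumption here, so treat this only as an illustration of the idea.

```python
def rescale(pixels, factor=1 / 255):
    """Multiply every raw 0-255 pixel value by `factor`."""
    return [value * factor for value in pixels]


def split_files(filenames, validation_split=0.2):
    """Reserve a fixed fraction of the files for validation; the rest train."""
    n_val = int(len(filenames) * validation_split)
    return filenames[n_val:], filenames[:n_val]


# Hypothetical file names, for illustration only.
files = [f"flower_{i:02d}.jpg" for i in range(10)]
train_files, val_files = split_files(files)

print(len(train_files), len(val_files))  # 8 2
print(rescale([0, 128, 255]))            # values now lie between 0 and 1
```

Because both generators above read from the same directory with the same validation_split, the subset argument is what keeps the training and validation images separate.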

Let’s plot flowers for the first batch:

batch_1_img = train_dataset[0]

# Loop over however many images the first batch actually holds (at most 32)
for i in range(len(batch_1_img[0])):
    img = batch_1_img[0][i]
    lab = batch_1_img[1][i]
    plt.imshow(img)
    plt.title(lab)
    plt.axis('off')
    plt.show()

To capture valuable data about the experiment, such as its parameters, metrics, and other important details, we have to start logging right here.

experiment = comet_ml.Experiment(

api_key="API-Key",

project_name="Project name",

workspace="Workspace name",

log_code=True)

We use the Comet Experiment class and pass in the api_key, project_name, workspace, and log_code parameters.
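Hard-coding an API key in a notebook makes it easy to leak when the notebook is shared. One common alternative is to read the settings from environment variables. The sketch below assumes the variable names COMET_API_KEY, COMET_PROJECT_NAME, and COMET_WORKSPACE, which we believe match Comet's configuration conventions; please confirm them against the documentation for your SDK version. The helper comet_settings_from_env is a hypothetical name.

```python
import os


def comet_settings_from_env(environ=os.environ):
    """Collect Experiment keyword arguments from environment variables.

    Variables that are not set are left out, so the caller decides
    how to handle anything that is missing.
    """
    mapping = {
        "api_key": "COMET_API_KEY",
        "project_name": "COMET_PROJECT_NAME",
        "workspace": "COMET_WORKSPACE",
    }
    return {arg: environ[var] for arg, var in mapping.items() if var in environ}


settings = comet_settings_from_env()
# Assumed usage: experiment = comet_ml.Experiment(log_code=True, **settings)
print(sorted(settings))  # names of the settings found in the environment
```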

hyperparams = {

"batch_size": 32,

"epochs": 20,

"num_nodes": 64,

"activation": 'relu',

"optimizer": 'adam',

}

experiment.log_parameters(hyperparams)

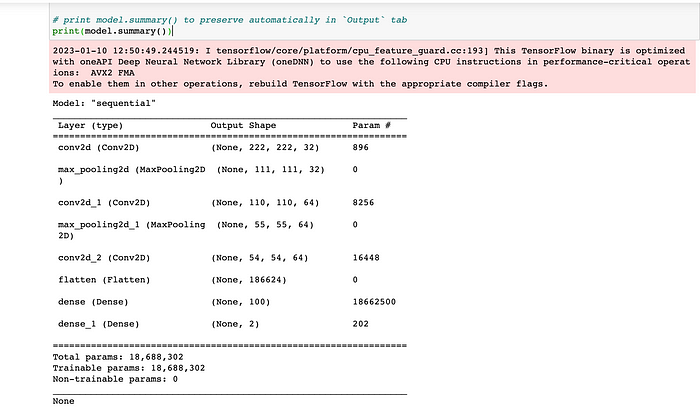

Building the CNN

When building the CNN, we must be careful with the input and output shapes. The input shape is (224, 224, 3), meaning each image has a height of 224, a width of 224, and 3 channels. Red, green, and blue are the three colour channels of a picture.

model = keras.models.Sequential([
    keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(224, 224, 3)),
    keras.layers.MaxPooling2D(),
    keras.layers.Conv2D(64, (2, 2), activation='relu'),
    keras.layers.MaxPooling2D(),
    keras.layers.Conv2D(64, (2, 2), activation='relu'),
    keras.layers.Flatten(),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(2, activation='softmax')  # one output per class
])

# Print the model summary (summary() prints directly and returns None)
model.summary()
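It is worth checking by hand how the spatial size shrinks through the layers above. The sketch below assumes the Keras defaults for these layers: stride 1 with no padding for Conv2D, and a 2 x 2 window for MaxPooling2D. The helper names are hypothetical, and the result should match the output shapes reported by the model summary.

```python
def conv_output(size, kernel):
    """Spatial size after a stride-1 convolution without padding."""
    return size - kernel + 1


def pool_output(size, pool=2):
    """Spatial size after non-overlapping max pooling (remainder dropped)."""
    return size // pool


size = 224
size = conv_output(size, 3)  # Conv2D(32, (3, 3)) -> 222
size = pool_output(size)     # MaxPooling2D()     -> 111
size = conv_output(size, 2)  # Conv2D(64, (2, 2)) -> 110
size = pool_output(size)     # MaxPooling2D()     -> 55
size = conv_output(size, 2)  # Conv2D(64, (2, 2)) -> 54

flattened = size * size * 64  # 64 feature maps of 54 x 54 values each
print(size, flattened)        # 54 186624
```

The Flatten layer therefore hands 186,624 values to the first Dense layer, which is where most of this model's weights sit.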

The model is now ready for compilation. We also define an EarlyStopping callback to end training early: it stops training once the validation loss has failed to improve for three consecutive epochs (patience=3), and restore_best_weights=True restores the weights from the best epoch.

model.compile(optimizer='adam',

loss = 'binary_crossentropy',

metrics=['accuracy'])

callback = keras.callbacks.EarlyStopping(monitor='val_loss',

patience=3,

restore_best_weights=True)
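The patience rule is easy to misread, so here is a simplified pure-Python sketch of the idea. It is not the Keras implementation: it ignores options such as min_delta and baseline, and the function name and loss values are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return (stop_epoch, best_epoch) as zero-based epoch indices."""
    best_loss = float("inf")
    best_epoch = 0
    wait = 0  # epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch  # training would stop here
    return len(val_losses) - 1, best_epoch  # every epoch was run


# Hypothetical validation losses, for illustration only.
losses = [0.90, 0.70, 0.60, 0.65, 0.62, 0.61, 0.50]
stop_epoch, best_epoch = early_stopping_epoch(losses)
print(stop_epoch, best_epoch)  # 5 2
```

In this example training stops at epoch index 5, after three epochs without beating the loss from epoch index 2, so the later improvement to 0.50 is never reached. With restore_best_weights=True, the model would keep the weights from that best epoch.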

Training the CNN

We will train our model with the training dataset from data_cleaned/Train and set the epochs to 20.

model.fit(train_dataset, epochs=20, validation_data=validation_dataset, callbacks=[callback])

Assessing the CNN performance

We will evaluate our model’s performance by checking its loss and accuracy on the validation data. Both values will be logged to Comet using experiment.log_metric().

loss, accuracy = model.evaluate(validation_dataset)

print("Loss: ", loss)

print("Accuracy: ", accuracy)

experiment.log_metric("Loss", loss, step=None, include_context=True)

experiment.log_metric("Accuracy", accuracy, step=None, include_context=True)
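As a reminder of what the accuracy figure measures, the sketch below computes it by hand from softmax outputs and one-hot labels. It assumes accuracy here means the fraction of images whose highest-probability class matches the true class. The function name and the four example predictions are hypothetical.

```python
def accuracy(predictions, labels):
    """Fraction of samples whose highest-probability class matches the label."""
    correct = 0
    for probs, one_hot in zip(predictions, labels):
        predicted_class = probs.index(max(probs))
        true_class = one_hot.index(max(one_hot))
        correct += predicted_class == true_class
    return correct / len(labels)


# Hypothetical softmax outputs and one-hot labels for four images.
predictions = [[0.9, 0.1], [0.3, 0.7], [0.6, 0.4], [0.2, 0.8]]
labels = [[1, 0], [0, 1], [0, 1], [0, 1]]

print(accuracy(predictions, labels))  # 0.75
```

Three of the four predictions pick the correct class, giving 0.75. On a small dataset like ours, a single misclassified image can shift this number noticeably, so it is worth reading alongside the loss.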

Let’s save the model.

model.save('cnn-model')

This creates a folder named cnn-model in the project folder, containing assets, keras_metadata.pb, fingerprint.pb, saved_model.pb, and variables.

Logging the model

To finish this project, the remaining steps are to log the model to Comet and end the experiment.

# log the saved model folder: log_model(name, file_or_folder)
experiment.log_model('cnn-model', 'cnn-model')

End the Experiment

#end the experiment

experiment.end()

Always call experiment.end() to end the experiment when running code in Colab or a Jupyter notebook.

And that is it; we have successfully logged our Keras deep learning experiments to Comet.

Conclusion

We have reached the end of this tutorial on logging Keras deep learning experiments to Comet. The logged experiment can be found in our dashboard, and collaborators can be added to the project to view and improve it. We covered what deep learning means, Keras, and why we used Comet, and finally we logged our experiments to Comet, an MLOps platform that enables us to track, compare, and improve our experiments and models.

Here is the link to my version of the notebook (feel free to leave a star), as well as the original notebook by Ken Jee. Also check out this amazing work by Milind Soorya on a CNN-based image classifier.