Getting Started With Comet’s LLMOps

In the rapidly evolving landscape of artificial intelligence and natural language processing, large language models (LLMs) have emerged as powerful tools for understanding, generating, and manipulating human language. They are now used across a wide range of fields, changing the way we interact with technology and opening new possibilities in communication, automation, and problem-solving. In this article, we look at what LLMs are, how they work, where they are used, and what concerns they raise, and then walk through getting started with Comet’s LLMOps tools.

What Are Large Language Models (LLMs)?

Large language models are a class of artificial intelligence models that excel at processing and generating human language. Most modern LLMs are neural networks based on the Transformer architecture, which has proven highly effective for natural language understanding and generation. LLMs are characterized by their enormous size, often billions of parameters, and by their ability to generalize and adapt to a wide range of language-related tasks.

How Do LLMs Work?

At their core, LLMs use deep learning to understand and generate text. They are trained on massive datasets drawn from diverse sources, such as books, articles, and websites, typically by learning to predict the next token (or a masked-out token) in a sequence. In doing so, the models learn patterns, relationships, and semantics within the text, which lets them capture grammar, syntax, and context, and even more nuanced aspects of language such as sentiment and tone.

A key ingredient in the success of LLMs is the attention mechanism, which lets the model weigh different parts of the input text when making predictions or generating text. Combined with the massive scale of their parameters, attention enables LLMs to capture complex dependencies and generate coherent, contextually relevant text.
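To make the attention idea concrete, here is a toy, single-head version of scaled dot-product attention, the softmax(Q K^T / sqrt(d_k)) V formulation used in Transformers, written in plain Python. It is a sketch for intuition only: the query, key, and value vectors are made-up numbers, and real LLMs add learned projections, many attention heads, masking, and optimized tensor libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    outputs = []
    for query in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d_k) for key in k]
        # Attention weights: non-negative and summing to 1.
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        outputs.append(
            [sum(w * value[c] for w, value in zip(weights, v)) for c in range(len(v[0]))]
        )
    return outputs


# Two toy "tokens" with 2-dimensional keys and values.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
print(attention([[100.0, 0.0]], keys, values))  # query aligned with the first key
print(attention([[1.0, 1.0]], keys, values))    # query equally similar to both keys
```

A query that closely matches the first key puts almost all of its weight there, so its output is essentially the first value vector; a query equally similar to both keys returns the average of the two value vectors.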

Applications of LLMs

The versatility of LLMs has led to their adoption across a wide range of applications.

1. Natural Language Understanding: LLMs can extract meaning from text, making them invaluable for tasks like sentiment analysis, text classification, and named entity recognition.

2. Text Generation: LLMs can generate human-like text, which has applications in content creation, chatbots, and even creative writing.

3. Machine Translation: They excel at language translation tasks, breaking down language barriers and facilitating global communication.

4. Question Answering: LLMs can provide answers to questions by extracting relevant information from vast amounts of text.

5. Chatbots and Virtual Assistants: LLMs can power chatbots and virtual assistants, providing human-like interactions and personalized responses to users.

6. Content Recommendation: LLMs can be used in personalized content recommendation systems, improving user experiences on websites and streaming platforms.

7. Content Generation: LLMs can generate many kinds of creative text, such as poems, code, scripts, musical pieces, emails, and letters, for purposes such as marketing, education, and entertainment.

Ethical and Social Considerations

While LLMs offer tremendous potential, they also raise important ethical and societal questions. Concerns include biases present in training data, the potential for misinformation generation, and the concentration of AI power in the hands of a few organizations. Addressing these issues is crucial to ensuring that LLMs are used responsibly and for the benefit of society.

About Comet

Comet is a machine learning platform designed to help you manage and monitor your machine learning experiments. Among its features, Comet provides a suite of tools to track and assess model performance. For a more comprehensive overview of Comet and its capabilities, see the Comet website.

Getting Started With Comet LLMOps

Comet’s LLMOps toolkit focuses on prompt management. It helps you iterate faster, identify performance bottlenecks, and visualize how prompt chains execute, all within Comet’s ecosystem.

The toolkit is built around three key areas:

1. Prompt History: When building ML products on top of large language models, keeping careful records of prompts, responses, and chains is essential. Comet’s LLMOps tool provides an intuitive interface for tracking and analyzing prompt history, so you can see how your prompts and responses evolve over time.

2. Prompt Playground: The Prompt Playground lets prompt engineers quickly experiment with different prompt templates and see how those templates behave in different contexts. Faster experimentation makes it easier to make informed decisions while iterating.

3. Prompt Usage Tracking: Working with large language models often involves calling paid APIs. Comet’s LLMOps tool tracks usage at both the project and the experiment level, giving you a detailed view of API consumption to guide resource allocation and optimization.
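Comet aggregates usage for you in its UI, but it can help to see what project-level usage tracking boils down to. The minimal local sketch below sums a usage.total_tokens metadata value per project (the same key used in the log_prompt() example later in this article) and turns it into a rough cost estimate. The record layout, the project names, and the per-1k-token price are all hypothetical, and this is not how Comet computes its usage figures internally.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical logged records; project names and counts are illustrative only.
logged = [
    {"project": "chatbot", "metadata": {"usage.total_tokens": 12}},
    {"project": "chatbot", "metadata": {"usage.total_tokens": 30}},
    {"project": "summarizer", "metadata": {"usage.total_tokens": 7}},
]


def tokens_per_project(records: List[dict]) -> Dict[str, int]:
    """Sum usage.total_tokens per project, treating a missing count as 0."""
    totals: Dict[str, int] = defaultdict(int)
    for record in records:
        totals[record["project"]] += record["metadata"].get("usage.total_tokens", 0)
    return dict(totals)


def estimated_cost(tokens: int, usd_per_1k_tokens: float) -> float:
    """Rough spend estimate; the price per 1,000 tokens depends on your provider."""
    return tokens / 1000 * usd_per_1k_tokens


totals = tokens_per_project(logged)
print(totals)
print(estimated_cost(totals["chatbot"], usd_per_1k_tokens=0.002))  # made-up rate
```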

In summary, Comet’s LLMOps toolkit is a useful resource for researchers, engineers, and developers working with large language models. It streamlines their workflow and adds transparency and efficiency to the development and fine-tuning of LLM-driven applications.

Step 1:

Log in to the Comet website. If you haven’t used the platform before, sign up for an account first.

Step 2:

Click the icon labeled ‘NEW PROJECT’ in the right-hand corner.

Step 3:

Fill in the project details, select Large Language Models as the project type, and then click CREATE.

Step 4:

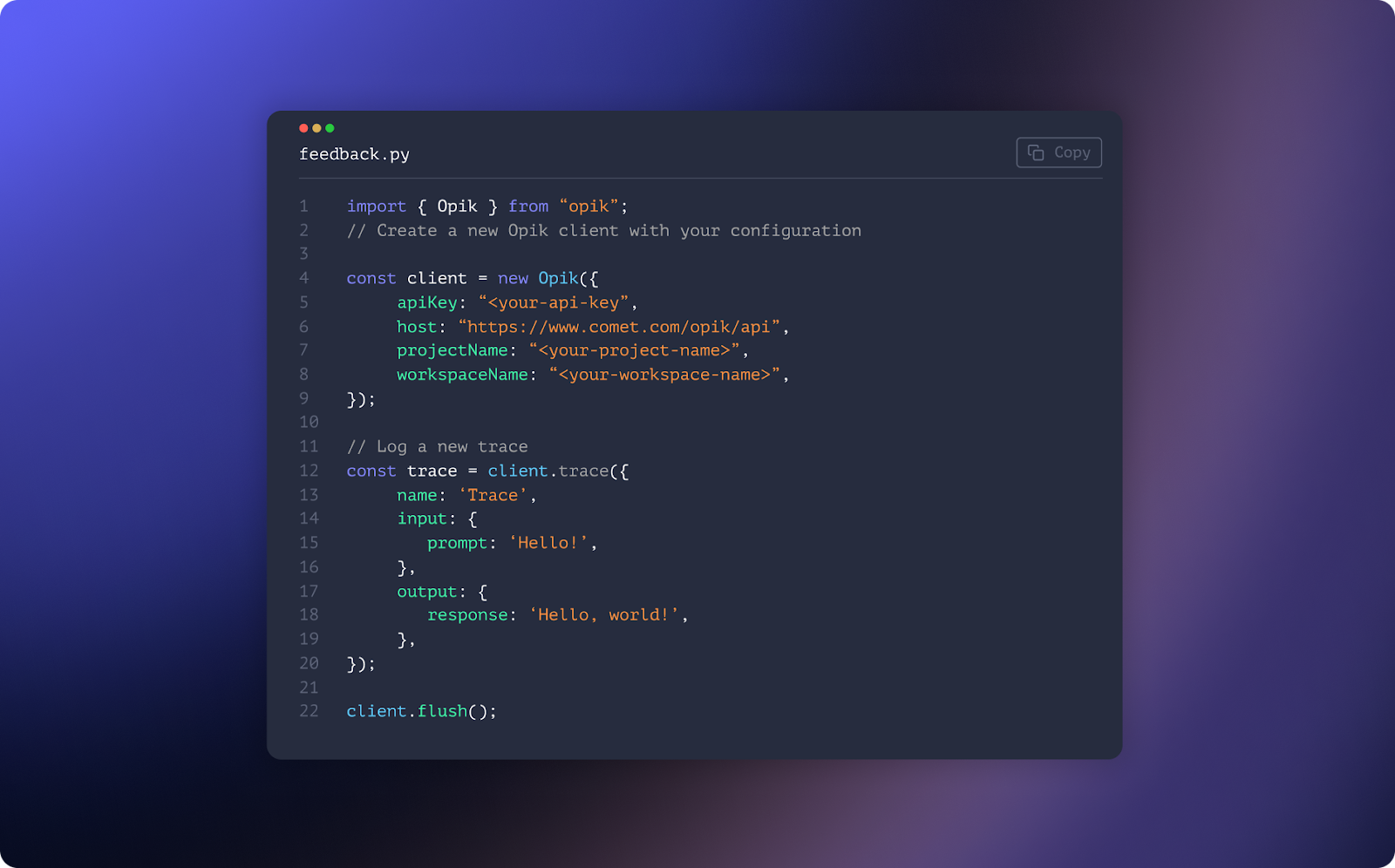

Next, install Comet’s open-source LLM SDK, comet-llm, which provides the LLMOps features used below. Install it with pip from a terminal or command line:

```shell
pip install comet-llm
```

Next, use the SDK to log prompts and responses to Comet. The log_prompt() function records a prompt together with its response and any other relevant metadata, such as token usage.
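log_prompt() only records what you pass to it, so token counts and timing have to come from your own call to the model. The sketch below times a call with time.perf_counter and packs the results into a dictionary whose keys match log_prompt()'s prompt, output, metadata, and duration arguments. fake_llm and count_tokens are hypothetical stand-ins: a real application would call its model provider and take token counts from the provider's response, and the whitespace count here will not match a real tokenizer. Recording duration in seconds is also an assumption.

```python
import time
from typing import Any, Callable, Dict


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; always returns a fixed reply.
    return " My name is Alexa."


def count_tokens(text: str) -> int:
    # Crude whitespace count for illustration only; real tokenizers differ.
    return len(text.split())


def call_and_record(prompt: str, llm: Callable[[str], str]) -> Dict[str, Any]:
    """Call `llm`, time it, and collect fields shaped like log_prompt() arguments."""
    start = time.perf_counter()
    output = llm(prompt)
    duration = time.perf_counter() - start  # elapsed seconds (assumed unit)
    prompt_tokens = count_tokens(prompt)
    completion_tokens = count_tokens(output)
    return {
        "prompt": prompt,
        "output": output,
        "metadata": {
            "usage.prompt_tokens": prompt_tokens,
            "usage.completion_tokens": completion_tokens,
            "usage.total_tokens": prompt_tokens + completion_tokens,
        },
        "duration": duration,
    }


record = call_and_record("Hey, what's your name?", fake_llm)
print(record["metadata"])
# With comet_llm installed and initialized, the record could then be logged:
# comet_llm.log_prompt(**record)
```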

Note: To avoid passing your API key and workspace with every prompt, call comet_llm.init() once at the start of your script.

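```python
import comet_llm

# Configure the SDK once per session. The api_key and workspace arguments can
# also be passed here so they don't have to be repeated on every call.
comet_llm.init(project="Alexa-cometllm-prompts")
```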

Then log your first prompt:

```python
comet_llm.log_prompt(
    prompt="Hey, what's your name?",
    output=" My name is Alexa, and I'm your new best friend.",
    # An explicit project here is used for this call instead of the init() default.
    project="Alexa-chatbot",
)
```

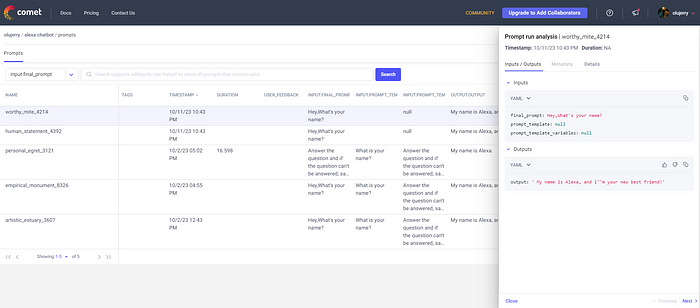

The prompt and response get logged into our Comet dashboard:

Now let’s log a more complex prompt that also records the prompt template, its variables, token-usage metadata, and the call duration:

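```python
comet_llm.log_prompt(
    # The final prompt text that was sent to the model
    prompt="Answer the question and if the question can't be answered, say \"I don't know\"\n\n---\n\nQuestion: What is your name?\nAnswer:",
    # The template the prompt was built from; {{question}} is filled in from
    # prompt_template_variables (the question value already ends with "?")
    prompt_template="Answer the question and if the question can't be answered, say \"I don't know\"\n\n---\n\nQuestion: {{question}}\nAnswer:",
    prompt_template_variables={"question": "What is your name?"},
    project="Alexa-chatbot",
    # Token usage reported for this call
    metadata={
        "usage.prompt_tokens": 7,
        "usage.completion_tokens": 5,
        "usage.total_tokens": 12,
    },
    output=" My name is Alexa.",
    # Time taken to generate the response
    duration=16.598,
)
```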
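The prompt argument is the prompt_template with prompt_template_variables substituted in, so it is easy for the two to drift apart when they are typed by hand. One way to keep them consistent, and to try a single template against several inputs as you would in the Prompt Playground, is to generate prompt from the template. render_template below is a hypothetical helper, not part of comet_llm; it assumes the {{name}} placeholder syntax used in the example and makes no claim about how Comet itself renders templates.

```python
import re
from typing import Dict

# Matches {{name}} placeholders, allowing optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_template(template: str, variables: Dict[str, str]) -> str:
    """Replace every {{name}} placeholder with variables[name].

    A function (not a replacement string) is passed to re.sub so that
    backslashes in variable values are inserted literally. A placeholder
    with no matching variable raises KeyError.
    """
    return PLACEHOLDER.sub(lambda m: variables[m.group(1)], template)


template = (
    "Answer the question and if the question can't be answered, "
    "say \"I don't know\"\n\n---\n\nQuestion: {{question}}\nAnswer:"
)

# Render the same template for several questions, Playground-style.
for question in ["What is your name?", "What can you do?"]:
    print(repr(render_template(template, {"question": question})))
```

Each rendered string can be passed as prompt=, with the same template and variables passed as prompt_template= and prompt_template_variables=.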

Once the code runs, the prompt is recorded in your Comet workspace, which serves as an archive of all logged prompts and responses. The workspace table lists each logged prompt; click a row to open a sidebar with its details, including the prompt, response, metadata, and duration.

Conclusion

Comet’s LLMOps tools are a promising starting point for anyone working with large language models (LLMs). They give researchers, engineers, and data scientists a way to experiment efficiently, track prompts, and monitor model performance.

As covered in this guide, LLMOps offers a range of features, from tracking prompt history and iterating quickly in the Prompt Playground to monitoring usage and resource allocation. Besides streamlining development, these tools add transparency and accountability, which helps with some of the operational challenges of building and deploying LLM applications.

Whether you are a seasoned AI practitioner or just beginning to explore the field, Comet’s LLMOps offers an accessible way to start building, tracking, and improving applications powered by large language models.