To jump directly into resources about how to use Comet and Ultralytics YOLOv5, check out:

- Comet docs

- YOLOv5 experiment logged in Comet

- Colab tutorial on training YOLOv5 with custom datasets in Comet

- YOLOv5 GitHub repo

Start training and logging Ultralytics YOLOv5 models with Comet.
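The Python below is a minimal sketch that builds and launches a basic YOLOv5 training run with Comet logging. It assumes you have cloned the YOLOv5 repository, installed `comet_ml` (`pip install comet_ml`), and run it from the repo root. The dataset, weights, image size, batch size and epoch count are example values, and the helper names `build_train_command` and `launch` are hypothetical. Comet reads your API key from the `COMET_API_KEY` environment variable.

```python
import os
import subprocess
import sys


def build_train_command(data="coco128.yaml", weights="yolov5s.pt",
                        img=640, batch=16, epochs=5):
    """Return the argv list for YOLOv5's train.py (example values only)."""
    return [
        sys.executable, "train.py",
        "--img", str(img),
        "--batch", str(batch),
        "--epochs", str(epochs),
        "--data", data,
        "--weights", weights,
    ]


def launch(cmd):
    """Run train.py from the YOLOv5 repo root; Comet logging needs COMET_API_KEY."""
    if "COMET_API_KEY" not in os.environ:
        raise RuntimeError("Set COMET_API_KEY to your Comet API key first.")
    # The child process inherits os.environ, so comet_ml can read the key.
    subprocess.run(cmd, check=True)
```

From the repo root, `launch(build_train_command())` starts training; with `comet_ml` installed and the key set, Comet should then log the metrics, parameters and plots described in this post.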

What is YOLOv5?

Ultralytics YOLOv5 is a family of deep learning object detection architectures pre-trained on the COCO dataset. It's open-source and feature-rich, and to date it has become one of the most practical sets of object detection algorithms that anyone can use.

Computer vision models are costly to train, and handling unstructured data and debugging model performance is complicated. YOLOv5 makes it simple to apply computer vision to any custom application.

Want to learn more about YOLO object detection in general? Check out this YouTube video tutorial.

What makes YOLOv5 so convenient

YOLOv5 is a library with a suite of tools that enables both beginners and experts in object detection to train and tune a model for production-ready performance. Some of its unique features include:

- auto-anchors that use k-means clustering and a Genetic Algorithm (GA) to fit anchor boxes to your dataset's labels, so you don't have to tune them yourself

- easily train on custom datasets

- multiple model architectures including a small one that can run on the edge

- a low-code library that just works right out of the box: all you have to do is modify a config file and load up your custom dataset
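To illustrate the idea behind auto-anchors, here is a simplified Python sketch that clusters label widths and heights into k anchor sizes with k-means, using 1 - IoU as the distance. It swaps in the IoU-based k-means popularized by YOLOv2 and leaves out the genetic-algorithm refinement step, so it is not YOLOv5's autoanchor code; `wh_iou` and `kmeans_anchors` are hypothetical names.

```python
def wh_iou(a, b):
    """IoU of two (width, height) boxes aligned at the same corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=50):
    """Cluster (w, h) label sizes into k anchors using 1 - IoU as the distance."""
    if not 0 < k <= len(boxes):
        raise ValueError("k must be between 1 and the number of boxes")
    # Deterministic init: pick k boxes spread evenly across the area-sorted list.
    ordered = sorted(boxes, key=lambda wh: wh[0] * wh[1])
    step = len(ordered) / k
    anchors = [ordered[int(i * step)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            best = max(range(k), key=lambda j: wh_iou(box, anchors[j]))
            clusters[best].append(box)
        # Move each anchor to the mean width/height of its cluster (keep it if empty).
        new_anchors = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else anchors[j]
            for j, c in enumerate(clusters)
        ]
        if new_anchors == anchors:
            break  # assignments have stabilized
        anchors = new_anchors
    return sorted(anchors, key=lambda wh: wh[0] * wh[1])
```

For example, `kmeans_anchors([(10, 10), (12, 12), (11, 9), (100, 100), (110, 90), (95, 105)], k=2)` separates the small and large boxes into two anchor sizes. Using IoU rather than plain Euclidean distance keeps large boxes from dominating the clustering.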

The Ultralytics team that manages YOLOv5 has put a lot of effort into their documentation, along with adding integrations and tutorials.

YOLOv5 is now fully integrated with Comet

The YOLOv5 library can be a great starting point for your computer vision journey. To improve the model's performance and get it production-ready, you'll need to log the results in an experiment tracking tool like Comet.

The Comet and YOLOv5 integration offers 3 main features that we’ll cover in this post:

- Autologging and custom logging features

- Saving datasets and models as artifacts for debugging and reproducibility

- Organizing your view with Comet’s custom panels

Comet automates tracking of ML metadata

Comet is a powerful tool for tracking your models, datasets, and metrics. It even logs your system and environment variables to ensure reproducibility and smooth debugging for each and every run. It’s like having a virtual assistant that magically knows what notes to keep.

With the YOLOv5 integration, Comet automatically logs each of the following straight out of the box, without any additional code:

Metrics

- Box loss, object loss, classification loss for the training and validation data;

- mAP_0.5, mAP_0.5:0.95 metrics for the validation data;

- Precision and recall for the validation data.
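To make these metrics concrete, here is a simplified Python sketch: IoU decides whether a predicted box matches a ground-truth box, precision, recall and F1 come from the resulting match counts, and mAP_0.5:0.95 averages AP over ten IoU thresholds instead of the single 0.5 threshold used by mAP_0.5. It is an illustration only, not YOLOv5's implementation, which computes AP per class from full precision-recall curves over ranked detections; the function names are hypothetical.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall and F1 from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# mAP_0.5 counts a detection as correct when IoU >= 0.5;
# mAP_0.5:0.95 averages AP over the thresholds 0.50, 0.55, ..., 0.95.
MAP_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

For instance, two 2x2 boxes offset by one unit in each direction overlap with an IoU of 1/7, so that prediction would not count as a match at any of these thresholds.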

Parameters

- Model hyperparameters;

- All parameters passed through the command line options.

Visualizations

- Confusion matrix of model predictions on the validation data;

- Plots for the precision-recall and F1 curves across all classes;

- Correlogram of the class labels.

Easily log custom data like YOLOv5 checkpoints, models and datasets

If you’re looking for more in-depth experiment management, custom logging capabilities are also available either through command line flags or environment variables. With Comet, you can log custom user-defined metrics, class-level metrics, and model predictions.
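Environment variables can be set per run from Python. The sketch below is a minimal example, assuming `COMET_PROJECT_NAME` (a standard `comet_ml` setting) and `COMET_LOG_PER_CLASS_METRICS` (the variable YOLOv5's Comet integration documents for class-level metrics); verify both names against the current docs. `comet_env` is a hypothetical helper.

```python
import os


def comet_env(project_name, per_class_metrics=True):
    """Return a copy of the current environment with Comet logging options set.

    The variable names are assumptions based on the comet_ml and YOLOv5 Comet docs.
    """
    env = dict(os.environ)  # copy, so the parent process environment is untouched
    env["COMET_PROJECT_NAME"] = project_name
    env["COMET_LOG_PER_CLASS_METRICS"] = "true" if per_class_metrics else "false"
    return env
```

Passing the result to `subprocess.run(cmd, env=comet_env("yolov5-demo"), check=True)`, where `cmd` is your `train.py` command, applies these settings to that run only.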

Log YOLOv5 checkpoints in Comet

Training with unstructured data (like images) can be painfully time-consuming. Any interruption can be a major setback, especially if you have to start training from scratch.

By logging checkpoints, you can simply pick up where you left off! Comet allows you to resume training from your latest checkpoint, specify which checkpoints are logged, overwrite checkpoints, and retrieve saved checkpoints with a simple command line flag:

--save-period 1
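A minimal Python sketch of this checkpoint workflow follows. YOLOv5's `train.py` defines the argument as `--save-period` (with a hyphen). The resume helper assumes, based on our reading of YOLOv5's Comet integration docs, that `--resume` accepts a Comet run path of the form `comet://<workspace>/<project>/<experiment id>`; check the current docs before relying on it. Both helper names are hypothetical.

```python
import sys


def with_checkpoint_logging(cmd, save_period=1):
    """Return a copy of a train.py argv list that saves a checkpoint every N epochs."""
    return list(cmd) + ["--save-period", str(save_period)]


def resume_command(run_path):
    """Build a command that resumes an interrupted run from a Comet run path.

    run_path is assumed to look like "comet://<workspace>/<project>/<experiment id>".
    """
    if not run_path.startswith("comet://"):
        raise ValueError("expected a comet:// run path")
    return [sys.executable, "train.py", "--resume", run_path]
```

Keeping `save_period` at 1 logs a checkpoint every epoch, which loses the least progress on interruption at the cost of more uploads.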

Log datasets and YOLOv5 models as Comet Artifacts

Saving datasets and models as Artifacts in Comet supports debugging and reproducibility. You can upload artifacts in isolation (e.g., a dataset you plan to use later, or a pre-trained model), or upload them automatically with your training runs, all with a simple command line flag.

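To log model predictions (images with their predicted bounding boxes), add the following flag; its value controls how often predictions are logged: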

--bbox_interval 1

With Comet Artifacts, you can:

- version control your models and datasets, while tracking their lineages.

- upload saved Artifacts and use them in new experiments

- view them directly in the Comet UI, or download into your environment of choice for further analysis.

To upload a version of your dataset to Comet as an Artifact, use:

--upload_dataset "train"
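Both of the flags above can be appended to any `train.py` command. A minimal Python sketch, where `with_artifact_logging` is a hypothetical helper and the `"train"` value mirrors the example above:

```python
def with_artifact_logging(cmd, bbox_interval=1, upload_split="train"):
    """Return a copy of a train.py argv list with Comet prediction and dataset logging flags.

    --bbox_interval controls how often model predictions (images with boxes) are logged;
    --upload_dataset uploads the dataset to Comet as an Artifact.
    """
    return list(cmd) + [
        "--bbox_interval", str(bbox_interval),
        "--upload_dataset", upload_split,
    ]
```

For example, `with_artifact_logging(["python", "train.py", "--data", "coco128.yaml"])` yields a command that logs predictions and uploads the dataset in the same run.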

To see any of the custom logging in action, check out the Colab.

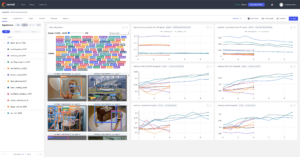

Organize your view with Comet’s custom panels

Comet's platform lets you visualize your model in any way you like. You can choose from any of Comet's 200+ publicly available Panels or build your own!

Review a YOLOv5 model logged with Comet

Now that you know what the integration can do, why not see it for yourself? Our resident data scientist, Dhruv Nair, has logged a YOLOv5 model.

When you navigate to Dhruv’s public experiment in the Comet UI, your default view displays Panels that illustrate performance metrics across multiple experiment runs, helping you visually compare models and experiments within a single project. Comet’s YOLOv5 integration automatically logs experiment metrics like precision, mean average precision (mAP), recall, and training loss, and then plots them for you in the default panel view. Additionally, if you select individual experiments, you’ll find that Comet auto-logs even more details of each specific experiment run like system metrics and package installations, learning rate, loss metrics, and more.

With Comet, you also have the freedom to further customize which metrics and features are logged. For most experiment-specific logging, just add the relevant command line flag or environment variable to your original training command, as described in the documentation. Comet's object detection panel, for example, displays your model's bounding box predictions on validation images and lets you adjust confidence thresholds and filter by label, all directly in the UI!

Other tools you might use for your YOLOv5 model

Since you’re still here, thanks for reading this far! Check out these free resources to help debug your Ultralytics YOLOv5 model.

- If you’re using synthetic images, check out this forensic image tool to see if your model has identified some differences between your real and simulated images.

- Albumentations is already integrated into YOLOv5, but take a look and you might find a different way to see your data.

- If you need to annotate your own data, check out LabelImg.

You can also join Comet’s Slack community to get support on any integration.