Issue 7: Comet + TensorBoardX, Tesla at CVPR, Guide to ML Job Interviews

A new Comet integration with TensorBoardX, Tesla at CVPR, a new e-book on ML job interviews, more intelligent multi-task learning, and more

Welcome to issue #7 of The Comet Newsletter!

This week, we share a new Comet integration with TensorBoardX and take a closer look at Andrej Karpathy’s CVPR talk on Tesla’s vision-only approach to autonomous vehicles.

Additionally, we highlight Facebook’s new open-source time series library, an exceptional e-book on ML job interviews, and a great piece on how to do multi-task learning more intelligently.

Like what you’re reading? Subscribe here.

And be sure to follow us on Twitter and LinkedIn — drop us a note if you have something we should cover in an upcoming issue!

Happy Reading,

Austin

Head of Community, Comet

INDUSTRY | WHAT WE’RE READING | PROJECTS | OPINION

From CVPR 2021: Tesla’s Andrej Karpathy on a Vision-Based Approach to Autonomous Vehicles

Tesla is doubling down on its vision-first approach to self-driving cars and will stop using radar sensors altogether in future releases.

From its inception, Tesla has taken a different approach from most other companies developing self-driving cars: its system doesn’t rely on lidar. Instead, Tesla has used a combination of radar sensors and eight cameras placed around the vehicle.

In this talk at CVPR 2021, Andrej Karpathy explains the challenges with this approach and why Tesla has decided to focus on cameras and stop using radar sensors. The main challenge with using different sensor types is sensor fusion. As Elon Musk puts it: “When radar and vision disagree, which one do you believe? Vision has much more precision, so better to double down on vision than do sensor fusion.”
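To make the fusion dilemma concrete, here is a minimal sketch of one textbook fusion rule: the inverse-variance weighted average of two independent distance estimates. This is a generic technique chosen for illustration, not Tesla’s pipeline, and the readings and noise variances below are hypothetical.

```python
from typing import Tuple


def fuse(vision_m: float, vision_var: float, radar_m: float, radar_var: float) -> Tuple[float, float]:
    """Inverse-variance weighted average of two independent distance estimates (metres).

    Returns the fused estimate and its variance. Both variances are assumed to be known.
    """
    # The less noisy sensor (smaller variance) receives the larger weight.
    w_vision = 1.0 / vision_var
    w_radar = 1.0 / radar_var
    fused = (w_vision * vision_m + w_radar * radar_m) / (w_vision + w_radar)
    fused_var = 1.0 / (w_vision + w_radar)
    return fused, fused_var


# Hypothetical readings: vision says 40 m, radar says 60 m; vision is assumed four times less noisy.
distance, variance = fuse(40.0, 1.0, 60.0, 4.0)
print(distance, variance)  # 44.0 0.8
```

The fused 44 m matches neither reading, and its reported variance (0.8) is smaller than either sensor’s, even though at least one sensor must be badly wrong. Deciding which sensor to believe in such cases is exactly the problem the quote points at.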

Radar is used to track objects that are in front of the car and determine their distance, speed, and acceleration. The approach taken by the Tesla team has been to compute this information from onboard cameras rather than radar sensors, and they claim to have developed a new vision-based system that outperforms existing radar-based approaches.
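Speed and acceleration are the first and second time derivatives of distance, so a camera-only system has to recover them from what it sees over time. The talk summary above does not detail how Tesla’s networks do this; the sketch below is only a toy illustration of the kinematics, using finite differences on hypothetical per-frame distance estimates.

```python
from typing import List, Sequence, Tuple


def kinematics(distances_m: Sequence[float], dt_s: float) -> Tuple[List[float], List[float]]:
    """Relative speed and acceleration of a lead vehicle from evenly spaced distance samples.

    distances_m: hypothetical per-frame distance estimates (metres)
    dt_s: time between frames (seconds)
    """
    # First difference: relative speed in m/s (negative means the gap is closing).
    speeds = [(d1 - d0) / dt_s for d0, d1 in zip(distances_m, distances_m[1:])]
    # Second difference: relative acceleration in m/s^2.
    accels = [(v1 - v0) / dt_s for v0, v1 in zip(speeds, speeds[1:])]
    return speeds, accels


speeds, accels = kinematics([30.0, 29.0, 27.5, 25.5], dt_s=0.5)
print(speeds)  # [-2.0, -3.0, -4.0]
print(accels)  # [-2.0, -2.0]
```

An error in a single distance estimate is divided by the frame interval once for speed and twice for acceleration, so with the short intervals between video frames it grows quickly. This is part of why accurate per-frame depth matters for a vision-only system.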

In order to achieve this, they relied on both auto-labelling techniques and the power of their fleet. Broadly, the approach is as follows:

1. Use all the information at your disposal (radar, cameras, hindsight, etc.) to create a neural network that will generate ground-truth labels

2. Use these labels to train a neural network that can be deployed on cars (strict latency requirements, can’t use data from the future, etc.)

3. Run this neural network in shadow mode on the Tesla fleet

4. Based on 200 handcrafted triggers, identify edge cases for which the predictions might be incorrect

5. Annotate edge cases and go back to step 1
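The loop described above can be sketched in Python. Everything here is hypothetical: the frame format, the toy offline labeler (which simply averages camera and radar), the one-parameter “network”, and the single trigger are stand-ins chosen only to show the control flow, not Tesla’s actual implementation.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical types for illustration: a frame is a dict of sensor readings,
# and a deployable model maps one frame to a predicted distance.
Frame = Dict[str, float]
Model = Callable[[Frame], float]


def data_engine_round(
    frames: Sequence[Frame],
    auto_label: Callable[[Sequence[Frame], int], float],
    train: Callable[[Sequence[Frame], List[float]], Model],
    triggers: Sequence[Callable[[Frame, float], bool]],
    human_labels: Dict[int, float],
) -> List[int]:
    """Run one pass of the loop and return the indices of frames flagged as edge cases."""
    # Step 1: the offline labeler may use every sensor and future frames;
    # human annotations from earlier rounds override its output.
    labels = [human_labels.get(i, auto_label(frames, i)) for i in range(len(frames))]
    # Step 2: train the deployable model on those labels.
    model = train(frames, labels)
    # Steps 3-4: predict in "shadow mode" (no control actions) and fire the triggers.
    return [
        i for i, frame in enumerate(frames)
        if any(trigger(frame, model(frame)) for trigger in triggers)
    ]


# Toy stand-ins (all hypothetical): three frames with camera and radar distances.
frames = [{"camera": 10.0, "radar": 10.5}, {"camera": 20.0, "radar": 21.0}, {"camera": 30.0, "radar": 45.0}]


def auto_label(frames: Sequence[Frame], i: int) -> float:
    # Toy offline labeler: average the two sensors for frame i.
    return (frames[i]["camera"] + frames[i]["radar"]) / 2


def train(frames: Sequence[Frame], labels: List[float]) -> Model:
    # Toy "network": one least-squares scale factor applied to the camera reading only.
    scale = sum(f["camera"] * y for f, y in zip(frames, labels)) / sum(f["camera"] ** 2 for f in frames)
    return lambda f: scale * f["camera"]


def radar_disagrees(frame: Frame, prediction: float) -> bool:
    # One hypothetical trigger: the prediction is more than 5 m from the radar reading.
    return abs(prediction - frame["radar"]) > 5.0


human_labels: Dict[int, float] = {}
for _ in range(3):
    flagged = data_engine_round(frames, auto_label, train, [radar_disagrees], human_labels)
    new_cases = [i for i in flagged if i not in human_labels]
    if not new_cases:
        break  # nothing new to annotate
    # Step 5: annotate the new edge cases (the camera value stands in for a human label).
    for i in new_cases:
        human_labels[i] = frames[i]["camera"]

print(human_labels)  # {2: 30.0}
```

In a real system each step would be far heavier (large offline networks, fleet-scale shadow deployments, many triggers), but the shape of the loop stays the same: label, train, deploy silently, collect disagreements, annotate, repeat.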

Even with this complex vision-based approach, moving away from radar sensors is a risky move. It will be fascinating to see how this plays out over the next couple of years.

For a more complete look at the most interesting updates from CVPR 2021, check out this recap in Analytics India Magazine.


How to Do Multi-Task Learning Intelligently

Traditionally, machine learning models have been trained and deployed to solve one task at a time—whether that’s image classification, sentiment analysis, segmentation, etc.

But as both modeling and business use cases grow more complex, more and more situations demand ML models that can make more than one type of prediction on a given input, an approach known as “multi-task learning” (MTL).

In their new article in The Gradient, researchers Aminul Huq, Mohammad Hanan Gani, Ammar Sherif, and Abubakar Abid explore the what, why, and how behind MTL. They note that MTL can be especially useful for:

- Expanding the features and labels of a dataset

- Making ML models more generalizable

- Overcoming limits in computation power

But these potential benefits don’t tell the full story. MTL also comes with a range of difficulties: it can create an imbalance in which ML task is prioritized, and model optimization can be much more complex.

To help practitioners cut through the noise and decide when and how to use MTL in their projects, the authors summarize three research papers that offer slightly different approaches:

- AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning (X. Sun et al., 2020)

- End-to-End Multi-Task Learning with Attention (S. Liu et al., 2019)

- Which Tasks Should Be Learned Together in Multi-task Learning? (T. Standley et al., 2020)
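The task-imbalance problem has a compact illustration in Dynamic Weight Average (DWA), the loss-weighting scheme proposed in the Liu et al. (2019) attention paper listed above. Each task’s weight is a softmax over how quickly its loss fell between the two previous epochs, rescaled so the weights sum to the number of tasks; a task whose loss is stalling therefore gets more weight. This is a minimal pure-Python sketch: the default temperature of 2.0 is an assumption on our part, and the loss values are made up.

```python
import math
from typing import List, Sequence


def dwa_weights(
    losses_prev: Sequence[float],
    losses_prev2: Sequence[float],
    temperature: float = 2.0,
) -> List[float]:
    """Dynamic Weight Average task weights for the next epoch.

    losses_prev:  average loss of each task at epoch t-1
    losses_prev2: average loss of each task at epoch t-2
    """
    num_tasks = len(losses_prev)
    # Relative descending rate: close to 1 means the task's loss has stopped falling.
    rates = [l1 / l2 for l1, l2 in zip(losses_prev, losses_prev2)]
    exps = [math.exp(r / temperature) for r in rates]
    total = sum(exps)
    # Softmax over rates, rescaled so the weights sum to the number of tasks.
    return [num_tasks * e / total for e in exps]


def weighted_total_loss(task_losses: Sequence[float], weights: Sequence[float]) -> float:
    # The single objective actually optimized: a weighted sum of per-task losses.
    return sum(w * l for w, l in zip(weights, task_losses))


# Made-up losses: task 0 halved its loss over the last epoch, task 1 barely moved.
weights = dwa_weights([0.5, 0.9], [1.0, 1.0])
print([round(w, 2) for w in weights])  # [0.9, 1.1]
objective = weighted_total_loss([0.5, 0.9], weights)
```

Because the weights are recomputed every epoch from observed progress, no task can quietly stall while the others dominate the shared objective, which is one practical answer to the prioritization problem described above.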

Read the full article in The Gradient.


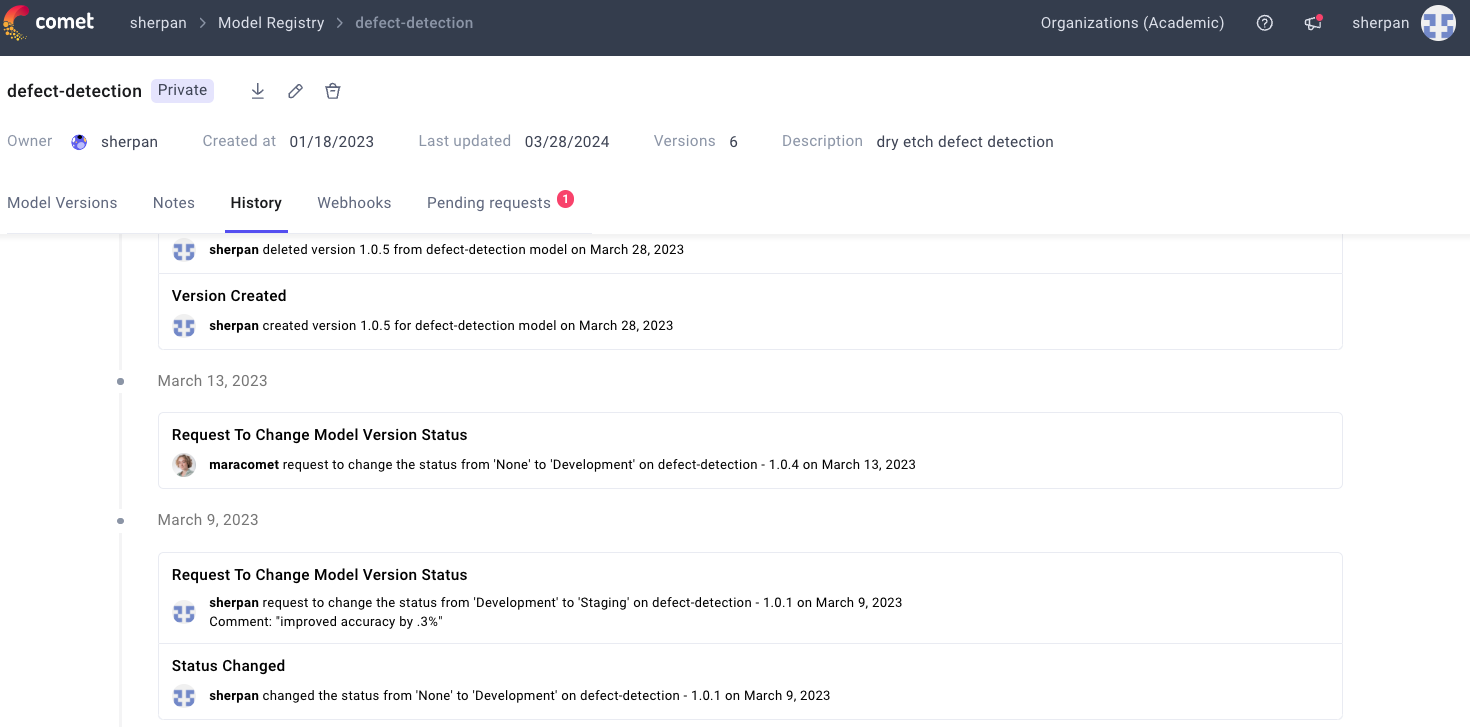

New Comet Integration: TensorBoardX

TensorBoardX is an open-source, TensorBoard-like API that allows data scientists to effectively track and visualize their model metrics and parameters.

With the new Comet integration, data scientists can extend the functionality of TensorBoardX, enabling custom visualizations and the ability to compare model metrics, parameters, code, and much more.

Facebook Introduces Kats: A New, Open-Source “One-Stop Shop” for Time Series Analysis

Facebook’s “Prophet” has long been one of the most helpful open-source tools for time series analysis and forecasting—so helpful, in fact, that our team built an integration to support logging your Prophet experiments in Comet.

This week, the team at Facebook announced Kats, a new open-source library that extends Prophet with a generalizable framework that covers the key components of most generic time series analyses:

- Forecasting

- Anomaly detection

- Multivariate analysis

- Feature extraction/embedding

For more, check out the announcement blog post, or jump into the code by visiting the GitHub repo.

New E-Book: Introduction to Machine Learning Interviews

Much like machine learning itself, the interviewing process for ML roles (for interviewers and interviewees alike) can be a bit of a black box. The field (relatively speaking) is still quite new, and standardized practices and perspectives are hard to come by.

The talented and deeply experienced Chip Huyen has consolidated years of work as both an ML interviewer and a job candidate into her new, incredibly detailed and interactive e-book, which sheds light on many different facets of the machine learning interview experience. To give you a closer look at the book, here’s how Huyen describes its two primary sections:

“The first part provides an overview of the machine learning interview process, what types of machine learning roles are available, what skills each role requires, what kinds of questions are often asked, and how to prepare for them. This part also explains the interviewers’ mindset and what kind of signals they look for.

“The second part consists of over 200 knowledge questions, each noted with its level of difficulty — interviews for more senior roles should expect harder questions — that cover important concepts and common misconceptions in machine learning.”

Be sure to check out and bookmark the full text, as there’s a lot of awesome content to work through.


LinkedIn’s job-matching AI was biased. The company’s solution? More AI.

There’s an old maxim about job recruiters and hiring managers—each resume that comes into their inboxes gets, at most, 6 seconds of review.

While that seems like very little time, most AI-based resume review systems, which many job search platforms now employ, take only a couple of milliseconds to review each resume. The benefits for recruiters and hiring managers are clear: these recommendation and filtering systems can drastically reduce the time it takes to source viable candidates, while automatically categorizing and ranking the resumes and applications that pass the AI system’s initial smell test.

But (perhaps unsurprisingly) as these AI systems have become more and more commonplace on platforms that host millions of job postings at once, we’re also beginning to see the cracks in the foundation.

This excellent piece of reporting in the MIT Technology Review explores how LinkedIn’s AI-based approach to job-matching has struggled to avoid certain biases when surfacing potential roles to candidates and potential candidates to recruiters and hiring managers.

One example of this lies in the way LinkedIn’s algorithm factored user behavior into its recommendations. Authors Sheridan Wall and Hilke Schellmann illustrate it this way:

“While men are more likely to apply for jobs that require work experience beyond their qualifications, women tend to only go for jobs in which their qualifications match the position’s requirements. The algorithm interprets this variation in behavior and adjusts its recommendations in a way that inadvertently disadvantages women.”

Embedding algorithmic automation into each step of the hiring process is a double-edged sword—it can rapidly speed up the filtering of candidates, which traditionally has taken significant resources and time. However, if not built correctly and monitored diligently, these systems can easily reinforce biases and perceptions that have endured about which kinds of people are right for different roles.
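One simple way to monitor a recommender for this kind of skew is to compare how often each group is recommended and flag large gaps. The sketch below uses the ratio of the lowest to the highest group rate, with the 0.8 threshold from the US “four-fifths rule” for adverse impact. It is a generic check, not LinkedIn’s method, and the group labels and counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def recommendation_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of candidates in each group who were recommended.

    records: (group, was_recommended) pairs, e.g. taken from a recommender's logs.
    """
    recommended: Counter = Counter()
    seen: Counter = Counter()
    for group, was_recommended in records:
        seen[group] += 1
        recommended[group] += int(was_recommended)
    return {group: recommended[group] / seen[group] for group in seen}


def impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means all groups are recommended equally often."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical log: 40 of 100 candidates in group "A" recommended vs. 25 of 100 in group "B".
log = [("A", True)] * 40 + [("A", False)] * 60 + [("B", True)] * 25 + [("B", False)] * 75
rates = recommendation_rates(log)
ratio = impact_ratio(rates)
print(rates, round(ratio, 3))  # {'A': 0.4, 'B': 0.25} 0.625
if ratio < 0.8:
    print("Possible adverse impact: review the model")
```

A ratio alone does not explain why a gap exists. In the example above, the skew comes from differences in application behavior, so a check like this works best as a trigger for investigation rather than as a diagnosis.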