Reviewing these sections can help pinpoint the source of the problem and suggest possible resolutions.

### ⌨️ Using Comet Debugger Mode (UI/Browser)

**Comet Debugger Mode** is a hidden diagnostic feature in the **Opik web application** that displays real-time technical information to help you troubleshoot issues. This mode is particularly useful when investigating connectivity problems, reporting bugs, or verifying your deployment version.

**To toggle Comet Debugger Mode:**

Press `Command + Shift + .` on macOS or `Ctrl + Shift + .` on Windows/Linux

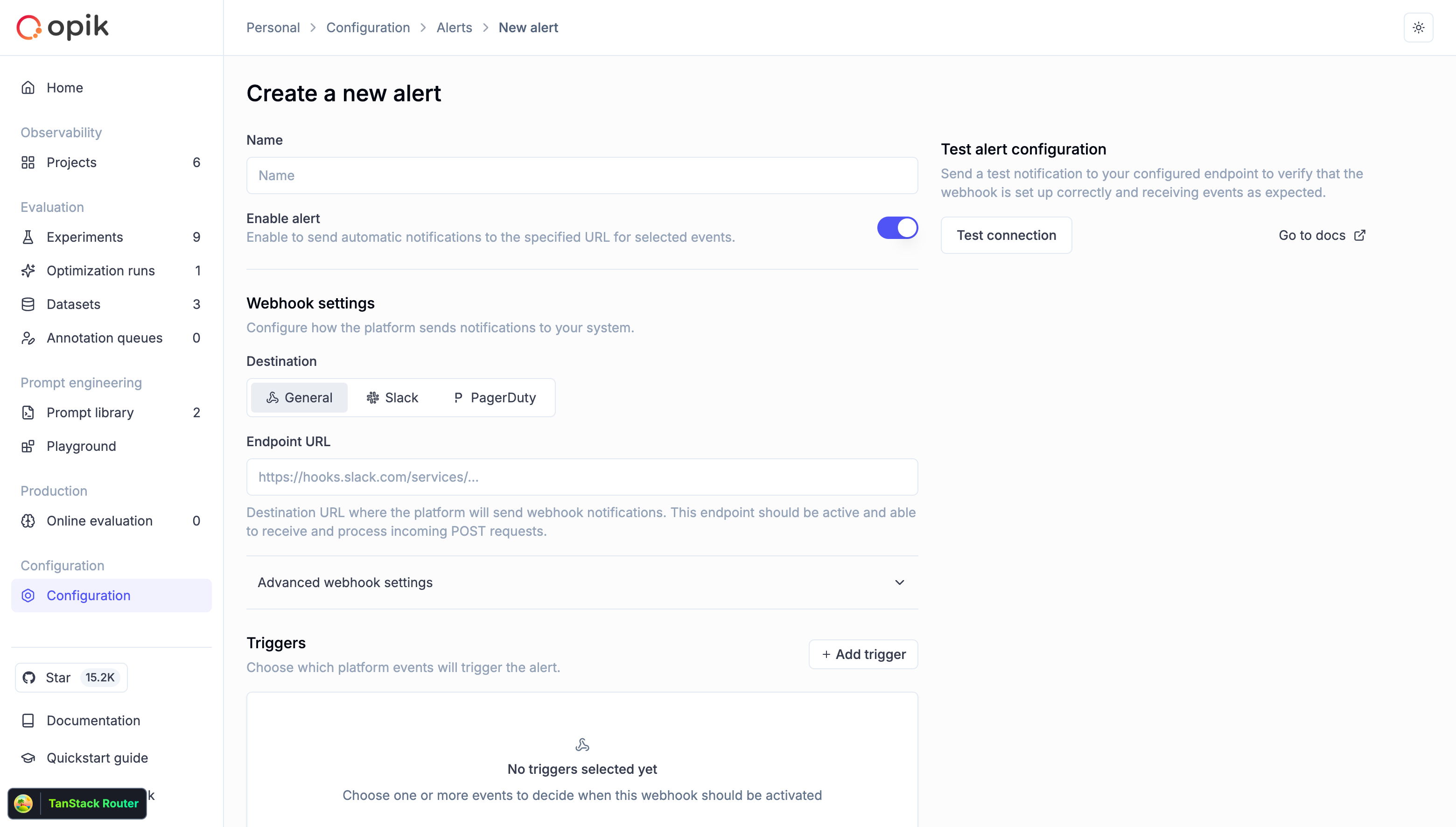

**What it displays:**

* **Network Status**: Real-time connectivity indicator with RTT (Round Trip Time) showing latency to the Opik backend server in seconds

* **Opik Version**: The current version of Opik you're running (click to copy to clipboard)

This information is helpful when:

* Reporting issues to the Opik team (include the version number and RTT)

* Verifying your Opik version matches expected deployment

* Diagnosing connectivity problems between UI and backend (check RTT for latency issues)

* Troubleshooting UI-related issues or unexpected behavior

* Confirming successful updates or deployments

* Monitoring network performance and latency to the backend server

**How it works:**

The keyboard shortcut toggles the debug information overlay on and off. When enabled, a small status bar appears in the UI showing the network connectivity status and version information. The mode persists across browser sessions (stored in local storage), so you only need to enable it once until you toggle it off again.

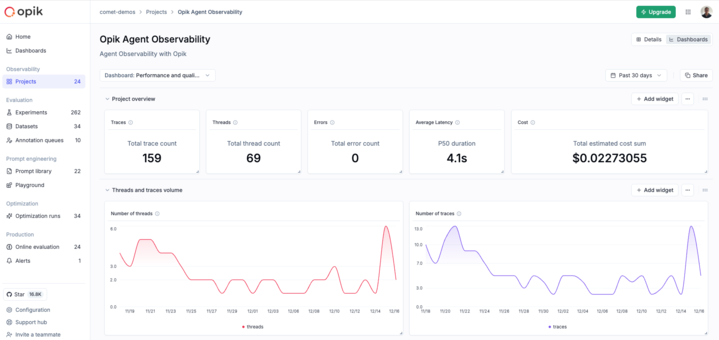

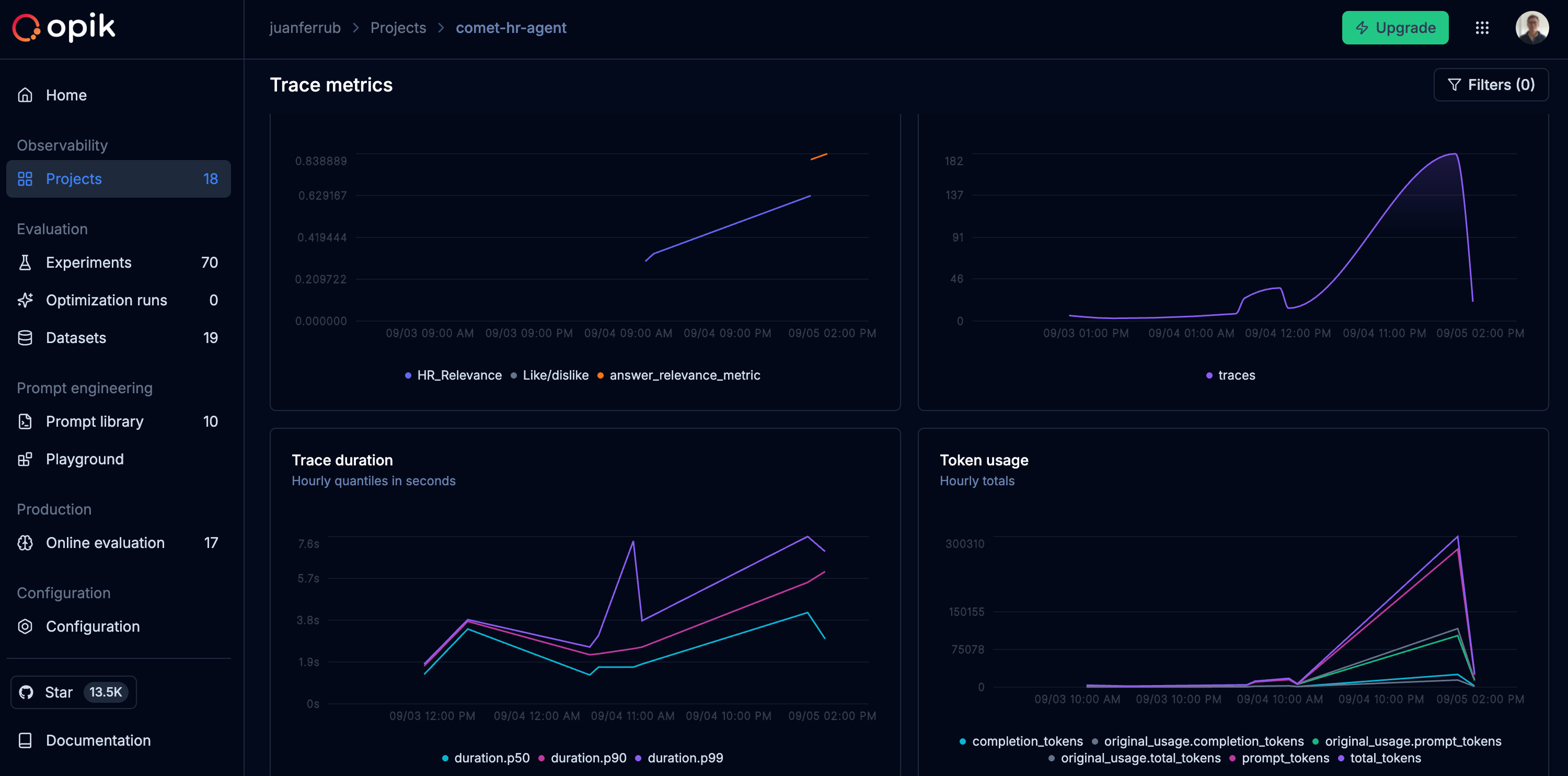

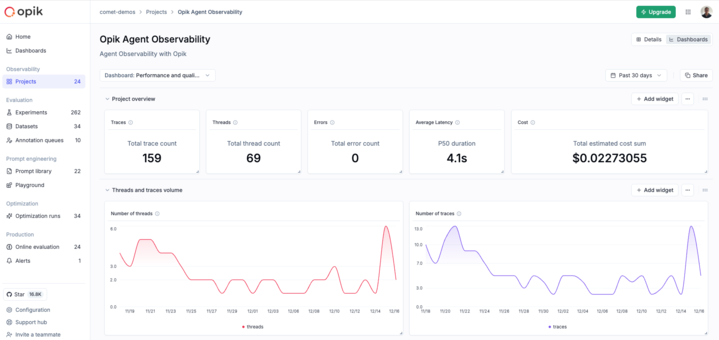

Our new dashboards engine lets you build fully customizable views to track everything from token usage and cost to latency and quality across projects and experiments.

**📍 Where to find them?**

Dashboards are available in three places inside Opik:

* **Dashboards page** – create and manage all dashboards from the sidebar

* **Project page** – view project-specific metrics under the Dashboards tab

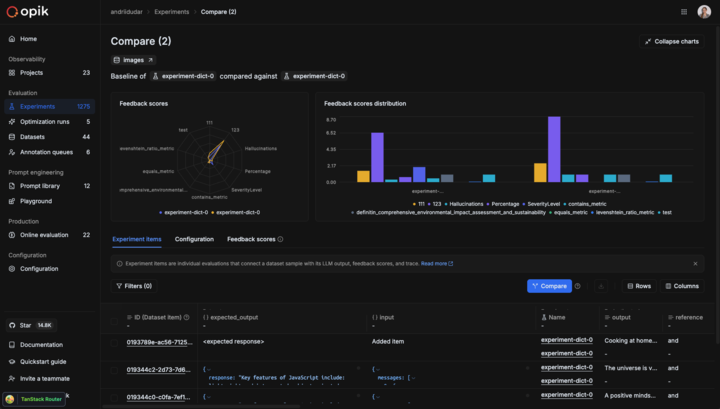

* **Experiment comparison page** – visualize and compare experiment results

**🧩 Built-in templates to get started fast**

We ship dashboards with zero-setup pre-built templates, including Performance Overview, Experiment Insights and Project Operational Metrics.

Templates are fully editable and can be saved as new dashboards once customized.

**🧱 Flexible widgets**

Dashboards support multiple widget types:

* **Project Metrics** (time-series and bar charts for project data)

* **Project Statistics** (KPI number cards)

* **Experiment Metrics** (line, bar, radar charts for experiment data)

* **Markdown** (notes, documentation, context)

Widgets support filtering, grouping, resizing, drag-and-drop layouts, and global date range controls.

👉 [Documentation](https://www.comet.com/docs/opik/production/dashboards)

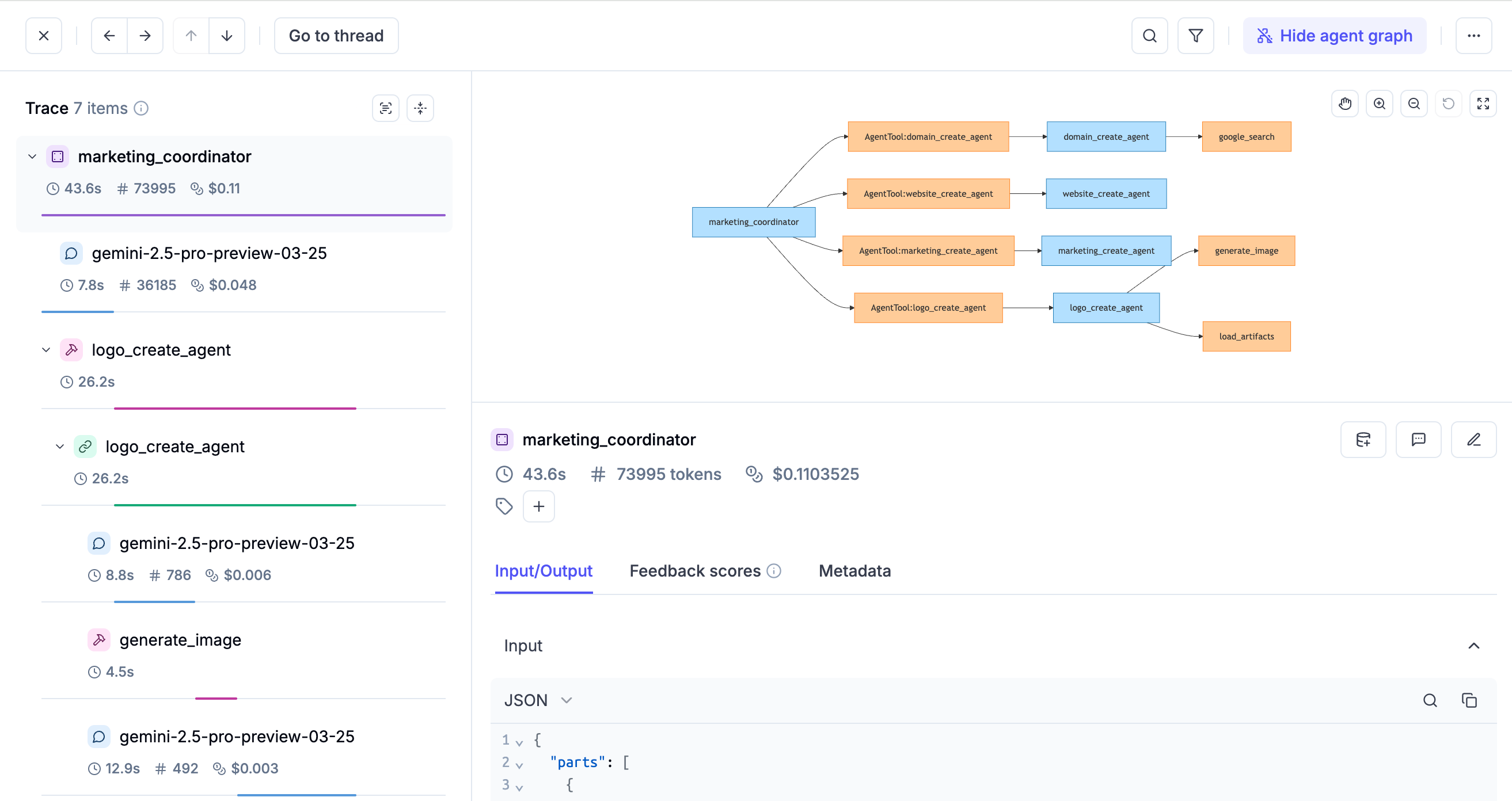

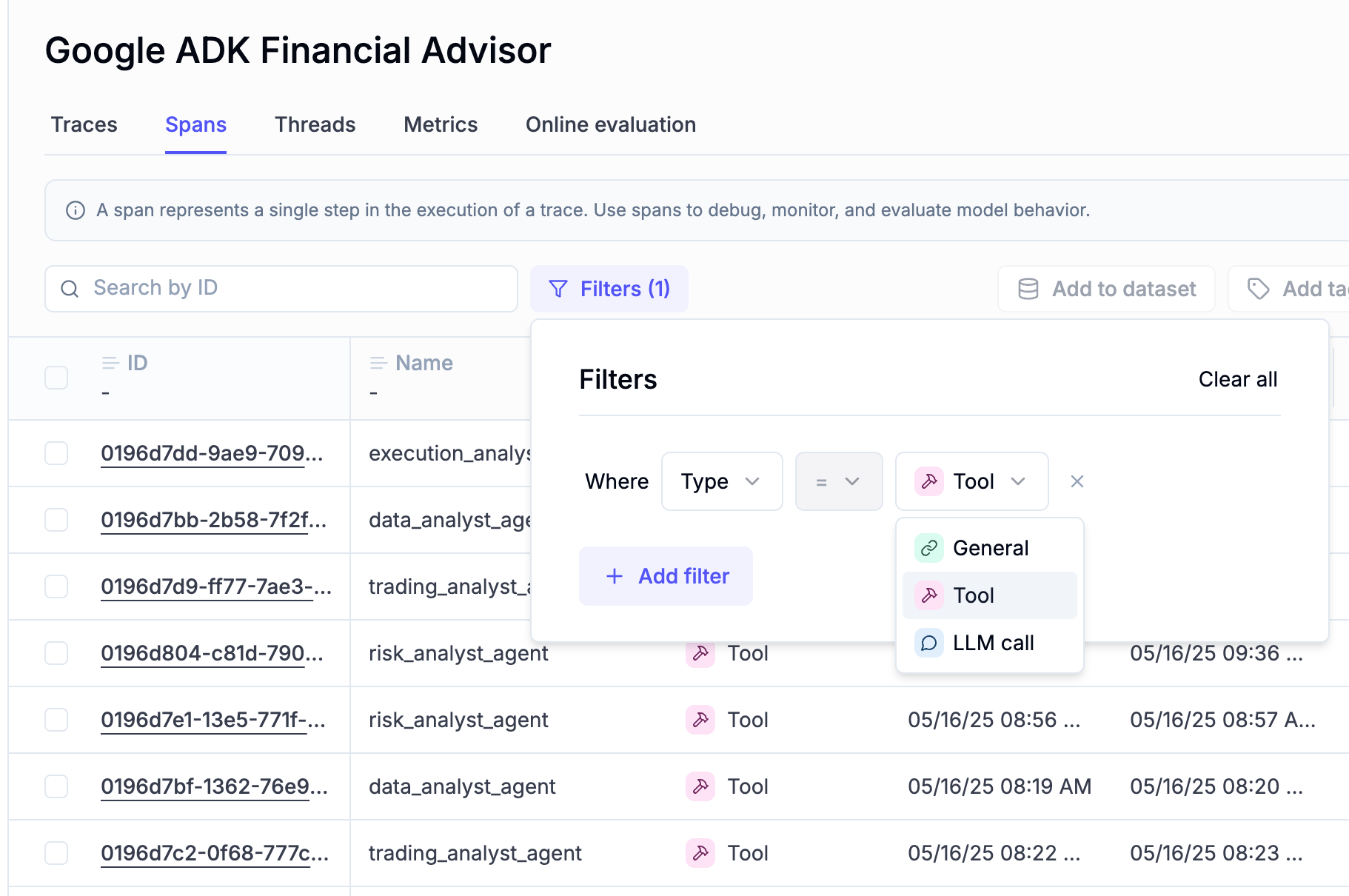

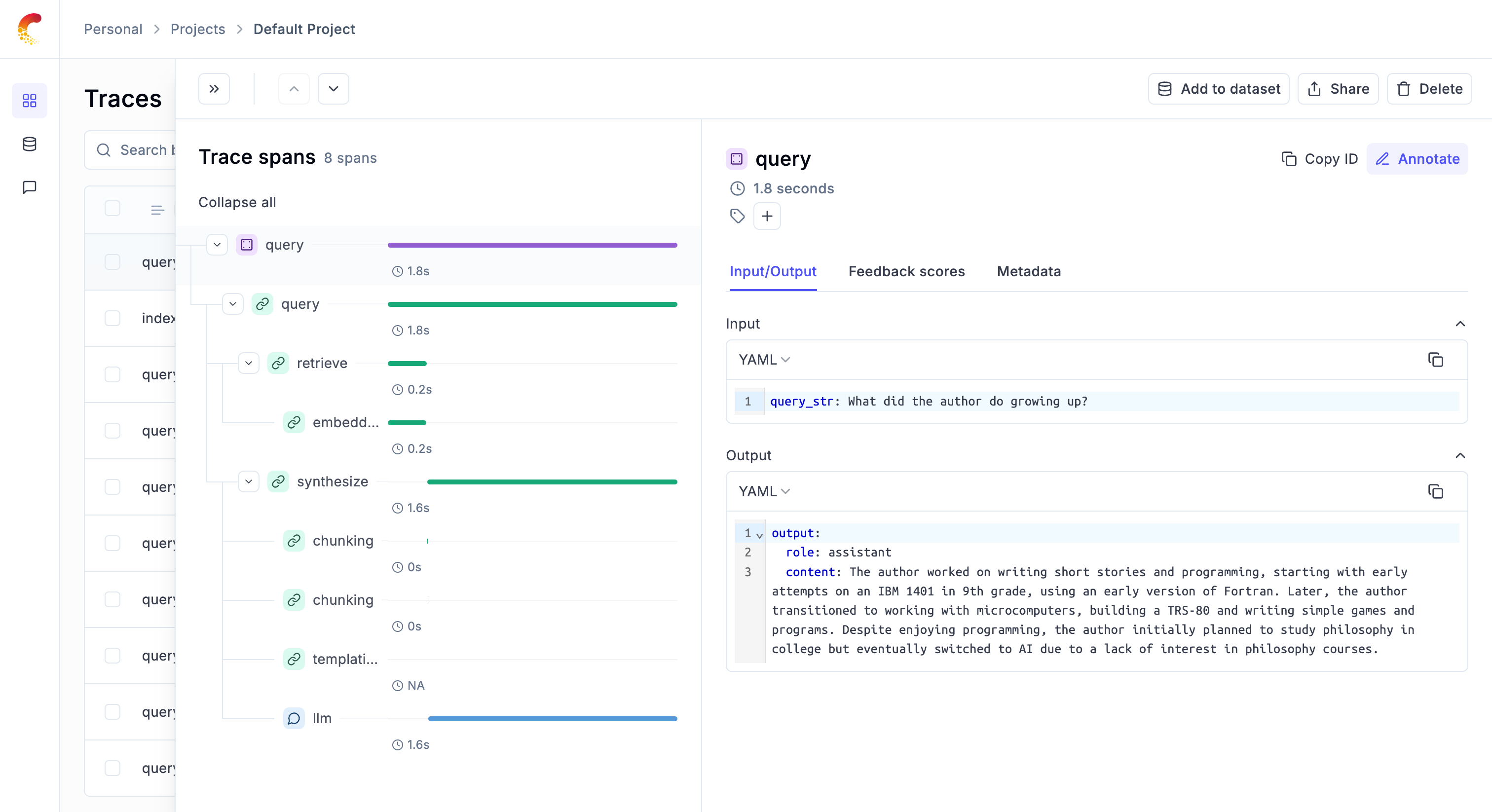

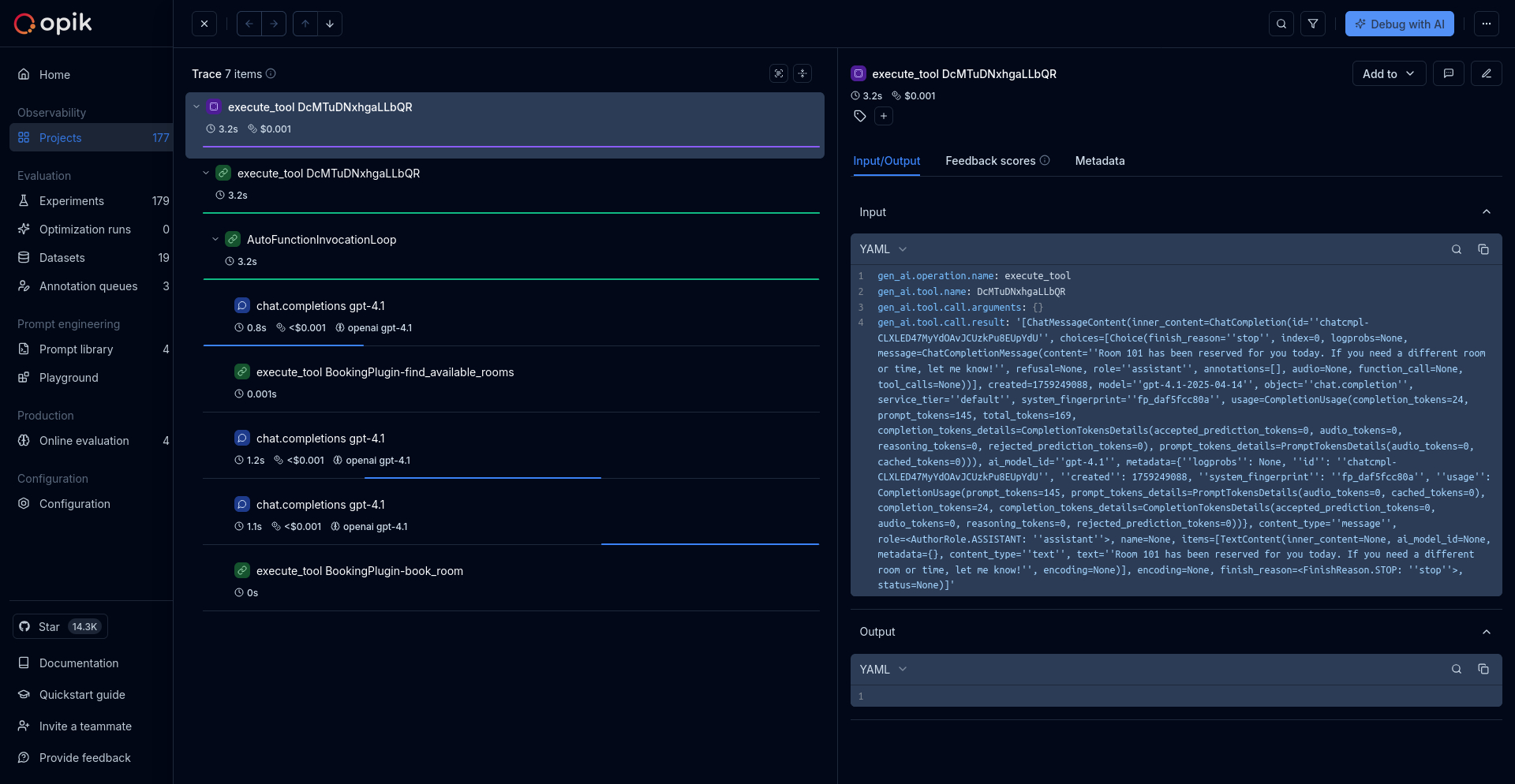

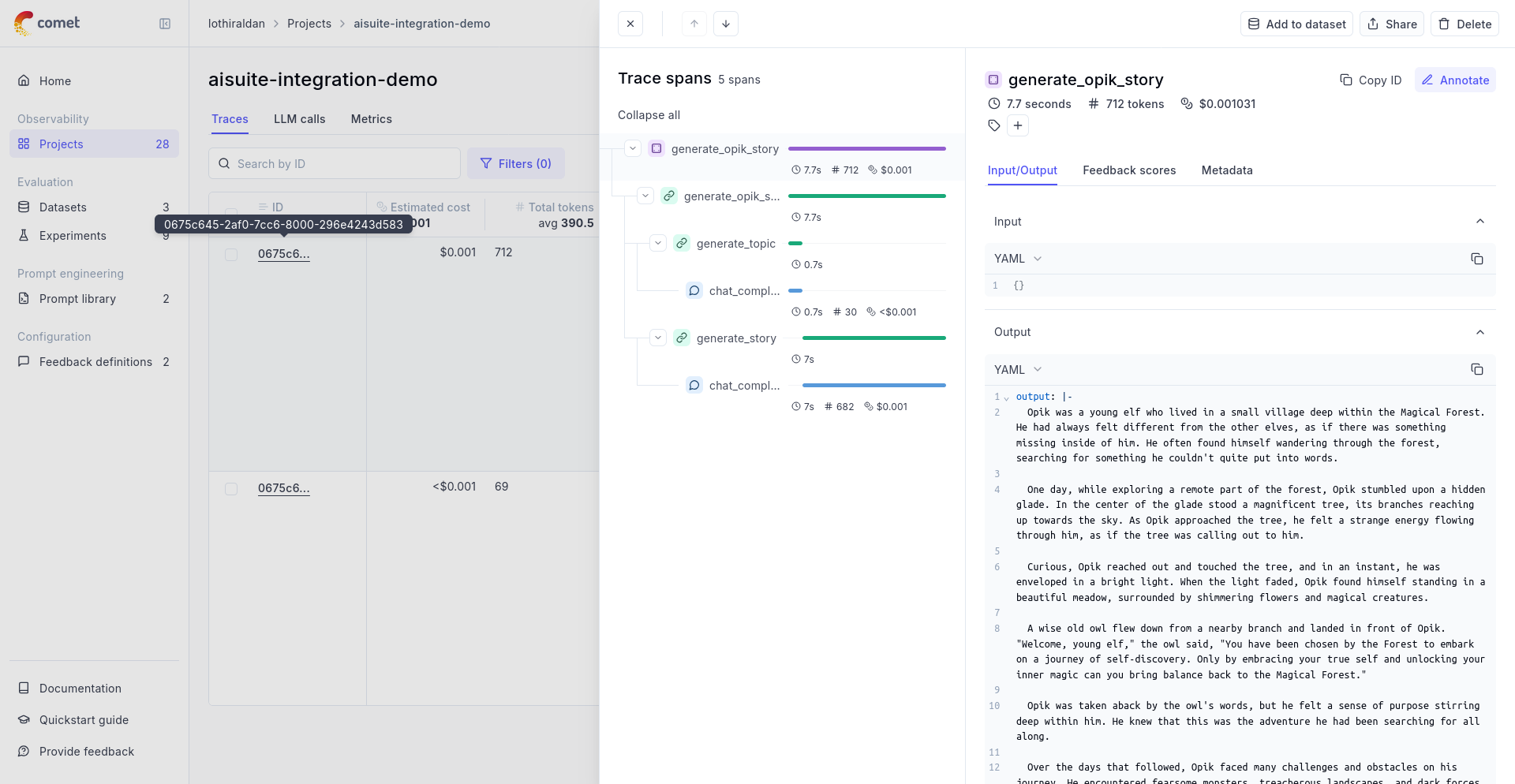

## 🧪 Improved Evaluation Capabilities

**Span-Level Metrics**

Span-level metrics are officially live in Opik, supporting both LLM-as-a-Judge and code-based metrics!

Teams can now easily evaluate the quality of specific steps inside their agent flows.

Instead of assessing only the final output or top-level trace, you can attach metrics directly to individual call spans or segments of an agent's trajectory.

This unlocks dramatically finer-grained visibility and control. For example:

* Score critical decision points inside task-oriented or tool-using agents

* Measure the performance of sub-tasks independently to pinpoint bottlenecks or regressions

* Compare step-by-step agent behavior across runs, experiments, or versions

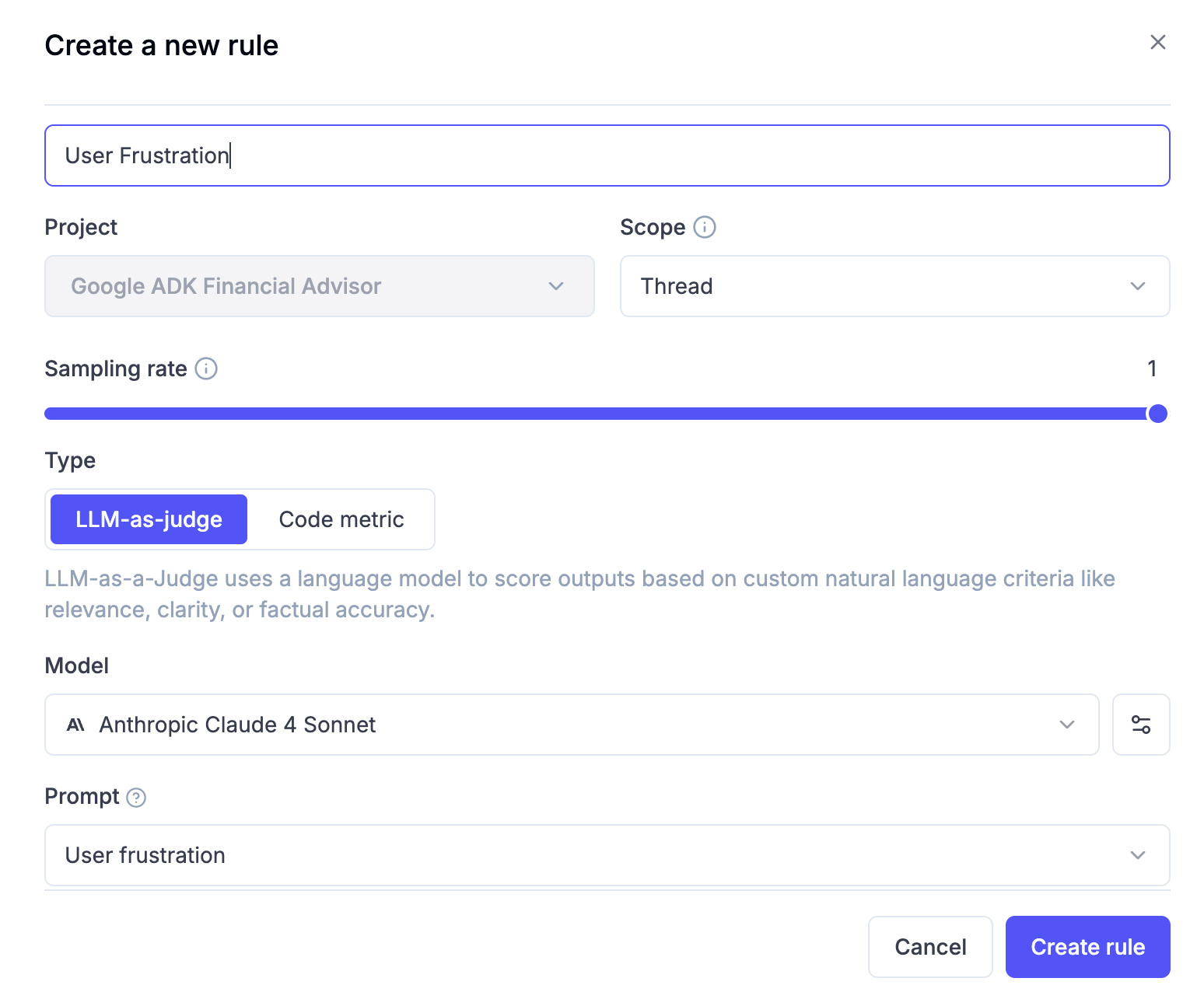

**New support for accessing the full tree, subtrees, or leaf nodes in Online Scoring**

This update enhances the online scoring engine to support referencing entire root objects (input, output, metadata) in LLM-as-Judge and code-based evaluators, not just nested fields within them.

Online Scoring previously only exposed leaf-level values from an LLM's structured output. With this update, Opik now supports rendering any subtree: from individual nodes to entire nested structures.
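
To make the difference between root objects, subtrees, and leaves concrete, here is a minimal sketch of resolving a path against a nested trace payload. The dotted-path syntax, the `resolve_path` helper, and the sample `trace` dictionary are assumptions made for illustration only; they are not Opik's actual variable-mapping syntax or API.

```python
from typing import Any


def resolve_path(root: dict[str, Any], path: str) -> Any:
    """Walk a dotted path (hypothetical syntax) and return whatever lives there.

    The result may be a whole root object, a nested subtree, or a leaf value.
    Numeric path segments index into lists.
    """
    node: Any = root
    for key in path.split("."):
        if isinstance(node, list):
            node = node[int(key)]
        else:
            node = node[key]
    return node


# Illustrative payload shaped like a trace with input, output, and metadata.
trace = {
    "input": {"messages": [{"role": "user", "content": "Hi"}]},
    "output": {"answer": {"text": "Hello", "confidence": 0.9}},
    "metadata": {"model": "example-model"},
}

whole_output = resolve_path(trace, "output")                 # entire root object
answer_subtree = resolve_path(trace, "output.answer")        # nested subtree
leaf_text = resolve_path(trace, "output.answer.text")        # leaf value
first_message = resolve_path(trace, "input.messages.0.content")
```

An evaluator that receives `whole_output` or `answer_subtree` can reason over the full structure, whereas one that receives only `leaf_text` sees a single string.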

## 📝 Tags Support & Metadata Filtering for Prompt Version Management

You can now tag individual prompt versions (not just the prompt!).

This provides a clean, intuitive way to mark best-performing versions, manage lifecycles, and integrate version selection into agent deployments.

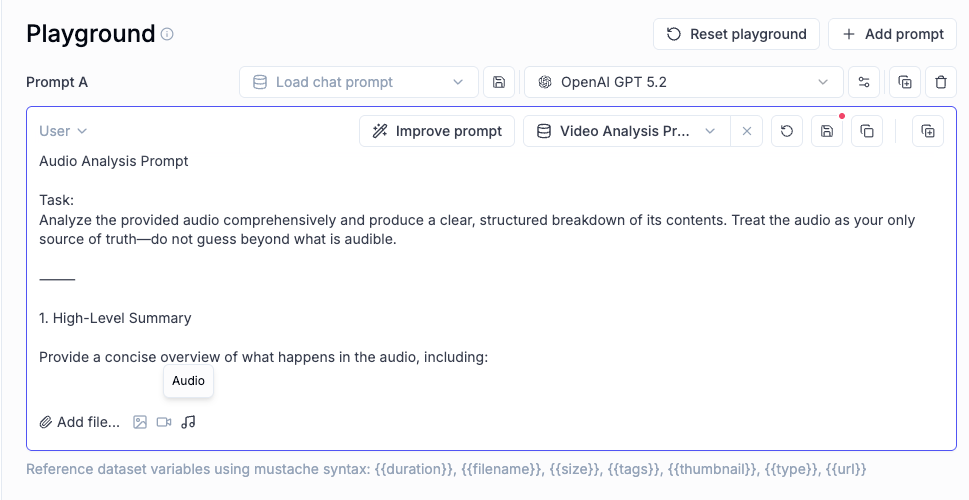

## 🎥 More Multimodal Support: now Audio!

You can now pass audio as part of your prompts in the Playground and in online evals, enabling advanced multimodal scenarios.

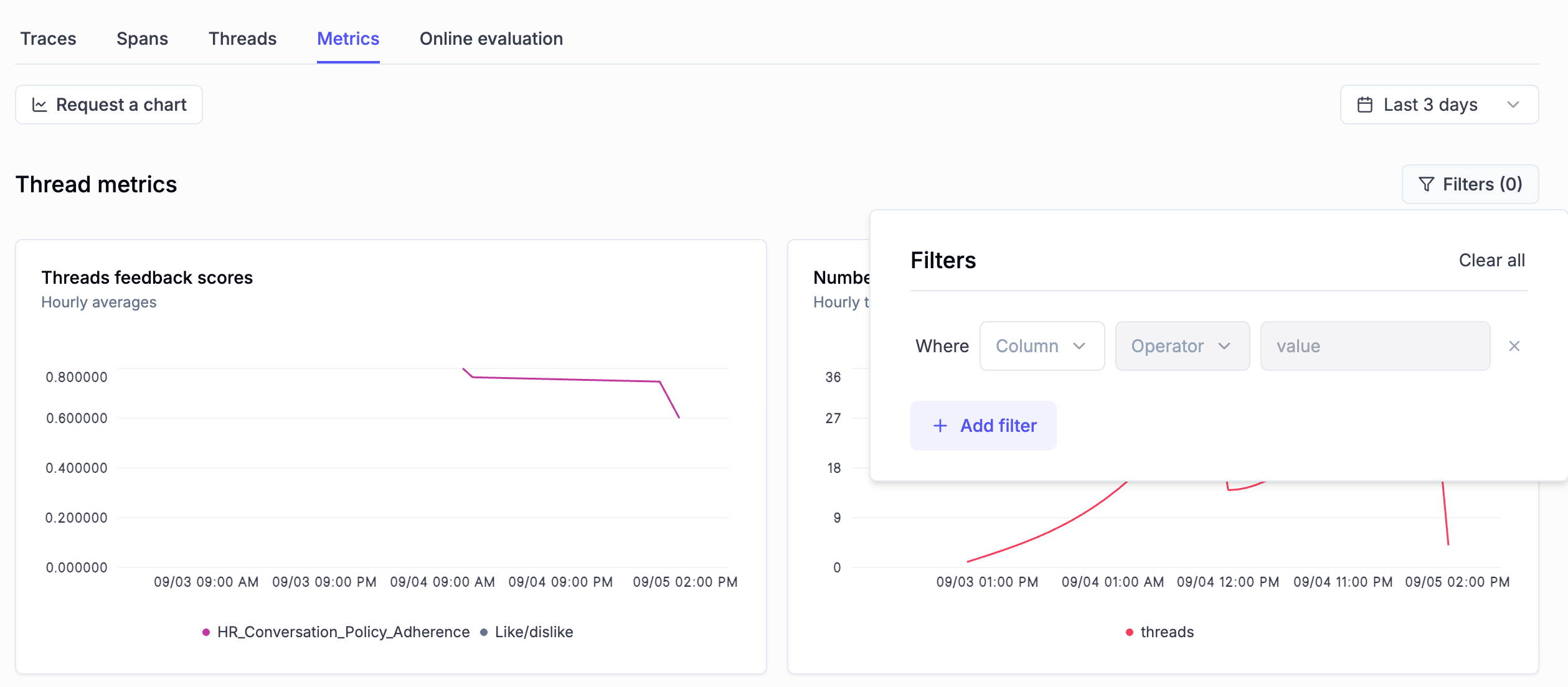

## 📈 More Insights!

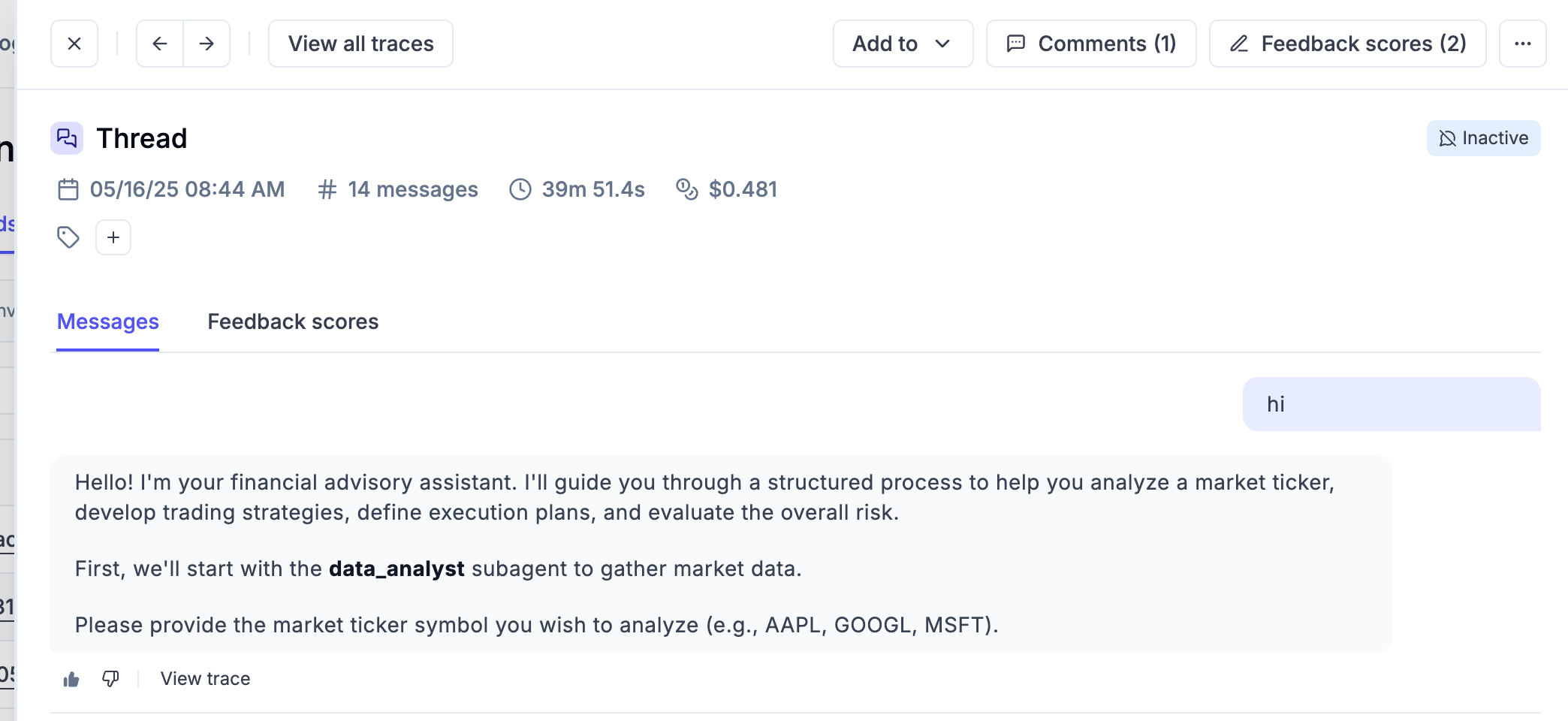

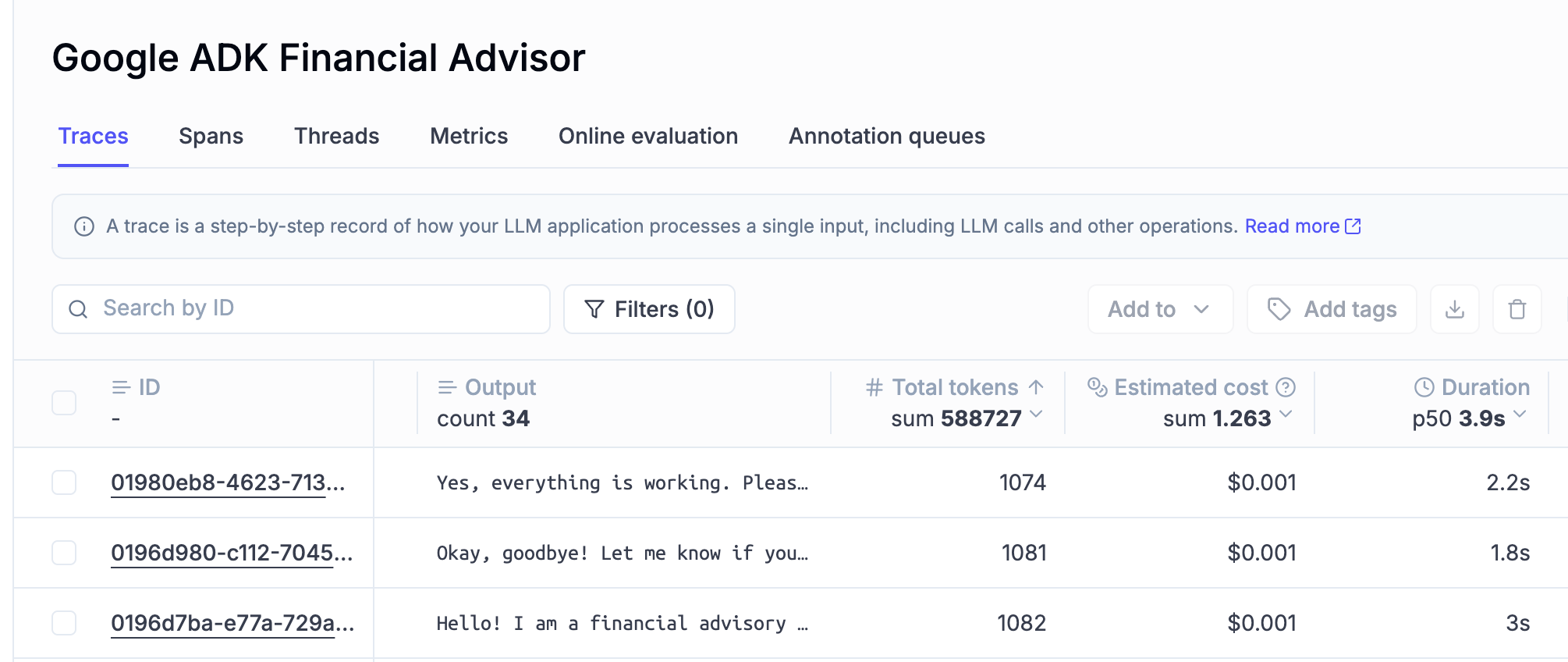

**Thread-level insights**

Added thread-level metrics and statistics to the threads table, giving users aggregated insights into their full multi-turn agentic interactions:

* Duration percentiles (p50, p90, p99) and averages

* Token usage statistics (total, prompt, completion tokens)

* Cost metrics and aggregations

* Filtering by project, time range, and custom filters

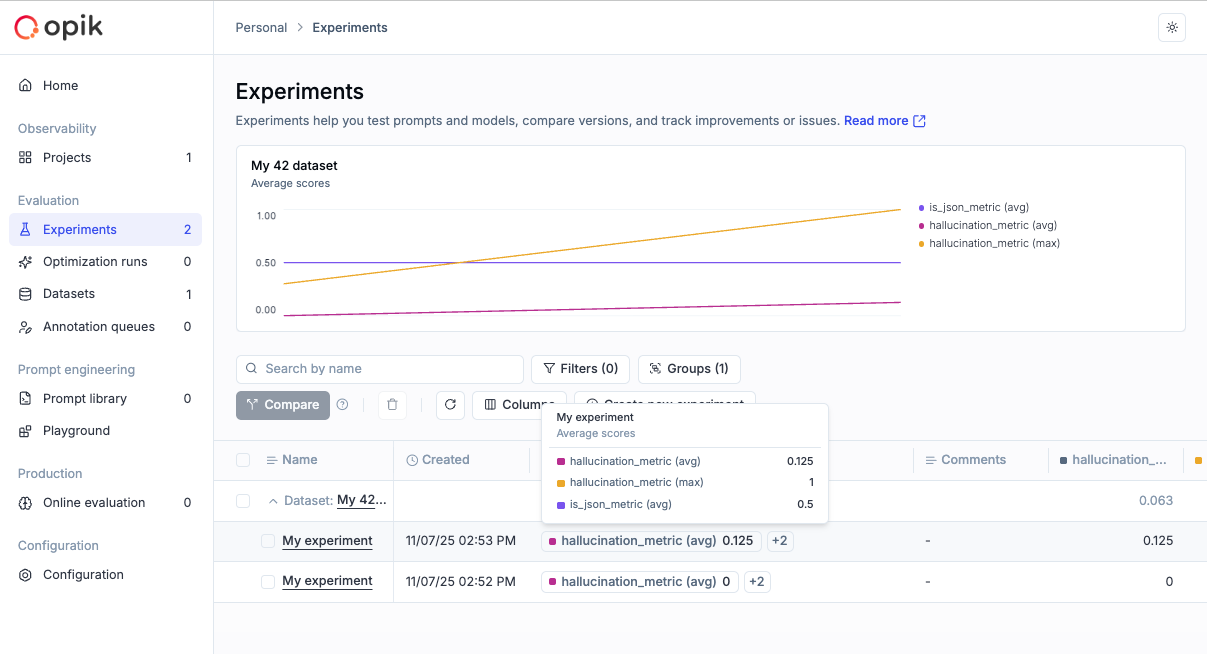

**Experiment insights**

Added more aggregation methods to the experiment items table headers.

This new release adds percentile aggregation methods (p50, p90, p99) for all numerical metrics in experiment items table headers, extending the existing pattern used for duration to cost, feedback scores, and total tokens.

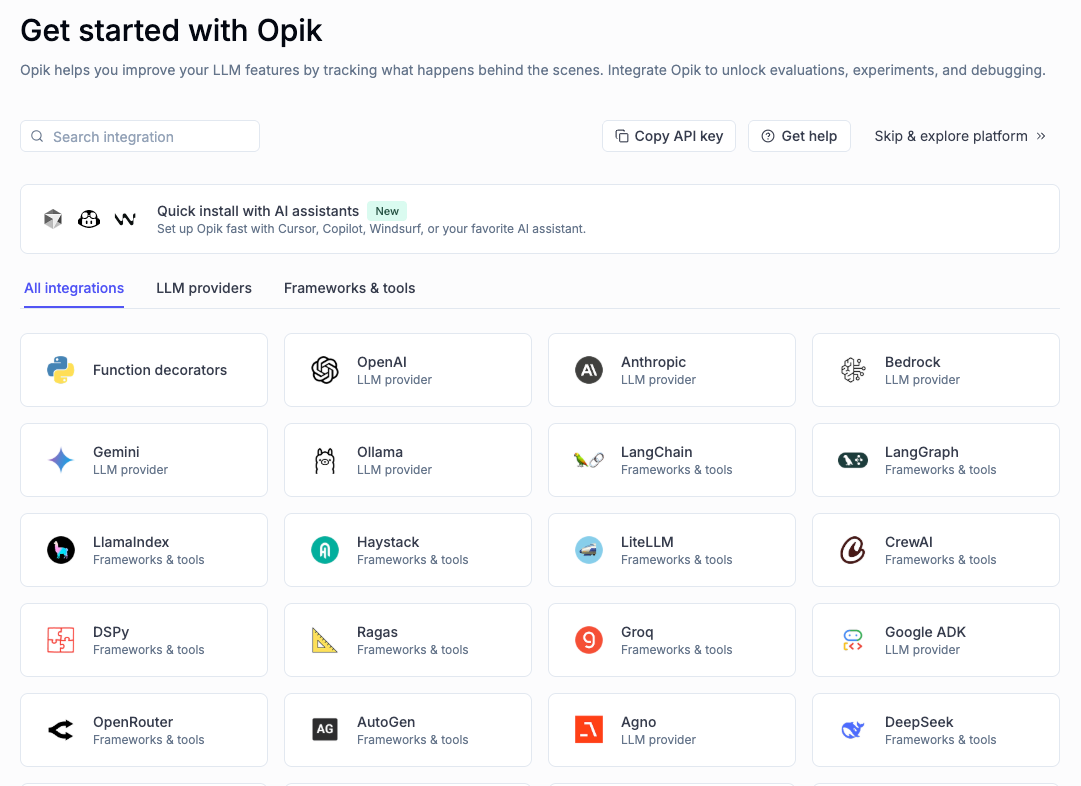
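
For readers less familiar with percentile aggregations, the sketch below shows one way p50, p90, and p99 can be computed over a column of numeric values using only the Python standard library. The `percentile_summary` helper and the sample values are hypothetical, and the interpolation method Opik uses internally may differ from the one chosen here.

```python
import statistics


def percentile_summary(values: list[float]) -> dict[str, float]:
    """Return p50/p90/p99 and the average for a list of numeric values."""
    if len(values) < 2:
        raise ValueError("need at least two values to compute percentiles")
    # quantiles(n=100) returns the 99 cut points between percentiles 1..99.
    cuts = statistics.quantiles(values, n=100, method="inclusive")
    return {
        "p50": cuts[49],
        "p90": cuts[89],
        "p99": cuts[98],
        "avg": statistics.fmean(values),
    }


# Hypothetical per-item durations in milliseconds.
durations_ms = [float(v) for v in range(1, 102)]
summary = percentile_summary(durations_ms)
```

The same helper applies unchanged to cost, feedback scores, or token counts, since it only assumes a list of numbers.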

## 🔌 Integrations

**Support for GPT-5.2 in Playground and Online Scoring**

Added full support for GPT-5.2 models in both the Playground and online scoring for the OpenAI and OpenRouter providers.

**Harbor Integration**

Added a comprehensive Opik integration for Harbor, a benchmark evaluation framework for autonomous LLM agents. The integration enables observability for agent benchmark evaluations (SWE-bench, LiveCodeBench, Terminal-Bench, etc.).

👉 [Harbor Integration Documentation](https://www.comet.com/docs/opik/integrations/harbor)

***

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.9.40...1.9.56)

*Releases*: `1.9.41`, `1.9.42`, `1.9.43`, `1.9.44`, `1.9.45`, `1.9.46`, `1.9.47`, `1.9.48`, `1.9.49`, `1.9.50`, `1.9.51`, `1.9.52`, `1.9.53`, `1.9.54`, `1.9.55`, `1.9.56`

# December 9, 2025

Here are the most relevant improvements we've made since the last release:

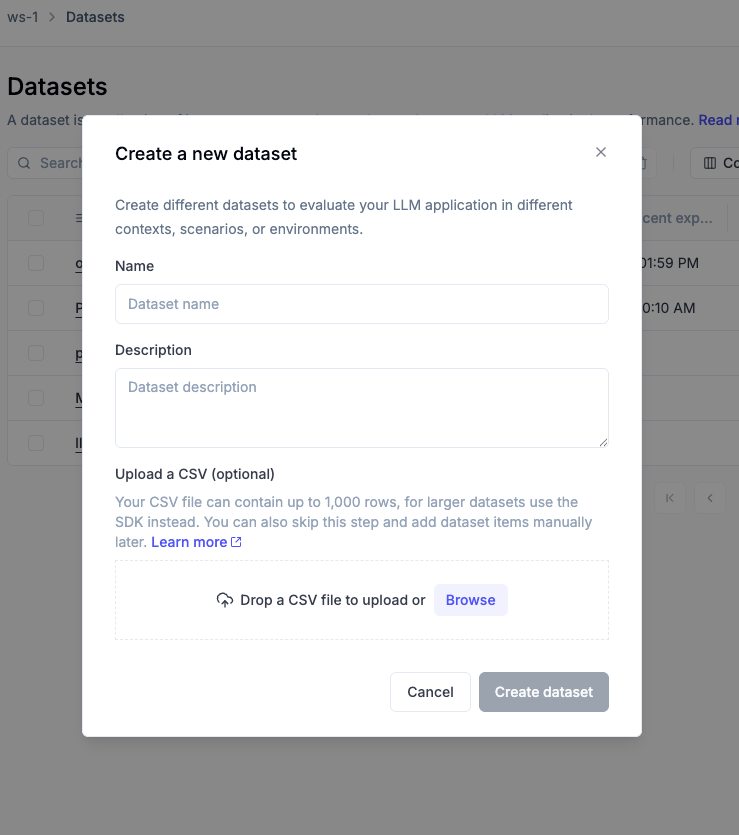

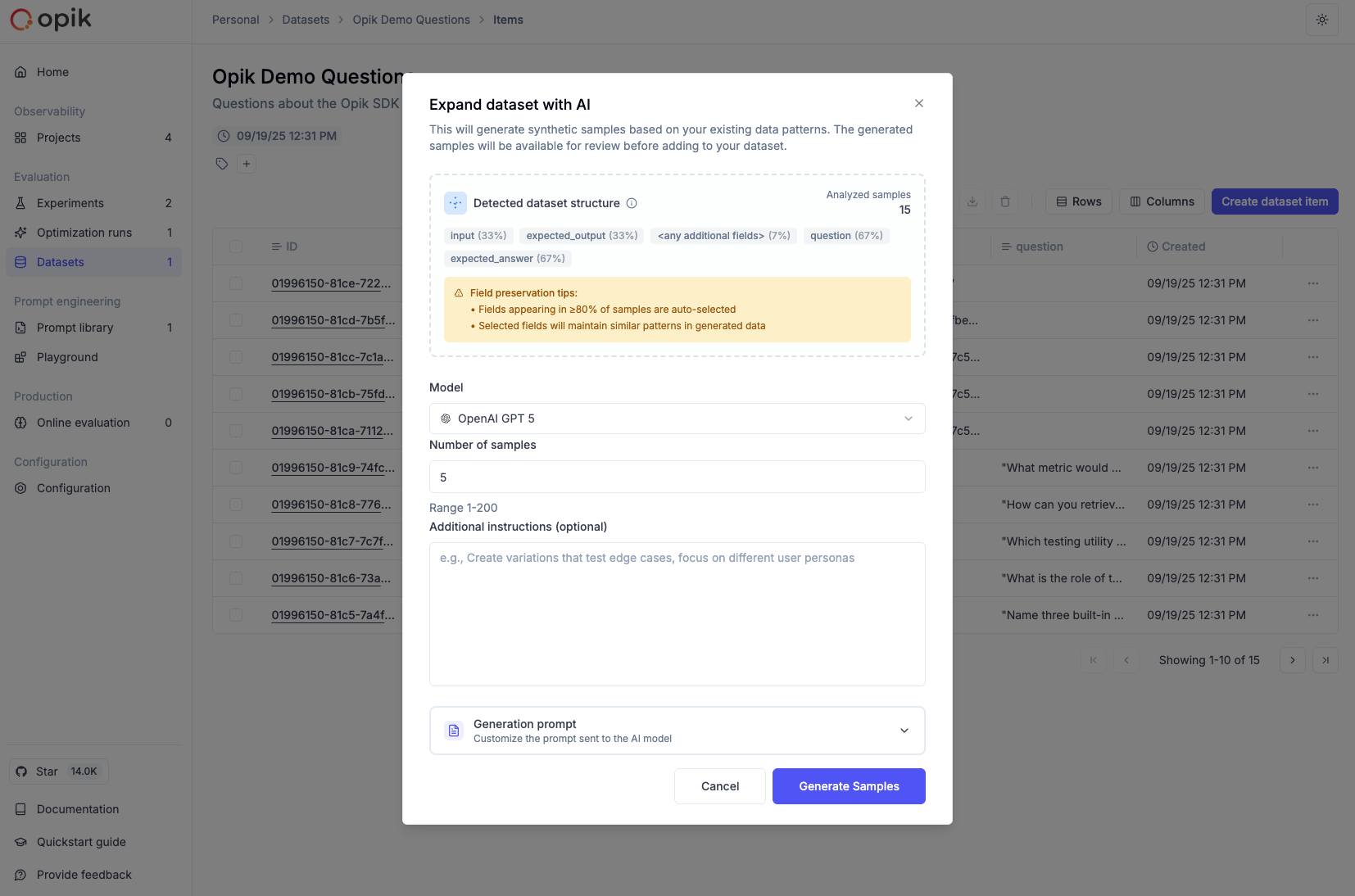

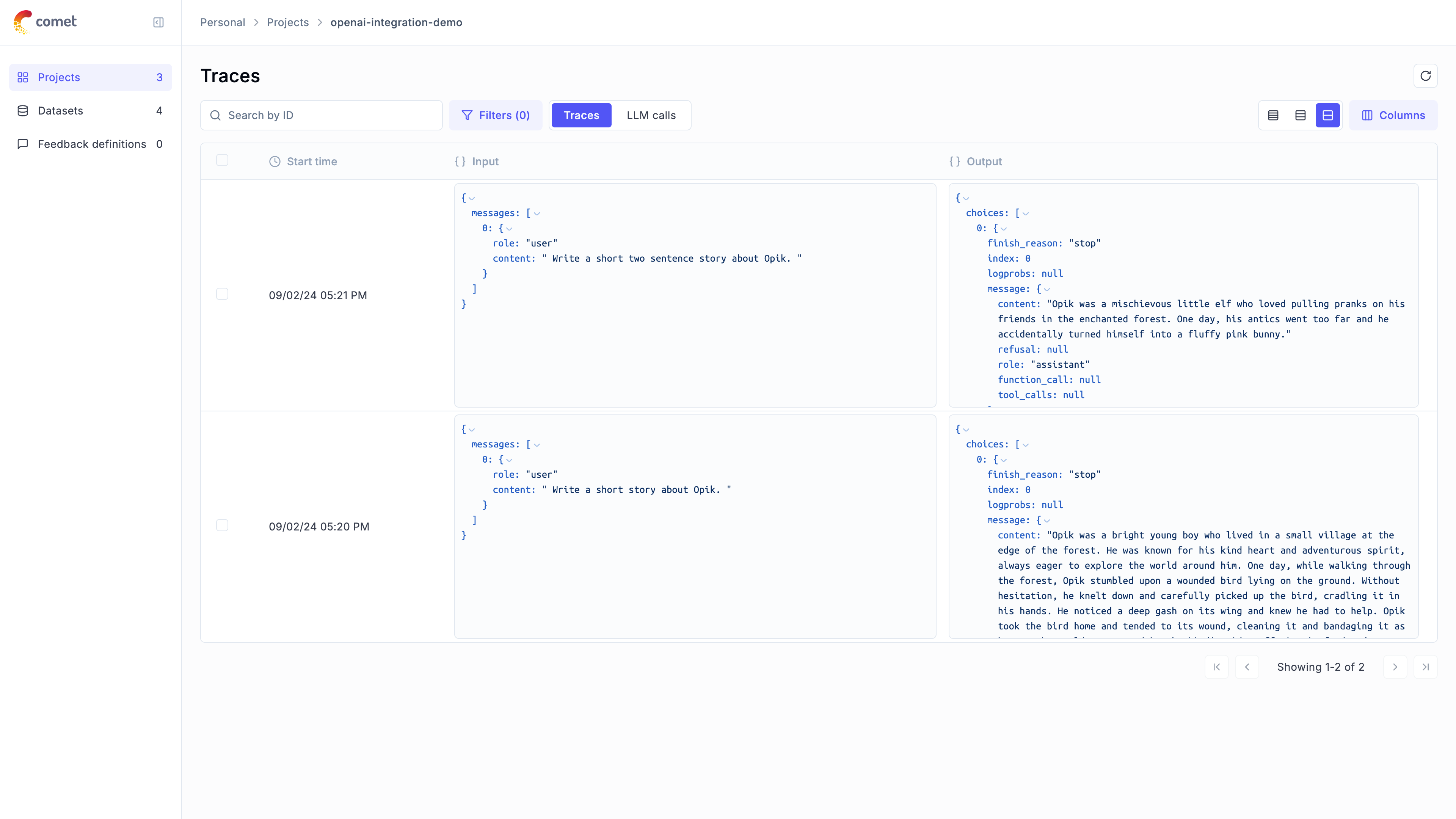

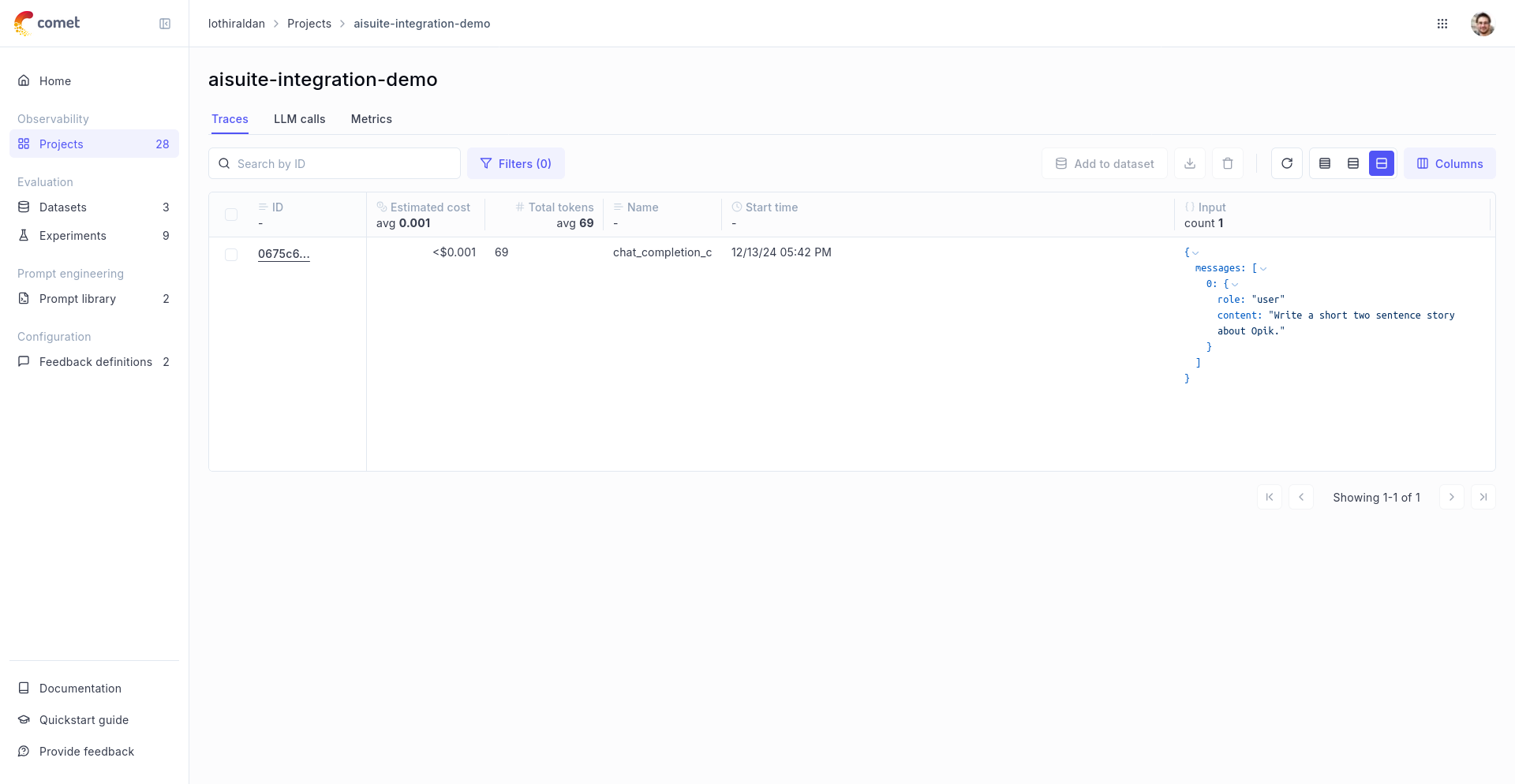

## 📊 Dataset Improvements

We've enhanced dataset functionality with several key improvements:

* **Edit Dataset Items** - You can now edit dataset items directly from the UI, making it easier to update and refine your evaluation data.

* **Remove Dataset Upload Limit for Self-Hosted** - Self-hosted deployments no longer have dataset upload limits, giving you more flexibility for large-scale evaluations.

* **Dataset Item Tagging Support** - Added comprehensive tagging support for dataset items, enabling better organization and filtering of your evaluation data.

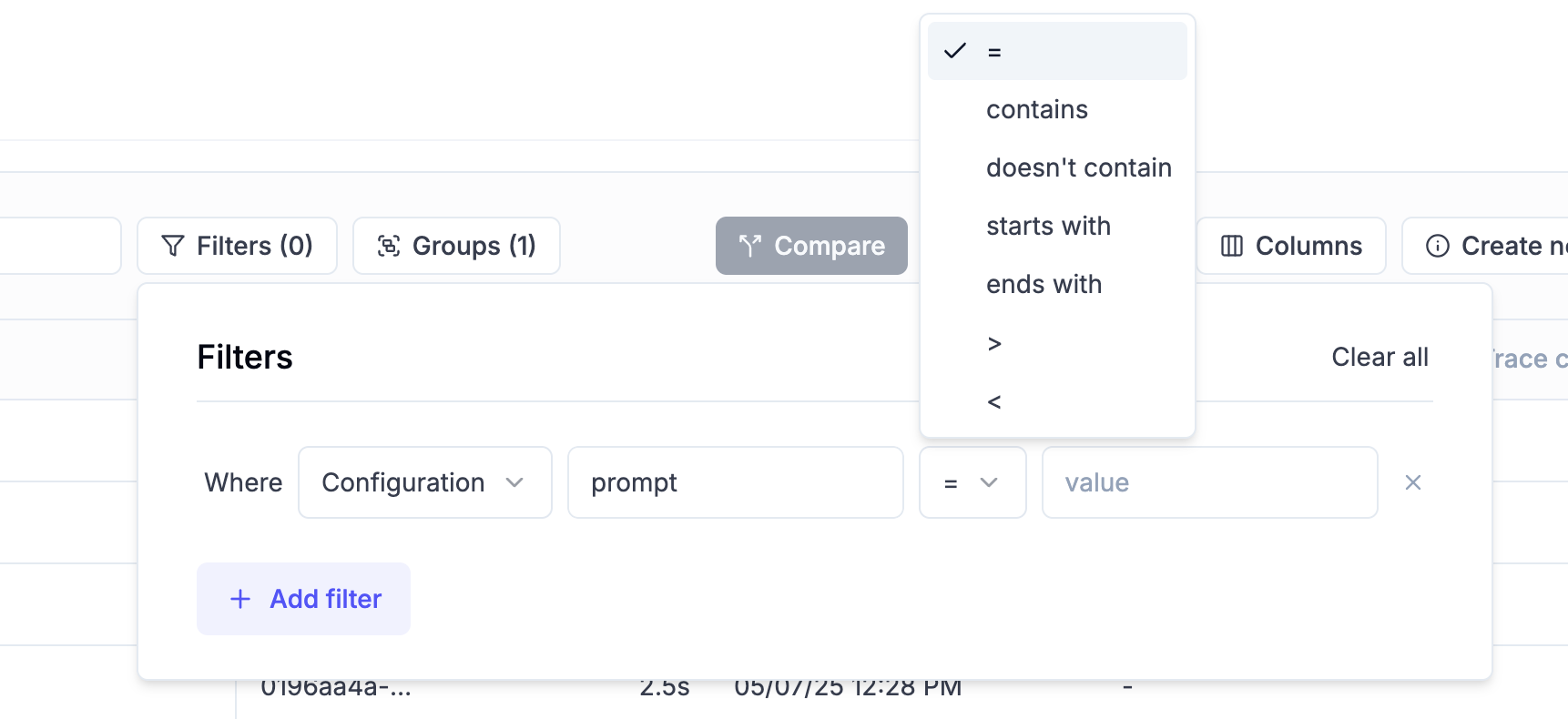

* **Dataset Filtering Capabilities by Any Column** - Filter datasets by any column in both the playground and dataset view, giving you flexible ways to find and work with specific data subsets.

* **Ability to Rename Datasets** - Rename datasets directly from the UI, making it easier to organize and manage your evaluation datasets.

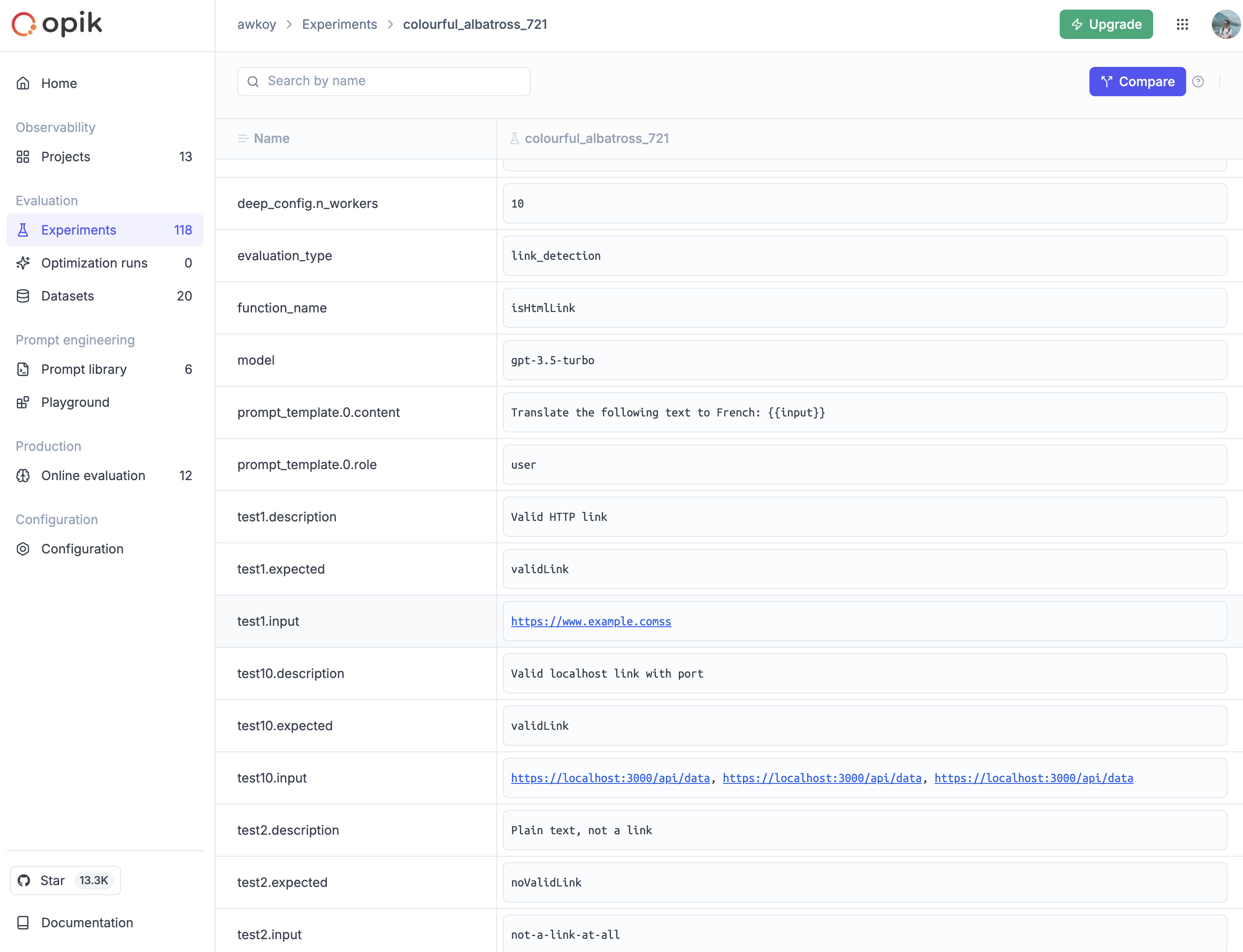

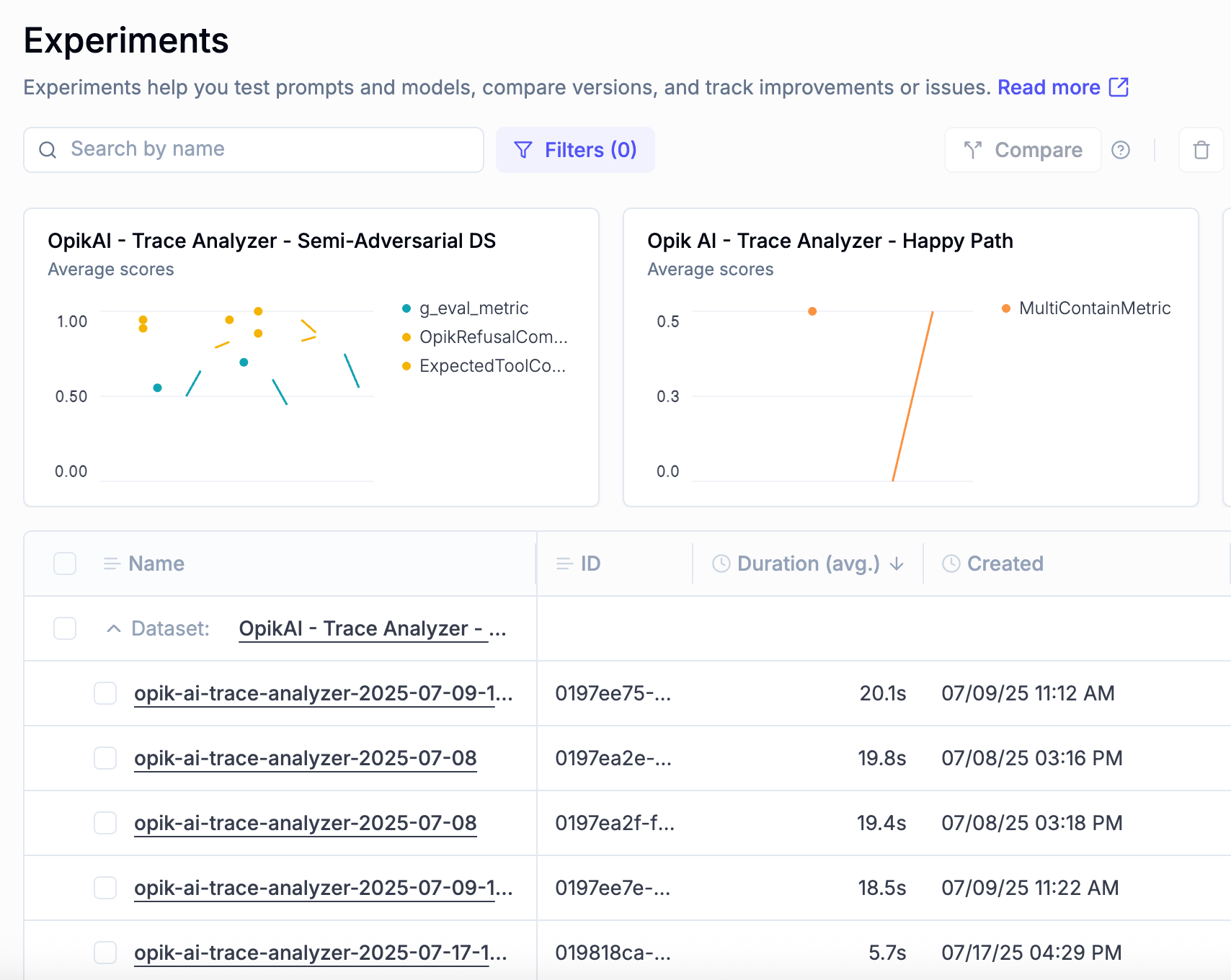

## 📈 Experiment Updates

We've made significant improvements to experiment management and analysis:

* **Experiment-Level Metrics** - Compute experiment-level metrics (as opposed to experiment-item-level metrics) for better insights into your evaluation results. Read more in the [experiment-level metrics documentation](https://www.comet.com/docs/opik/evaluation/evaluate_your_llm#computing-experiment-level-metrics).

* **Rename Experiments & Metadata** - Update experiment names and metadata config directly from the dashboard, giving you more control over experiment organization.

* **Token & Cost Columns** - Token usage and cost are now surfaced in the experiment items table for easy scanning and cost visibility.

## 🎮 Playground Improvements

We've made the Playground more powerful and easier to use for non-technical users:

* **Easy Navigation from Playground to Dataset and Metrics** - Quick navigation links from the playground to related datasets and metrics, streamlining your workflow.

* **Advanced filtering for Playground Datasets** - Filter playground datasets by tags and any other columns, making it easier to find and work with specific dataset items.

* **Pagination for the Playground** - Added pagination support to handle large datasets more efficiently in the playground.

* **Added Experiment Progress Bar in the Playground** - Visual progress indicators for running experiments, giving you real-time feedback on experiment status.

* **Added Model-Specific Throttling and Concurrency Configs in the Playground** - Configure throttling and concurrency settings per model in the playground, giving you fine-grained control over resource usage.

## 🚨 Enhanced Alerts

We've expanded alert capabilities with threshold support:

* **Added Threshold Support for Trace and Thread Feedback Scores** - Configure thresholds for feedback scores on traces and threads, enabling more precise alerting based on quality metrics.

* **Added Threshold to Trace Error Alerts** - Set thresholds for trace error alerts to get notified only when error rates exceed your configured limits.

* **Trigger Experiment Created Alert from the Playground** - Receive alerts when experiments are created directly from the playground.

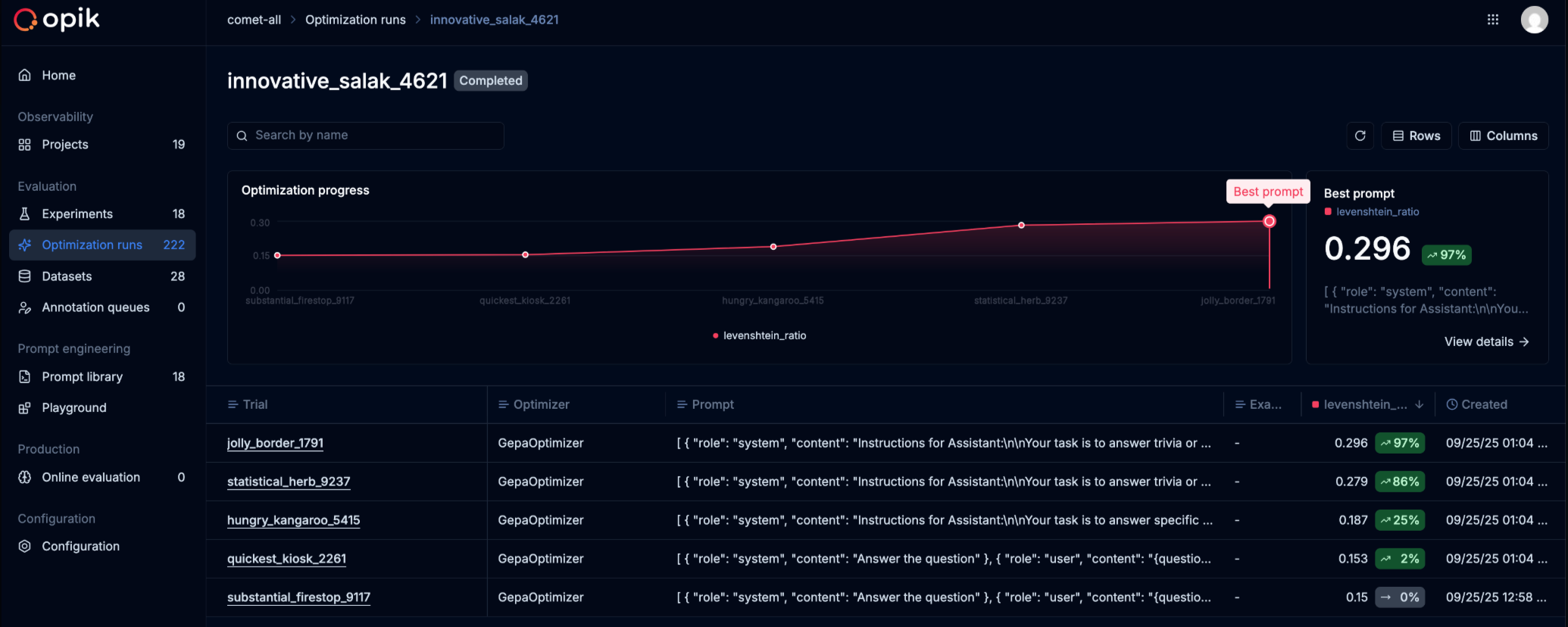

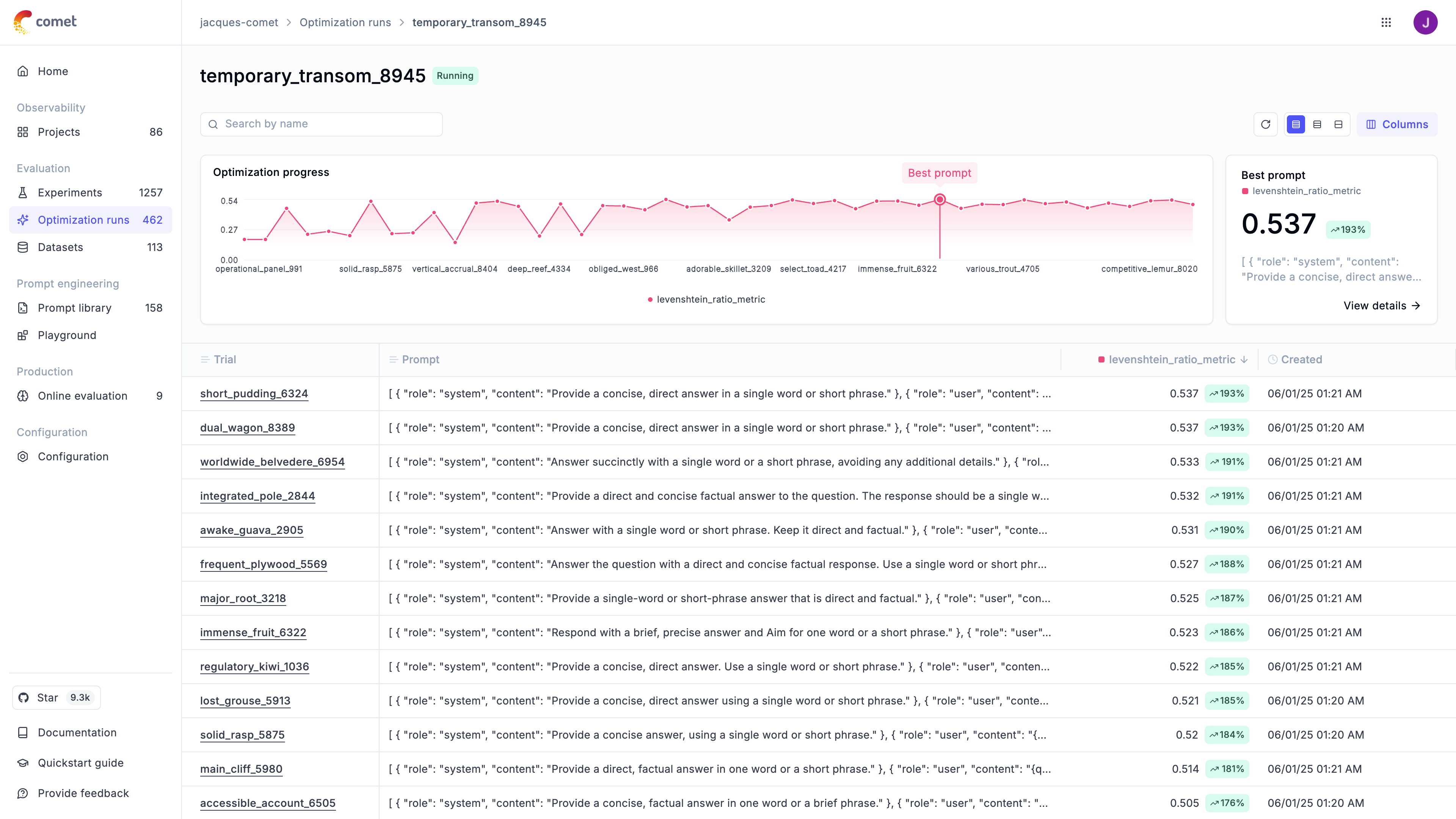

## 🤖 Opik Optimizer Updates

Significant enhancements to the Opik Optimizer:

* **Cost and Latency Optimization Support** - Added support for optimizing both cost and latency metrics simultaneously. Read more in the [optimization metrics documentation](/docs/opik/agent_optimization/optimization/define_metrics#include-cost-and-duration-metrics).

* **Training and Validation Dataset Support** - Introduced support for training and validation dataset splits, enabling better optimization workflows. Learn more in the [dataset documentation](/docs/opik/agent_optimization/optimization/define_datasets#trainvalidation-splits).

* **Example Scripts for Microsoft Agents and CrewAI** - New example scripts demonstrating how to use Opik Optimizer with popular LLM frameworks. Check out the [example scripts](https://github.com/comet-ml/opik/tree/main/sdks/opik_optimizer/scripts/llm_frameworks).

* **UI Enhancements and Optimizer Improvements** - Several UI enhancements and various improvements to Few Shot, MetaPrompt, and GEPA optimizers for better usability and performance.

## 🎨 User Experience Enhancements

Improved usability across the platform:

* **Added `has_tool_spans` Field to Show Tool Calls in Thread View** - Tool calls are now visible in thread views, providing better visibility into agent tool usage.

* **Added Export Capability (JSON/CSV) Directly from Trace, Thread, and Span Detail Views** - Export data directly from detail views in JSON or CSV format, making it easier to analyze and share your observability data.

## 🤖 New Models!

Expanded model support:

* **Added Support for Gemini 3 Pro, GPT 5.1, OpenRouter Models** - Added support for the latest model versions including Gemini 3 Pro, GPT 5.1, and OpenRouter models, giving you access to the newest AI capabilities.

***

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.9.17...1.9.40)

*Releases*: `1.9.18`, `1.9.19`, `1.9.20`, `1.9.21`, `1.9.22`, `1.9.23`, `1.9.25`, `1.9.26`, `1.9.27`, `1.9.28`, `1.9.29`, `1.9.31`, `1.9.32`, `1.9.33`, `1.9.34`, `1.9.35`, `1.9.36`, `1.9.37`, `1.9.38`, `1.9.39`, `1.9.40`

# November 18, 2025

Here are the most relevant improvements we've made since the last release:

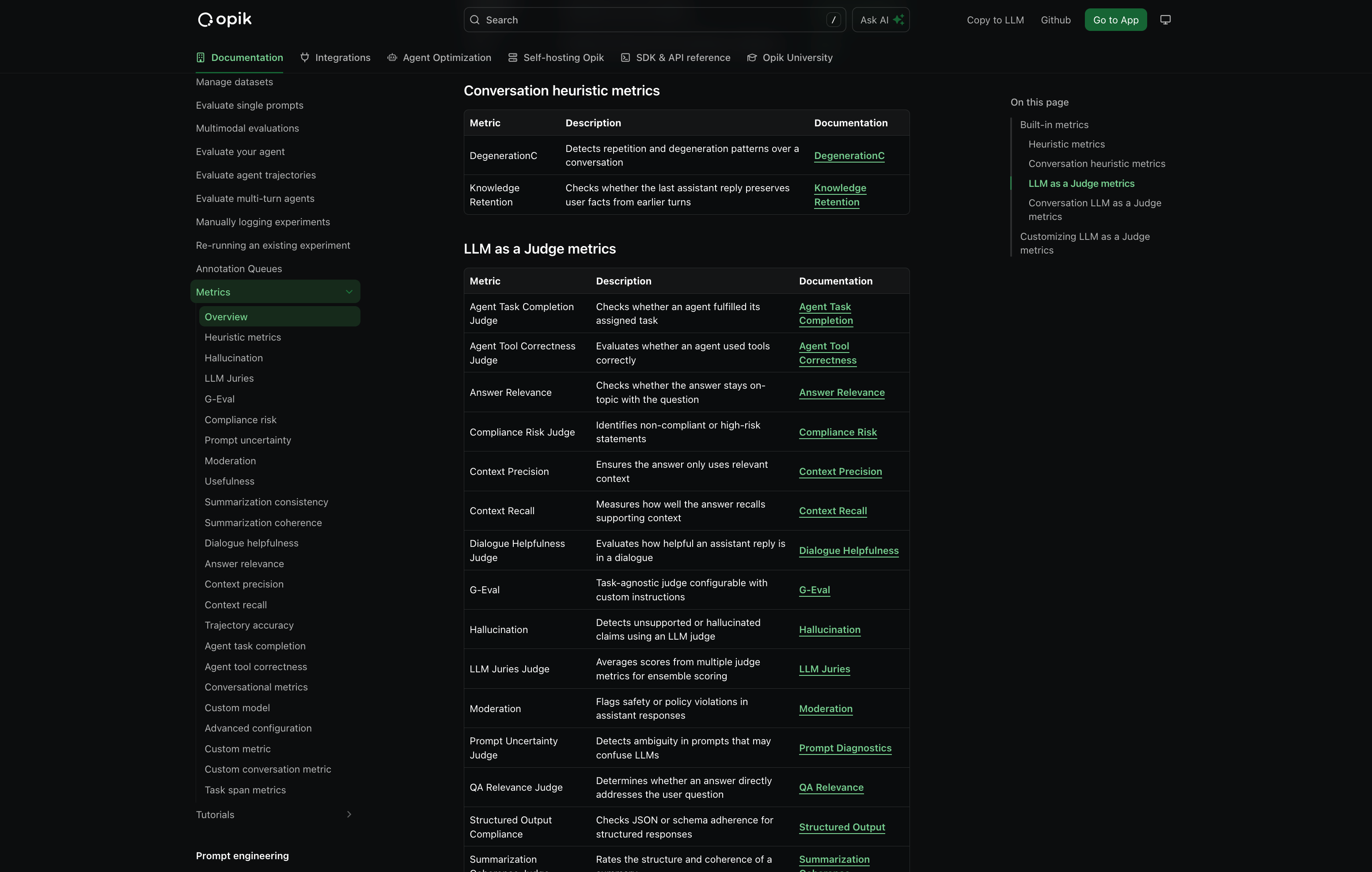

## 📊 More Metrics!

We have shipped **37 new built-in metrics**, faster & more reliable LLM judging, plus robustness fixes.

**New Metrics Added** - We've expanded the evaluation metrics library with a comprehensive set of out-of-the-box metrics including:

* **Classic NLP Heuristics** - BERTScore, Sentiment analysis, Bias detection, Conversation drift, and more

* **Lightweight Heuristics** - Fast, non-LLM based metrics perfect for CI/CD pipelines and large-scale evaluations

* **LLM-as-a-Judge Presets** - More out-of-the-box presets you can use without custom configuration

**LLM-as-a-Judge & G-Eval Improvements**:

* **Compatible with newer models** - Now works seamlessly with the latest model versions

* **Faster default judge** - Default judge is now `gpt-5-nano` for faster, more accurate evals

* **LLM Jury support** - Aggregate scores across multiple models/judges into a single ensemble score for more reliable evaluations
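
The idea behind an LLM jury can be sketched in a few lines: several judges score the same output and their scores are combined into one ensemble score. The example below is an illustration only. The `jury_score` helper, the stub judges, and the choice of a plain mean are assumptions, and it does not use Opik's actual LLM Jury API.

```python
from statistics import fmean
from typing import Callable

# A judge is any callable that maps a model output to a score in [0, 1].
Judge = Callable[[str], float]


def jury_score(output: str, judges: list[Judge]) -> float:
    """Combine the scores of several judges into a single mean score."""
    if not judges:
        raise ValueError("at least one judge is required")
    return fmean(judge(output) for judge in judges)


# Stub judges standing in for calls to different LLMs (hypothetical).
def strict_judge(output: str) -> float:
    return 0.5


def lenient_judge(output: str) -> float:
    return 1.0


ensemble = jury_score("The capital of France is Paris.", [strict_judge, lenient_judge])
```

Averaging is the simplest aggregation; a median or a majority vote would be reasonable alternatives when one judge is prone to outliers.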

**Enhanced Preprocessing**:

* **Improved English text handling** - Better processing of English text to reduce false negatives

* **Better emoji handling** - Enhanced emoji processing for more accurate evaluations

**Robustness Improvements**:

* **Automatic retries** - LLM judge will retry on transient failures to avoid flaky test results

* **More reliable evaluation runs** - Faster, more consistent evaluation runs for CI and experiments
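
As a rough illustration of what retrying on transient failures involves, the sketch below wraps a judge call in a retry loop with exponential backoff. `TransientJudgeError`, `call_with_retries`, and the default attempt and delay values are all hypothetical names and numbers chosen for the example; they do not describe Opik's internal retry policy.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientJudgeError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on TransientJudgeError with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientJudgeError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the failure to the caller
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError("unreachable")
```

The `sleep` parameter is injectable so that tests can skip the real delays.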

👉 Access the metrics docs here: [Evaluation Metrics Overview](/docs/opik/evaluation/metrics/overview)

## 🔒 Anonymizers - PII Information Redaction

We've added **support for PII (Personally Identifiable Information) redaction** before sending data to Opik. This helps you protect sensitive information while still getting the observability insights you need.

With anonymizers, you can:

* **Automatically redact PII** from traces and spans before they're sent to Opik

* **Configure custom anonymization rules** to match your specific privacy requirements

* **Maintain compliance** with data protection regulations

* **Protect sensitive data** without losing observability

👉 Read the full docs: [Anonymizers](/docs/opik/production/anonymizers)
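
To give a feel for what redaction before logging looks like, here is a minimal, standalone sketch that masks email addresses and phone numbers in a nested payload. The `redact` function, the two regular expressions, and the placeholder tokens are assumptions made for illustration; this does not use Opik's anonymizer API, which is described in the docs linked above, and real PII detection generally needs broader rules than these two patterns.

```python
import re
from typing import Any

# Deliberately simple patterns; production rules would need to be more thorough.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(value: Any) -> Any:
    """Recursively replace emails and phone numbers in strings, dicts, and lists."""
    if isinstance(value, str):
        value = EMAIL_RE.sub("[EMAIL]", value)
        return PHONE_RE.sub("[PHONE]", value)
    if isinstance(value, dict):
        return {key: redact(item) for key, item in value.items()}
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # numbers, booleans, None, etc. pass through unchanged


# Hypothetical trace input that would be cleaned before being logged.
raw_input = {
    "question": "Mail jane.doe@example.com or call +1 555-123-4567",
    "attempt": 3,
}
safe_input = redact(raw_input)
```

Applying the function to the whole payload before it leaves the application keeps the structure of the trace intact while removing the sensitive values.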

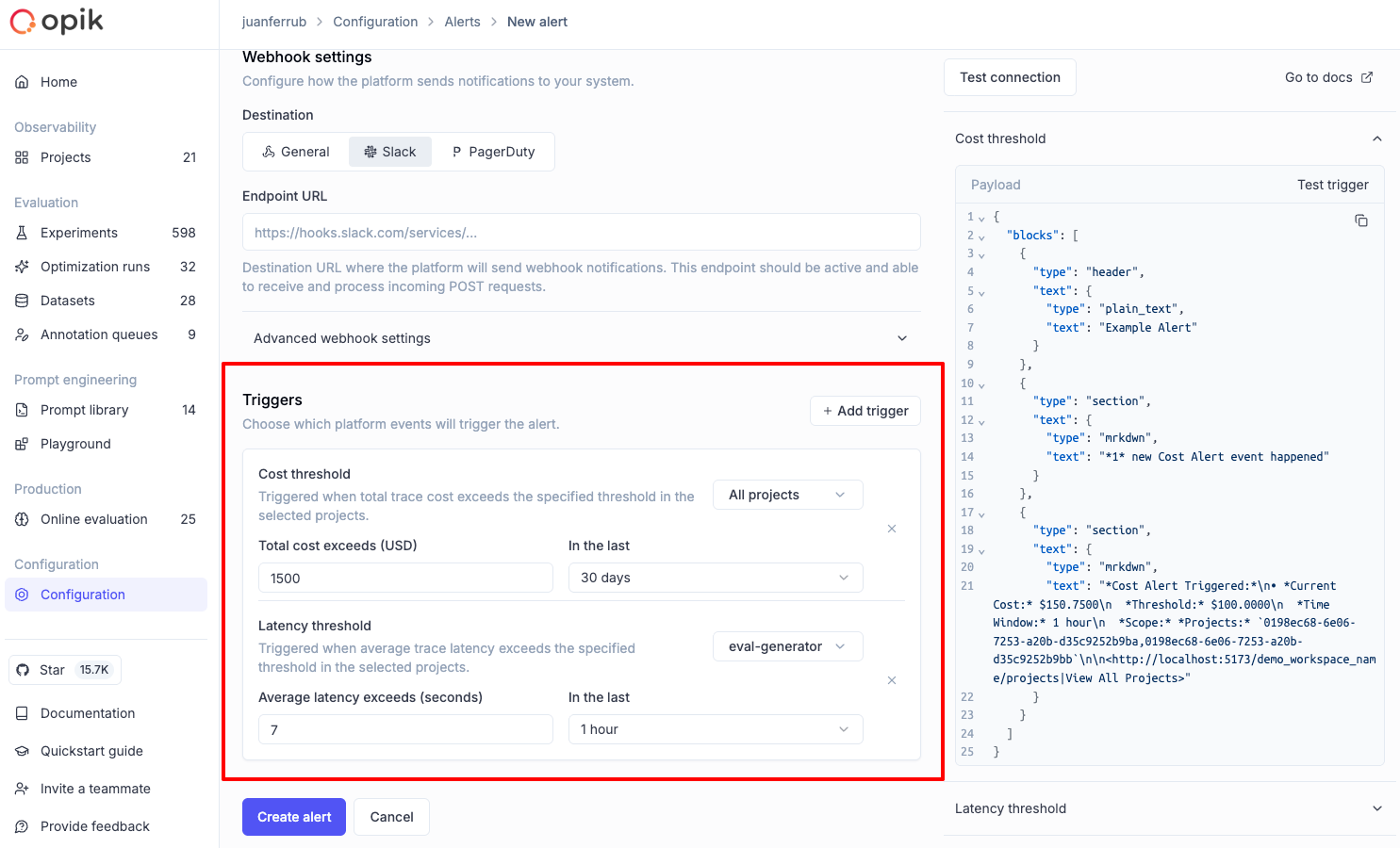

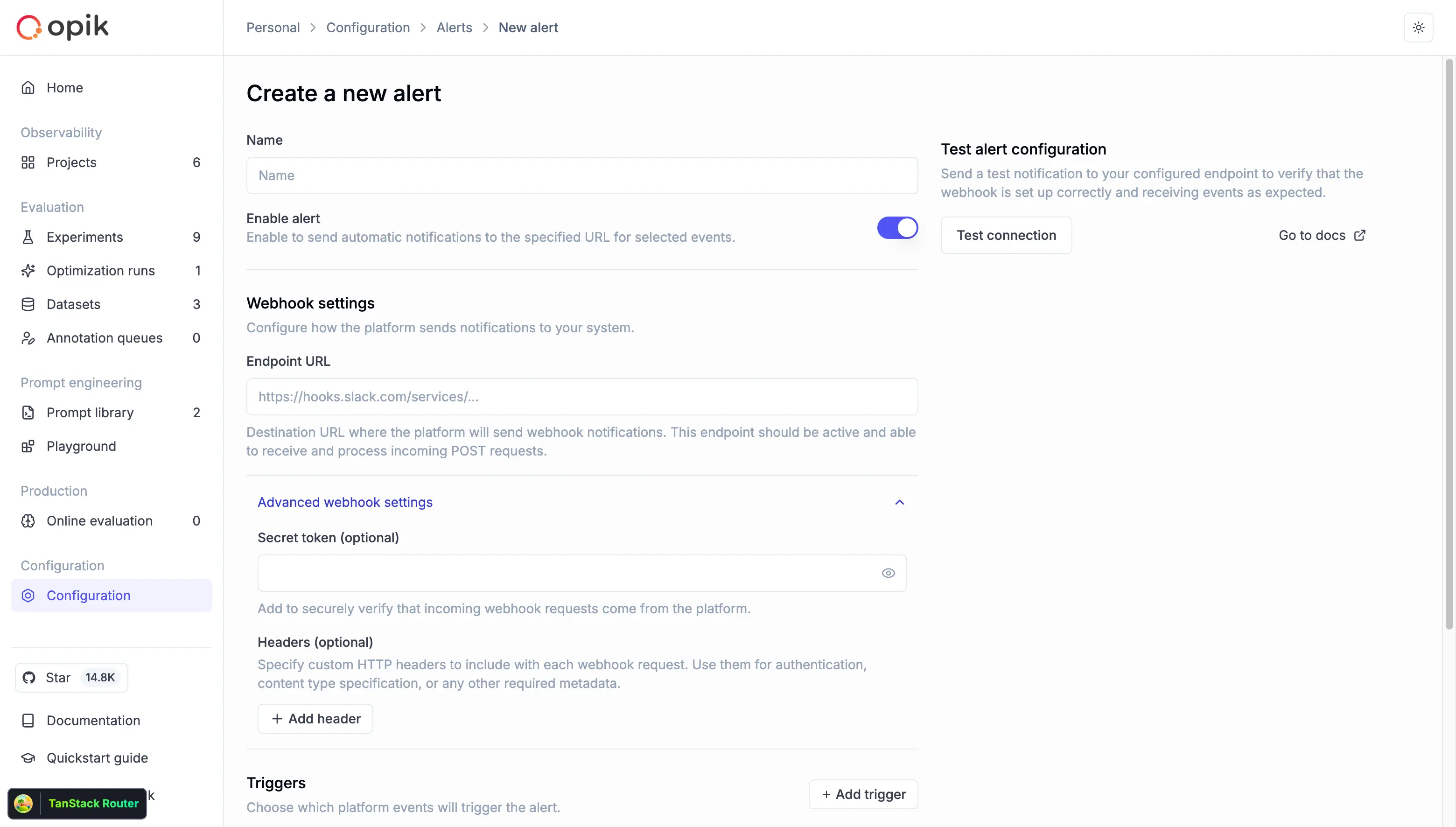

## 🚨 New Alert Types

We've expanded our alerting capabilities with new alert types and improved functionality:

* **Experiment Finished Alert** - Get notified when an experiment completes, so you can review results immediately or trigger your CI/CD pipelines.

* **Cost Alerts** - Set thresholds for cost metrics and receive alerts when spending exceeds your limits

* **Latency Alerts** - Monitor response times and get notified when latency exceeds configured thresholds

These new alert types help you stay on top of your LLM application's performance and costs, enabling proactive monitoring and faster response to issues.

👉 Read more: [Alerts Guide](/docs/opik/production/alerts)

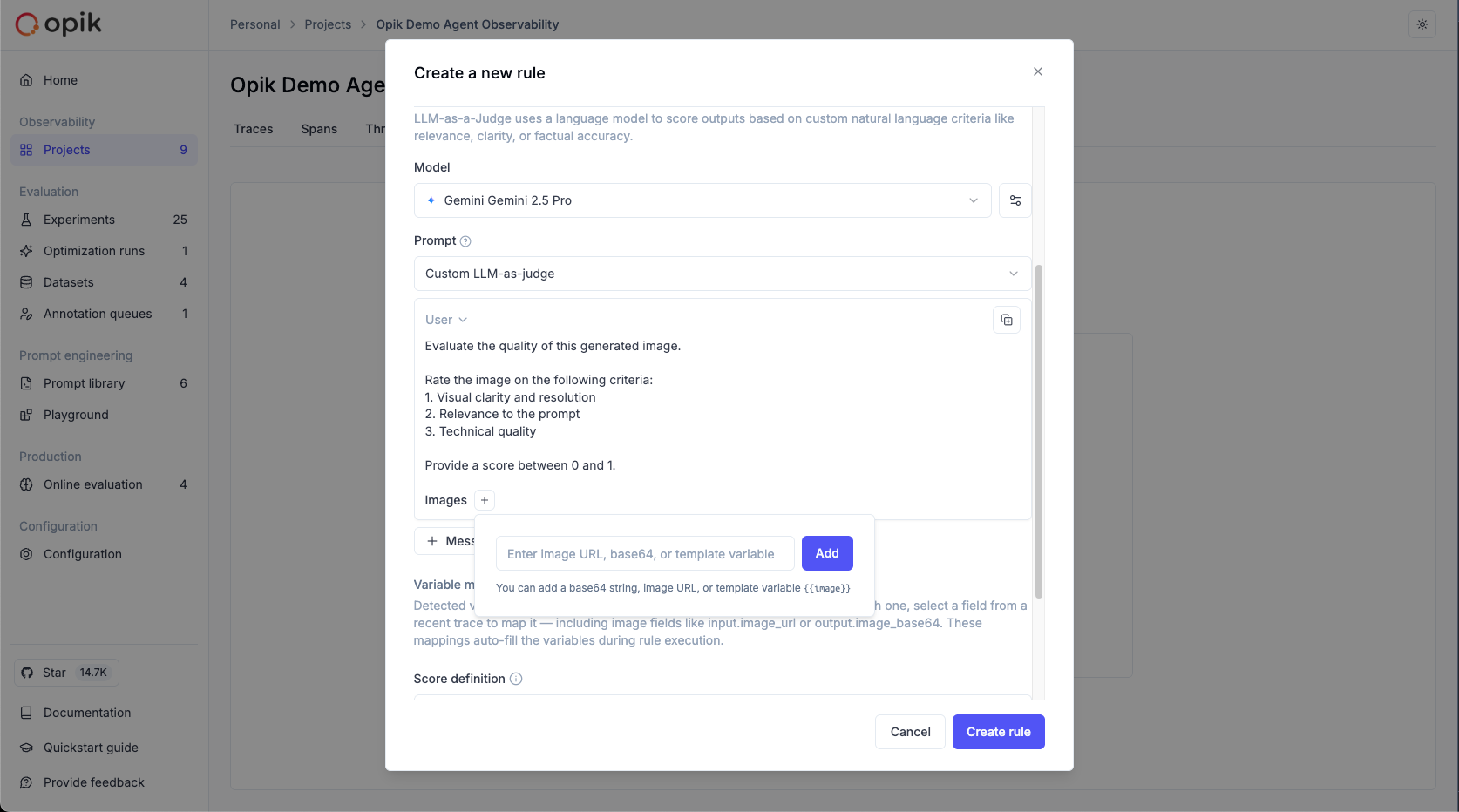

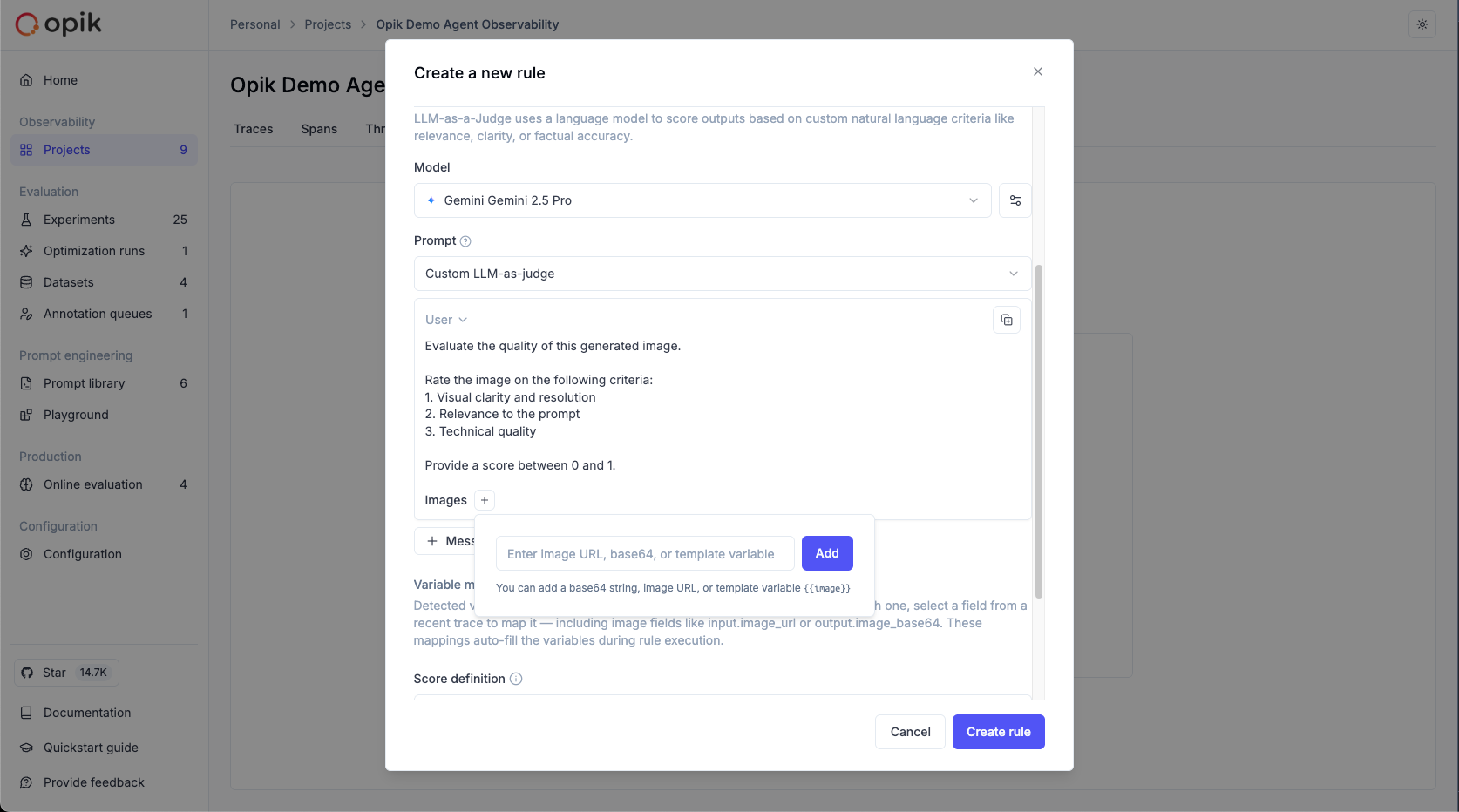

## 🎥 Multimodal Support

We've significantly enhanced multimodal capabilities across the platform:

* **Video LLM-as-a-Judge** - Added support for Video LLM-as-a-Judge, enabling evaluation of video content in your traces

* **Video Cost Tracking** - Added cost tracking for video models, so you can monitor spending on video processing operations

* **Image support in LLM-as-a-Judge** - Both Python and TypeScript SDKs now support image processing in LLM-as-a-Judge evaluations, allowing you to evaluate traces containing images

These enhancements make it easier to build and evaluate multimodal applications that work with images and video content.

## 🔌 Custom AI Providers

We've improved support for custom AI providers with enhanced configuration options:

* **Multiple Custom Providers** - Set up multiple custom AI providers for use in the Playground and online scoring

* **Custom Headers Support** - Configure custom headers for your custom providers, giving you more flexibility in how you connect to enterprise AI services

## 🧪 Enhanced Evals & Observability

We've added several improvements to make evaluation and observability more powerful:

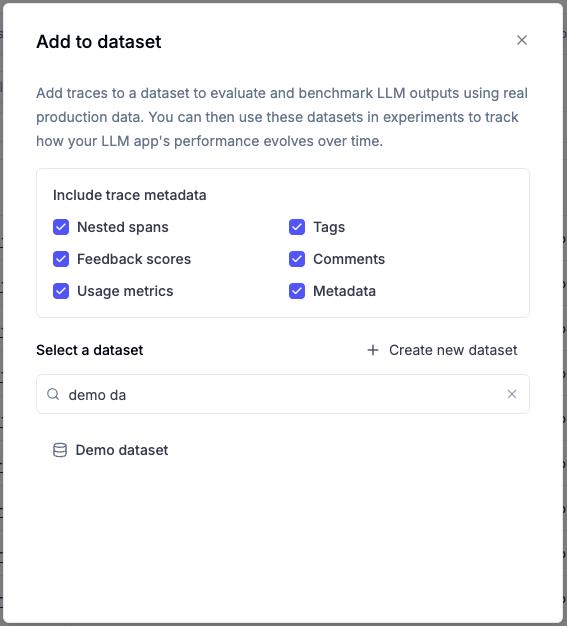

* **Trace and Span Metadata in Datasets** - Ability to add trace and span metadata to datasets for advanced agent evaluation, enabling more sophisticated evaluation workflows

* **Tokens Breakdown Display** - Display tokens breakdown (input/output) in the trace view, giving you detailed visibility into token usage for each span and trace

* **Binary (Boolean) Feedback Scores** - New support for binary (Boolean) feedback scores, allowing you to capture simple yes/no or pass/fail evaluations

## 🎨 UX Improvements

We've made several user experience enhancements across the platform:

* **Improved Pretty Mode** - Enhanced pretty mode for traces, threads, and annotation queues, making it easier to read and understand your data

* **Date Filtering for Traces, Threads, and Spans** - Added date filtering capabilities, allowing you to focus on specific time ranges when analyzing your data

* **New Optimization Runs Section** - Added a new optimization runs section to the home page, giving you quick access to your optimization results

* **Comet Debugger Mode** - Added Comet Debugger Mode with app version and connectivity status, helping you troubleshoot issues and understand your application's connection status. Read more about it [here](/docs/opik/faq#using-comet-debugger-mode-uibrowser)

***

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.97...1.9.17)

*Releases*: `1.8.98`, `1.8.99`, `1.8.100`, `1.8.101`, `1.8.102`, `1.9.0`, `1.9.1`, `1.9.2`, `1.9.3`, `1.9.4`, `1.9.5`, `1.9.6`, `1.9.7`, `1.9.8`, `1.9.9`, `1.9.10`, `1.9.11`, `1.9.12`, `1.9.13`, `1.9.14`, `1.9.15`, `1.9.16`, `1.9.17`

# November 4, 2025

Here are the most relevant improvements we've made since the last release:

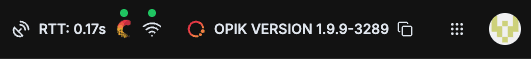

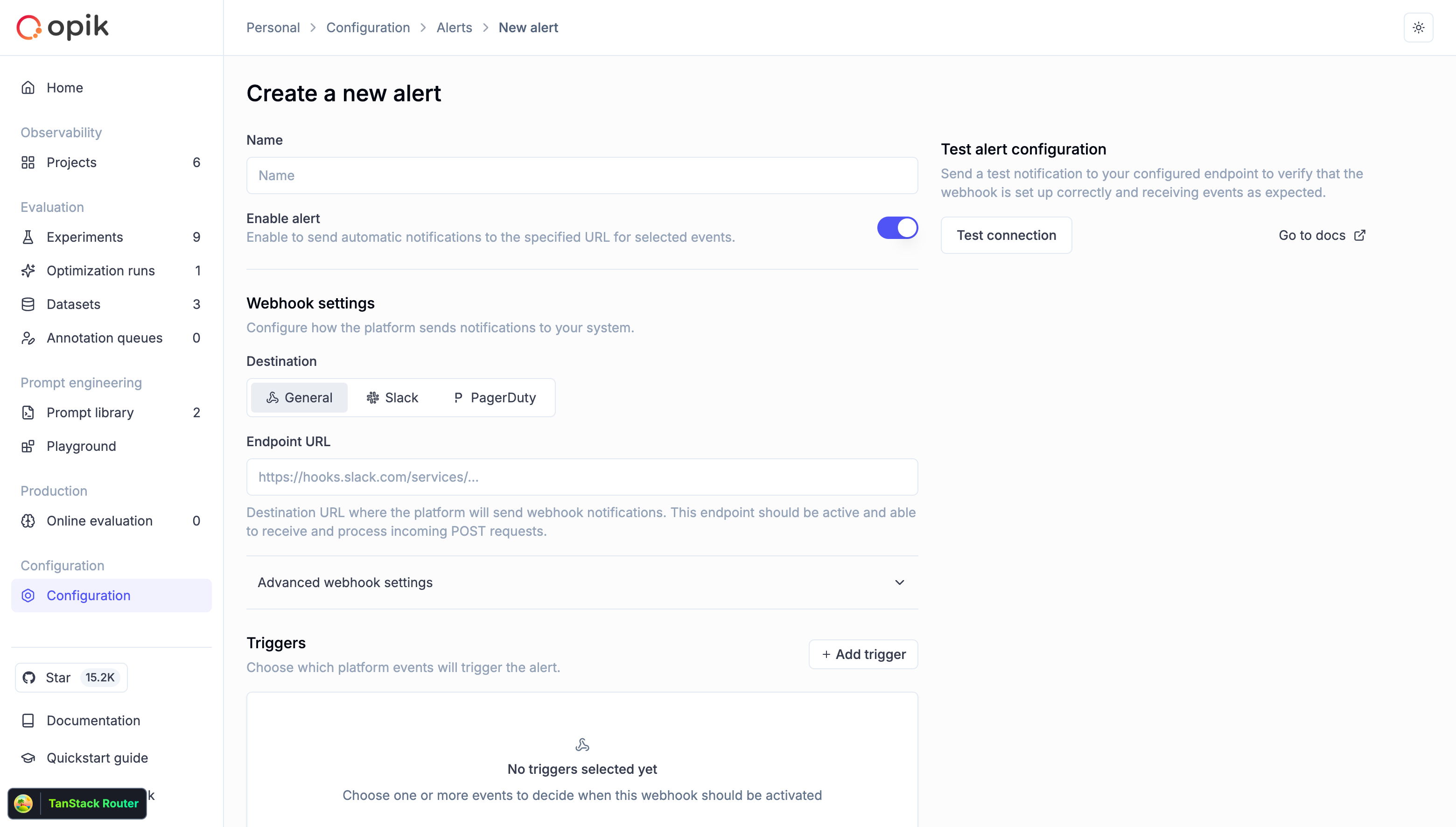

## 🚨 Native Slack and PagerDuty Alerts

We now offer **native Slack and PagerDuty alert integrations**, eliminating the need for any middleware configuration. Set up alerts directly in Opik to receive notifications when important events happen in your workspace.

With native integrations, you can:

* **Configure Slack channels** directly from Opik settings

* **Set up PagerDuty incidents** without additional webhook setup

* **Receive real-time notifications** for errors, feedback scores, and critical events

* **Streamline your monitoring workflow** with built-in integrations

👉 Read the full docs here - [Alerts Guide](/docs/opik/production/alerts)

## 🖼️ Multimodal LLM-as-a-Judge Support for Visual Evaluation

LLM-as-a-Judge metrics can now evaluate traces that contain images when using vision-capable models. This is useful for:

* **Evaluating image generation quality** - Assess the quality and relevance of generated images

* **Analyzing visual content** in multimodal applications - Evaluate how well your application handles visual inputs

* **Validating image-based responses** - Ensure your vision models produce accurate and relevant outputs

To reference image data from traces in your evaluation prompts:

* In the prompt editor, click the **"Images +"** button to add an image variable

* Map the image variable to the trace field containing image data using the Variable Mapping section

👉 Read more: [Evaluating traces with images](/docs/opik/production/rules#evaluating-traces-with-images)

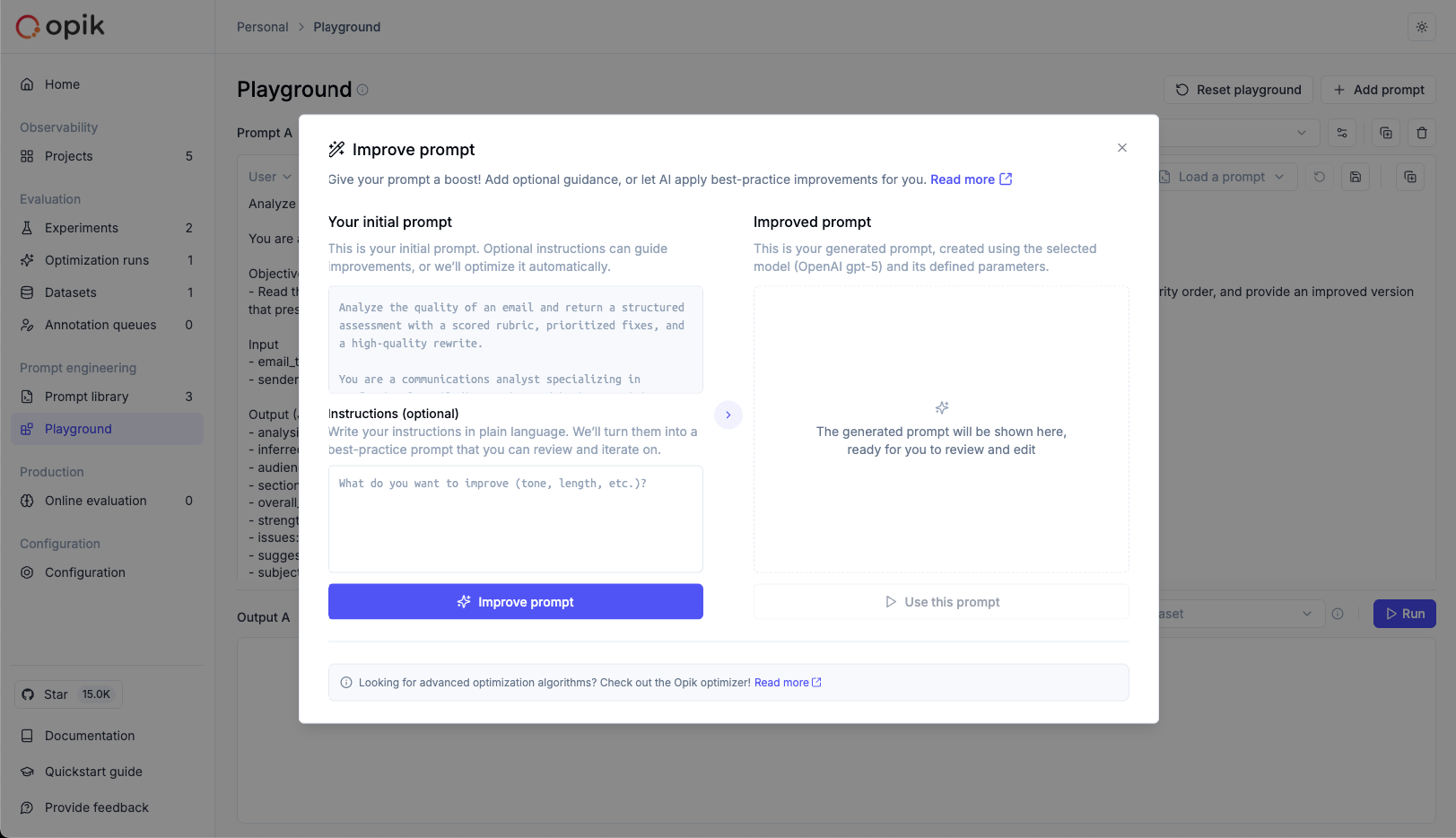

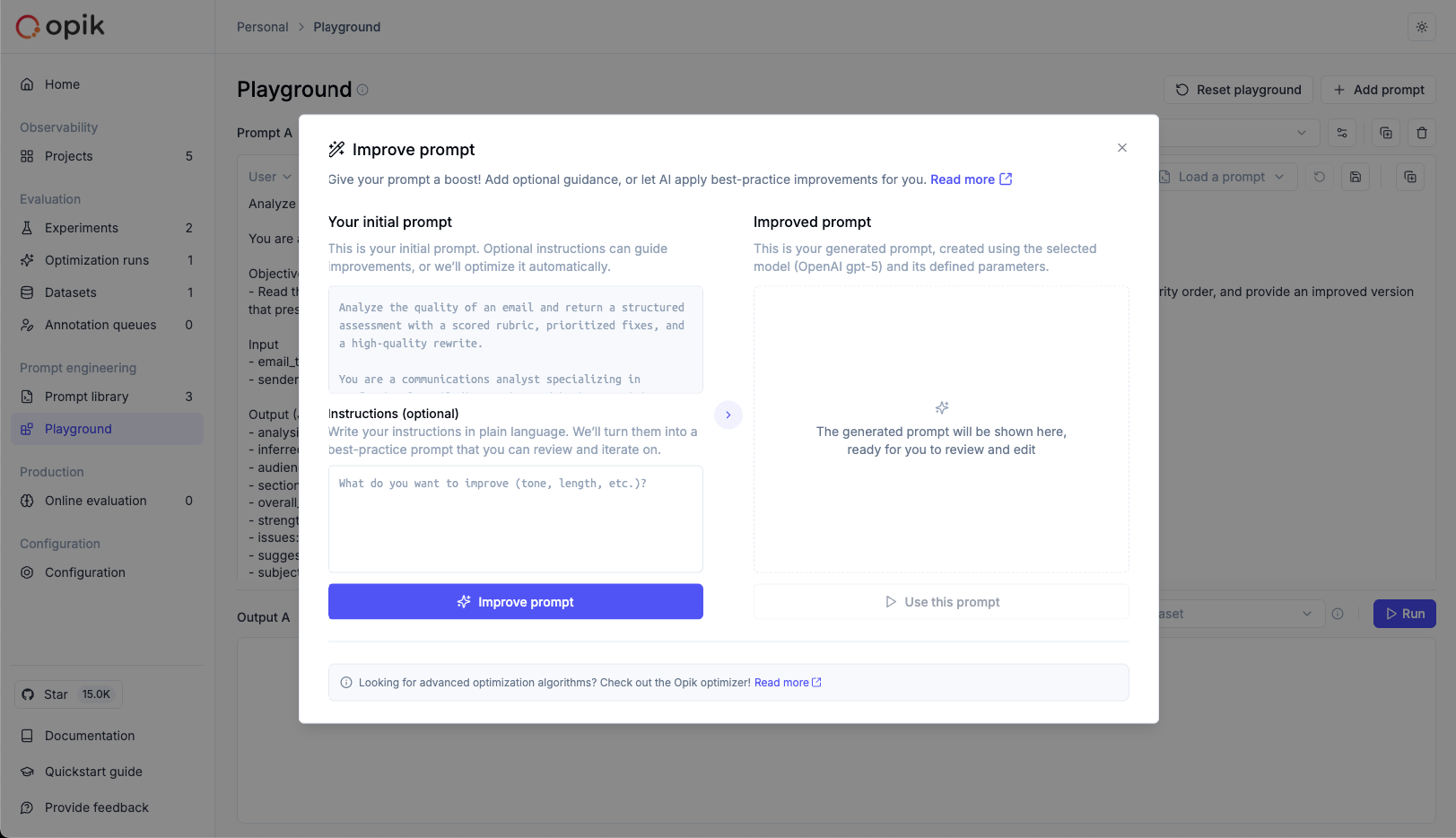

## ✨ Prompt Generator & Improver

We've launched the **Prompt Generator** and **Prompt Improver** — two AI-powered tools that help you create and refine prompts faster, directly inside the Playground.

Designed for non-technical users, these features automatically apply best practices from OpenAI, Anthropic, and Google, helping you craft clear, effective, and production-grade prompts without leaving the Playground.

### Why it matters

Prompt engineering is still one of the biggest bottlenecks in LLM development. With these tools, teams can:

* **Generate high-quality prompts** from simple task descriptions

* **Improve existing prompts** for clarity, specificity, and consistency

* **Iterate and test prompts seamlessly** in the Playground

### How it works

* **Prompt Generator** → Describe your task in plain language; Opik creates a complete system prompt following proven design principles

* **Prompt Improver** → Select an existing prompt; Opik enhances it following best practices

👉 Read the full docs: [Prompt Generator & Improver](/docs/opik/prompt_engineering/improve)

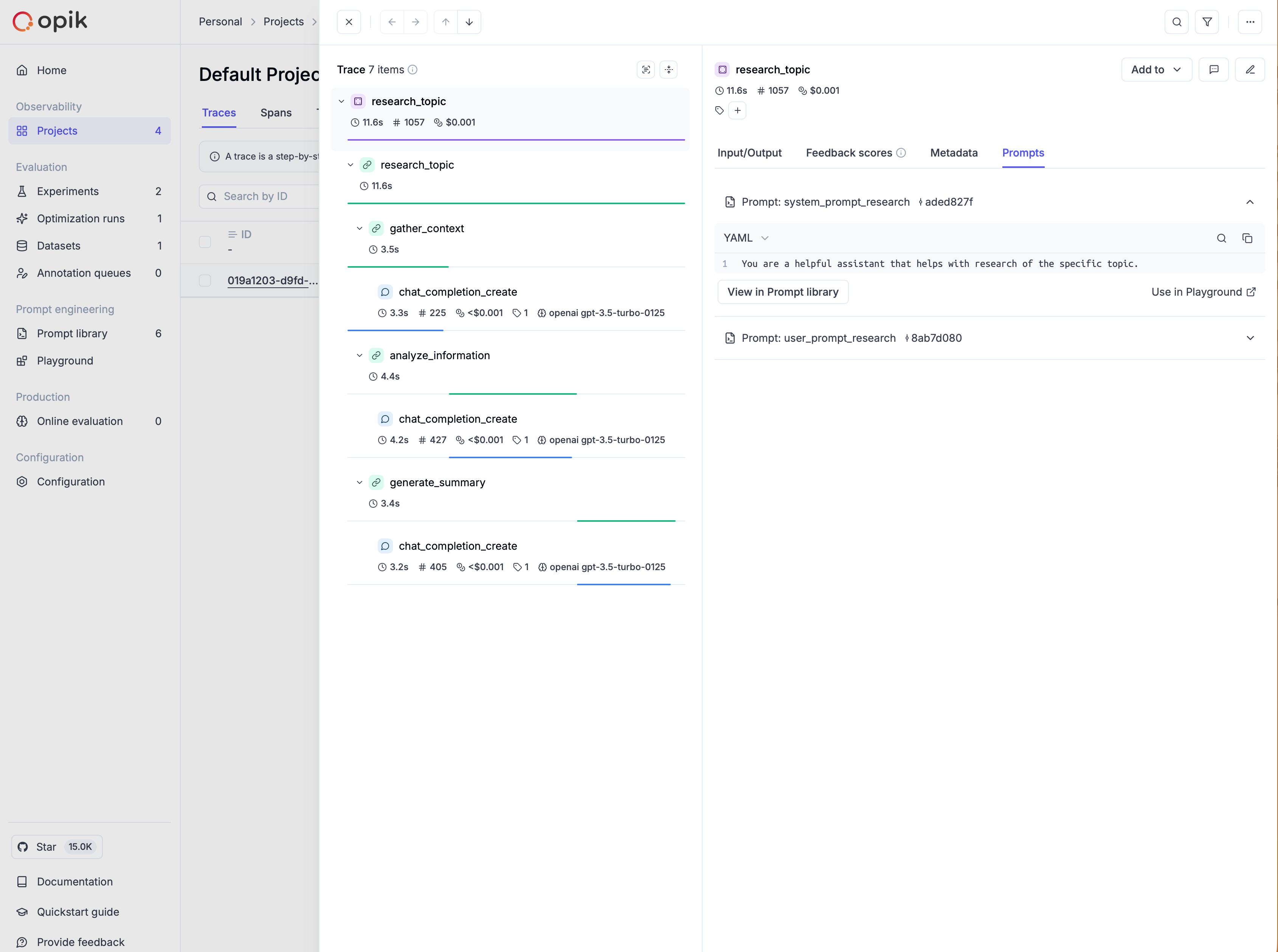

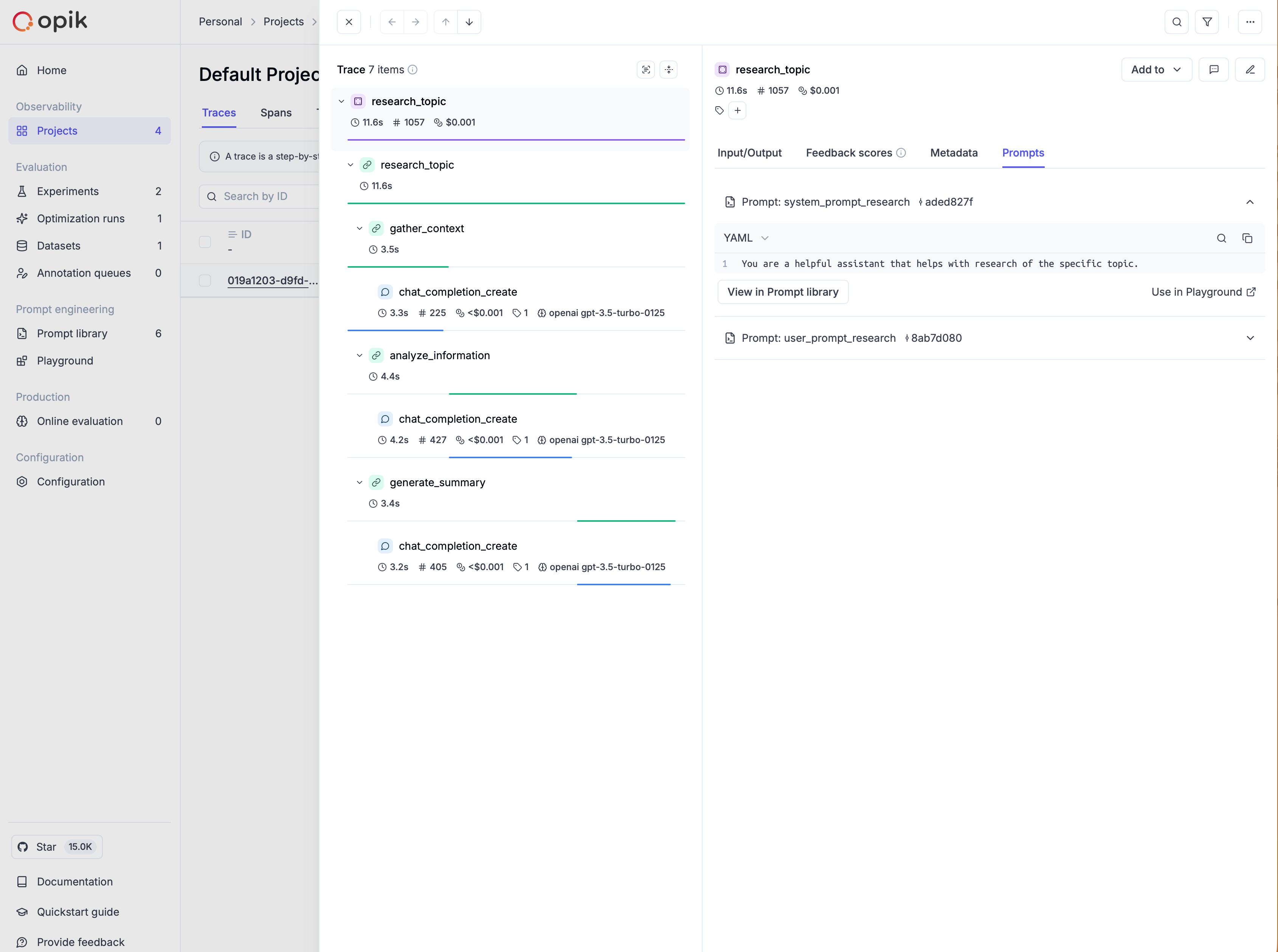

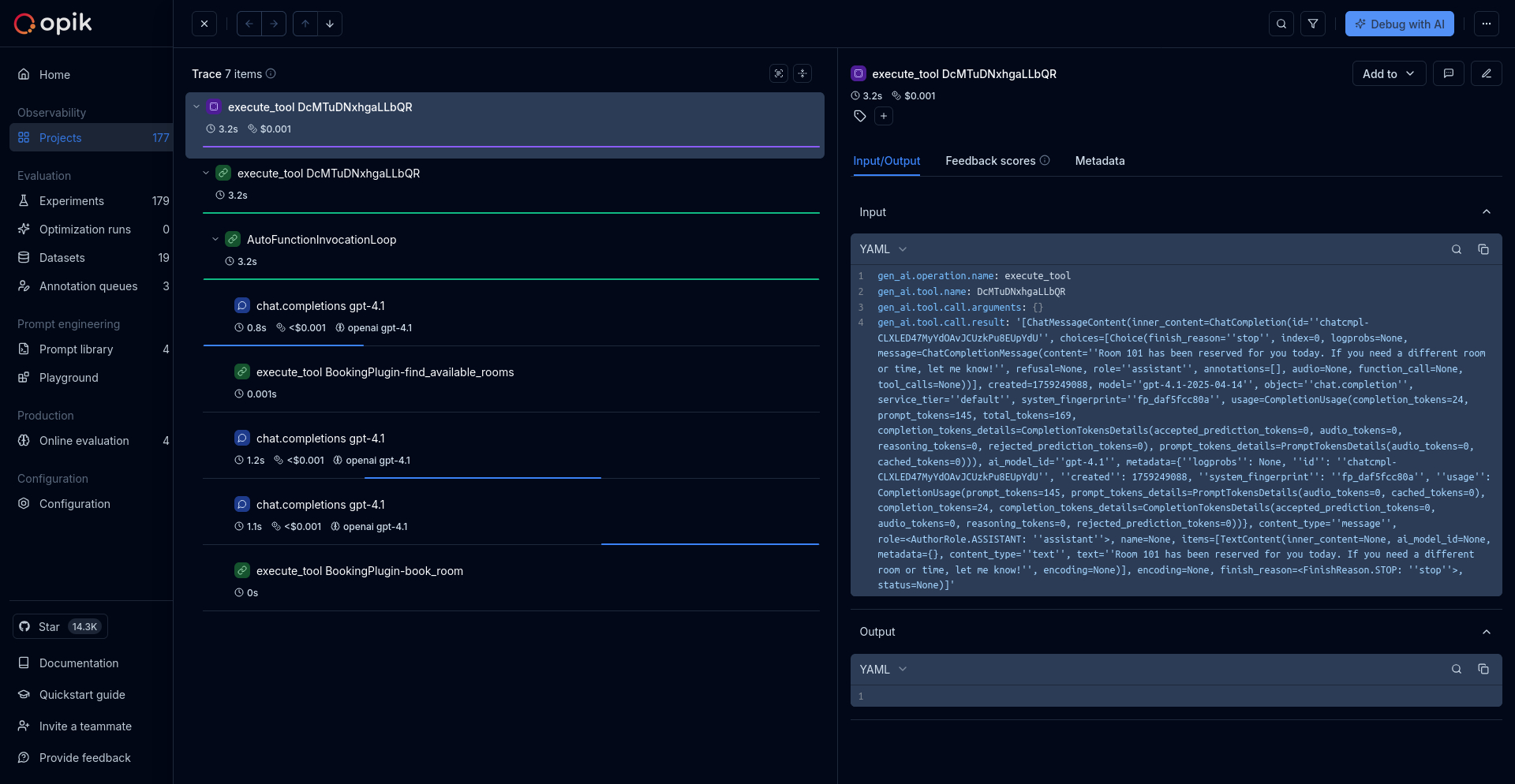

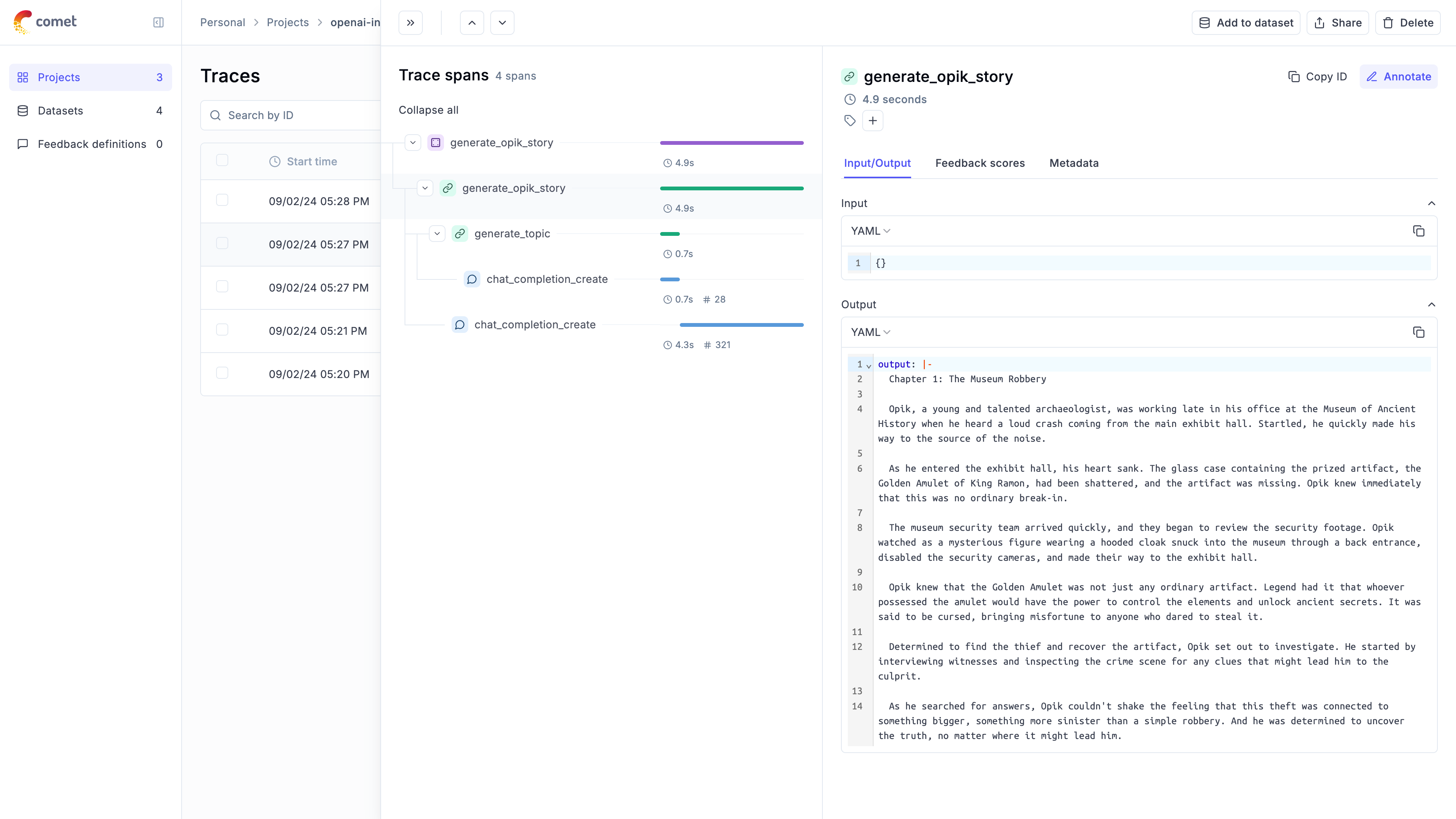

## 🔗 Advanced Prompt Integration in Spans & Traces

We've implemented **prompt integration into spans and traces**, creating a seamless connection between your Prompt Library, Traces, and the Playground.

You can now associate prompts directly with traces and spans using the `opik_context` module — so every execution is automatically tied to the exact prompt version used.

Understanding which prompt produced a given trace is key for users building both simple and advanced multi-prompt and multi-agent systems.

With this integration, you can:

* **Track which prompt version** was used in each function or span

* **Audit and debug prompts** directly from trace details

* **Reproduce or improve prompts** instantly in the Playground

* **Close the loop** between prompt design, observability, and iteration

Once added, your prompts appear in the trace details view — with links back to the Prompt Library and the Playground, so you can iterate in one click.
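The idea of tying each span to an exact prompt version can be sketched as a small data model. This is an illustration only: `PromptVersion`, `Span`, and `attach_prompt` are hypothetical names, the content-hash version id is an assumption, and none of this reproduces the `opik_context` API.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    name: str
    template: str

    @property
    def commit(self) -> str:
        # Short content hash: any change to the template yields a new version id.
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:8]


@dataclass
class Span:
    name: str
    prompts: list[PromptVersion] = field(default_factory=list)


def attach_prompt(span: Span, prompt: PromptVersion) -> None:
    """Record which prompt version a span used."""
    span.prompts.append(prompt)


v1 = PromptVersion("summarize", "Summarize: {{text}}")
v2 = PromptVersion("summarize", "Summarize in one sentence: {{text}}")

span = Span("summarize_call")
attach_prompt(span, v2)

for p in span.prompts:
    print(f"{span.name} used prompt '{p.name}' @ {p.commit}")
```

Because the version id is derived from the template text, two spans that report the same id are known to have used identical prompts.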

👉 Read more: [Adding prompts to traces and spans](/docs/opik/prompt_engineering/prompt_management#adding-prompts-to-traces-and-spans)

## 🧪 Better No-Code Experiment Capabilities in the Playground

We've introduced a series of improvements directly in the Playground to make experimentation easier and more powerful:

**Key enhancements:**

1. **Create or select datasets** directly from the Playground

2. **Create or select online score rules** - Ability to choose the ones that you want to use on each run

3. **Ability to pass dataset items to online score rules** - This enables reference-based experiments, where outputs are automatically compared to expected answers or ground truth, making objective evaluation simple

4. **One-click navigation to experiment results** - From the Playground, users can now:

* Jump into the Single Experiment View to inspect metrics and examples in detail, or

* Go to the Compare Experiments View to benchmark multiple runs side-by-side

## 📊 On-Demand Online Evaluation on Existing Traces and Threads

We've added **on-demand online evaluation** in Opik, letting users run metrics on already logged traces and threads — perfect for evaluating historical data or backfilling new scores.

### How it works

Select traces/threads, choose any online score rule (e.g., Moderation, Equals, Contains), and run evaluations directly from the UI — no code needed.

Results appear inline as feedback scores and are fully logged for traceability.

This enables:

* **Fast, no-code evaluation** of existing data

* **Easy retroactive measurement** of model and agent performance

* **Historical data analysis** without re-running traces
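Conceptually, backfilling scores means applying a rule to outputs that are already stored. The sketch below shows that idea with simplified stand-ins for the Equals and Contains rules; the trace dictionaries and function names are hypothetical, and in Opik this is done from the UI without code.

```python
from typing import Callable

# Hypothetical stand-ins for already logged traces.
traces = [
    {"id": "t1", "output": "Paris"},
    {"id": "t2", "output": "The capital is Paris."},
    {"id": "t3", "output": "Lyon"},
]


def equals(output: str, reference: str) -> float:
    return 1.0 if output == reference else 0.0


def contains(output: str, reference: str) -> float:
    return 1.0 if reference in output else 0.0


def backfill(
    traces: list[dict], rule: Callable[[str, str], float], reference: str
) -> dict[str, float]:
    """Score existing traces without re-running the application."""
    return {t["id"]: rule(t["output"], reference) for t in traces}


equals_scores = backfill(traces, equals, "Paris")
contains_scores = backfill(traces, contains, "Paris")
print(equals_scores)
print(contains_scores)
```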

👉 Read more: [Manual Evaluation](/docs/opik/tracing/annotate_traces#manual-evaluation)

## 🤖 Agent Evaluation Guides

We've added two new comprehensive guides on evaluating agents:

### 1. Evaluating Agent Trajectories

This guide helps you verify that your agent makes the right tool calls before returning its final answer. It is fundamentally about evaluating and scoring what happens within a trace.

👉 Read the full guide: [Evaluating Agent Trajectories](/docs/opik/evaluation/evaluate_agent_trajectory)
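As a rough illustration of trajectory scoring, the sketch below checks how many expected tool calls appear, in order, among the spans of a trace. The span dictionaries and the scoring rule are assumptions made for this example; the guide describes the approach Opik actually recommends.

```python
def trajectory_score(expected_tools: list[str], spans: list[dict]) -> float:
    """Fraction of expected tool calls that appear, in order, in the trace."""
    actual = [s["name"] for s in spans if s["type"] == "tool"]
    matched = 0
    position = 0
    for tool in expected_tools:
        try:
            # Search only after the previous match so that order matters.
            position = actual.index(tool, position) + 1
            matched += 1
        except ValueError:
            continue
    return matched / len(expected_tools) if expected_tools else 1.0


spans = [
    {"type": "llm", "name": "plan"},
    {"type": "tool", "name": "search_flights"},
    {"type": "tool", "name": "book_flight"},
    {"type": "llm", "name": "final_answer"},
]

print(trajectory_score(["search_flights", "book_flight"], spans))
print(trajectory_score(["search_flights", "check_weather", "book_flight"], spans))
```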

### 2. Evaluating Multi-Turn Agents

Evaluating chatbots is hard because you need to assess a whole conversation rather than a single LLM response. This guide walks you through using the new `opik.simulation.SimulatedUser` class to create simulated threads for your agent.

👉 Read the full guide: [Evaluating Multi-Turn Agents](/docs/opik/evaluation/evaluate_multi_turn_agents)
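The general shape of a simulated conversation can be sketched as a loop that alternates user and agent turns. The example below does not use or reproduce `opik.simulation.SimulatedUser`; `ScriptedUser`, `echo_agent`, and `simulate` are hypothetical names, and the user simply replays fixed messages.

```python
from typing import Callable, Optional


class ScriptedUser:
    """Hypothetical simulated user that replays a fixed list of messages."""

    def __init__(self, messages: list[str]):
        self._messages = list(messages)

    def next_message(self, history: list[dict]) -> Optional[str]:
        turn = sum(1 for m in history if m["role"] == "user")
        return self._messages[turn] if turn < len(self._messages) else None


def echo_agent(history: list[dict]) -> str:
    # Placeholder agent: a real agent would call an LLM here.
    return f"You said: {history[-1]['content']}"


def simulate(
    user: ScriptedUser, agent: Callable[[list[dict]], str], max_turns: int = 5
) -> list[dict]:
    """Alternate user and agent turns and return the full conversation thread."""
    history: list[dict] = []
    for _ in range(max_turns):
        message = user.next_message(history)
        if message is None:
            break
        history.append({"role": "user", "content": message})
        history.append({"role": "assistant", "content": agent(history)})
    return history


thread = simulate(ScriptedUser(["Hi", "Book a table for two"]), echo_agent)
for m in thread:
    print(f"{m['role']}: {m['content']}")
```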

These new docs significantly strengthen our agent evaluation feature set and include diagrams that visualize how each evaluation strategy works.

## 📦 Import/Export Commands

We've added new command-line commands for importing and exporting Opik data. You can now export all traces, spans, datasets, prompts, and evaluation rules from a project to local JSON or CSV files, and import data from local JSON files into an existing project.

### Top use cases

* **Migrate** - Move data between projects or environments

* **Backup** - Create local backups of your project data

* **Version control** - Track changes to your prompts and evaluation rules

* **Data portability** - Easily transfer your Opik workspace data

Read the full docs: [Import/Export Commands](/docs/opik/tracing/import_export_commands)

***

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.83...1.8.97)

*Releases*: `1.8.83`, `1.8.84`, `1.8.85`, `1.8.86`, `1.8.87`, `1.8.88`, `1.8.89`, `1.8.90`, `1.8.91`, `1.8.92`, `1.8.93`, `1.8.94`, `1.8.95`, `1.8.96`, `1.8.97`

# October 21, 2025

Here are the most relevant improvements we've made since the last release:

## 🚨 Alerts

We've launched **Alerts** — a powerful way to get automated webhook notifications from your Opik workspace whenever important events happen (errors, feedback scores, prompt changes, and more). Opik now sends an HTTP POST to your endpoint with rich, structured event data you can route anywhere.

Now, you can make Opik a seamless part of your end-to-end workflows! With the new Alerts you can:

* **Spot production errors** in near-real time

* **Track feedback scores** to monitor model quality and user satisfaction

* **Audit prompt changes** across your workspace

* **Funnel events** into your existing workflows and CI/CD pipelines

And this is just v1.0! We'll keep adding events and advanced filtering, thresholds and more fine-grained control in future iterations, always based on community feedback.
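On the receiving side, an endpoint typically parses the POST body and routes the event. The sketch below shows that step only; the `event_type` field, its values, and the destination names are assumptions for illustration and not Opik's payload schema, which is documented in the Alerts Guide.

```python
import json


def route_event(raw_body: bytes) -> str:
    """Pick a destination for an incoming alert based on its event type."""
    payload = json.loads(raw_body)
    # "event_type" and the values below are assumed names, not Opik's schema.
    event_type = payload.get("event_type", "unknown")
    routes = {
        "trace_error": "pagerduty",
        "feedback_score": "quality-dashboard",
        "prompt_changed": "audit-log",
    }
    return routes.get(event_type, "default-inbox")


body = json.dumps({"event_type": "trace_error", "project": "demo"}).encode()
print(route_event(body))
```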

Read the full docs here - [Alerts Guide](/docs/opik/production/alerts)

## 🖼️ Expanded Multimodal Image Support

We've added better image support across the platform!

### What's new?

**1. Image Support in LLM as a Judge online Evaluations** - LLM as a Judge evaluations now support images alongside text, enabling you to evaluate vision models and multimodal applications. Upload images and get comprehensive feedback on both text and visual content.

**2. Enhanced Playground Experience** - The playground now supports image inputs, allowing you to test prompts with images before running full evaluations. Perfect for experimenting with vision models and multimodal prompts.

**3. Improved Data Display** - Base64 image previews in data tables, better image handling in trace views, and enhanced pretty formatting for multimodal content.

Links to official docs: [Evaluating traces with images](/docs/opik/production/rules#evaluating-traces-with-images) and [Using images in the Playground](/docs/opik/prompt_engineering/playground#using-images-in-the-playground)

## Opik Optimizer Updates

**1. Support Multi-Metric Optimization** - Support for optimizing multiple metrics simultaneously with comprehensive frontend and backend changes. Read [more](/docs/opik/agent_optimization/optimization/define_metrics#compose-metrics)

**2. HRPO (Hierarchical Reflective Prompt Optimizer)** - New optimizer with self-reflective capabilities. Read more about it [here](/docs/opik/agent_optimization/algorithms/hierarchical_adaptive_optimizer)
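One common way to optimize several metrics at once is to fold them into a single weighted objective. The sketch below shows that pattern; the metric functions, weights, and `compose` helper are hypothetical and may differ from how the Opik Optimizer composes metrics.

```python
from typing import Callable

Metric = Callable[[str, str], float]


def exact_match(output: str, reference: str) -> float:
    return 1.0 if output.strip() == reference.strip() else 0.0


def brevity(output: str, reference: str) -> float:
    # 1.0 when the output is no longer than the reference, decaying after that.
    return min(1.0, len(reference) / max(len(output), 1))


def compose(weighted: list[tuple[Metric, float]]) -> Metric:
    """Combine metrics into one objective as a weighted average."""
    total = sum(w for _, w in weighted)

    def combined(output: str, reference: str) -> float:
        return sum(m(output, reference) * w for m, w in weighted) / total

    return combined


objective = compose([(exact_match, 0.7), (brevity, 0.3)])
print(objective("Paris", "Paris"))
print(objective("The answer is Paris", "Paris"))
```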

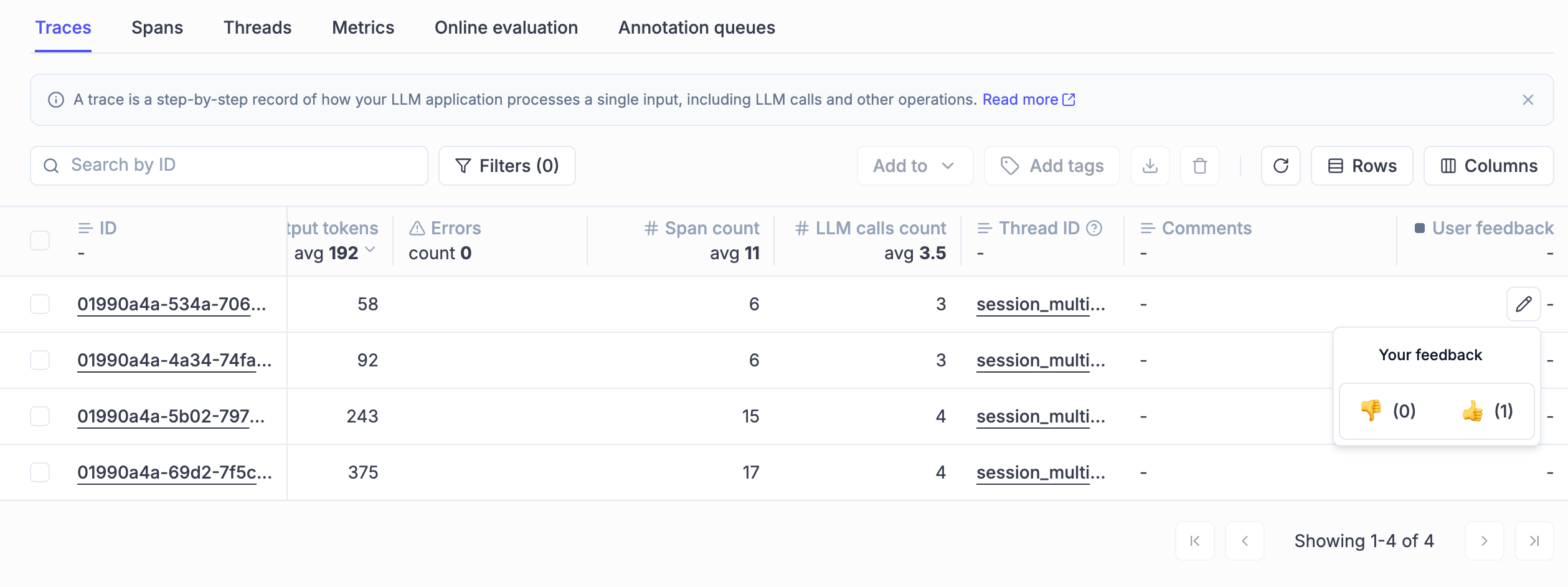

## Enhanced Feedback & Annotation experience

**1. Improved Annotation Queue Export** - Enhanced export functionality for annotation queues: export your annotated data seamlessly for further analysis.

**2. Annotation Queue UX Enhancements**

* **Hotkeys Navigation** - Improved keyboard navigation throughout the interface for a fast annotation experience

* **Return to Annotation Queue Button** - Easy navigation back to annotation queues

* **Resume Functionality** - Continue annotation work where you left off

* **Queue Creation from Traces** - Create annotation queues directly from trace tables

**3. Inline Feedback Editing** - Quickly edit user feedback directly in data tables with our new inline editing feature. Hover over feedback cells to reveal edit options, making annotation workflows faster and more intuitive.

Read more about our [Annotation Queues](/docs/opik/evaluation/annotation_queues)

## User Experience Enhancements

**1. Dark Mode Refinements** - Improved dark mode styling across UI components for better visual consistency and user experience.

**2. Enhanced Prompt Readability** - Better formatting and display of long prompts in the interface, making them easier to read and understand.

**3. Improved Online Evaluation Page** - Added search, filtering, and sorting capabilities to the online evaluation page for better data management.

**4. Better token and cost control**

* **Thread Cost Display** - Show cost information in thread sidebar headers

* **Sum Statistics** - Display sum statistics for cost and token columns in the traces table.

**5. Filter-Aware Metric Aggregation** - Improved experiment item filtering in the experiment details tables for finer data control.

**6. Pretty Mode Enhancements** - Improved the Pretty mode for Input/Output display with better formatting and readability across the product.

## TypeScript SDK Updates

* **Opik Configure Tool** - New `opik-ts` configure tool with a guided developer experience and local flag support

* **Prompt Management** - Comprehensive prompt management implementation

* **LangChain Integration** - Aligned LangChain integration with Python architecture

## Python SDK Improvements

* **Context Managers** - New context managers for span and trace creation

* **Bedrock Integration** - Enhanced Bedrock integration with `invoke_model` support

* **Trace Updates** - New `update_trace()` method for easier trace modifications

* **Parallel Agent Support** - Support for logging parallel agents in ADK integration

* **Enhanced feedback score handling** with better category support

## Integration updates

**1. OpenTelemetry Improvements**

* **Thread ID Support** - Added support for `thread_id` in OpenTelemetry endpoint

* **System Information in Telemetry** - Enhanced telemetry with system information

**2. Model Support Updates** - Added support for [Claude Haiku 4.5](https://www.anthropic.com/news/claude-haiku-4-5) and updated model pricing information across the platform.

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.62...1.8.83)

*Releases*: `1.8.63`, `1.8.64`, `1.8.65`, `1.8.66`, `1.8.67`, `1.8.68`, `1.8.69`, `1.8.70`, `1.8.71`, `1.8.72`, `1.8.73`, `1.8.74`, `1.8.75`, `1.8.76`, `1.8.77`, `1.8.78`, `1.8.79`, `1.8.80`, `1.8.81`, `1.8.82`, `1.8.83`

# October 3, 2025

Here are the most relevant improvements we've made since the last release:

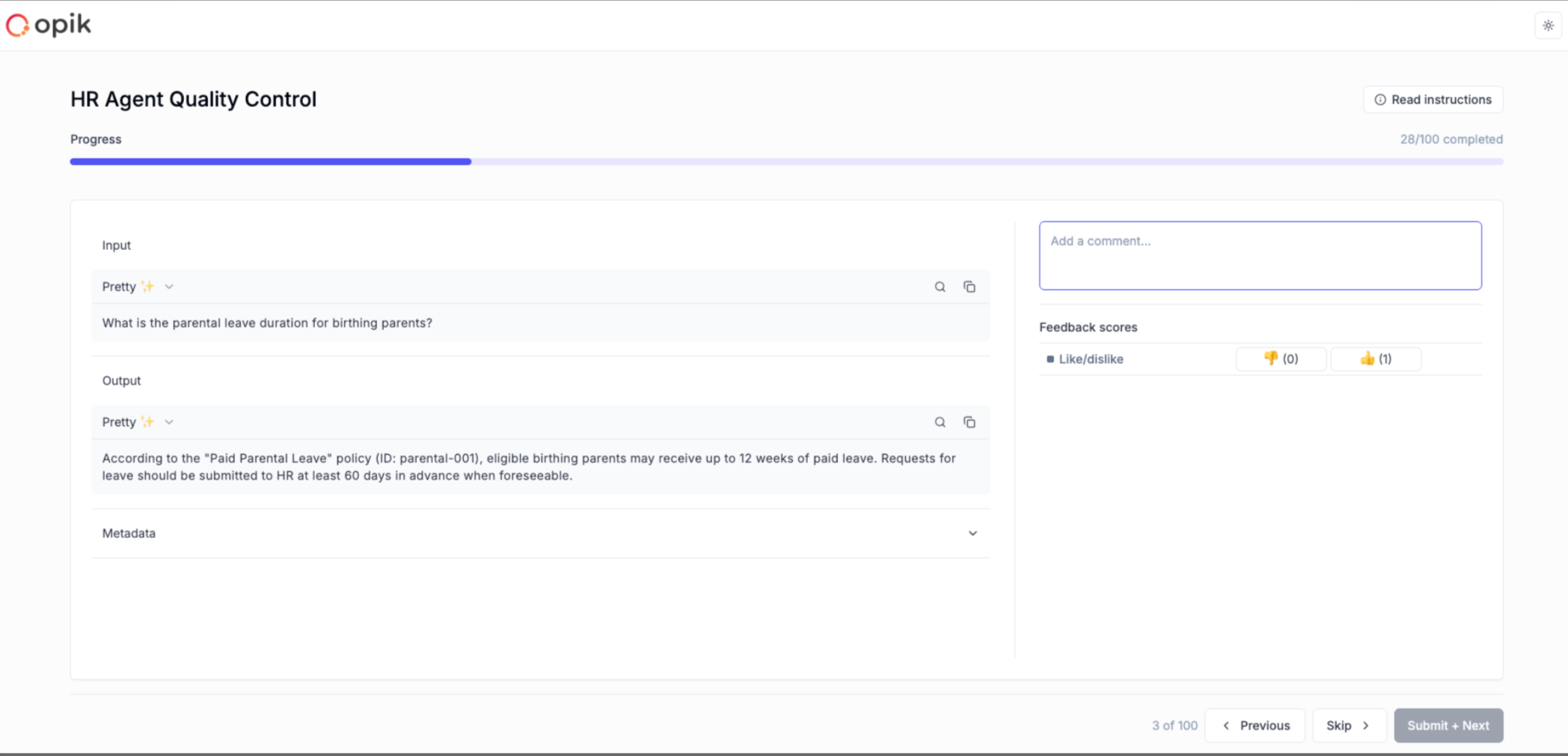

## 📝 Multi-Value Feedback Scores & Annotation Queues

We're excited to announce major improvements to our evaluation and annotation capabilities!

### What's new?

**1. Multi-Value Feedback Scores**

Multiple users can now independently score the same trace or thread. No more overwriting each other's input—every reviewer's perspective is preserved and is visible in the product. This enables richer, more reliable consensus-building during evaluation.

**2. Annotation Queues**

Create queues of traces or threads that need expert review. Share them with SMEs through simple links. Organize work systematically, track progress, and collect both structured and unstructured feedback at scale.

**3. Simplified Annotation Experience**

A clean, focused UI designed for non-technical reviewers. Support for clear instructions, predefined feedback metrics, and progress indicators. Lightweight and distraction-free, so SMEs can concentrate on providing high-quality feedback.
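For the multi-value feedback scores described above, the key property is that each reviewer's value is stored separately instead of replacing the others. A minimal sketch, assuming a nested mapping keyed by trace, metric, and reviewer; the names and the choice of a simple average as the consensus are assumptions of this example, not Opik's implementation.

```python
from collections import defaultdict
from statistics import mean

# scores[trace_id][metric][reviewer] = value; reviewers never overwrite each other.
scores: dict = defaultdict(lambda: defaultdict(dict))


def add_score(trace_id: str, metric: str, reviewer: str, value: float) -> None:
    scores[trace_id][metric][reviewer] = value


def consensus(trace_id: str, metric: str) -> float:
    """Average of all reviewers' values for one metric on one trace."""
    return mean(scores[trace_id][metric].values())


add_score("trace-1", "helpfulness", "alice", 1.0)
add_score("trace-1", "helpfulness", "bob", 0.0)
add_score("trace-1", "helpfulness", "alice", 0.5)  # alice revises only her own score

print(scores["trace-1"]["helpfulness"])
print(consensus("trace-1", "helpfulness"))
```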

[Full Documentation: Annotation Queues](/docs/opik/evaluation/annotation_queues)

## 🚀 Opik Optimizer - GEPA Algorithm & MCP Tool Optimization

### What's new?

**1. GEPA (Genetic-Pareto) Support**

[GEPA](https://github.com/gepa-ai/gepa/) is a new prompt optimization algorithm from Stanford. It joins our existing optimizers, giving users more options.

**2. MCP Tool Calling Optimization**

You can now tune MCP servers (external tools used by LLMs). Our solution builds on our existing algorithm (MetaPrompter), using LLMs to tune how LLMs interact with an MCP tool. The final output is a new tool signature that you can commit back to your code.

[Full Documentation: Tool Optimization](/docs/opik/agent_optimization/algorithms/tool_optimization) | [GEPA Optimizer](/docs/opik/agent_optimization/algorithms/gepa_optimizer)

## 🔍 Dataset & Search Enhancements

* Added dataset search and dataset items download functionality

## 🐍 Python SDK Improvements

* Implemented granular support for choosing dataset items in experiments

* Improved project name setting and onboarding

* Implemented calculation of mean/min/max/std for each metric in experiments

* Updated the CrewAI integration to support CrewAI flows
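As a rough illustration of the per-metric statistics mentioned above, the sketch below computes mean/min/max/std using only the Python standard library. It is not the Opik SDK's implementation: the `summarize_metric` helper, the metric names, and the scores are hypothetical, and population standard deviation is an assumption made for the example.

```python
import statistics


def summarize_metric(values):
    """Return mean/min/max/std for one metric's per-item scores."""
    return {
        "mean": statistics.fmean(values),
        "min": min(values),
        "max": max(values),
        # Assumption: population standard deviation. statistics.stdev
        # (sample standard deviation) is an equally valid choice.
        "std": statistics.pstdev(values),
    }


# Hypothetical per-item scores for two metrics in a single experiment.
experiment_scores = {
    "hallucination": [0.0, 0.0, 1.0, 0.0],
    "answer_relevance": [0.5, 0.75, 1.0, 0.75],
}

summary = {name: summarize_metric(vals) for name, vals in experiment_scores.items()}
for name, stats in summary.items():
    print(name, stats)
```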

## 🎨 UX Enhancements

* Added clickable links in trace metadata

* Added a description field to feedback definitions

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.42...1.8.62)

*Releases*: `1.8.43`, `1.8.44`, `1.8.45`, `1.8.46`, `1.8.47`, `1.8.48`, `1.8.49`, `1.8.50`, `1.8.51`, `1.8.52`, `1.8.53`, `1.8.54`, `1.8.55`, `1.8.56`, `1.8.57`, `1.8.58`, `1.8.59`, `1.8.60`, `1.8.61`, `1.8.62`

# September 5, 2025

Here are the most relevant improvements we've made since the last release:

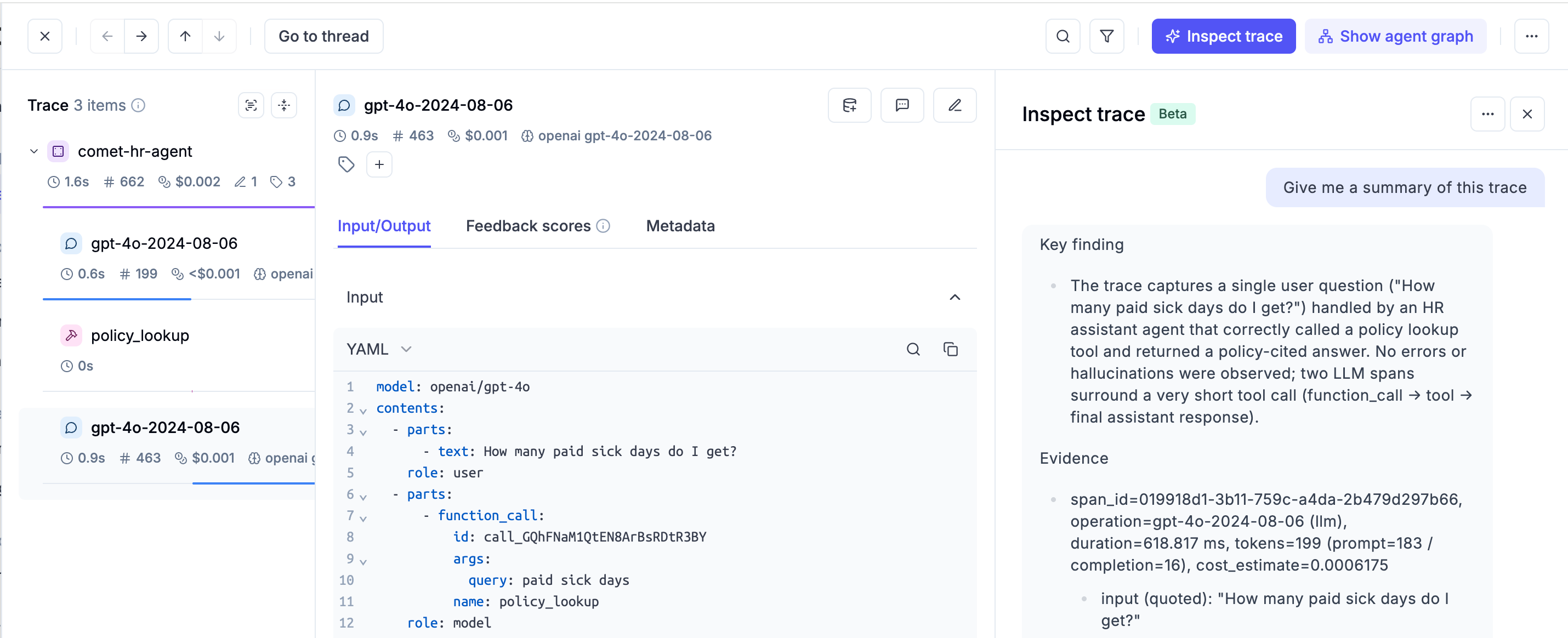

## 🔍 Opik Trace Analyzer Beta is Live!

We're excited to announce the launch of **Opik Trace Analyzer** on Opik Cloud!

What this means: faster debugging & analysis!

Our users can now easily understand, analyze, and debug their development and production traces.

Want to give it a try? All you need to do is go to one of your traces and click on "Inspect trace" to start getting valuable insights.

## ✨ Features and Improvements

* We've finally added **dark mode** support! This feature has been requested many times by our community members. You can now switch your theme in your account settings.

* You can now filter the widgets in the Metrics tab by trace and thread attributes

* Annotating tons of threads? We've added the ability to export feedback score comments for threads to CSV for easier analysis in external tools.

* We have also improved the discoverability of the experiment comparison feature.

* Added new filter operators to the Experiments table

* Adding assets as part of your experiment's metadata? We now display clickable links in the experiment config tab for easier navigation.

## 📚 Documentation

* We've released [Opik University](/opik/opik-university)! This is a new section of the docs full of video guides explaining the product.

## 🔌 SDK & Integration Improvements

* Enhanced *LangChain* integration with comprehensive tests and build fixes

* Implemented a new `search_prompts` method in the Python SDK

* Added [documentation for models, providers, and frameworks supported for cost tracking](/docs/opik/tracing/cost_tracking#supported-models-providers-and-integrations)

* Enhanced Google ADK integration to log **error information to corresponding spans and traces**

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.33...1.8.42)

*Releases*: `1.8.34`, `1.8.35`, `1.8.36`, `1.8.37`, `1.8.38`, `1.8.39`, `1.8.40`, `1.8.41`, `1.8.42`

# August 22, 2025

Here are the most relevant improvements we've made in the last couple of weeks:

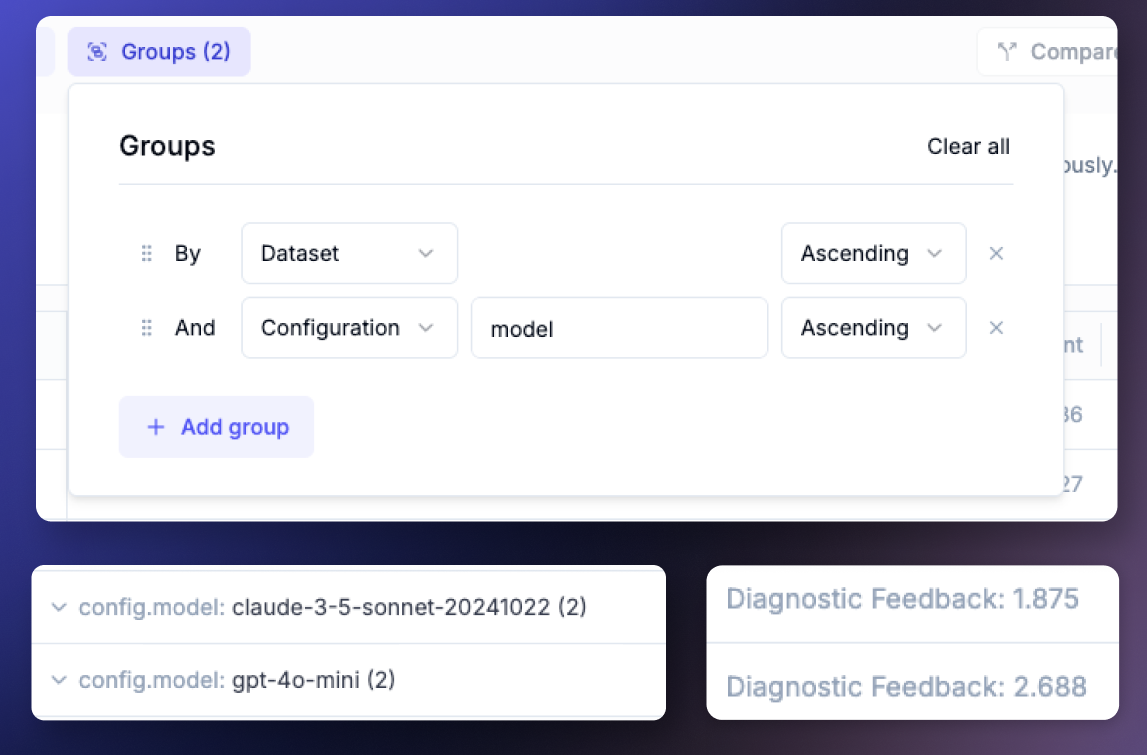

## 🧪 Experiment Grouping

Instantly organize and compare experiments by model, provider, or custom metadata to surface top performers, identify slow configurations, and discover winning parameter combinations. The new Group by feature provides aggregated statistics for each group, making it easier to analyze patterns across hundreds of experiments.
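Conceptually, grouping experiments and aggregating their statistics resembles the small sketch below. It is a plain Python illustration, not the Opik UI or SDK: the experiment records, field names, and the `group_by` and `aggregate` helpers are all hypothetical.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical experiment records; the field names are illustrative only.
experiments = [
    {"name": "exp-1", "model": "model-a", "accuracy": 0.80, "latency_s": 1.0},
    {"name": "exp-2", "model": "model-a", "accuracy": 0.90, "latency_s": 2.0},
    {"name": "exp-3", "model": "model-b", "accuracy": 0.70, "latency_s": 0.5},
]


def group_by(records, key):
    """Bucket records by the value stored under `key`."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key]].append(record)
    return dict(groups)


def aggregate(groups, fields):
    """Compute the group size and the mean of each field per group."""
    return {
        group: {
            "count": len(rows),
            **{field: fmean(row[field] for row in rows) for field in fields},
        }
        for group, rows in groups.items()
    }


stats = aggregate(group_by(experiments, "model"), ["accuracy", "latency_s"])
for group, values in stats.items():
    print(group, values)
```

Swapping `"model"` for another key, such as a provider or a custom metadata field, changes the grouping without touching the aggregation step.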

## 🤖 Expanded Model Support

Added support for 144+ new models, including:

* OpenAI's GPT-5 and GPT-4.1-mini

* Anthropic Claude Opus 4.1

* Grok 4

* DeepSeek v3

* Qwen 3

## 🛫 Streamlined Onboarding

New quick start experience with AI-assisted installation, interactive setup guides, and instant access to team collaboration features and support.

## 🔌 Integrations

Enhanced support for leading AI frameworks including:

* **LangChain**: Improved token usage tracking functionality

* **Bedrock**: Comprehensive cost tracking for Bedrock models

## 🔍 Custom Trace Filters

Advanced filtering capabilities with support for list-like keys in trace and span filters, enabling precise data segmentation and analysis across your LLM operations.

## ⚡ Performance Optimizations

* Python scoring performance improvements with pre-warming

* Optimized ClickHouse async insert parameters

* Improved deduplication for spans and traces in batches

## 🛠️ SDK Improvements

* Python SDK configuration error handling improvements

* Added dataset & dataset item ID to evaluate task inputs

* Updated OpenTelemetry integration

And much more! 👉 [See full commit log on GitHub](https://github.com/comet-ml/opik/compare/1.8.16...1.8.33)

*Releases*: `1.8.16`, `1.8.17`, `1.8.18`, `1.8.19`, `1.8.20`, `1.8.21`, `1.8.22`, `1.8.23`, `1.8.24`, `1.8.25`, `1.8.26`, `1.8.27`, `1.8.28`, `1.8.29`, `1.8.30`, `1.8.31`, `1.8.32`, `1.8.33`

# August 1, 2025

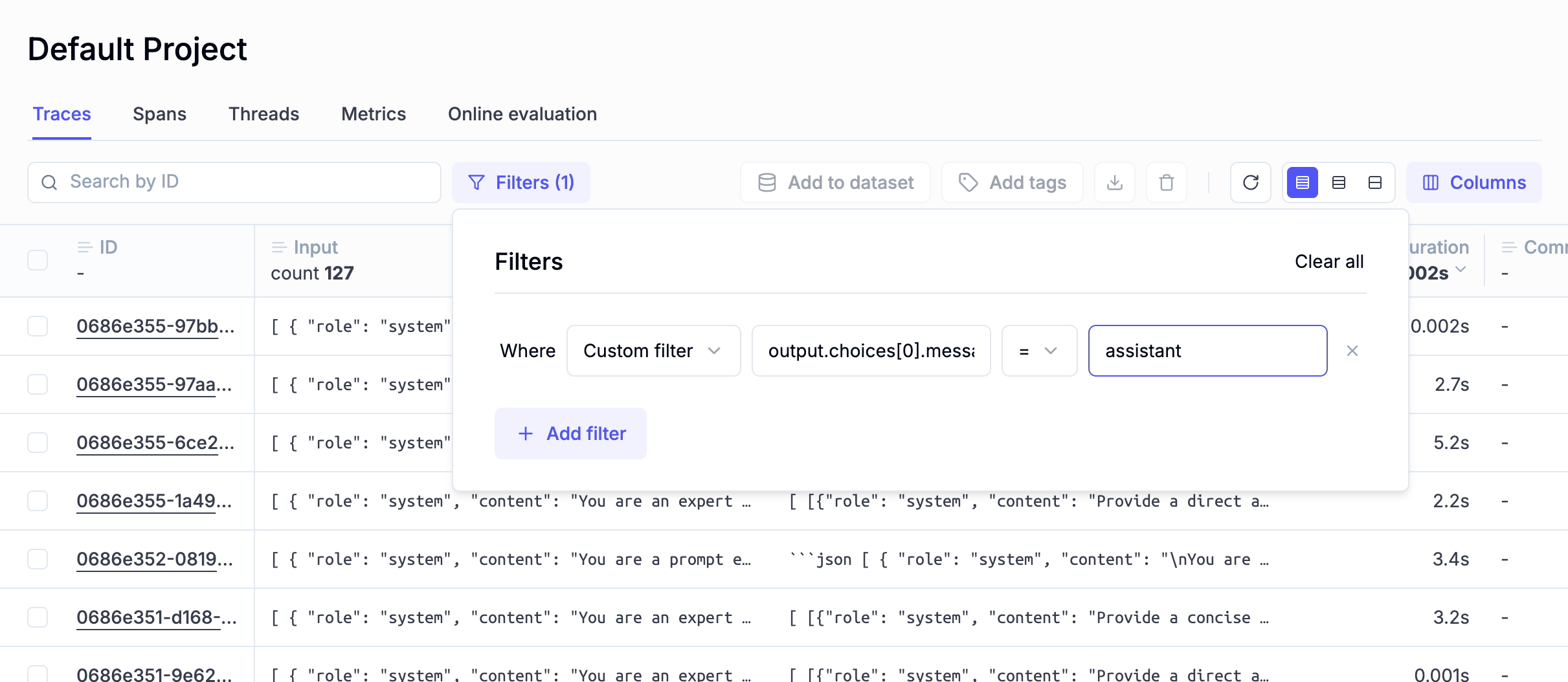

## 🎯 Advanced Filtering & Search Capabilities

We've expanded filtering and search capabilities to help you find and analyze data more effectively:

* **Custom Trace Filters**: Support for custom filters on input/output fields for traces and spans, allowing more precise data filtering

* **Enhanced Search**: Improved search functionality with better result highlighting and local search within code blocks

* **Crash Filtering**: Fixed filtering issues for values containing special characters like `%` to prevent crashes

* **Dataset Filtering**: Added support for filtering experiments by `datasetId` and `promptId`

## 📊 Metrics & Analytics Improvements

We've enhanced the metrics and analytics capabilities:

* **Thread Feedback Scores**: Added comprehensive thread feedback scoring system for better conversation quality assessment

* **Thread Duration Monitoring**: New duration widgets in the Metrics dashboard for monitoring conversation length trends

* **Online Evaluation Rules**: Added ability to enable/disable online evaluation rules for more flexible monitoring

* **Cost Optimization**: Reduced the number of prompt cost queries to improve performance and avoid unnecessary API calls

## 🎨 UX Enhancements